On this tutorial, we are going to discover tips on how to develop a Neural Community (NN) with JAX. And what higher mannequin to decide on than the Transformer. As JAX is rising in reputation, an increasing number of developer groups are beginning to experiment with it and incorporating it into their initiatives. Even if it lacks the maturity of Tensorflow or Pytorch, it gives some nice options for constructing and coaching Deep Studying fashions.

For a stable understanding of JAX fundamentals, test my earlier article for those who haven’t already. Additionally you’ll find the total code in our Github repository.

One of many widespread issues individuals have when beginning with JAX is the selection of a framework. The individuals in Deepmind appear to be very busy and have already launched a plethora of frameworks on prime of JAX. Here’s a checklist of essentially the most well-known ones:

-

Haiku: Haiku is the go-to framework for Deep Studying and it’s utilized by many Google and Deepmind inner groups. It gives some easy, composable abstractions for machine studying analysis in addition to ready-to-use modules and layers.

-

Optax: Optax is a gradient processing and optimization library that incorporates out-of-the-box optimizers and associated mathematical operations.

-

RLax: RLax is a reinforcement studying framework with many RL subcomponents and operations.

-

Chex: Chex is a library of utilities for testing and debugging JAX code.

-

Jraph: Jraph is a Graph Neural Networks library in JAX.

-

Flax: Flax is one other neural community library with a wide range of ready-to-use modules, optimizers, and utilities. It’s more than likely the closest we now have in an all-in JAX framework.

-

Objax: Objax is a 3rd ml library that focuses on object-oriented programming and code readability. As soon as once more it incorporates the preferred modules, activation capabilities, losses, optimizers as nicely a handful of pre-trained fashions.

-

Trax: Trax is an end-to-end library for deep studying that focuses on Transformers

-

JAXline: JAXline is a supervised-learning library that’s used for distributed JAX coaching and analysis.

-

ACME: ACME is one other analysis framework for reinforcement studying.

-

JAX-MD: JAX-MD is a distinct segment framework that offers with molecular dynamics.

-

Jaxchem: JAXChem is one other area of interest library that emphasizes on chemical modeling.

After all, the query is which one do I select?

To be sincere I’m undecided.

But when I have been you and I wished to be taught JAX, I’d begin with the preferred ones. Haiku and Flax appear to be used so much inside Google/Deepmind and have essentially the most lively Github neighborhood. For this text, I’ll begin with the primary one and see if I’ll want one other one down the street.

So are you able to construct a Transformer with JAX and Haiku? By the best way, I assume that you’ve a stable understanding of transformers. In the event you haven’t, please advise our articles on consideration and transformers.

Let’s begin with the self-attention block.

The self-attention block

First, we have to import JAX and Haiku

import jax

import jax.numpy as jnp

import haiku as hk

Import numpy as np

Fortunately for us, Haiku has a built-in MultiHeadAttention block that may be prolonged to construct a masked self-attention block. Our block accepts the question, key, worth in addition to the masks and returns the output as a JAX array. You’ll be able to see that the code may be very acquainted with commonplace Pytorch or Tensorflow code. All we do is construct the causal masks, utilizing np.trill()which nullify all components of the array above the kth, multiply with our masks and cross all the pieces into the hk.MultiHeadAttention module.

class SelfAttention(hk.MultiHeadAttention):

"""Self consideration with a causal masks utilized."""

def __call__(

self,

question: jnp.ndarray,

key: Optionally available[jnp.ndarray] = None,

worth: Optionally available[jnp.ndarray] = None,

masks: Optionally available[jnp.ndarray] = None,

) -> jnp.ndarray:

key = key if key is not None else question

worth = worth if worth is not None else question

seq_len = question.form[1]

causal_mask = np.tril(np.ones((seq_len, seq_len)))

masks = masks * causal_mask if masks is not None else causal_mask

return tremendous().__call__(question, key, worth, masks)

This snippet permits me to introduce the primary key precept of Haiku. All modules must be a subclass of hk.Module. Which means they need to implement __init__ and __call__, alongside every other technique. In a way, it’s the identical structure with Pytorch modules, the place we implement an __init__ and a ahead.

To make that crystal clear, let’s construct a easy 2-layer MultilayerPerceptron as an hk.Module, which conveniently can be used within the Transformer beneath.

The linear layer

A easy 2-layer MLP will seem like this. As soon as once more, you’ll be able to discover how acquainted it seems.

class DenseBlock(hk.Module):

"""A 2-layer MLP"""

def __init__(self,

init_scale: float,

widening_factor: int = 4,

title: Optionally available[str] = None):

tremendous().__init__(title=title)

self._init_scale = init_scale

self._widening_factor = widening_factor

def __call__(self, x: jnp.ndarray) -> jnp.ndarray:

hiddens = x.form[-1]

initializer = hk.initializers.VarianceScaling(self._init_scale)

x = hk.Linear(self._widening_factor * hiddens, w_init=initializer)(x)

x = jax.nn.gelu(x)

return hk.Linear(hiddens, w_init=initializer)(x)

A number of issues to note right here:

-

Haiku gives us with a set of weights initializers below

hk.initializers, the place we will discover the most typical approaches. -

It additionally has built-in many well-liked layers and modules comparable to

hk.Linear. For the whole checklist, take a peek on the official documentation. -

Activation capabilities should not supplied as a result of JAX already has a subpackage known as

jax.nn, the place we will discover activation capabilities comparable toreluorsoftmax.

The normalization layer

Layer normalization is one other integral block of the transformer structure, which we will additionally discover within the widespread modules inside Haiku.

def layer_norm(x: jnp.ndarray, title: Optionally available[str] = None) -> jnp.ndarray:

"""Apply a singular LayerNorm to x with default settings."""

return hk.LayerNorm(axis=-1,

create_scale=True,

create_offset=True,

title=title)(x)

The transformer

And now for the good things. Beneath you’ll find a really simplistic Transformer, which makes use of our predefined modules. Inside __init__, we outline the fundamental variables such because the variety of layers, consideration heads, and the dropout price. Inside __call__, we compose an inventory of blocks utilizing a for loop.

As you’ll be able to see, every block contains:

In the long run, we additionally add a remaining normalization layer.

class Transformer(hk.Module):

"""A transformer stack."""

def __init__(self,

num_heads: int,

num_layers: int,

dropout_rate: float,

title: Optionally available[str] = None):

tremendous().__init__(title=title)

self._num_layers = num_layers

self._num_heads = num_heads

self._dropout_rate = dropout_rate

def __call__(self,

h: jnp.ndarray,

masks: Optionally available[jnp.ndarray],

is_training: bool) -> jnp.ndarray:

"""Connects the transformer.

Args:

h: Inputs, [B, T, H].

masks: Padding masks, [B, T].

is_training: Whether or not we're coaching or not.

Returns:

Array of form [B, T, H].

"""

init_scale = 2. / self._num_layers

dropout_rate = self._dropout_rate if is_training else 0.

if masks is not None:

masks = masks[:, None, None, :]

for i in vary(self._num_layers):

h_norm = layer_norm(h, title=f'h{i}_ln_1')

h_attn = SelfAttention(

num_heads=self._num_heads,

key_size=64,

w_init_scale=init_scale,

title=f'h{i}_attn')(h_norm, masks=masks)

h_attn = hk.dropout(hk.next_rng_key(), dropout_rate, h_attn)

h = h + h_attn

h_norm = layer_norm(h, title=f'h{i}_ln_2')

h_dense = DenseBlock(init_scale, title=f'h{i}_mlp')(h_norm)

h_dense = hk.dropout(hk.next_rng_key(), dropout_rate, h_dense)

h = h + h_dense

h = layer_norm(h, title='ln_f')

return h

I feel that by now you’ve got realized that constructing a Neural Community with JAX is useless easy.

The embeddings layer

For completion, let’s additionally embody the embeddings layer. It’s good to know that Haiku additionally gives an embedding layer which can create the tokens from our enter sentence. The token are then added to the positional embeddings, which produce the ultimate enter.

def embeddings(knowledge: Mapping[str, jnp.ndarray], vocab_size: int) :

tokens = knowledge['obs']

input_mask = jnp.higher(tokens, 0)

seq_length = tokens.form[1]

embed_init = hk.initializers.TruncatedNormal(stddev=0.02)

token_embedding_map = hk.Embed(vocab_size, d_model, w_init=embed_init)

token_embs = token_embedding_map(tokens)

positional_embeddings = hk.get_parameter(

'pos_embs', [seq_length, d_model], init=embed_init)

input_embeddings = token_embs + positional_embeddings

return input_embeddings, input_mask

hk.get_parameter(param_name, ...) is used to entry the trainable parameters of a module. However you might ask, why not simply utilizing object properties as we do in Pytorch. That is the place the second key precept of Haiku comes into play. We use this API in order that we will convert the code right into a pure perform utilizing hk.remodel. This isn’t quite simple to know however I’ll attempt to make it as clear as doable.

Why pure capabilities?

The facility of JAX comes into its perform transformations: the power to vectorize a perform with vmap, the automated parallelization with pmap, simply in time compilation with jit. The caveat right here is that with the intention to remodel a perform, it must be pure.

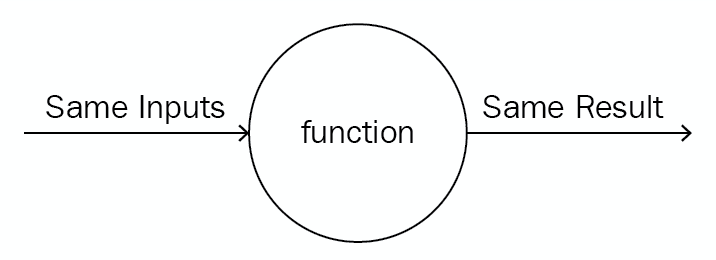

A pure perform is a perform that has the next properties:

-

The perform return values are similar for similar arguments (no variation with native static variables, non-local variables, mutable reference arguments, or enter streams).

-

The perform software has no unwanted side effects (no mutation of native static variables, non-local variables, mutable reference arguments, or enter/output streams).

Supply: Scala pure capabilities by O’Reily

This virtually signifies that a pure perform will at all times:

-

return the identical end result if invoked with the identical inputs

-

all of the enter knowledge is handed by way of the perform arguments, all the outcomes are output by way of the perform outcomes

Haiku gives a perform transformation, known as hk.remodel, that turns capabilities with object-oriented, functionally “impure” modules into pure capabilities that can be utilized with JAX. To see that in follow, let’s proceed with the coaching of our Transformer mannequin.

The ahead cross

A typical ahead cross contains:

-

Taking the enter and compute the enter embedding

-

Run by way of the Transformer’s blocks

-

Return the output

The aforementioned steps will be simply composed with JAX as following:

def build_forward_fn(vocab_size: int, d_model: int, num_heads: int,

num_layers: int, dropout_rate: float):

"""Create the mannequin's ahead cross."""

def forward_fn(knowledge: Mapping[str, jnp.ndarray],

is_training: bool = True) -> jnp.ndarray:

"""Ahead cross."""

input_embeddings, input_mask = embeddings(knowledge, vocab_size)

transformer = Transformer(

num_heads=num_heads, num_layers=num_layers, dropout_rate=dropout_rate)

output_embeddings = transformer(input_embeddings, input_mask, is_training)

return hk.Linear(vocab_size)(output_embeddings)

return forward_fn

Though the code is easy, its construction may appear a bit odd. The precise ahead cross is executed by way of the forward_fn perform. Nevertheless, we wrap this with the build_forward_fn perform which returns the forward_fn. What the heck?

Down the street, we might want to remodel the forward_fn perform right into a pure perform utilizing hk.remodel in order that we will reap the benefits of automated differentiation, parallelization and many others.

This can be completed by:

forward_fn = build_forward_fn(vocab_size, d_model, num_heads,

num_layers, dropout_rate)

forward_fn = hk.remodel(forward_fn)

That’s why as a substitute of merely defining a perform, we wrapp and return the perform itself, or a callable to be extra exact. This callable can then be handed into the hk.remodel and turn out to be a pure perform. If that is clear, let’s proceed with our loss perform.

The loss perform

The loss perform is our well-known cross-entropy perform with the distinction that we’re additionally taking the masks into consideration. As soon as once more, JAX gives one_hot and log_softmax functionalities.

def lm_loss_fn(forward_fn,

vocab_size: int,

params,

rng,

knowledge: Mapping[str, jnp.ndarray],

is_training: bool = True) -> jnp.ndarray:

"""Compute the loss on knowledge wrt params."""

logits = forward_fn(params, rng, knowledge, is_training)

targets = jax.nn.one_hot(knowledge['target'], vocab_size)

assert logits.form == targets.form

masks = jnp.higher(knowledge['obs'], 0)

loss = -jnp.sum(targets * jax.nn.log_softmax(logits), axis=-1)

loss = jnp.sum(loss * masks) / jnp.sum(masks)

return loss

If you’re nonetheless with me, take a sip of espresso as a result of issues are going to get severe any further. It’s time to construct our coaching loop.

The coaching loop

As a result of neither Jax nor Haiku has optimization functionalities built-in, we are going to make use of one other framework, known as Optax. As talked about to start with, Optax is the goto package deal for gradient processing.

First listed below are some issues you’ll want to find out about Optax:

The important thing transformation of Optax is the GradientTransformation. The transformation is outlined by two capabilities, the __init__ and the __update__. The __init__ initializes the state and the __update__ transforms the gradients with respect to the state and the present worth of the parameters

state = init(params)

grads, state = replace(grads, state, params=None)

Another factor to know earlier than we see the code, is Python’s built-in functools.partial perform. The functools package deal offers with higher-order capabilities and operations on callable objects.

A perform known as a Larger Order perform if it incorporates different capabilities as a parameter or returns a perform as an output.

The partial, which will also be used as an annotation, returns a brand new perform primarily based on an unique one, however with fewer or fastened arguments. If for instance, f multiplies two values x,y, the partial will create a brand new perform the place x can be fastened and equal with 2

from functools import partial

def f(x,y):

return x * y

g = partial(f,2)

print(g(4))

After this brief detour, let’s proceed. To decongest our most important perform, we are going to extract the gradients replace into its personal class.

To begin with the GradientUpdater accepts the mannequin, the loss perform, and an optimizer.

- The mannequin can be a pure

forward_fnperform remodeled byhk.remodel

forward_fn = build_forward_fn(vocab_size, d_model, num_heads,

num_layers, dropout_rate)

forward_fn = hk.remodel(forward_fn)

- The loss perform would be the results of a partial with a hard and fast

forward_fnand `vocab_size

loss_fn = functools.partial(lm_loss_fn, forward_fn.apply, vocab_size)

- The optimizer is a set of optimization transformations that may run sequentially ( operations will be mixed utilizing

optax.chain)

optimizer = optax.chain(

optax.clip_by_global_norm(grad_clip_value),

optax.adam(learning_rate, b1=0.9, b2=0.99))

The Gradient updater can be initialized as follows:

updater = GradientUpdater(forward_fn.init, loss_fn, optimizer)

and can seem like this:

class GradientUpdater:

"""A stateless abstraction round an init_fn/update_fn pair.

This extracts some widespread boilerplate from the coaching loop.

"""

def __init__(self, net_init, loss_fn,

optimizer: optax.GradientTransformation):

self._net_init = net_init

self._loss_fn = loss_fn

self._opt = optimizer

@functools.partial(jax.jit, static_argnums=0)

def init(self, master_rng, knowledge):

"""Initializes state of the updater."""

out_rng, init_rng = jax.random.break up(master_rng)

params = self._net_init(init_rng, knowledge)

opt_state = self._opt.init(params)

out = dict(

step=np.array(0),

rng=out_rng,

opt_state=opt_state,

params=params,

)

return out

@functools.partial(jax.jit, static_argnums=0)

def replace(self, state: Mapping[str, Any], knowledge: Mapping[str, jnp.ndarray]):

"""Updates the state utilizing some knowledge and returns metrics."""

rng, new_rng = jax.random.break up(state['rng'])

params = state['params']

loss, g = jax.value_and_grad(self._loss_fn)(params, rng, knowledge)

updates, opt_state = self._opt.replace(g, state['opt_state'])

params = optax.apply_updates(params, updates)

new_state = {

'step': state['step'] + 1,

'rng': new_rng,

'opt_state': opt_state,

'params': params,

}

metrics = {

'step': state['step'],

'loss': loss,

}

return new_state, metrics

Inside __init__, we initialize our optimizer with self._opt.init(params) and we declare the state of the optimization. The state can be a dictionary with:

The replace perform will replace each the state of the optimizer in addition to the trainable parameters. In the long run, it should return the brand new state.

updates, opt_state = self._opt.replace(g, state['opt_state'])

params = optax.apply_updates(params, updates)

Two extra issues to note right here:

-

jax.value_and_grad()is a particular perform that returns a differentiable perform with its gradients -

Each

__init__and__update__are annotated with@functools.partial(jax.jit, static_argnums=0), which can set off the just-in-time compiler and compile them into XLA throughout runtime. Be aware that if we haven’t remodeledforward_fnright into a pure perform, this wouldn’t be doable.

Lastly, we’re able to construct all the coaching loop, which mixes all of the concepts and code talked about up to now.

def most important():

train_dataset, vocab_size = load(batch_size,

sequence_length)

forward_fn = build_forward_fn(vocab_size, d_model, num_heads,

num_layers, dropout_rate)

forward_fn = hk.remodel(forward_fn)

loss_fn = functools.partial(lm_loss_fn, forward_fn.apply, vocab_size)

optimizer = optax.chain(

optax.clip_by_global_norm(grad_clip_value),

optax.adam(learning_rate, b1=0.9, b2=0.99))

updater = GradientUpdater(forward_fn.init, loss_fn, optimizer)

logging.data('Initializing parameters...')

rng = jax.random.PRNGKey(428)

knowledge = subsequent(train_dataset)

state = updater.init(rng, knowledge)

logging.data('Beginning prepare loop...')

prev_time = time.time()

for step in vary(MAX_STEPS):

knowledge = subsequent(train_dataset)

state, metrics = updater.replace(state, knowledge)

Discover how we incorporate the GradientUpdate. It’s simply two strains of code:

-

state = updater.init(rng, knowledge) -

state, metrics = updater.replace(state, knowledge)

And that’s it. I hope that by now you’ve got a extra clear understanding of JAX and its capabilities.

Acknowledgments

The code offered is closely impressed by the official examples of the Haiku framework. It has been modified to suit the wants of this text. For the whole checklist of examples, test the official repository

Conclusion

On this article, we noticed how one can develop and prepare a vanilla Transformer in JAX utilizing Haiku. Though the code isn’t essentially laborious to know, it nonetheless lacks the readability of Pytorch or Tensorflow. I extremely advocate to mess around with it, uncover the strengths and weaknesses of JAX and see if it’d be a superb match in your subsequent mission. In my expertise, JAX may be very robust for analysis functions that require excessive efficiency however fairly immature for real-life initiatives. Tell us what you suppose in our discord channel.

* Disclosure: Please notice that a number of the hyperlinks above may be affiliate hyperlinks, and at no further value to you, we are going to earn a fee for those who determine to make a purchase order after clicking by way of.