It is well established that Vision Transformers (ViTs) can outperform convolutional neural networks (CNNs), such as ResNets, in image recognition. But what are the factors behind ViTs' superior performance? To answer this, we investigate the learned representations of pretrained models.

In this article, we will explore various topics based on high-impact computer vision papers:

- The texture-shape cue conflict and the issues that come with supervised training on ImageNet.
- Several ways to learn robust and meaningful visual representations, like self-supervision and natural language supervision.
- The robustness of ViTs vs CNNs, as well as the intriguing properties that emerge from trained ViTs.

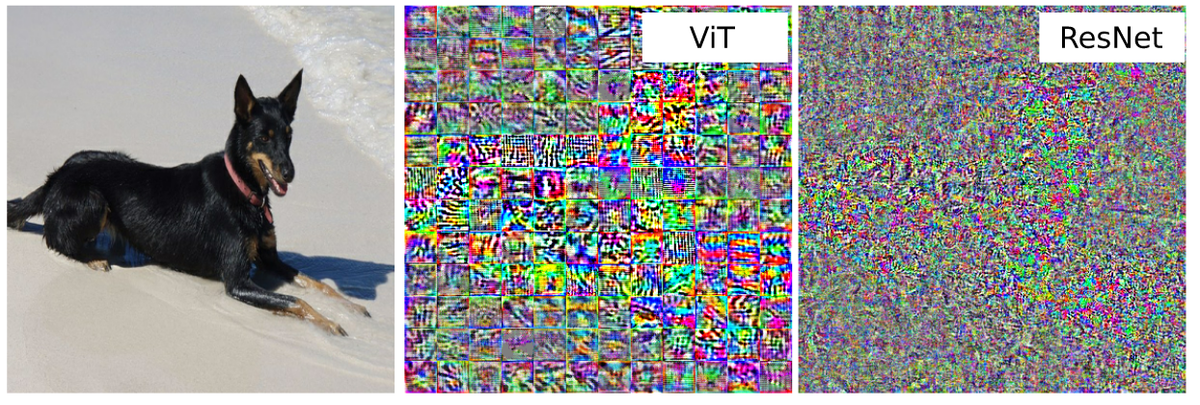

Adversarial attacks are well-known experiments that help us gain insight into the workings of a classification network. They are designed to fool neural networks by leveraging their gradients (Goodfellow et al.). Instead of minimizing the loss by changing the weights, an adversarial perturbation changes the inputs to maximize the loss based on the computed gradients. Let's look at the adversarial perturbations computed for a ViT and a ResNet model.

Fig. 1: ViTs and ResNets process their inputs very differently. Source
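To make the idea of "changing the inputs instead of the weights" concrete, here is a minimal sketch of the fast gradient sign method (FGSM) from Goodfellow et al. Since a ViT or ResNet would need a deep learning framework, we assume a hypothetical toy logistic-regression classifier whose input gradient can be written by hand; the weights, input, and epsilon are made-up illustration values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a (hypothetical) logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def bce_loss(w, b, x, y):
    """Binary cross-entropy of the model on a single example."""
    p = predict_proba(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def input_gradient(w, b, x, y):
    """Gradient of the loss w.r.t. the INPUT x (not the weights): (p - y) * w."""
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method: shift each input feature by eps in the
    direction that increases the loss, keeping the weights fixed."""
    grad = input_gradient(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical toy model and a correctly classified input with label y = 1.
w, b = [2.0, -1.0, 0.5], 0.1
x, y = [0.6, 0.2, 0.4], 1
x_adv = fgsm(w, b, x, y, eps=0.5)
print(predict_proba(w, b, x), predict_proba(w, b, x_adv))  # prediction flips below 0.5
```

For a deep network, the only difference is that the input gradient comes from backpropagation; the perturbation pattern then reflects what the specific architecture is sensitive to, which is why the ViT and ResNet perturbations look so different.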

As depicted in the above figure, the adversarial perturbations are qualitatively very different. Even though both models may perform equally well in image recognition, why do they have different adversarial perturbations?

Let's introduce some background knowledge first.

Robustness: We apply a perturbation to the input images (e.g., masking, blurring) and observe the performance drop of the trained model. The smaller the performance degradation, the more robust the classifier!

Robustness is measured in supervised setups, so the performance metric is usually classification accuracy. Furthermore, robustness can be defined with respect to model perturbations, for example by removing a few layers, but this is less common. Note that our definition of robustness always includes a perturbation.
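Under this definition, robustness boils down to comparing accuracy before and after a perturbation. The sketch below assumes a generic prediction function and a perturbation function; both are hypothetical placeholders (a toy 1-D threshold classifier and additive Gaussian noise) standing in for a trained model and an image corruption such as blurring.

```python
import random


def accuracy(predict, xs, ys):
    """Fraction of inputs whose prediction matches the label."""
    return sum(predict(x) == y for x, y in zip(xs, ys)) / len(ys)


def robustness_drop(predict, xs, ys, perturb):
    """Accuracy on clean inputs, on perturbed inputs, and the difference."""
    clean = accuracy(predict, xs, ys)
    perturbed = accuracy(predict, [perturb(x) for x in xs], ys)
    return clean, perturbed, clean - perturbed


def threshold_classifier(x):
    """Hypothetical 1-D 'model': predicts class 1 for positive inputs."""
    return int(x > 0)


rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(1000)]
ys = [threshold_classifier(x) for x in xs]


def gaussian_noise(x, sigma=0.3):
    """Hypothetical input perturbation: additive Gaussian noise."""
    return x + rng.gauss(0.0, sigma)


clean, perturbed, drop = robustness_drop(threshold_classifier, xs, ys, gaussian_noise)
print(f"clean={clean:.3f} perturbed={perturbed:.3f} drop={drop:.3f}")
```

A model-based perturbation fits the same template: keep the inputs fixed and instead pass a modified model (e.g., one with a layer removed) as the second prediction function.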

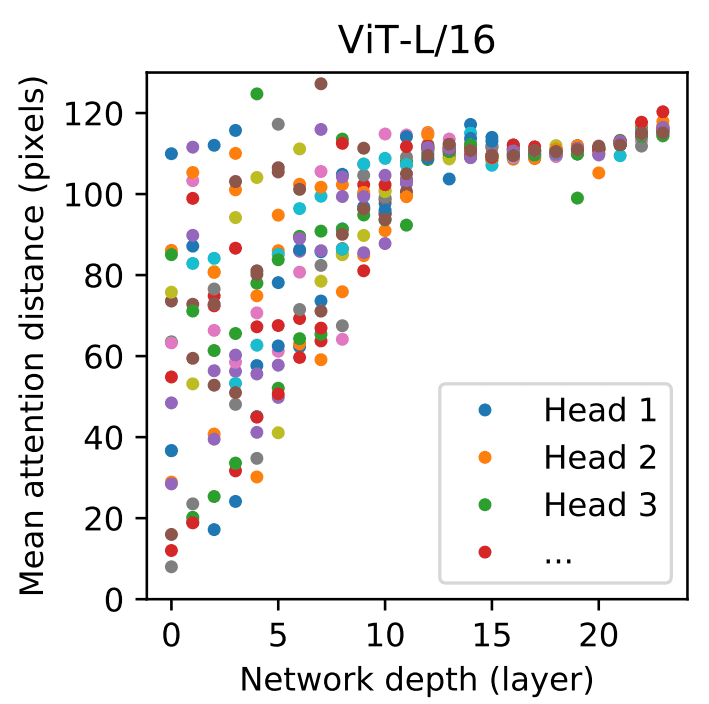

By design, the transformer can attend to all the tokens (16×16 image patches) at each block. The originally proposed ViT model from Dosovitskiy et al. already demonstrated that some heads in early layers attend to far-away pixels while others stay local, and that the attention distance of the heads grows with depth.

Fig. 2: How heads of different layers attend to their surrounding pixels. Source: Dosovitskiy et al.
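As a rough sketch of what a "token" is here: a 224×224 image split into non-overlapping 16×16 patches yields (224/16)² = 196 tokens, each a flattened patch. The helper below is a hypothetical pure-Python illustration on a single-channel image stored as nested lists; the learned linear projection and the prepended CLS token of the real ViT are omitted.

```python
def patchify(image, patch_size):
    """Split an H x W single-channel image (list of rows) into flattened,
    non-overlapping patch_size x patch_size patches in row-major order."""
    h, w = len(image), len(image[0])
    assert h % patch_size == 0 and w % patch_size == 0, "patch size must divide the image"
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


# Each pixel value encodes its own position, which makes the layout easy to inspect.
image = [[r * 224 + c for c in range(224)] for r in range(224)]
tokens = patchify(image, 16)
print(len(tokens), len(tokens[0]))  # 196 tokens, 256 values each
```

Self-attention then computes pairwise interactions between all 196 tokens in every block, which is what allows heads to attend to far-away patches from the very first layer.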

Long-range correlations are indeed useful for image classification, but is this the only reason for the superior performance of ViTs? To answer that, we need to take a step back and look more closely at the representations of CNNs, specifically ResNets, as they have been studied in greater depth.

ImageNet-pretrained CNNs are biased towards texture

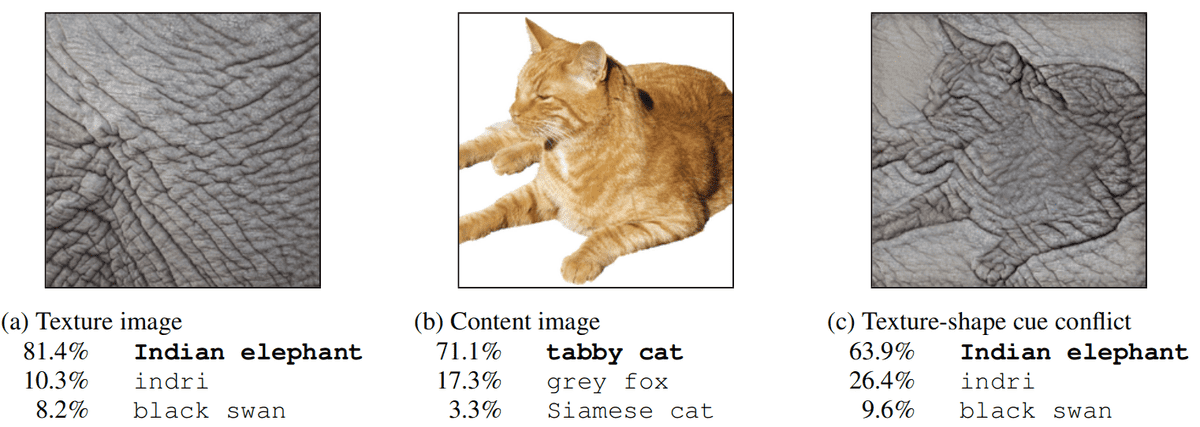

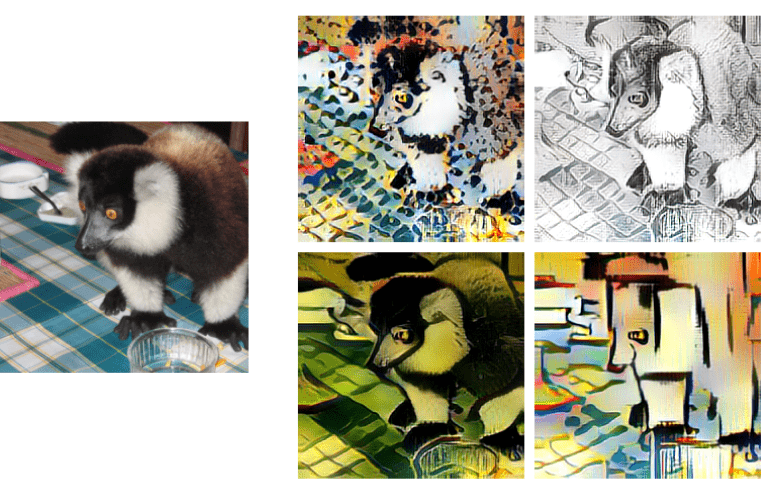

In their paper “Are we done with ImageNet?”, Beyer et al. question whether current models merely overfit to the idiosyncrasies of ImageNet's labeling procedure. To delve deeper into the learned representations of pretrained models, we will focus on the famous ResNet-50 study by Geirhos et al., who demonstrated that CNNs trained on ImageNet are strongly biased towards recognizing textures rather than shapes. Below is an excellent example of such a case:

Fig. 3: Classification by a standard ResNet-50 of (a) a texture image (elephant skin: only texture cues); (b) a normal image of a cat (with both shape and texture cues); and (c) an image with a texture-shape cue conflict, generated by style transfer between the first two images. Source: Geirhos et al.

Left: a texture image (elephant skin) that is correctly recognized. Center: a correctly classified image of a lovely cat. Right: when the network is presented with an overlay of the elephant texture on the cat shape, the prediction strongly favors the texture rather than the object's shape. This is the so-called texture-shape cue conflict. The image on the right was generated using adaptive instance normalization.
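Adaptive instance normalization (AdaIN, from the style-transfer work of Huang and Belongie) re-normalizes the content features so that each channel takes on the mean and standard deviation of the style features: AdaIN(x, y) = σ(y)·(x − μ(x))/σ(x) + μ(y). In the real method this is applied to feature maps of a pretrained encoder and followed by a learned decoder; the sketch below only illustrates the normalization formula on plain per-channel lists of numbers, so treat it as an illustration rather than a full style-transfer pipeline.

```python
import statistics


def adain(content, style, eps=1e-5):
    """Adaptive instance normalization on per-channel feature lists.

    content, style: lists of channels; each channel is a flat list of
    activations over spatial positions. Each content channel is normalized
    to zero mean and unit std, then rescaled to the style channel's std and
    shifted to its mean. eps guards against division by zero."""
    out = []
    for c_ch, s_ch in zip(content, style):
        mu_c, sd_c = statistics.fmean(c_ch), statistics.pstdev(c_ch)
        mu_s, sd_s = statistics.fmean(s_ch), statistics.pstdev(s_ch)
        out.append([sd_s * (v - mu_c) / (sd_c + eps) + mu_s for v in c_ch])
    return out


content = [[1.0, 2.0, 3.0, 4.0]]    # one channel, four spatial positions
style = [[10.0, 30.0, 10.0, 30.0]]  # style statistics: mean 20, std 10
styled = adain(content, style)
print([round(v, 2) for v in styled[0]])
```

The spatial ordering of the content (its "shape") is preserved, while the first- and second-order channel statistics, which carry much of the texture information, are taken from the style image.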

At this point, you may be wondering: what's wrong with texture?

Neuroscience studies (Landau et al.) showed that object shape is the single most important cue for human object recognition. By studying the human visual pathway for image recognition, researchers found that the perception of object shape is invariant to most perturbations. So, as far as we know, shape is the most reliable cue.

Intuitively, the object shape remains relatively stable, while other cues can be easily distorted by all sorts of noise, such as rain and snow in a real-life scenario. Shape-based representations are thus highly beneficial for image classification.

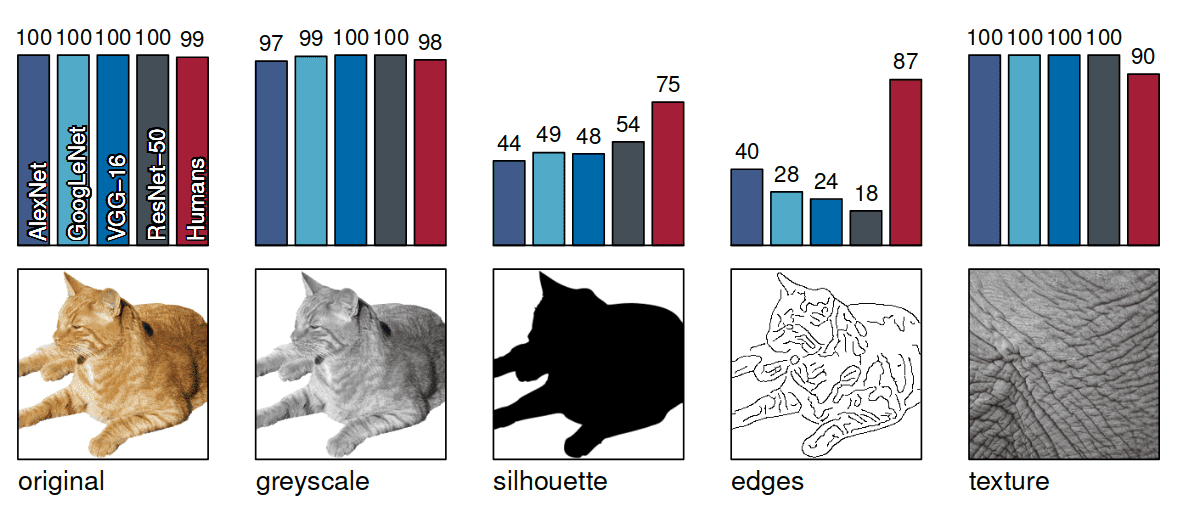

That explains why humans can recognize sketches, paintings, or drawings, while neural networks struggle (their performance deteriorates significantly).

Fig. 4: Accuracies and example stimuli for five different experiments without cue conflict. Source: Geirhos et al.

In the above image, silhouettes and edges are created with traditional computer vision algorithms. It is important to note at this point that all the CNNs were trained on ImageNet using the image label as supervision, which raises the question: is ImageNet part of the problem?

What's wrong with ImageNet?

Brendel et al. provided ample experimental evidence that ImageNet can be “solved” (with decently high accuracy) using only local information. In other words, it suffices to integrate evidence from many local texture features rather than going through the process of integrating and classifying global shapes.

The problem? Features learned on ImageNet generalize poorly in the presence of strong perturbations. This severely limits the use of pretrained models in settings where shape features transfer well but texture features do not.

One example of poor generalization is the Stylized ImageNet (SIN) dataset.

Fig. 5: The SIN dataset. Right: reference image. Left: example texture-free images that can be recognized only by shape. Source: Geirhos et al.

SIN is a synthetic texture-free dataset, wherein the object class can only be determined by learning shape-based representations.

Based on extensive experiments, Geirhos et al. found that the texture bias in current CNNs is not by design, but induced by the ImageNet training data, which hinders the transferability of those features to more challenging datasets (e.g., SIN).

Hence, supervised ImageNet-trained CNNs are probably taking a “shortcut” by focusing on local textures: “If textures are sufficient, why should a CNN learn much else?”

So how can we force the model to be texture-debiased? Let's start with a very simple workaround.

Hand-crafted tasks: rotation prediction

Various hand-crafted pretext tasks have been proposed to improve the learned representations. Such pretext tasks can be used either for self-supervised pretraining or as auxiliary objectives. Self-supervised pretraining requires more resources and usually a larger dataset, while an auxiliary objective introduces a new hyperparameter to balance the contribution of the multiple losses.
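Geirhos et al. quantify this bias with a shape-bias score on cue-conflict images: among the predictions that match either the shape class or the texture class, the fraction that match the shape class. The function below is a minimal sketch of that ratio; the trial tuples are made-up examples, and details of the original protocol (e.g., mapping ImageNet classes to a small set of categories and excluding images whose shape and texture classes coincide) are not reproduced.

```python
def shape_bias(trials):
    """trials: iterable of (prediction, shape_label, texture_label) tuples for
    cue-conflict images, assuming shape_label != texture_label.

    Returns shape decisions / (shape + texture decisions); predictions that
    match neither cue are ignored."""
    trials = list(trials)  # allow generators: we iterate twice
    shape_hits = sum(pred == shape for pred, shape, _ in trials)
    texture_hits = sum(pred == texture for pred, _, texture in trials)
    decided = shape_hits + texture_hits
    if decided == 0:
        raise ValueError("no prediction matched either cue")
    return shape_hits / decided


trials = [
    ("elephant", "cat", "elephant"),  # texture decision
    ("elephant", "dog", "elephant"),  # texture decision
    ("cat", "cat", "clock"),          # shape decision
    ("boat", "car", "bear"),          # neither cue: ignored
]
print(shape_bias(trials))
```

A score close to 0 indicates a texture-biased classifier, as reported for ImageNet-trained ResNet-50, while humans score close to 1 in the same kind of experiment.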

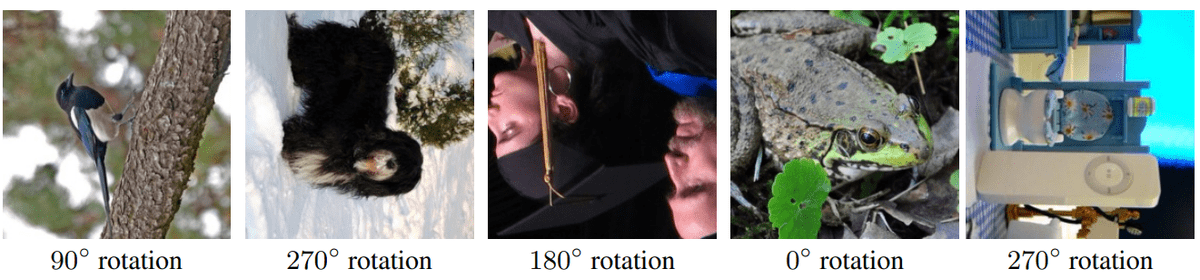

For instance, Gidaris et al. used rotation prediction for self-supervised pretraining. The core intuition of rotation prediction (typically over [0°, 90°, 180°, 270°]) is that if someone is not aware of the objects depicted in the images, they cannot recognize the rotation that was applied to them.

Fig. 6: Applied rotations. Source: Gidaris et al. ICLR 2018
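Generating the training pairs for this pretext task is simple: each image yields four rotated copies, labeled with the rotation index, and a classifier is trained to predict that index. The sketch below builds the copies for an image stored as nested lists (a hypothetical stand-in for an image tensor); the classifier itself is omitted.

```python
def rot90(image):
    """Rotate a 2-D image (list of rows) by 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*image)][::-1]


def rotation_batch(image):
    """Return the four rotated copies of an image with labels 0..3,
    where label k means a counter-clockwise rotation of k * 90 degrees."""
    views, labels = [], []
    current = image
    for k in range(4):
        views.append(current)
        labels.append(k)
        current = rot90(current)
    return views, labels


image = [[1, 2],
         [3, 4]]
views, labels = rotation_batch(image)
for view, label in zip(views, labels):
    print(label, view)
```

When rotation prediction is used as an auxiliary objective instead of for pretraining, the cross-entropy on these four views is added to the supervised loss with a weighting factor, which is the extra hyperparameter mentioned earlier.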

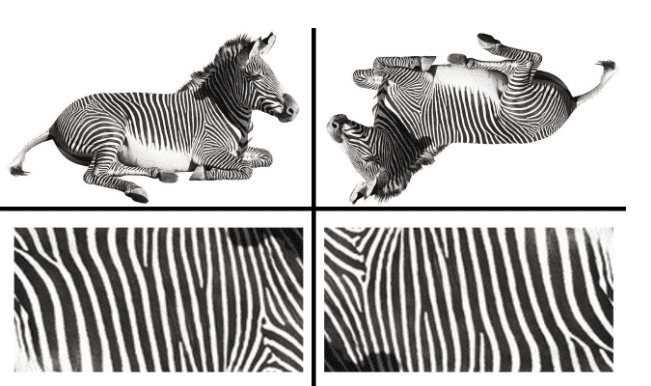

In the next example, the texture is not sufficient to determine whether the zebra is rotated. Thus, predicting rotation requires modeling shape, to some extent.

Fig. 7: The object's shape can be invariant to rotations. Source: Hendrycks et al. (NeurIPS 2019)

Hendrycks et al. used rotation prediction as an auxiliary objective alongside the supervised objective. Interestingly, they found that rotation prediction can improve robustness to adversarial examples, as well as to label and input corruptions. It also benefits supervised out-of-distribution detection. However, this principle may not hold for other objects, such as oranges, whose appearance barely changes under rotation.

So far, no hand-crafted pretext task (e.g., inpainting, jigsaw puzzles, etc.) has been widely adopted, which brings us to the next question: what is our best shot at learning informative representations?

The answer lies in self-supervised joint-embedding architectures.

DINO: self-distillation combined with Vision Transformers

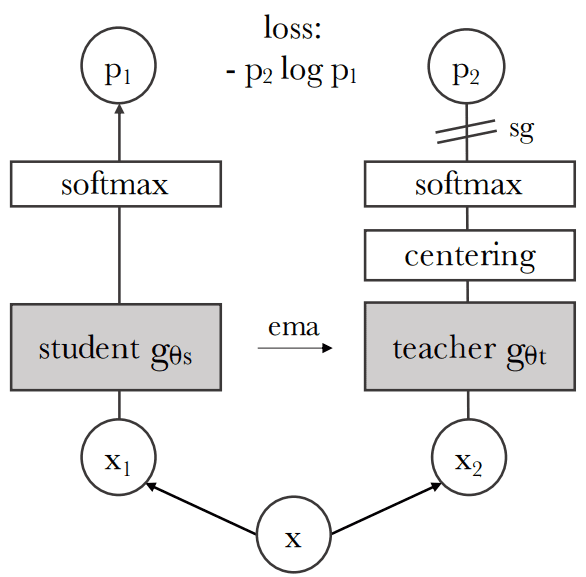

Over the years, a plethora of joint-embedding architectures has been developed. In this blog post, we will focus on the recent work of Caron et al., namely DINO.

Fig. 8: The DINO architecture. Source: Caron et al.

Here are the most critical components from the self-supervised learning literature:

- Strong stochastic transformations (cropping, jittering, solarization, blur) are applied to each image x to create a pair x1, x2 (the so-called views).
- Self-distillation: the teacher is built from past iterations of the student, where the teacher's weights are an exponential moving average of the student's weights.
- Multiple views are created for each image, precisely 8 local (96×96) and 2 global crops (224×224).

The aforementioned components had previously been explored by other joint-embedding approaches. Then why is DINO so important?

Well, because it was the first work to show the intriguing property of ViTs to learn class-specific features. Previous works had primarily focused on ResNets.

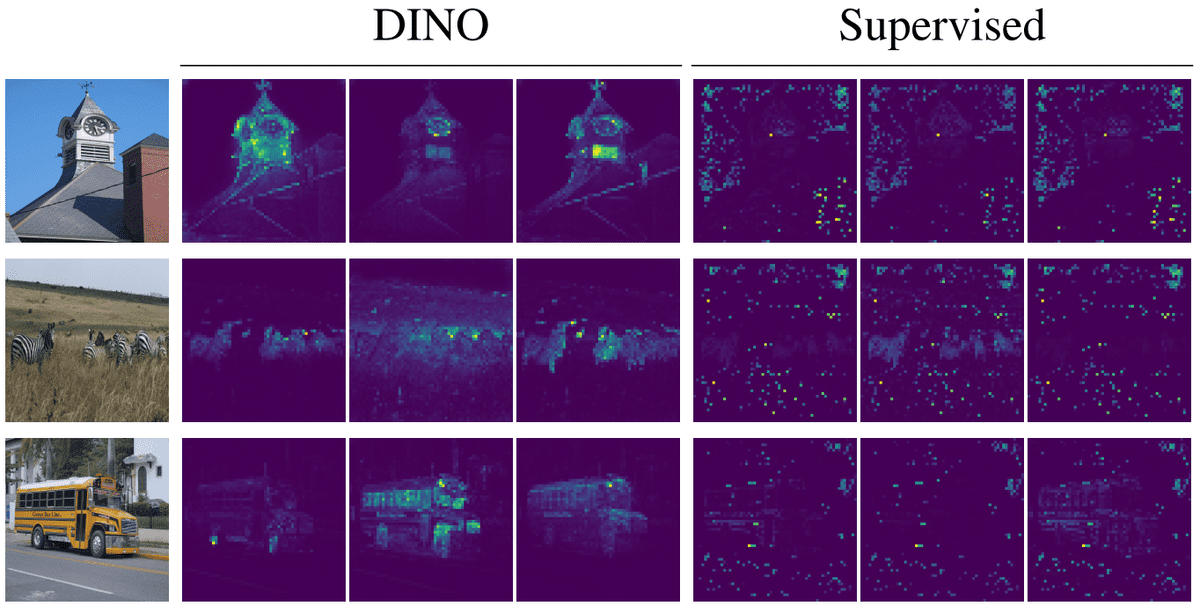

Fig. 9: Source: Caron et al.

For this visualization, the authors looked at the self-attention of the CLS token in the heads of the last layer. Crucially, no labels are used during self-supervised training. These maps demonstrate that the learned class-specific features lead to remarkable unsupervised segmentation masks that visibly correlate with the shape of the semantic objects in the images.
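Such a map is the softmax of the scaled dot products between the CLS query and the patch keys, reshaped to the patch grid (14×14 for a 224×224 image with 16×16 patches). Below is a single-head, pure-Python sketch on a made-up 2×2 grid; as a simplification, the CLS token's attention to itself is left out. The thresholding step (keeping the top patches that cover a fixed fraction of the attention mass) reflects our understanding of how DINO's masks are visualized, and the 0.6 fraction is an assumption.

```python
import math


def cls_attention(q_cls, keys):
    """softmax(q . k / sqrt(d)) of the CLS query over the patch keys."""
    d = len(q_cls)
    scores = [sum(q * k for q, k in zip(q_cls, key)) / math.sqrt(d) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def to_grid(values, side):
    """Reshape a flat list of side * side patch values into rows."""
    return [values[r * side:(r + 1) * side] for r in range(side)]


def mass_threshold_mask(weights, keep=0.6):
    """Mark the highest-attention patches until they cover `keep` of the mass."""
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    mask, covered = [0] * len(weights), 0.0
    for i in order:
        if covered >= keep:
            break
        mask[i] = 1
        covered += weights[i]
    return mask


q_cls = [1.0, 0.0]                           # hypothetical CLS query
keys = [[3.0, 0.0], [0.0, 1.0], [2.0, 0.0], [0.0, 0.0]]  # hypothetical patch keys
weights = cls_attention(q_cls, keys)
mask = mass_threshold_mask(weights)
print(to_grid(mask, 2))
```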

Regarding adversarial robustness, Bai et al. claim that ViTs attain robustness similar to that of CNNs in defending against both perturbation-based and patch-based adversarial attacks.

Therefore, neural networks are still quite sensitive to pixel information. The reason remains the same: the trained models rely solely on the visual signal.

One plausible way to learn more “abstract” representations lies in exploiting existing image-text paired data on the internet, without explicitly relying on human annotators. This is the so-called natural language supervision approach, popularized by OpenAI's CLIP.

Pixel-insensitive representations: natural language supervision

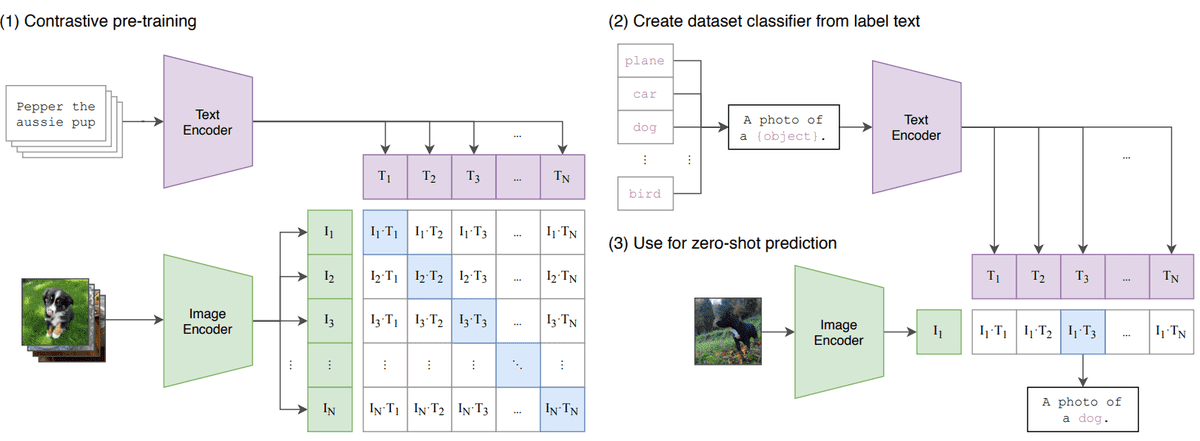

In CLIP, Radford et al. scraped a dataset of 400M image-text pairs from the web. Instead of having a single label (e.g., car) encoded as a one-hot vector, we now have a sentence. The captions are likely more descriptive than mere class labels.

The sentence is processed by a text transformer, and an aggregated representation is used. In this way, CLIP jointly trains the image encoder and the text transformer, with a contrastive objective that matches each image to its own caption within a batch.

Fig. 10: Source: Radford et al.
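The contrastive objective can be sketched as a symmetric cross-entropy over the similarity matrix of a batch: entry (i, j) is the scaled cosine similarity between image i and caption j, and the matching pairs on the diagonal are the targets, once along rows (image to text) and once along columns (text to image). The embeddings below are hand-written toy vectors, the encoders are omitted, and the temperature is fixed at 0.07, which is an assumption (CLIP learns it).

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed stably."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return lse - logits[target]


def clip_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss; pair i is (image_embs[i], text_embs[i])."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    # logits[i][j]: scaled cosine similarity between image i and caption j
    logits = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts] for i in imgs]
    n = len(logits)
    loss_image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    columns = [list(col) for col in zip(*logits)]
    loss_text_to_image = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (loss_image_to_text + loss_text_to_image) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
aligned = clip_loss(images, [[1.0, 0.1], [0.1, 1.0]])
shuffled = clip_loss(images, [[0.1, 1.0], [1.0, 0.1]])
print(aligned, shuffled)  # matching captions give a much lower loss
```

Every other caption in the batch acts as a negative, so larger batches provide more (and harder) negatives per image.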

Given that the label names are available for the downstream dataset, one can perform zero-shot classification by leveraging the text transformer and picking the image-text pair with the maximum similarity.
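Zero-shot classification thus reduces to a nearest-neighbor search in the shared embedding space: embed one prompt per class name (CLIP uses prompt templates such as "a photo of a {label}."), embed the image, and pick the class with the highest cosine similarity. The text encoder below is a hypothetical lookup table standing in for the trained text transformer, and the image embedding is made up; only the decision rule is meant to be illustrative.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_classify(image_emb, class_names, embed_text, template="a photo of a {}."):
    """Return the class whose prompt embedding is most similar to the image,
    together with all similarity scores."""
    scores = {name: cosine(image_emb, embed_text(template.format(name)))
              for name in class_names}
    return max(scores, key=scores.get), scores


# Hypothetical text encoder: a fixed lookup table instead of a transformer.
TOY_TEXT_EMBEDDINGS = {
    "a photo of a cat.": [0.9, 0.1, 0.0],
    "a photo of a dog.": [0.2, 0.9, 0.1],
    "a photo of a car.": [0.0, 0.1, 0.95],
}
label, scores = zero_shot_classify([0.8, 0.3, 0.1], ["cat", "dog", "car"],
                                   TOY_TEXT_EMBEDDINGS.__getitem__)
print(label, scores)
```

No classifier weights are trained for the downstream dataset; the text embeddings of the class prompts play the role of the final linear layer.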

Notice how robust the model is compared to a supervised ResNet with respect to (w.r.t.) data perturbations like sketches.

Fig. 11: Source: Radford et al.

Since the model was trained with far more data, sketches were likely included in the web-scraped data, along with image captions that are more descriptive than simple class labels. Its accuracy on natural adversarial examples is still remarkable.

Insight: “The presence of features that represent conceptual categories is another consequence of CLIP training” ~ Ghiasi et al.

In contrast to supervised ViTs, wherein features detect single objects, CLIP-trained ViTs produce features in deeper layers that are activated by objects in clearly discernible conceptual categories.

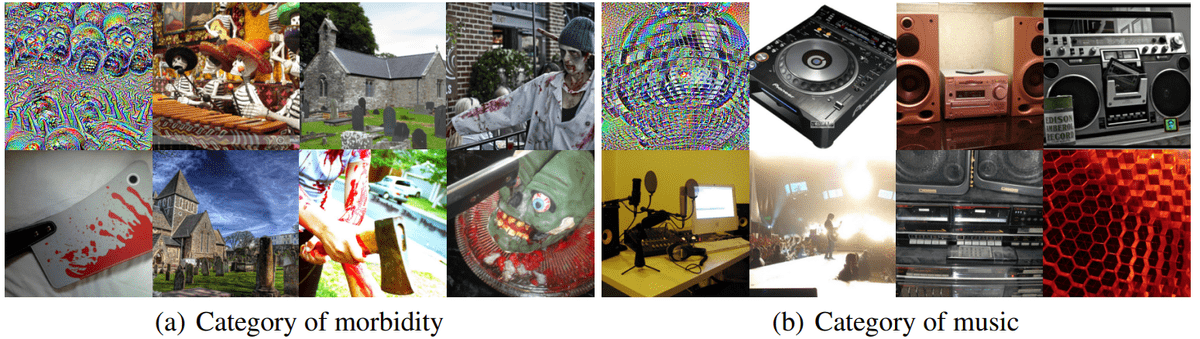

Fig. 12: Features from a ViT trained with CLIP that relate to the categories of morbidity and music. Source: Ghiasi et al.

Left (a): a feature activated by what resembles skulls alongside tombstones. The remaining seven images (with the highest activation) include other semantic classes such as bloody weapons, zombies, and skeletons. These classes have very dissimilar attributes pixel-wise, suggesting that the learned feature is broadly related to the abstract concept of “morbidity”. Right (b): we observe that the disco ball features are related to boomboxes, speakers, a record player, audio recording equipment, and a performer.

CLIP models thus create a higher-level organization of the objects they recognize than standard supervised models.

From the above comparison, it is not clear whether the superior accuracy stems from the architecture, the pretraining objective, or the enlarged training dataset. Fang et al. have shown through extensive testing that the large robustness gains stem from the pretraining data distribution. Precisely:

“CLIP's robustness is dominated by the choice of training distribution, with other factors playing a small or non-existent role. While language supervision is still helpful for easily assembling training sets, it is not the primary driver for robustness” ~ Fang et al.

Now we move back to the common supervised setups.

Robustness of ViTs versus ResNets under multiple perturbations

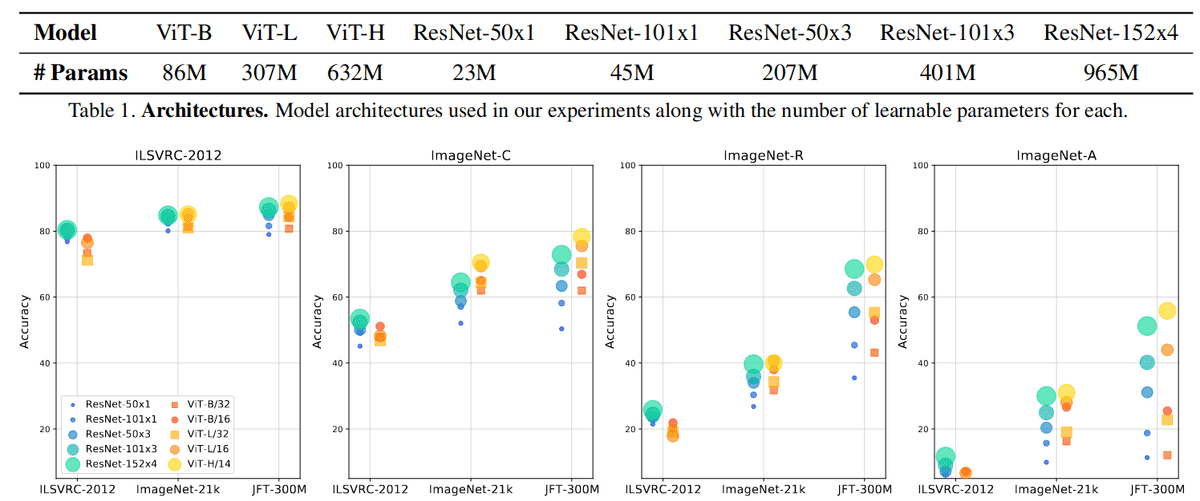

Google AI has conducted extensive experiments to study the behavior of supervised models under different perturbation setups. In the standard supervised arena, Bhojanapalli et al. explored how robust ViTs and ResNets are against perturbations to the inputs as well as model-based perturbations.

Fig. 13: Source: Bhojanapalli et al.

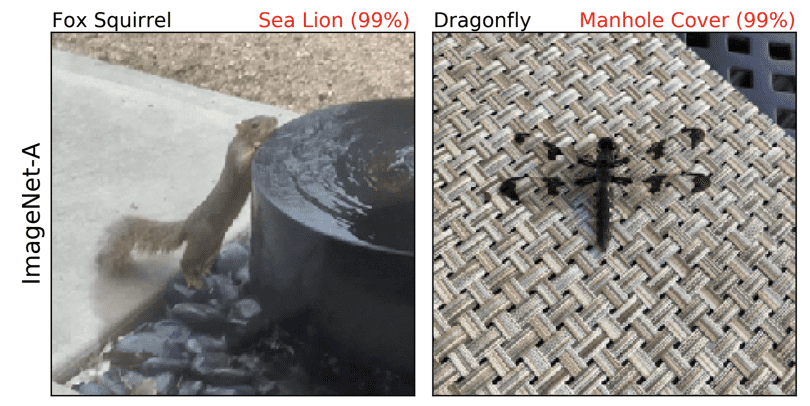

ILSVRC-2012 stands for ImageNet, ImageNet-C is a corrupted version of ImageNet, and ImageNet-R consists of images with real-world distribution shifts. ImageNet-A consists of natural adversarial examples, as illustrated below:

Fig. 14: Natural adversarial examples from Dan Hendrycks et al. Source

Here, the black text is the actual class, and the red text is a ResNet-50 prediction and its confidence.

The core findings of this study are summarized below:

ViTs scale better with model and dataset size than ResNets. More importantly, accuracy on the standard ImageNet validation set is predictive of performance under multiple data perturbations.

ViT robustness w.r.t. model-based perturbations: The authors noticed that, apart from the first transformer block, any single block can be removed without substantial performance deterioration. Moreover, removing self-attention layers hurts more than removing MLP layers.

ViT robustness w.r.t. patch size: ViTs also differ in robustness depending on their patch size. Precisely, the authors found that smaller patch sizes make ViT models more robust to spatial transformations (e.g., roto-translations), but also increase their texture bias (undesirable). Intuitively, a patch size of 1 would discard all the spatial structure (a flattened image), while a patch size close to the image size would limit fine-grained representations; for example, multiple objects in the same patch would share the same embedding vector. The rough natural language equivalent of a patch size of 1 would be character-level encoding, while a very large patch size would conceptually correspond to representing multiple sentences with a single embedding vector.

ViT robustness w.r.t. global self-attention: Finally, restricting self-attention to be local instead of global has a relatively small impact on the overall accuracy.

Experimental results from this study are quite convincing, but they offer no explanation for the observed behavior. This brings us to the NeurIPS 2021 paper “Intriguing Properties of Vision Transformers.”
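One simple way to make self-attention local is to mask out, before the softmax, every key whose patch lies outside a window around the query patch. The sketch below builds such a boolean mask on the patch grid and applies it to one row of attention scores; the window radius and the uniform scores are illustrative, and we do not claim this is exactly the restriction used by Bhojanapalli et al.

```python
import math


def local_attention_mask(grid, radius):
    """mask[q][k] is True if patch k lies within `radius` rows and columns of
    patch q on a grid x grid patch layout (Chebyshev distance)."""
    coords = [(r, c) for r in range(grid) for c in range(grid)]
    return [[max(abs(rq - rk), abs(cq - ck)) <= radius for (rk, ck) in coords]
            for (rq, cq) in coords]


def masked_softmax(scores, mask_row):
    """Softmax over the allowed keys only; masked keys get weight 0."""
    m = max(s for s, ok in zip(scores, mask_row) if ok)
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, mask_row)]
    total = sum(exps)
    return [e / total for e in exps]


mask = local_attention_mask(grid=14, radius=1)  # 14 x 14 patches = 196 tokens
print(sum(mask[0]), sum(mask[15]))  # a corner patch sees 4 keys, an interior patch 9
weights = masked_softmax([1.0] * 196, mask[15])
```

With global attention every row of the mask would be all True; the finding above says that removing most of those long-range connections costs surprisingly little accuracy.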

Intriguing Properties of Vision Transformers

In this excellent work, Naseer et al. investigated the learned representations of ViTs in greater depth. Below are the main takeaways:

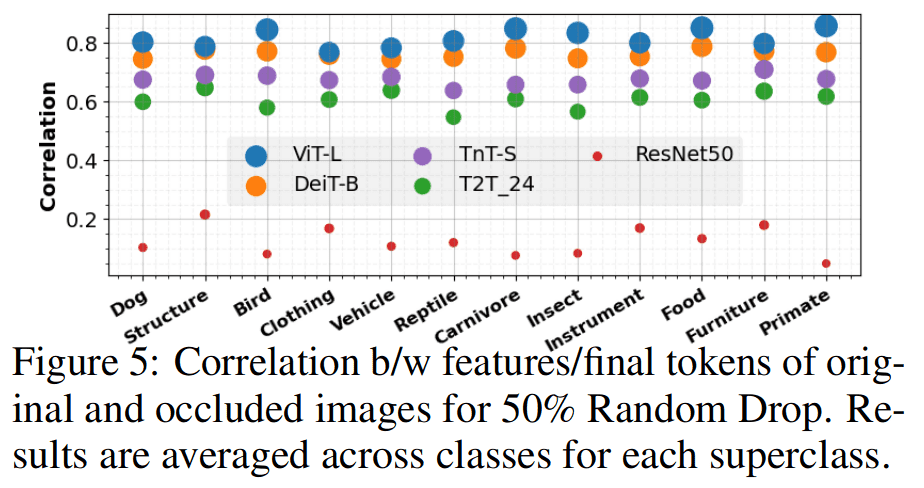

1) ViTs are highly robust to occlusions, permutations, and distribution shifts.

Fig. 15: Study of robustness against occlusions. Source: Naseer et al. NeurIPS 2021

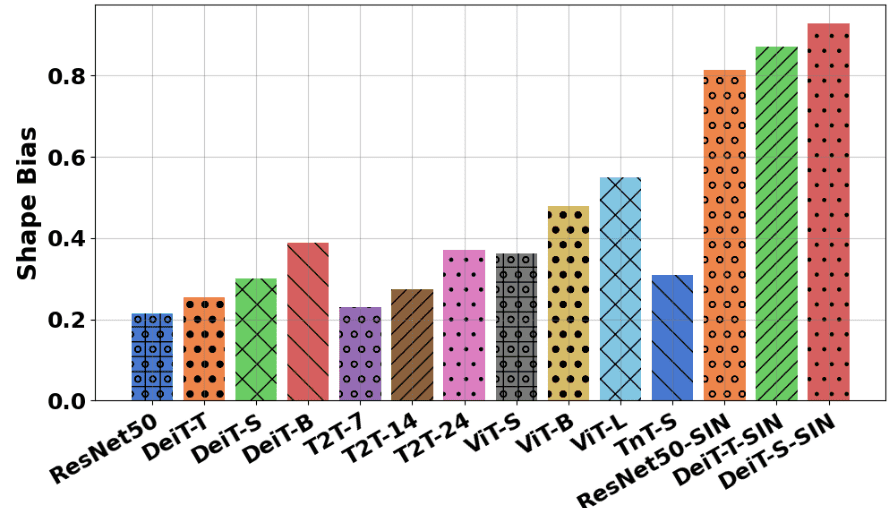

2) The robustness w.r.t. occlusions is not due to texture bias. ViTs are significantly less biased towards local textures than CNNs.

Fig. 16: Source: Naseer et al. NeurIPS 2021

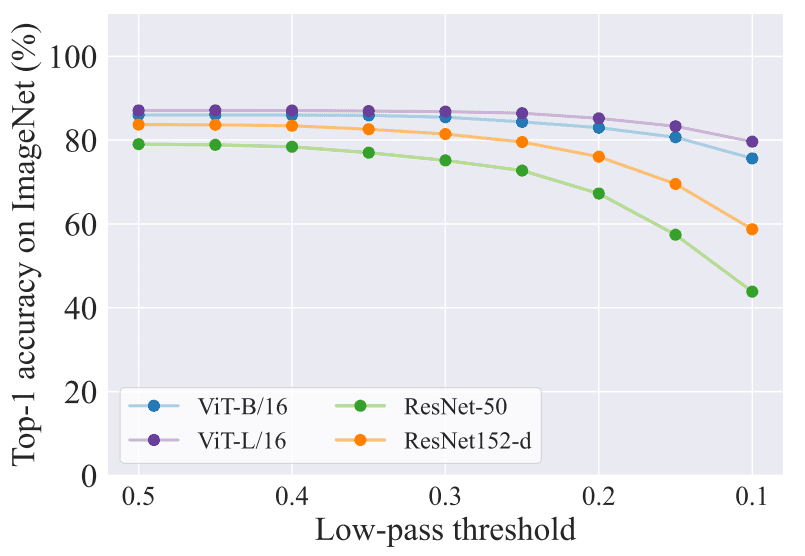

The latter finding is in line with a recent work that applied a low-pass filter to the images. Textures are high-frequency features, so the smaller the low-pass threshold, the lower the maximum frequency that is kept.

Fig. 17: Source: Ghiasi et al.

ResNets are more dependent on high-frequency (and probably texture-related) information than ViTs.

3) Using ViTs to encode shape-based representations leads to an interesting consequence: accurate semantic segmentation without pixel-level supervision.

Fig. 18: Source: Naseer et al. NeurIPS 2021

Automatic segmentation of images using the CLS token. Top: supervised DeiT-S model. Bottom: DeiT-S trained on SIN (Stylized ImageNet).
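To see why low-pass filtering removes texture-like detail, consider a 1-D signal made of a slow "shape" component and a fast "texture" component: transform it to the frequency domain, zero every frequency above a cutoff, and transform back. The naive O(n²) DFT below is only for illustration (image pipelines would use a 2-D FFT), and the signal and cutoff are made up.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def low_pass(x, cutoff):
    """Zero all frequencies whose distance from DC exceeds `cutoff`.
    Index k and n - k hold the positive and negative versions of one frequency."""
    n = len(x)
    spectrum = dft(x)
    for k in range(n):
        if min(k, n - k) > cutoff:
            spectrum[k] = 0
    return [v.real for v in idft(spectrum)]


n = 64
smooth = [math.sin(2 * math.pi * 2 * t / n) for t in range(n)]          # low frequency
texture = [0.3 * math.sin(2 * math.pi * 20 * t / n) for t in range(n)]  # high frequency
signal = [s + tx for s, tx in zip(smooth, texture)]
filtered = low_pass(signal, cutoff=5)
print(max(abs(f - s) for f, s in zip(filtered, smooth)))  # close to 0
```

A model that relies on the high-frequency component loses accuracy as the cutoff shrinks, while a model that relies on the low-frequency structure is barely affected, which is the pattern reported for ResNets versus ViTs.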

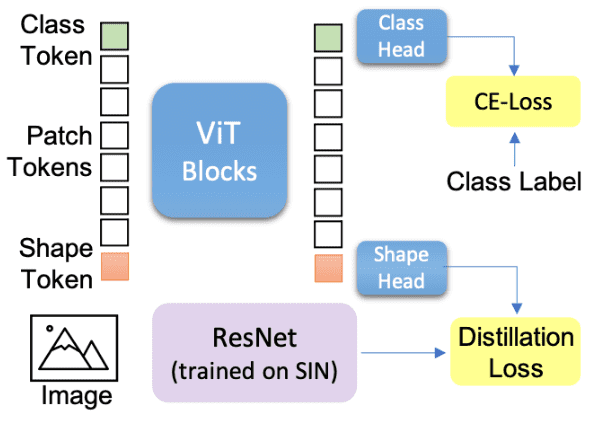

To enforce shape-based representations, they used token-based knowledge distillation. Therein, the model's auxiliary objective is to match the output of a ResNet pretrained on SIN. The KL divergence is used as the distillation loss.

Fig. 19: Token-based distillation with ViTs. Source: Naseer et al. NeurIPS 2021

The emerging background segmentation masks are quite similar to those of DINO. This indicates that both DINO and the shape-distilled ViT (DeiT) learn shape-based representations.
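The distillation term compares the class distribution predicted by the ViT with that of the SIN-trained ResNet teacher via the KL divergence. Below is a minimal sketch of a temperature-softened KL loss on made-up logits; the temperature and the T² scaling (a common convention from Hinton et al.'s knowledge distillation) are assumptions, not details taken from Naseer et al.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of logits / temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    lse = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - lse for z in scaled]


def kl_distillation(student_logits, teacher_logits, temperature=1.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by
    T^2 so that gradient magnitudes stay comparable across temperatures."""
    log_p_t = log_softmax(teacher_logits, temperature)
    log_p_s = log_softmax(student_logits, temperature)
    kl = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_p_t, log_p_s))
    return kl * temperature ** 2


teacher = [1.0, 2.0, 3.0]  # hypothetical logits of the SIN-trained ResNet
print(kl_distillation([1.0, 2.0, 3.0], teacher),
      kl_distillation([3.0, 2.0, 1.0], teacher, temperature=2.0))
```

During training, this term would be added to the usual supervised cross-entropy, so the ViT is pushed towards the teacher's shape-based decisions while still fitting the labels.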

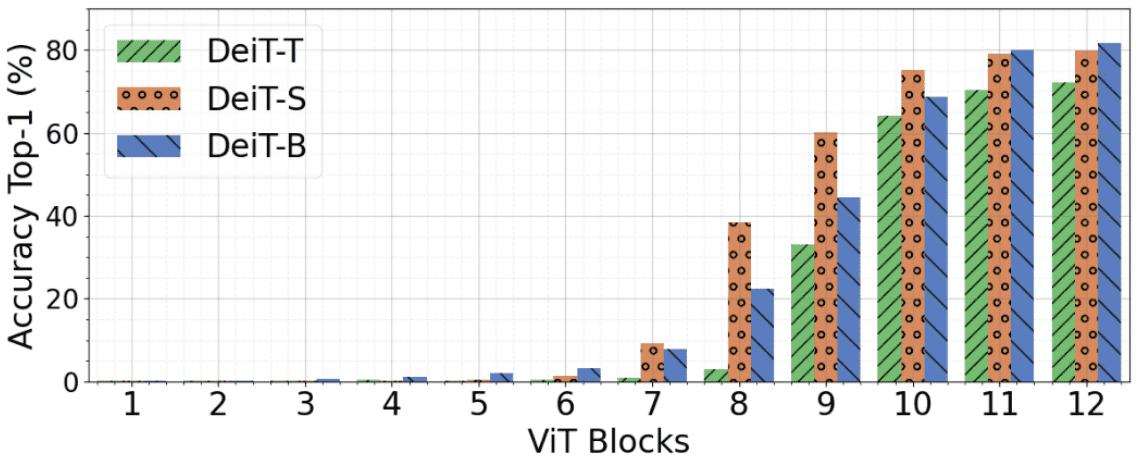

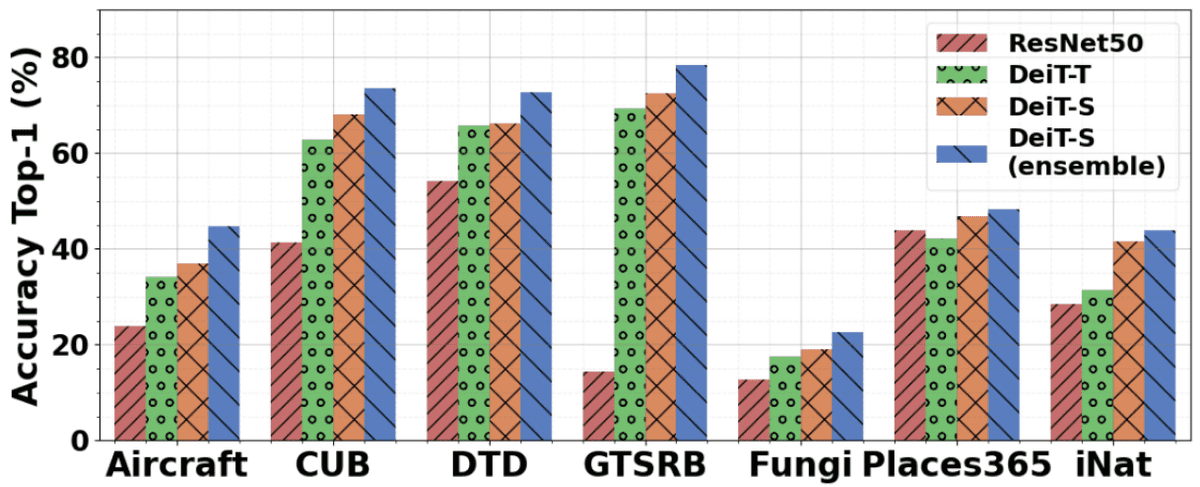

4) The learned ViT features from multiple attention layers (CLS tokens) can be combined to create a feature ensemble, leading to high accuracy across a range of classification datasets.

Fig. 20: Top-1 accuracy (%) on the ImageNet validation set for class tokens produced by each ViT block. Source: Naseer et al. NeurIPS 2021


“Class tokens from the last few layers exhibit the highest performance indicating the most discriminative tokens.” ~ Naseer et al.

5) ViT features generalize better than those of the considered CNNs. Crucially, the robustness and superiority of ViT features can be attributed to the flexible and dynamic receptive fields that probably originate from the self-attention mechanism.

Fig. 21: ViT features are more transferable. Source: Naseer et al. NeurIPS 2021

Finally, we present a concurrent work that also studied ViT robustness.

Vision Transformers are Robust Learners

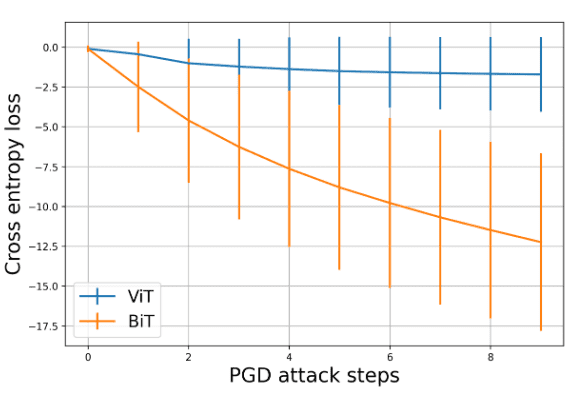

Sayak Paul and Pin-Yu Chen investigated the robustness of ViTs against: a) corruptions, b) perturbations, c) distribution shifts, and d) natural adversarial examples. More importantly, they used a stronger CNN-based baseline called BiT. The core results are the following:

- A longer pretraining schedule and a larger pretraining dataset improve robustness (in line with the findings above).
- Attention is key to robustness, which is consistent with all the presented works.
- ViTs are more robust to occlusions (image masking, etc.), as also shown by Naseer et al.
- ViTs have a smoother loss landscape with respect to input perturbations (see below).

Fig. 22: Loss progression (mean and standard deviation) of ViT-L/16 and BiT under different PGD adversarial attacks
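PGD (projected gradient descent, Madry et al.) is the iterative version of the gradient-sign attack: it takes several small signed-gradient steps and, after each one, projects the input back into an ε-ball (here L∞) around the original input. The sketch below uses a hypothetical toy logistic-regression model so the gradient can be written by hand, omits the usual random start, and records the loss after every step, i.e., the kind of loss progression plotted above; ε, the step size, and the number of steps are arbitrary illustration values, not those used by Paul and Chen.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_input_grad(w, b, x, y):
    """Binary cross-entropy of a logistic-regression model and its gradient w.r.t. x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return loss, [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def pgd_linf(w, b, x, y, eps=0.3, step=0.1, steps=10):
    """Iterated signed-gradient ascent, projected onto the L-infinity ball of radius eps.
    Returns the adversarial input and the loss before the first and after every step."""
    x_adv = list(x)
    losses = []
    for _ in range(steps):
        loss, grad = loss_and_input_grad(w, b, x_adv, y)
        losses.append(loss)
        x_adv = [xa + step * sign(g) for xa, g in zip(x_adv, grad)]        # ascent step
        x_adv = [min(max(xa, xo - eps), xo + eps) for xa, xo in zip(x_adv, x)]  # projection
    losses.append(loss_and_input_grad(w, b, x_adv, y)[0])
    return x_adv, losses


# Hypothetical toy model and input with label y = 1.
w, b = [2.0, -1.0, 0.5], 0.1
x, y = [0.6, 0.2, 0.4], 1
x_adv, losses = pgd_linf(w, b, x, y)
print([round(v, 3) for v in losses])
```

For this linear toy model the loss rises for a few steps and then plateaus once the projection becomes active; for deep networks, how quickly and how smoothly the loss rises is exactly what the figure compares between ViT-L/16 and BiT.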

Core Takeaways

To conclude, here is a brief list of the most critical takeaways from this blog post:

- ViTs scale better with model and dataset size than CNNs.
- ImageNet-pretrained CNNs are biased towards texture.
- Shape-based representations generalize better out of distribution (are more transferable) than texture-based ones.
- ViTs are significantly less biased towards local textures than CNNs.
- ViTs are as vulnerable as CNNs to adversarial attacks and natural adversarial examples.
- ViTs are highly robust to occlusions, permutations, and distribution shifts.
- ViTs trained with shape-based distillation or self-supervised learning (DINO) learn representations that implicitly encode the foreground (background segmentation maps).
- ViTs achieve superior out-of-distribution generalization compared to CNNs.

If you find our work interesting, you can cite us as follows:

@article{adaloglou2023robustness,
title = "Understanding Vision Transformers (ViTs): Hidden properties, insights, and robustness of their representations",
author = "Adaloglou, Nikolas and Kaiser, Tim",
journal = "https://theaisummer.com/",
year = "2023",
url = "https://theaisummer.com/vit-properties/"
}

Alternatively, support us by sharing this article on social media. It feels extremely rewarding and we really appreciate it! As always, thank you for your interest in deep learning and AI.

References

* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.