Over the past decade, we've seen that neural networks can perform tremendously well on structured data like images and text. Most of the popular models, such as convolutional networks, recurrent networks and autoencoders, work very well on data that have a tabular format like a matrix or a vector. But what about unstructured data? What about graph data? Is there a model that can learn efficiently from them? You probably guessed it from the title. The answer is Graph Neural Networks.

Graph Neural Networks (GNNs) were introduced back in 2005 (like all the other good ideas), but they only started to gain popularity in the last 5 years. GNNs are able to model the relationships between the nodes in a graph and produce a numeric representation of it. This matters because so much real-world data can be represented as a graph: social networks, chemical compounds, maps and transportation systems, to name a few. So let's find out the basic principles behind GNNs and why they work.

Let's define our basic problem first: we want to map a given graph to a single label, which can be a numeric value, a class or whatever really. In other words:

F(Graph) = label

And we want to find the function F. For example, imagine that each graph is a chemical compound or a molecule and the label is the likelihood that this molecule can be used to produce a certain drug. If we have a way to extract the label from every graph, we have essentially found a way to predict which molecules are more likely to be used in a drug. Cool, right?

How do we do that? We already know a type of neural network that can be used on graphs (sort of). If you think about it, recurrent neural networks can operate on a special type of graph: a chained graph (a graph that is basically a line). Time series are actually chained graphs, where each timestamp is a node followed by the next timestamp.

So, in fact, we can build a network where each graph node is a recurrent unit (an LSTM or something else) and the information of the node is an embedding that is transferred through the chain (like a message). And because the units are all recurrent, the information won't be lost when the embedding travels through the graph. These are our familiar recurrent neural networks, exactly the same as the ones used in language translation and other natural language processing applications.

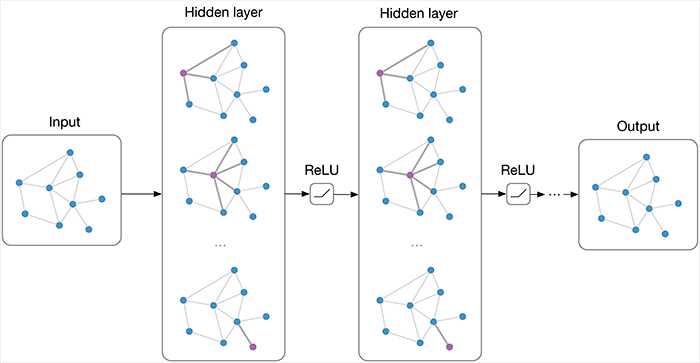
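To make the chain picture a bit more concrete, here is a minimal sketch in plain Python. It assumes a toy scalar recurrent unit (`rnn_cell`, with made-up weights `w_h` and `w_x`) instead of a real LSTM; the names and numbers are purely illustrative and not from any library.

```python
import math

def rnn_cell(h, x, w_h=0.5, w_x=1.0):
    """Toy scalar recurrent unit (an assumption, not an LSTM):
    mixes the incoming embedding h with the node's own input x."""
    return math.tanh(w_h * h + w_x * x)

def run_chain(series):
    """Treat a time series as a chained graph and pass the embedding
    (the hidden state) from node to node, like a message."""
    h = 0.0          # initial embedding before the first node
    states = []
    for x in series:  # each timestamp is one node in the chain
        h = rnn_cell(h, x)
        states.append(h)
    return states

states = run_chain([0.1, 0.4, -0.2])
```

The last entry of `states` is the embedding that has travelled through the whole chain, so it depends on every earlier node.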

We can of course extend this idea to proper graphs and we get this:

Graph neural networks: Variations and applications

This slide is taken from an awesome talk about GNNs by Microsoft Research. Let's see for a moment what is going on here.

Each orange triangle used to be a graph node and is now replaced by a recurrent unit. The envelopes represent the embeddings of the nodes that will travel through the graph. Each graph edge is also replaced by a neural network that captures the information of the edge (its weight).
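As a rough illustration of an edge acting as a small network, the sketch below transforms the sender's embedding with the edge weight before it reaches the receiver. The function `edge_network`, its `scale` and `bias` parameters and the toy graph are all assumptions made for this example; a real GNN would learn these parameters.

```python
import math

def edge_network(sender_emb, edge_weight, scale=1.0, bias=0.0):
    """Toy one-layer 'network' for an edge (hypothetical): the message
    is the sender's embedding, modulated by the edge weight."""
    return [math.tanh(scale * edge_weight * x + bias) for x in sender_emb]

# Directed, weighted edges (source, destination) -> weight
weighted_edges = {("a", "b"): 2.0, ("c", "b"): 0.5}
emb = {"a": [0.3, -0.1], "b": [0.0, 0.0], "c": [0.3, -0.1]}

# Messages arriving at node "b", one per incoming edge
messages_to_b = [
    edge_network(emb[src], weight)
    for (src, dst), weight in weighted_edges.items()
    if dst == "b"
]
```

Nodes `a` and `c` carry the same embedding here, yet the message from `a` is stronger because its edge has a larger weight.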

Now for the learning part. At a single time step, each node pulls the embeddings from all its neighbors, calculates their sum and passes it along with its own embedding to the recurrent unit, which produces a new embedding. This new embedding contains the information of the node plus the information of all its neighbors. In the next time step, it will also contain the information of its second-order neighbors, and so on and so forth. The process continues until every node knows about all the other nodes in the graph. Each of the embeddings now holds information from all the other nodes. The final step is to collect all the embeddings and add them up, which gives us a single embedding for the whole graph.

https://tkipf.github.io/graph-convolutional-networks/
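The procedure described above can be sketched in a few lines of plain Python. This is only a minimal illustration under some assumptions: the recurrent unit is replaced by a toy `update` function with fixed, unlearned weights (`w_own`, `w_msg`), edges are unweighted, and the graph is a tiny three-node line. None of these names come from a real library.

```python
import math

def update(own, msg, w_own=0.5, w_msg=0.5):
    """Toy stand-in for the recurrent unit: combines a node's own
    embedding with the summed message from its neighbors."""
    return [math.tanh(w_own * o + w_msg * m) for o, m in zip(own, msg)]

def message_passing(adj, emb, steps):
    """adj: node -> list of neighbors; emb: node -> list of floats.
    Returns new embeddings after `steps` rounds; the input is not mutated."""
    dim = len(next(iter(emb.values())))
    for _ in range(steps):
        new_emb = {}
        for node, neighbors in adj.items():
            # Sum the neighbors' embeddings, dimension by dimension
            msg = [sum(emb[n][d] for n in neighbors) for d in range(dim)]
            new_emb[node] = update(emb[node], msg)
        emb = new_emb  # all nodes update simultaneously
    return emb

def readout(emb):
    """Add up all node embeddings into one embedding for the whole graph."""
    dim = len(next(iter(emb.values())))
    return [sum(v[d] for v in emb.values()) for d in range(dim)]

# Line graph 0 - 1 - 2; only node 0 starts with a signal
adj = {0: [1], 1: [0, 2], 2: [1]}
emb = {0: [1.0, 0.0], 1: [0.0, 0.0], 2: [0.0, 0.0]}

after_one = message_passing(adj, emb, steps=1)
after_two = message_passing(adj, emb, steps=2)
graph_embedding = readout(after_two)
```

After one step, node 2 still knows nothing about node 0 because they are two hops apart; after the second step, node 0's signal has reached it through node 1. That is the second-order neighbor effect in miniature.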

That's it. We did it. We managed to capture the whole graph in a single embedding. This embedding can now be used in any other model to perform classification, prediction, clustering, whatever. Let your imagination wander.
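As one possible downstream use, the sketch below feeds a graph embedding into a tiny logistic-regression style classifier that outputs a probability, in the spirit of the drug example. The embedding values, `weights` and `bias` are made-up numbers for illustration; in practice they would be learned from data.

```python
import math

def predict_probability(graph_embedding, weights, bias):
    """Hypothetical classifier head: a weighted sum of the graph
    embedding squashed into (0, 1) by a sigmoid."""
    z = sum(w * x for w, x in zip(weights, graph_embedding)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative values only, not learned parameters
p = predict_probability([0.8, -0.3], weights=[1.5, 0.7], bias=-0.2)
```

Here `p` plays the role of the label F(Graph): a score between 0 and 1 for the whole graph.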

If you want to experiment with Graph Neural Networks, I've got you covered:

I would personally go with the third one since it has better documentation, but it's your choice.

Here you go. Ta-ra, lad.
