Neural radiance fields (NeRFs) are slowly becoming the next hot topic in the world of Deep Learning. Since they were originally proposed in 2020, there has been an explosion of papers, as can be seen from CVPR’s 2022 submissions. Time magazine recently included a variation of NeRFs, called instant neural graphics primitives, in its best inventions of 2022 list. But what exactly are NeRFs and what are their applications?

In this article, I will try to demystify all the different terminologies such as neural fields, NeRFs, neural graphics primitives etc. To give you a preview, they all stand for the same thing depending on who you ask. I will also present an explanation of how they work by analyzing the two most influential papers.

What is a neural field?

The term neural field was popularized by Xie et al. and describes a neural network that parametrizes a signal. This signal is usually a single 3D scene or object, but that is not mandatory. We can also use neural fields to represent any type of signal (discrete or continuous), such as audio or images.

Their most popular use is in computer graphics applications such as image synthesis and 3D reconstruction, which is the main topic of this article.

Please note that neural fields have also been applied in other areas such as generative modeling, 2D image processing, robotics, medical imaging and audio parameterization.

In most neural field variations, fully connected neural networks encode the properties of objects or scenes. Importantly, one network needs to be trained to encode (capture) a single scene. Note that in contrast with standard machine learning, the goal is to overfit the neural network to a particular scene. In essence, neural fields embed the scene into the weights of the network.

Why use neural fields?

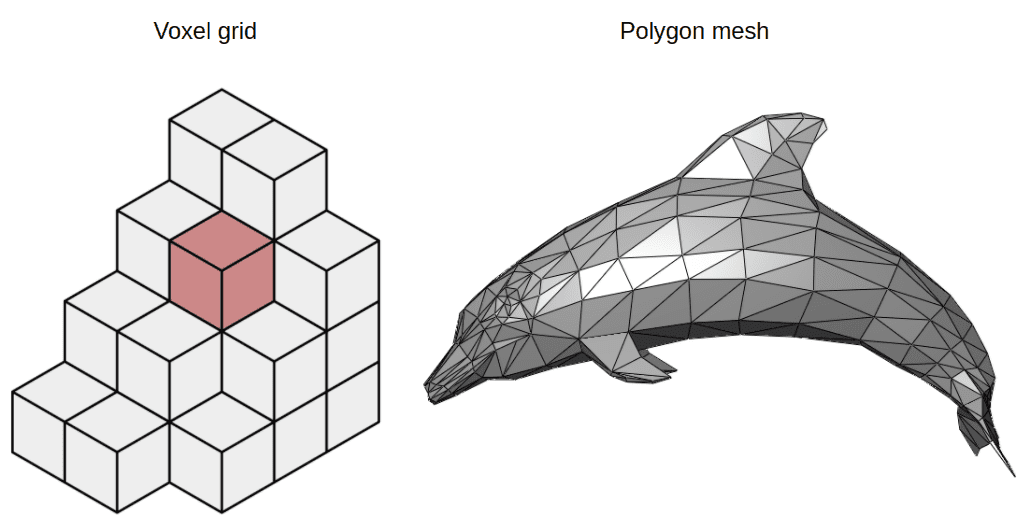

3D scenes are typically stored using voxel grids or polygon meshes. On the one hand, voxels are usually very expensive to store. On the other hand, polygon meshes can represent only hard surfaces and aren’t suitable for applications such as medical imaging.

Voxels vs Polygon meshes. Source: Wikipedia on Voxels, Wikipedia on Polygon Meshes

Neural fields have gained increasing popularity in computer graphics applications as they are very efficient and compact 3D representations of objects or scenes. Why? In contrast with voxels or meshes, they are differentiable and continuous. One other advantage is that they can also have arbitrary dimensions and resolutions. Plus they are domain agnostic and do not depend on the input for each task.

At this point, you may ask: where does the name neural fields come from?

What do fields stand for?

In physics, a field is a quantity defined for all spatial and/or temporal coordinates. It can be represented as a mapping from a coordinate $x$ to a quantity $y$, typically a scalar, a vector, or a tensor. Examples include gravitational fields and electromagnetic fields.
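To make the "mapping from a coordinate to a quantity" idea concrete, here is a minimal sketch of a scalar field and a vector field defined over the same 3D coordinates. The function names and the point-mass model of the Earth are my own assumptions, chosen only for illustration.

```python
import math

G = 6.674e-11          # gravitational constant (m^3 kg^-1 s^-2)
EARTH_MASS = 5.972e24  # kg, treated as a point mass at the origin (assumption)

def gravitational_potential(x, y, z, mass=EARTH_MASS):
    """Scalar field: maps a 3D coordinate (metres) to one number (J/kg)."""
    r = math.sqrt(x * x + y * y + z * z)
    return -G * mass / r

def gravitational_acceleration(x, y, z, mass=EARTH_MASS):
    """Vector field: maps the same coordinate to a 3D vector (m/s^2)."""
    r = math.sqrt(x * x + y * y + z * z)
    scale = -G * mass / r ** 3
    return (scale * x, scale * y, scale * z)

# Query both fields at a point on the Earth's surface.
surface_point = (6.371e6, 0.0, 0.0)
print(gravitational_potential(*surface_point))
print(gravitational_acceleration(*surface_point))
```

A neural field plays the same role as these functions, except that the mapping is learned by a network instead of being written down analytically.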

The next question you may ask: what are the steps to “learn” a neural field?

Steps to train a neural field

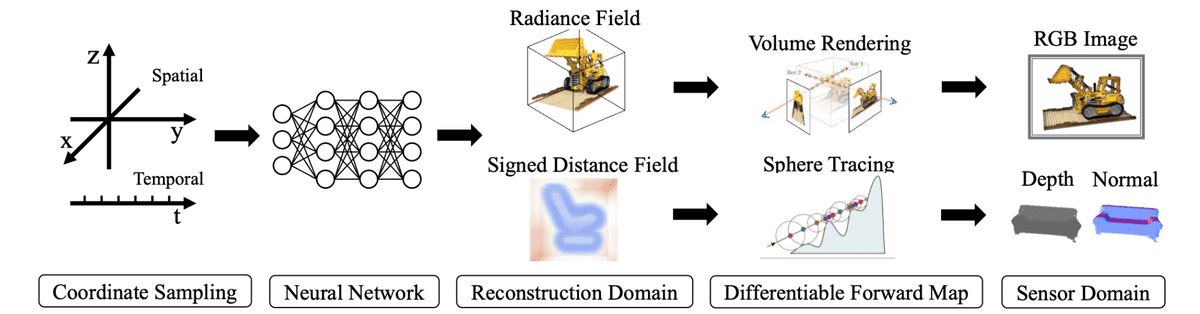

Following Xie et al., the typical process of computing neural fields can be formulated as follows:

- Sample coordinates of a scene.
- Feed them to a neural network to produce field quantities.
- Sample the field quantities from the desired reconstruction domain of the problem.
- Map the reconstruction back to the sensor domain (e.g. 2D RGB images).
- Calculate the reconstruction error and optimize the neural network.

A typical neural field algorithm. Source: Xie et al.
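The steps above can be sketched on a toy 1D signal. This is only an illustration under strong assumptions of mine: the "network" is a fixed sinusoidal encoding followed by a single trainable linear layer (a real neural field would use an MLP), the forward map is the identity, and names such as `target_signal` and `train` are hypothetical.

```python
import math
import random

random.seed(0)
NUM_FREQS = 4  # the toy model has 2 * NUM_FREQS trainable weights

def target_signal(x):
    """Stand-in for the sensor measurement at coordinate x in [0, 1)."""
    return math.sin(2 * math.pi * x) + 0.5 * math.sin(6 * math.pi * x)

def encode(x):
    """Fixed (untrained) sinusoidal encoding of the coordinate."""
    feats = []
    for k in range(1, NUM_FREQS + 1):
        feats.append(math.sin(2 * math.pi * k * x))
        feats.append(math.cos(2 * math.pi * k * x))
    return feats

def field(weights, x):
    """Toy neural field: one linear layer on top of the encoding."""
    return sum(w * f for w, f in zip(weights, encode(x)))

def forward_map(quantity):
    """Identity in this sketch; for NeRFs this would be volume rendering."""
    return quantity

def mse(weights, coords):
    """Mean squared reconstruction error over a list of coordinates."""
    errors = [(forward_map(field(weights, x)) - target_signal(x)) ** 2 for x in coords]
    return sum(errors) / len(errors)

def train(steps=500, batch_size=32, lr=0.05):
    weights = [0.0] * (2 * NUM_FREQS)
    for _ in range(steps):
        coords = [random.random() for _ in range(batch_size)]  # sample coordinates
        grads = [0.0] * len(weights)
        for x in coords:
            feats = encode(x)
            pred = forward_map(field(weights, x))   # network output -> sensor domain
            err = pred - target_signal(x)           # reconstruction error
            for i, f in enumerate(feats):
                grads[i] += 2.0 * err * f / batch_size
        weights = [w - lr * g for w, g in zip(weights, grads)]  # gradient step
    return weights

eval_coords = [i / 100 for i in range(100)]
print("error before:", mse([0.0] * (2 * NUM_FREQS), eval_coords))
print("error after: ", mse(train(), eval_coords))
```

Note that the model is deliberately overfit to this one signal, which mirrors how a neural field stores a single scene in its weights.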

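For clarity, let’s use some mathematical terms to denote the process. The reconstruction is a neural field, denoted as $\Phi : X \rightarrow Y$, that maps the world coordinates $x_{recon} \in X$ to field quantities $y_{recon} \in Y$. A sensor observation is also a neural field, $\Omega : S \rightarrow T$, that transforms the sensor coordinates $x_{sens} \in S$ into measurements $t_{sens} \in T$. The forward map $F : (X \rightarrow Y) \rightarrow (S \rightarrow T)$ is a mapping between the two neural fields and is differentiable.

As a result, we can solve the following optimization problem to calculate the neural field $\Phi$, where $\Theta$ denotes its parameters:

$$\arg\min_{\Theta} \int_{(x_{sens},\, t_{sens}) \in (S,\, T)} \left\| F(\Phi(x_{sens})) - t_{sens} \right\|$$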

The table below (Xie et al.) illustrates different applications of neural fields alongside their reconstruction and sensor domains.

Examples of forward maps. Source: Xie et al.

Let’s analyze the most popular architecture of neural fields, called NeRFs, which solves the problem of view synthesis.

Neural Radiance Fields (NeRFs) for view synthesis

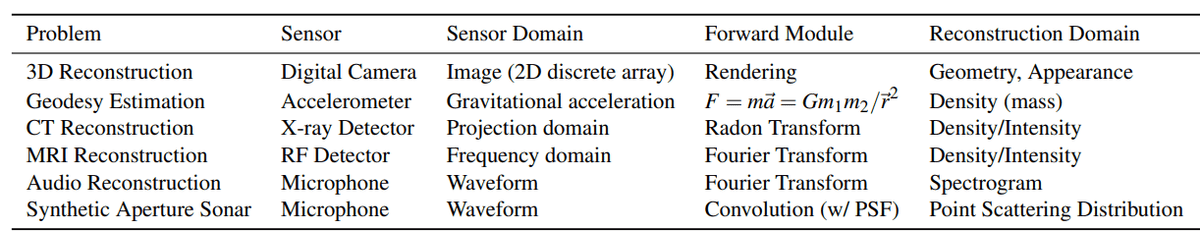

The most prominent neural field architecture is called Neural Radiance Fields or NeRFs. They were originally proposed in order to solve view synthesis. View synthesis is the task where you generate a 3D object or scene given a set of pictures from different angles (or views). View synthesis is almost equivalent to 3D reconstruction.

Multi-view 3D reconstruction. Source: Convex Variational Methods for Single-View and Space-Time Multi-View Reconstruction

Note that in order to fully understand NeRFs, one has to become familiar with many computer graphics concepts such as volumetric rendering and ray casting. In this section, I will try to explain them as efficiently as possible but also leave a few extra resources to extend your research. If you are looking for a structured course to get started with computer graphics, Computer Graphics by UC San Diego is the best one afaik.

NeRFs and Neural fields terminology side by side

As I already mentioned, NeRFs are a special case of neural fields. For that reason, let’s see a side-by-side comparison. Feel free to revisit this table once we explain NeRFs in order to draw the connection between them and neural fields.

| Neural Fields | Neural Radiance Fields (NeRF) |
|---|---|
| World coordinate | Spatial location and viewing direction |
| Field quantities | Color and volume density |
| Field | MLP |
| Sensor coordinates | 2D images |
| Measurements | Radiance |
| Sensor | Digital camera |
| Forward mapping | Volume rendering |

The reason I decided to first present neural fields and then NeRFs is to show that neural fields are a far more general framework.

NeRFs explained

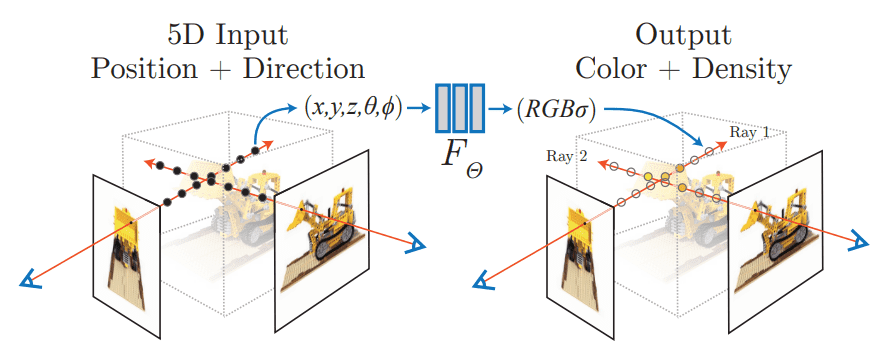

NeRFs, as proposed by Mildenhall et al., accept a single continuous 5D coordinate as input, which consists of a spatial location $(x, y, z)$ and a viewing direction $(\theta, \phi)$. This particular point of the object/scene is fed into an MLP, which outputs the corresponding color intensities $c = (r, g, b)$ and its volume density $\sigma$.

The (probability) volume density indicates how much radiance (or luminance) is accumulated by a ray passing through $(x, y, z)$ and is a measure of the “effect” this point has on the overall scene. Intuitively, the probability volume density provides the likelihood that the predicted color value should be taken into account.

Neural Radiance Fields. Source: Mildenhall et al.

The power of the neural field is that it can output different representations for the same point when viewed from different angles. As a result, it can capture various lighting effects such as reflections and transparencies, making it ideal for rendering different views of the same scene. This makes it a much better representation compared to voxel grids or meshes.
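To illustrate this input/output interface, here is a minimal sketch of a radiance field as a tiny, untrained MLP. The layer sizes, the random weights and every name in the block are assumptions of mine; the only design choice borrowed from the original paper is that the density is predicted from the position alone, while the color also sees the viewing direction (given here as a 3D unit vector rather than two angles).

```python
import math
import random

rng = random.Random(0)
HIDDEN = 16  # hypothetical hidden width, far smaller than a real NeRF

def make_layer(n_in, n_out):
    """Random weights and zero biases for one fully connected layer."""
    weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

def dense(layer, inputs):
    """Apply one fully connected layer to a list of inputs."""
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

trunk = make_layer(3, HIDDEN)            # sees the position only
density_head = make_layer(HIDDEN, 1)     # position features -> sigma
color_head = make_layer(HIDDEN + 3, 3)   # features + view direction -> rgb

def radiance_field(position, view_dir):
    """Map (x, y, z) and a unit view direction to (rgb, sigma)."""
    features = relu(dense(trunk, list(position)))
    sigma = relu(dense(density_head, features))[0]  # density is non-negative
    rgb = [sigmoid(v) for v in dense(color_head, features + list(view_dir))]
    return rgb, sigma

# Same point, two viewing directions: same density, different color.
print(radiance_field((0.1, 0.2, 0.3), (0.0, 0.0, 1.0)))
print(radiance_field((0.1, 0.2, 0.3), (1.0, 0.0, 0.0)))
```

Because the weights are random, the colors are meaningless here; the point is only that the same location can return a different color for each viewing direction.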

Training NeRFs

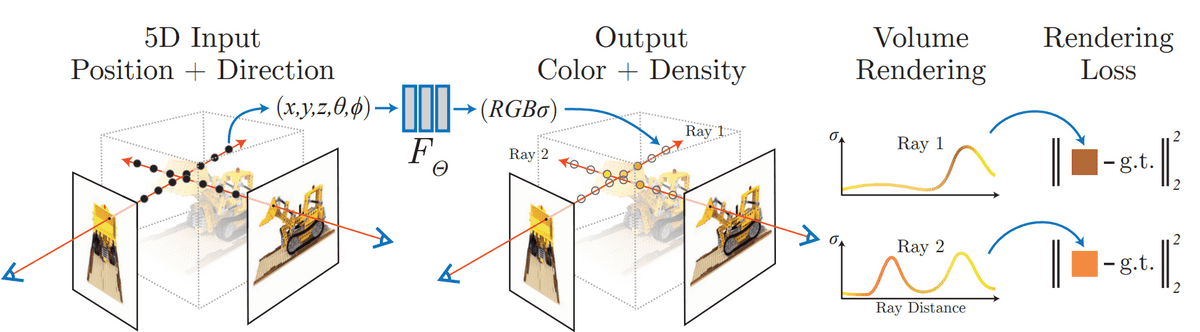

The problem with training these architectures is that the target density and color are not known. Therefore we need a (differentiable) method to map them back to 2D images. These images are then compared with the ground truth images, formulating a rendering loss against which we can optimize the network.

NeRFs training process. Source: Mildenhall et al.

As shown in the image above, volume rendering is used to map the neural field output back to the 2D image. The standard L2 loss can be computed using the input image/pixel in an autoencoder fashion. Note that volume rendering is a very common process in computer graphics. Let’s see briefly how it works.

Volume rendering

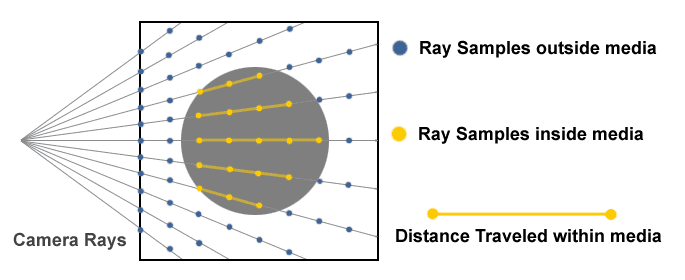

When sampling coordinates from the original images, we emit rays at each pixel and sample at different timesteps, a process known as ray marching. Each sample point has a spatial location, a color, and a volume density. The location, together with the viewing direction, is the input of the neural field, while the color and the density are its outputs.

A ray is a function of its origin $o$, its direction $d$, and its samples at timesteps $t$. It can be formulated as $r(t) = o + td$. Both the volume density and the color are dependent on the ray and can be denoted as $\sigma(r(t))$ and $c(r(t), d)$ respectively.
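As a small illustration of the ray formula $r(t) = o + td$, the sketch below samples points along one ray. The function names are hypothetical, and I use evenly spaced bin midpoints to keep the output deterministic, whereas the NeRF paper draws one random sample per bin (stratified sampling).

```python
def ray_point(origin, direction, t):
    """Evaluate r(t) = o + t * d for a single timestep t."""
    return tuple(o + t * d for o, d in zip(origin, direction))

def sample_along_ray(origin, direction, t_near, t_far, num_samples):
    """Split [t_near, t_far] into equal bins and sample each bin's midpoint."""
    step = (t_far - t_near) / num_samples
    ts = [t_near + (i + 0.5) * step for i in range(num_samples)]
    return ts, [ray_point(origin, direction, t) for t in ts]

# A camera at the origin looking down the z axis.
ts, points = sample_along_ray((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 2.0, 6.0, 4)
print(ts)      # timesteps along the ray
print(points)  # 3D locations that would be fed to the neural field
```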

Ray Marching. Source: Creating a Volumetric Ray Marcher by Ryan Brucks

To map them back to the image, all we have to do is integrate along these rays and acquire the color of each pixel:

$$C(r) = \int_{t_n}^{t_f} T(t)\, \sigma(r(t))\, c(r(t), d)\, dt$$

where $t_n$ and $t_f$ are the bounds of the ray and $T(t)$ is its transmittance. The transmittance is a measure of how much the ray can penetrate the 3D space up to a certain point and is defined as

$$T(t) = \exp\left(-\int_{t_n}^{t} \sigma(r(s))\, ds\right)$$
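In practice the integral is approximated with a sum over the samples of each ray. The sketch below follows the quadrature used in the NeRF paper, $C \approx \sum_i T_i (1 - e^{-\sigma_i \delta_i}) c_i$ with $T_i = \exp(-\sum_{j<i} \sigma_j \delta_j)$, where $\delta_i$ is the distance between adjacent samples. The function name `composite` and the toy inputs are my own assumptions.

```python
import math

def composite(sigmas, colors, deltas):
    """Approximate the pixel color of one ray from its samples.

    sigmas: volume density at each sample
    colors: (r, g, b) at each sample
    deltas: distance from each sample to the next one
    Returns the pixel color and the transmittance left after the last sample.
    """
    transmittance = 1.0                # T_i: light that survived so far
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weight = transmittance * alpha           # contribution of this sample
        pixel = [p + weight * c for p, c in zip(pixel, color)]
        transmittance *= 1.0 - alpha             # equals exp(-sigma * delta)
    return pixel, transmittance

# Empty space (density 0) followed by a dense red surface.
pixel, remaining = composite(
    sigmas=[0.0, 50.0],
    colors=[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[1.0, 1.0],
)
print(pixel, remaining)
```

The green sample contributes nothing because its density is zero, while the dense red sample absorbs practically all of the remaining transmittance. Every operation is differentiable, which is what allows the rendering loss to reach the network.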

The aforementioned technique, whereby you generate images or video by tracing a ray and integrating along it, is also referred to as neural rendering or differentiable rendering in the literature.

Differentiable ray marching. Source: Neural Volumes by Stephen Lombardi


For more details on volume rendering, check out this great lecture from Cem Yuksel and the University of Utah.

For a more complete explanation of NeRFs, here is a great video by Yannic Kilcher:

A few more notes on NeRFs

The number of variations and improvements over NeRFs has been growing rapidly over the past few years. These typically fall into four different categories:

- Aid reconstruction by computing good priors over the 3D scenes and conditioning the neural fields.
- Improve the performance and complexity of training/inference. This is frequently achieved using hybrid representations which combine neural fields with discrete data structures.
- Select better network architectures to eliminate spectral bias and efficiently compute derivatives and integrals.
- Manipulate neural field representations.

Note that it’s almost impossible to curate a good survey of all NeRF papers. Dellaert et al. and Xie et al. are the only good sources I could find. The authors of the latter have created an excellent database of related papers as well as a very useful Twitter account.

Instant Neural Graphics Primitives with a Multiresolution Hash Encoding

One of the most important papers following NeRFs is Instant Neural Graphics Primitives, as proposed by Müller et al. Notably, the authors from Nvidia manage to speed up the training from hours to a couple of seconds, using a novel input representation.

The authors utilize typical neural fields, also referred to as neural graphics primitives, combined with a novel representation of the input called multiresolution hash encoding. This type of encoding permits the use of small neural networks, reducing the total number of floating point operations needed.

As far as I know, neural graphics primitives is just a different terminology for neural fields.

Moreover, the authors suggest the use of specific GPU implementations for each task, reducing the overall computational complexity even more. One such proposal is to implement the entire MLP as a single CUDA kernel so that every calculation is performed in the GPU’s local cache.

Multiresolution Hash Encoding

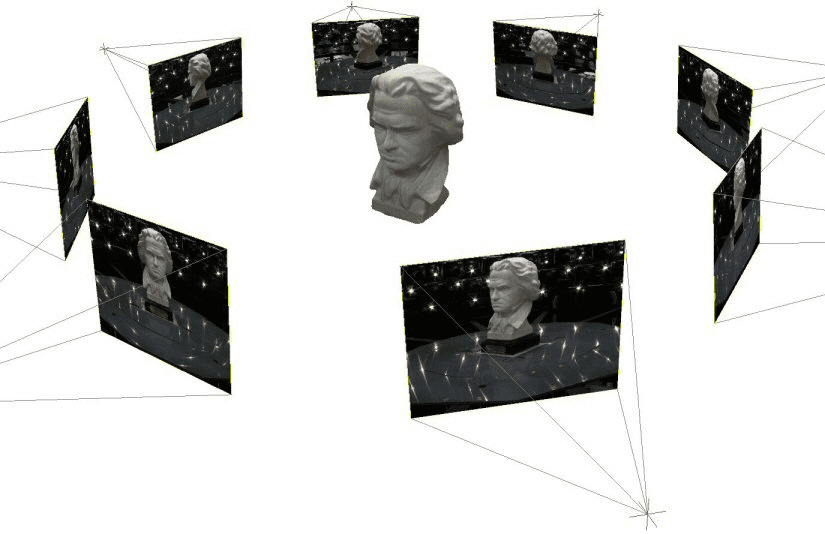

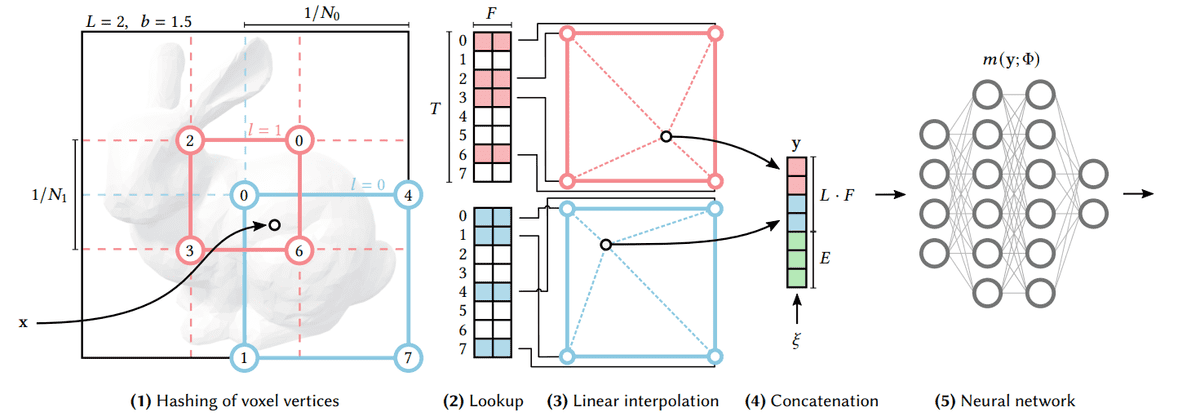

Let’s now talk about multiresolution hash encoding, which is arguably the most important contribution of the paper. We will consider a 2D example for simplicity, but the following steps generalize to 3 dimensions.

Instead of training only the network parameters, we also train encoding parameters (feature vectors). These vectors are arranged into different resolution levels and stored at the vertices of a grid. Each grid corresponds to a different resolution.

Starting with a specific location on a 2D image,

- We find the surrounding grids (pink and blue squares in the following figure) and assign indices to the vertices of the grids by hashing their coordinates.
- Because each resolution grid has a corresponding predefined hash table, we can simply look up the corresponding trainable feature vectors. Note that hashing the vertices gives the indices in the corresponding look-up tables.
- At each resolution level, we linearly interpolate the feature vectors of the surrounding vertices according to the relative position of the point inside its grid cell.
- Next up, we concatenate the interpolated vectors of all levels, alongside other auxiliary inputs, to produce the final vector.
- The resulting feature vector is passed into the neural network.

The whole procedure is fully differentiable. To train the encodings, the loss gradients are propagated through the MLP, concatenation, and linear interpolation, and then accumulated in the looked-up feature vectors. Also, it’s important to note that this procedure is entirely task-agnostic and can be used for different architectures and tasks besides NeRFs.

Multiresolution Hash Encoding. Supply: Müller et al.
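The encoding steps can be sketched in 2D as follows. This is a simplified illustration, not the authors' CUDA implementation: the function names, table sizes and resolutions are assumptions of mine, every level is hashed (the paper skips hashing for coarse levels that fit in the table), and the feature vectors are only initialized, not trained. The spatial hash (XOR of the coordinates multiplied by per-dimension primes, modulo the table size) and the small uniform initialization follow the paper.

```python
import math
import random

PRIMES = (1, 2654435761)  # first two per-dimension primes of the paper's hash

def hash_vertex(ix, iy, table_size):
    """Spatial hash of an integer grid vertex into a table index."""
    return ((ix * PRIMES[0]) ^ (iy * PRIMES[1])) % table_size

def make_tables(num_levels, table_size, feat_dim, seed=0):
    """One table of (normally trainable) feature vectors per resolution level."""
    rng = random.Random(seed)
    return [[[rng.uniform(-1e-4, 1e-4) for _ in range(feat_dim)]
             for _ in range(table_size)]
            for _ in range(num_levels)]

def hash_encode(x, y, tables, base_res=4, growth=2.0):
    """Encode a point (x, y) in [0, 1]^2 into one concatenated feature vector."""
    encoded = []
    for level, table in enumerate(tables):
        res = int(base_res * growth ** level)      # grid resolution of this level
        gx, gy = x * res, y * res
        x0, y0 = math.floor(gx), math.floor(gy)    # lower-left vertex of the cell
        wx, wy = gx - x0, gy - y0                  # position inside the cell
        corners = (
            (0, 0, (1 - wx) * (1 - wy)),
            (1, 0, wx * (1 - wy)),
            (0, 1, (1 - wx) * wy),
            (1, 1, wx * wy),
        )
        feat = [0.0] * len(table[0])
        for dx, dy, weight in corners:
            # Hash the vertex, look up its feature vector, blend bilinearly.
            entry = table[hash_vertex(x0 + dx, y0 + dy, len(table))]
            feat = [f + weight * e for f, e in zip(feat, entry)]
        encoded.extend(feat)                       # concatenate across levels
    return encoded

tables = make_tables(num_levels=3, table_size=16, feat_dim=2)
print(hash_encode(0.3, 0.7, tables))  # 3 levels x 2 features = 6 numbers
```

In a full pipeline this vector would be fed to the small MLP, and the gradients would flow back through the interpolation weights into the four looked-up entries of each level.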

But what do we gain from this somewhat complicated encoding?

- By training the encoding parameters alongside the network, we get a big boost in the quality of the final result.
- By using multiple resolutions, we gain an automatic level of detail, meaning that the network learns both coarse and fine features.
- By using hashing to associate the 3D space with feature vectors, the encoding process becomes entirely task-agnostic.

The following video provides an excellent deep dive into the paper for those interested.

Conclusion

In my opinion, NeRFs are one of the most exciting applications of neural networks of the past few years. Being able to render 3D models in a matter of seconds was simply inconceivable a couple of years ago. It won’t be long before we see these architectures enter the gaming and simulation industries.

To experiment with NeRFs, I recommend visiting the instant-ngp repo by Nvidia, installing the required dependencies and playing around by creating your own models.

If you’d like to see more articles on computer graphics, please let us know on our Discord server. Finally, if you like our blog posts, feel free to support us by buying our courses or books.
