Self-Supervised Learning (SSL) is a pre-training alternative to transfer learning. Even though SSL emerged from massive NLP datasets, it has also shown significant progress in computer vision. Self-supervised learning in computer vision started from pretext tasks like rotation, jigsaw puzzles or even video ordering. All of these methods formulated hand-crafted classification problems to generate labels without human annotators.

Why?

Because many application domains are deprived of human labels. To this end, self-supervised learning is one way to transfer weights: you pretrain your model on labels that are artificially produced from the data.

Nowadays, SSL has shifted to representation learning, which mostly happens in the feature space. I bet you have heard that before. But what does representation learning even mean?

According to David Marr’s book (Vision: A Computational Investigation), a representation makes explicit certain entities and types of information, and can be operated on by an algorithm to achieve some information processing goal. Deep learning is all about learning these representations.

In a self-supervised learning setup, we imply that the loss function is minimized in the space where the representations live: the feature space! Some may call it latent space or embedding space, but we’ll stick to the term feature space throughout this article.

So instead of solving a hand-crafted task, we try to create a robust representation by playing with feature vectors.

TL;DR

In this article we’ll:

- highlight the core principles of SSL that took me a lot of time to grok.
- introduce a general framework for SSL.
- describe the challenges, and introduce some practical tricks.

Self-supervised learning workflow

A typical framework for SSL has the following steps:

- Find unlabeled data, usually from the same domain (distribution).
- Decide on the representation learning objective (pretext task), or the method that you want to try.
- Choose your augmentations wisely.
- Train for a lot of epochs!
- Take the pre-trained feature extractor and fine-tune it with an MLP on top. MLP usually stands for 2 linear layers with a ReLU activation in between.
- Train on the downstream task without bells and whistles. You can fine-tune the pre-trained network or keep its weights frozen.
- Compare with a baseline. Yes, you should already have one! If you don’t, run the architecture without self-supervised pre-training.

The goal is of course to capture robust feature representations for the final (downstream) task. We don’t care about the pretraining performance.

Okay cool, how do we do that? Well, one way is by contrastive learning.

Contrastive Self-Supervised Learning (SSL)

Since we do not have labels, it is very common to distinguish data by comparison. GANs are the best-known example of learning by comparison, or contrastive learning as it is also known.

We teach the model what a fake image (negative sample) is compared to a real one (positive sample).

Contrastive learning is a training method whereby a classifier distinguishes between “similar” (positive) and “dissimilar” (negative) input pairs.

In our context, positives and negatives will be the image features. To that end, contrastive learning aims to align positive feature vectors while pushing away negative ones.

And that’s exactly where augmentations come into play. To make a positive pair, we apply 2 different stochastic transformations to the same image. To make a negative pair, we pair a transformed version of one image with a transformed version of a different image. When a transformation is applied to an image we call the result a ‘view’. In the simplest case we have 2 views of an image. But this choice is kind of arbitrary. Many approaches use more than 2 views, but for educational purposes we’ll stick to 2!
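To make the pairing concrete, here is a minimal, dependency-free sketch of how two views per image give positive and negative pairs. Everything in it is an illustrative assumption: the 1-D lists standing in for images, the helper names `toy_augment`, `make_two_views` and `positive_index`, and the convention that view k and view k + N come from the same image.

```python
import random


def toy_augment(image, rng, crop=4, noise=0.1):
    """Toy stochastic transform: random crop plus small intensity jitter.

    `image` is a 1-D list of floats standing in for a real image.
    """
    start = rng.randrange(len(image) - crop + 1)
    return [px + rng.uniform(-noise, noise) for px in image[start:start + crop]]


def make_two_views(batch, rng):
    """Return 2N views: views k and k + N are augmentations of image k."""
    first = [toy_augment(img, rng) for img in batch]
    second = [toy_augment(img, rng) for img in batch]
    return first + second


def positive_index(k, n_images):
    """Index of the view that forms a positive pair with view k."""
    return (k + n_images) % (2 * n_images)


rng = random.Random(0)
batch = [[float(i)] * 8 for i in range(3)]  # 3 constant toy "images"
views = make_two_views(batch, rng)

# View 0 and view 3 come from image 0 (positive pair);
# view 0 and view 1 come from different images (negative pair).
print(positive_index(0, len(batch)))
```

In a real pipeline the toy transform would be replaced by image augmentations, and every view that is not the positive of view k would act as a negative for it.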

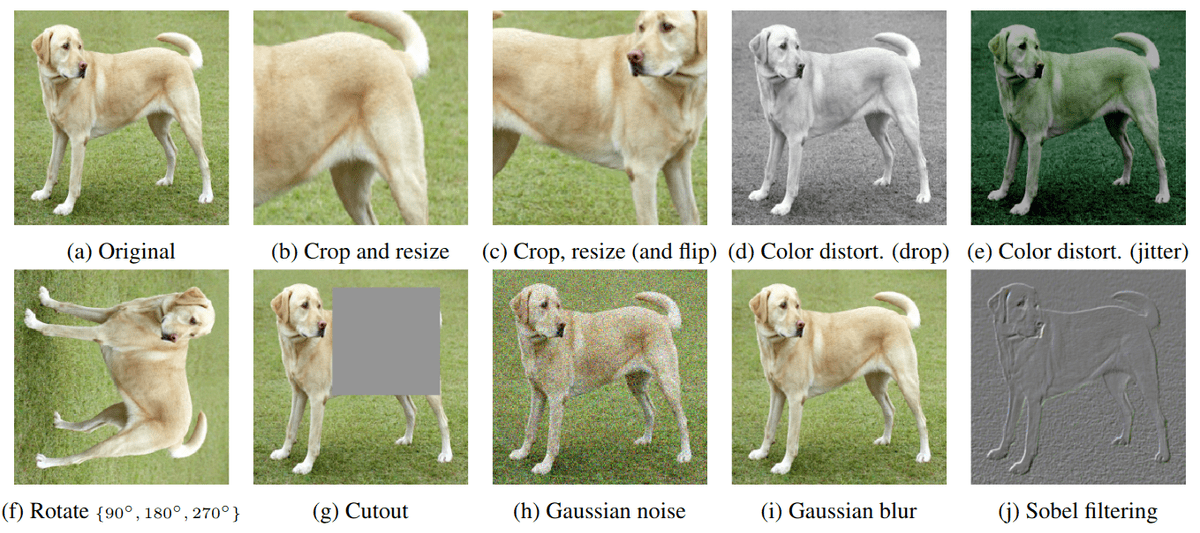

Here is an example of some augmentations that you can apply to an image:

Examples of image augmentations. Source: SimCLR

The question is obvious. Which ones are the best for the downstream task? How do you choose augmentations and why do some work better than others?

Augmentations and their principles

In language processing, you don’t care about augmentations. The pretext tasks are pretty straightforward. The most common task for NLP is to predict missing words in a sentence.

In computer vision, we are still stuck with augmentations.

Augmentations are an indirect way to pass human prior knowledge into the model.

However, it is not straightforward how to choose these augmentations. In the well-known SimCLR paper [1], an extensive analysis is provided to determine which ones work:

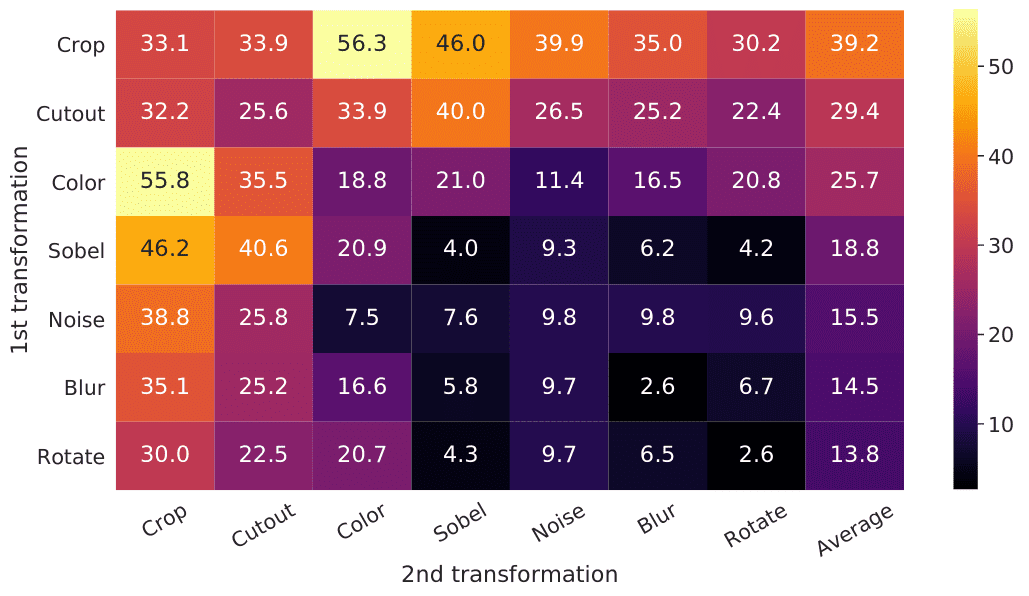

Augmentation ablation study of SimCLR. Source: SimCLR

The coloured percentage is the ImageNet Top-1 accuracy after pretraining with a combination of augmentations, as shown in the off-diagonal elements. The last column reflects the average over the row.

What does this diagram mean?

Simply, that colour distortion and cropping are the key transformations to produce our views for the considered dataset.

Based on the AutoAugment paper, in datasets like SVHN, geometric transformations are more desirable, while ImageNet and CIFAR work better with colour-based transformations.

This should provide us with some sort of intuition:

Augmentations should discard the features that are unimportant for the downstream task. For instance, one could say that they remove the “noise” when classifying an image. Whatever gets transformed, don’t pay attention to it!

Based on my short experience, here are the core principles:

- Augmentations should make sense in terms of the downstream task. To understand this one, consider rotation for natural images (90, 180, and 270 degrees). Even though it is used in fine-tuning or when training from scratch, you will not see it in SSL. Why? Because it heavily changes the semantics of natural images and scenes, which brings us to the following principle.
- Augmentations must maintain the image semantics (meaning). Anything that is invariant to the applied transformations must be the image semantics. We will then maximize the mutual information between the semantics of views of the same image.
- Augmentations must give the model a hard time. If the model minimizes the loss too quickly, it means that it’s not learning at all or that the augmentations are too easy. You can remove the crop & resize and see what happens ;).
- Finally, augmentations are dependent on the dataset’s diversity and size.

Next I’m referencing the augmentation pipeline for RGB images in PyTorch (for ImageNet):

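    import torch
    import torchvision.transforms as T

    img_size = 224
    s = 1.0  # colour distortion strength (assumed value; it was undefined in the snippet)

    # Jitter brightness, contrast, saturation and hue, all scaled by s
    color_jitter = T.ColorJitter(0.8 * s, 0.8 * s, 0.8 * s, 0.2 * s)
    blur = T.GaussianBlur((3, 3), (0.1, 2.0))

    # Expects float image tensors of shape (..., 3, H, W)
    train_transform = torch.nn.Sequential(
        T.RandomResizedCrop(size=img_size),
        T.RandomHorizontalFlip(p=0.5),
        T.RandomApply([color_jitter], p=0.8),
        T.RandomApply([blur], p=0.5),
        T.RandomGrayscale(p=0.2),
        # ImageNet channel statistics, applied at the very end
        T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    )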

Some papers also include image solarization, different augmentation pipelines for positives and negatives, or even different train and test augmentations. For small datasets like CIFAR, blurring can be a terrible idea as the images are already small and blurry.

Before we delve into the space of features and loss functions, let’s revisit some high school math.

Logarithmic properties, and temperature softmax

You have probably seen this in high school:

$$\log\left(\frac{a}{b}\right) = \log(a) - \log(b)$$

Notice how the division inside the logarithm can be written as a subtraction.

Softmax with temperature

A heavily used normalization layer before feeding the output to the loss functions is the softmax with temperature $\tau$:

$$p_i = \frac{\exp(x_i / \tau)}{\sum_{j} \exp(x_j / \tau)}$$

Intuition: the lower the temperature, the sharper the model’s predictions. The closer the temperature is to 0, the closer the output is to argmax.

Argmax can be thought of as a one-hot distribution where the element with the highest value will be 1 and the other elements will be 0. As such, a low temperature (<1) discourages the predictions from collapsing to a uniform distribution, which is undesirable.

Counterintuitively, self-supervised models are very sensitive to this hyperparameter! This hyperparameter is also used in the context of knowledge distillation.
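To see the effect of the temperature in numbers, here is a minimal sketch in plain Python. The function name `softmax_with_temperature` and the example logits are my own illustrative choices, not part of any library.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over `logits` after dividing each value by `temperature`."""
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - largest) for value in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [1.0, 2.0, 3.0]
sharp = softmax_with_temperature(logits, temperature=0.1)   # close to one-hot
plain = softmax_with_temperature(logits, temperature=1.0)
flat = softmax_with_temperature(logits, temperature=10.0)   # close to uniform
print(sharp, plain, flat)
```

With a temperature of 0.1 nearly all the probability mass lands on the largest logit, while a temperature of 10 gives three almost equal probabilities.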

Now that augmentations, logarithms and softmax are out of the way, let’s see how we create a self-supervised loss between image pairs without human labels.

Loss functions: the core idea behind SSL

Let’s use the aforementioned properties. By combining the softmax with the log we have:

$$\log(p_i) = \log \frac{\exp(x_i / \tau)}{\sum_{j} \exp(x_j / \tau)} = \frac{x_i}{\tau} - \log \sum_{j} \exp(x_j / \tau)$$

This is the core idea of self-supervised learning. The only difference is that instead of a vector $x$ we will have similarities of vector pairs.

The first term (numerator) is the “positive” pair similarity (+ in the math below). Interestingly, the second term is what we contrast the similarity against. It can be seen as a scalar, call it $c$.

And since we minimize a loss while we want to maximize the positive similarity, we need a minus sign:

$$\mathcal{L} = -\left(\frac{\text{sim}^{+}}{\tau} - c\right), \qquad c = \log \sum_{j} \exp(\text{sim}_j / \tau)$$

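Contrastive learning: SimCLR loss function

SimCLR [1] was among the first widely adopted methods proposed to learn contrastive visual representations.

For a batch of N images we get 2N views. The loss function for a positive pair of examples (i,j), contrasted against the other 2(N−1) views in the batch that act as negatives, is defined as:

$$\ell_{i,j} = -\log \frac{\exp(\text{sim}(z_i, z_j) / \tau)}{\sum_{k=1}^{2N} \mathbb{1}_{[k \neq i]} \exp(\text{sim}(z_i, z_k) / \tau)}$$

where $z$ are the feature vectors and sim is the cosine similarity. Admittedly, it’s not that far from the context I presented so far:

$$\ell_{i,j} = -\left(\frac{\text{sim}(z_i, z_j)}{\tau} - \log \sum_{k=1}^{2N} \mathbb{1}_{[k \neq i]} \exp(\text{sim}(z_i, z_k) / \tau)\right)$$

In SimCLR, the contrast term $c$ (the log-sum on the right) is computed from the other views in the batch, which are the negative pairs plus the positive pair itself. The general idea is mean subtraction (see section below).

In the beginning, I could easily get how vector similarity can align things, but how does this “pushing away” actually happen?

Well, it’s just logarithmic properties 🙂 Or even more simply, a subtraction by $c$.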
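Here is a small, framework-free sketch of the SimCLR-style loss for one positive pair, written so that the "positive similarity minus a contrast term" structure is visible. The function names, the toy 2-D features, the temperature of 0.5 and the convention that views k and k + N are positives are all assumptions made for illustration; this is not the reference implementation.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two feature vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def pair_loss(features, i, j, temperature=0.5):
    """Loss for the positive pair (i, j) among the 2N views in `features`."""
    positive = cosine_similarity(features[i], features[j]) / temperature
    # Every view except i itself takes part in the contrast term.
    others = [cosine_similarity(features[i], features[k]) / temperature
              for k in range(len(features)) if k != i]
    largest = max(others)  # stable log-sum-exp
    contrast = largest + math.log(sum(math.exp(s - largest) for s in others))
    return -(positive - contrast)


def batch_loss(features, temperature=0.5):
    """Average over all 2N ordered positive pairs (k, k + N)."""
    n_images = len(features) // 2
    total = 0.0
    for k in range(2 * n_images):
        total += pair_loss(features, k, (k + n_images) % (2 * n_images), temperature)
    return total / (2 * n_images)


# N = 2 images, so 4 views. In `aligned`, positives point the same way.
aligned = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1], [0.1, 1.0]]
misaligned = [[1.0, 0.0], [0.0, 1.0], [0.1, 1.0], [1.0, 0.1]]
print(batch_loss(aligned), batch_loss(misaligned))
```

The aligned features give the lower loss, because the positive similarity is large relative to the contrast term. The "pushing away" comes entirely from that subtracted log-sum.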

Why is this subtraction so important? Why do we care so much about subtracting something (implicit/explicit contrastive learning)?

As you have probably heard in the context of GANs, the main problem is mode collapse! Let’s formally introduce it in the context of self-supervised learning.

Mode collapse and regularization

Mode collapse in GANs

Mode collapse refers to the generator G failing to adequately represent the pixel space of all the possible outputs. Instead, G selects just a few limited influential modes that correspond to noise images. In this way, D is able to easily distinguish real from fake. Consequently, the loss of G gets highly unstable (due to exploding gradients).

Basically, G is stuck in a parameter setting where it always emits the same output.

After collapse has occurred, the discriminator learns that this output comes from the generator and the adversarial training losses diverge.

Mode collapse in self-supervised learning

Based on the DINO paper, 2 forms of mode collapse are identified in self-supervised learning [3]:

- regardless of the input, the model output is uniform along all the feature dimensions, which leads to random predictions. This means a cross-entropy of $\log K$ for $K$ classes.
- regardless of the input, the model output is dominated by one dimension, which leads to zero entropy.

In both cases, the input is disregarded, and the output is the same for all the inputs.
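The two collapse cases are easy to check numerically. The sketch below is a plain-Python illustration with an arbitrary, assumed output dimension of K = 8: a uniform output has the maximum entropy of log K, and an output dominated by one dimension has zero entropy.

```python
import math


def entropy(probs):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


K = 8  # assumed output dimension, chosen only for illustration
uniform_output = [1.0 / K] * K          # collapse to a uniform output
one_hot_output = [1.0] + [0.0] * (K - 1)  # collapse to a single dimension

print(entropy(uniform_output), math.log(K))
print(entropy(one_hot_output))
```

Monitoring the entropy of the outputs during pre-training is therefore a cheap way to spot either kind of collapse early.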

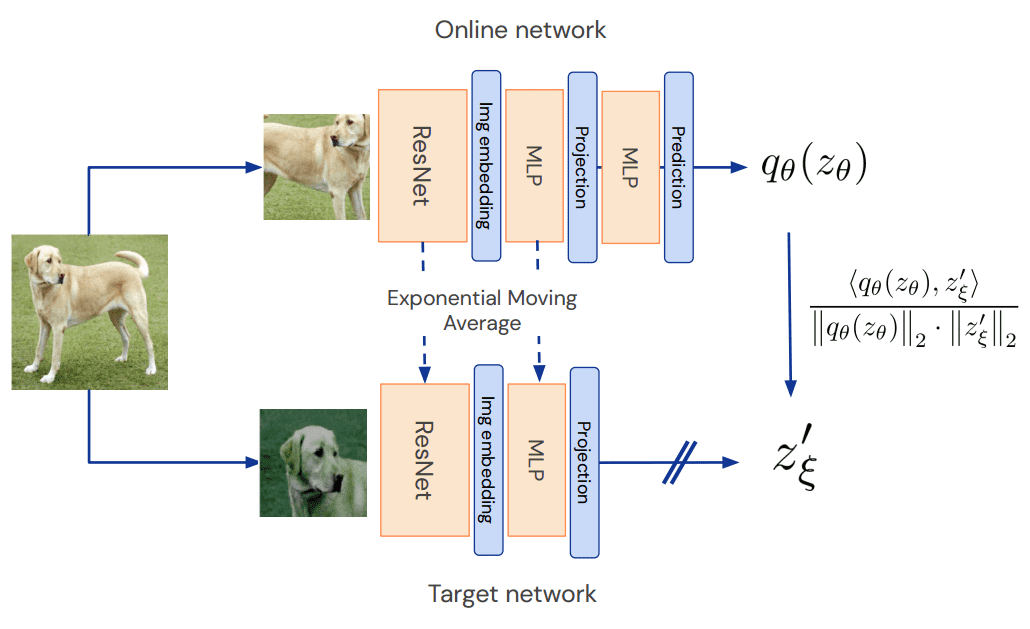

EMA (Exponential Moving Average)

Surprisingly, the identical feature extractors don’t both need to be updated with backpropagation. When this happens, the gradients don’t flow back to one of the networks. This operator is symbolized in the literature as stop-gradient.

The frozen model is called target or teacher, while the other network is called online or student.

So how do you change the parameters without backpropagation? If its parameters never change, the frozen network will keep outputting random feature vectors.

The answer is simple: since the networks share the same architecture, we average the parameters of the online network into the target network. This is achieved with the so-called Exponential Moving Average (EMA).

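We fuse a tiny portion of the trained weights (less than 5%) into the frozen weights:

$$\theta_{\text{target}} \leftarrow \lambda \, \theta_{\text{target}} + (1 - \lambda) \, \theta_{\text{online}}, \qquad \lambda > 0.95$$

This technique is necessary for some SSL methods to work.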
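As a rough sketch of the update, here is the EMA step in plain Python. Parameters are stored as a dict of float lists purely for illustration; the function name `ema_update` and the momentum of 0.99 are assumptions, and in a deep learning framework the same loop would run over the parameter tensors of the two networks without tracking gradients.

```python
def ema_update(target, online, momentum=0.99):
    """Blend a small fraction (1 - momentum) of the online weights into the target.

    Both arguments map parameter names to lists of floats. The target is
    updated in place and returned; the online weights are left untouched.
    """
    for name, online_values in online.items():
        target[name] = [momentum * t + (1.0 - momentum) * o
                        for t, o in zip(target[name], online_values)]
    return target


target_weights = {"w": [0.0, 1.0]}
online_weights = {"w": [1.0, 1.0]}

ema_update(target_weights, online_weights, momentum=0.99)
print(target_weights)
```

With a momentum of 0.99 only 1% of the online weights is mixed in per step, so the target changes slowly and smoothly.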

Regularization techniques

Finally, self-supervised learning needs heavy regularization. Why? Because the space of possible solutions is extremely large and there are a lot of chances of overfitting.

To counter this problem we usually use L2 weight regularization (weight decay), the LARS optimizer, learning rate warmup, learning rate decay, as well as batch normalization.
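As one possible way to combine warmup and decay, here is a small sketch of a learning rate schedule. The linear warmup followed by cosine decay is my assumption for illustration (the text above only says warmup and decay), and the function name `lr_at_step` and its arguments are hypothetical.

```python
import math


def lr_at_step(step, total_steps, warmup_steps, base_lr):
    """Linear warmup to `base_lr`, then cosine decay towards zero."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


schedule = [lr_at_step(step, total_steps=100, warmup_steps=10, base_lr=1.0)
            for step in range(101)]
print(schedule[0], schedule[9], schedule[55], schedule[100])
```

The learning rate ramps up over the first 10 steps, peaks at the base value, and then decays smoothly to zero by the last step.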

Abe Fetterman and Josh Albrecht experimented with many self-supervised approaches to draw experimental insights on the importance of implicit regularization and wrote an exceptional blog post about it. Based on their experimental analysis, they state that:

- Non-contrastive learning methods like BYOL [2] often perform no better than random (mode collapse) when batch normalization is removed.
- The presence of batch normalization implicitly causes a form of contrastive learning by subtracting the mean based on the mini-batch statistics.

Advanced: Mean subtraction

The core difference between established SSL methods is this scalar $c$, the term that the positive similarity is contrasted against. You can use the negative pairs from the batch. You can use an average of all the negative pairs throughout training. Or you can use mean subtraction from the batch (batch normalization), as in BYOL. Or you can even subtract the moving average of the features of all training batches. The latter was introduced by Facebook AI Research as another kind of implicit contrastive learning in DINO.

Keep in mind that there are methods that don’t use negative examples from the batch to contrast representations. One of them is BYOL [2]. However, the mean subtraction in this method comes from batch normalization, which is a form of implicit contrastive learning.
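To build some intuition for why mean subtraction acts as an implicit contrast, here is a toy sketch in plain Python. It covers only the mean-subtraction part of batch normalization (no variance scaling, no learned parameters), and the helper name `center_batch` and the example features are assumptions for illustration.

```python
def center_batch(features):
    """Subtract the per-dimension batch mean from every feature vector."""
    n_samples = len(features)
    n_dims = len(features[0])
    mean = [sum(f[d] for f in features) / n_samples for d in range(n_dims)]
    return [[f[d] - mean[d] for d in range(n_dims)] for f in features]


# A collapsed model emits the same vector for every input in the batch.
collapsed = [[0.7, -1.2, 3.0] for _ in range(4)]
# A healthy model emits different vectors for different inputs.
diverse = [[1.0, 0.0], [0.0, 1.0]]

print(center_batch(collapsed))
print(center_batch(diverse))
```

After centering, the collapsed batch carries no signal at all, while the diverse batch keeps exactly the part of each feature that differs from the rest of the batch. Each sample is effectively compared against the other samples, even though no explicit negatives are used.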

Moreover, in order to cope with mode collapse BYOL introduced other tricks. First, an additional MLP called the predictor network.

Source: BYOL paper, Jean-Bastien Grill et al. 2020

The predictor breaks the symmetry between the two networks. Secondly, BYOL also enforces an Exponential Moving Average (EMA) on the target network.

In this way, the online network slowly passes its weights to the target network. This can be thought of as a mean model distillation practice. The advantage is that the noise from the student is averaged and the target network makes more stable steps. Again, the target network is not being updated with gradient descent.

The surprising results of DINO: cross-entropy vs feature alignment

Maximizing the similarity of positive pairs directly maximizes mutual information. By applying softmax with temperature to both negatives and positives we actually pull far apart the hardest negative examples and bring together the closest positive image features.

In this sense, it was mind-blowing that an SSL method called DINO used cross-entropy.

Intuitively, cross-entropy applied after softmax (in a dimension with length K) is roughly equivalent to creating some sort of clusters (soft classes). In a supervised manner, the clusters would be the image classes. Besides, given a low temperature (<1) the model has to softly assign the input to one of the K clusters, similar to fully supervised training. The number of classes/clusters is chosen arbitrarily to be more than 60K. In my mind, the model extracts features based on the dataset and assigns similar features like wings of birds, faces, or dog shapes to these soft classes.
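The following plain-Python sketch shows a DINO-style cross-entropy between a teacher and a student output for a single view. It is a simplified illustration under stated assumptions: the function names, the toy logits with K = 3, the zero center and the temperature defaults (0.04 for the teacher, 0.1 for the student) are chosen for the example, and the multi-crop strategy and the moving-average update of the center are left out.

```python
import math


def softmax(logits, temperature):
    """Temperature softmax, returned as a list of probabilities."""
    scaled = [x / temperature for x in logits]
    largest = max(scaled)
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def log_softmax(logits, temperature):
    """Log of the temperature softmax, computed without taking log(0)."""
    scaled = [x / temperature for x in logits]
    largest = max(scaled)
    log_norm = largest + math.log(sum(math.exp(s - largest) for s in scaled))
    return [s - log_norm for s in scaled]


def dino_style_loss(student_logits, teacher_logits, center,
                    student_temp=0.1, teacher_temp=0.04):
    """Cross-entropy between a centered, sharpened teacher and the student."""
    centered = [t - c for t, c in zip(teacher_logits, center)]
    teacher_probs = softmax(centered, teacher_temp)
    student_log_probs = log_softmax(student_logits, student_temp)
    return -sum(p * lp for p, lp in zip(teacher_probs, student_log_probs))


teacher_out = [2.0, 0.5, 0.1]
center = [0.0, 0.0, 0.0]
agreeing_student = [2.0, 0.5, 0.1]
disagreeing_student = [0.1, 0.5, 2.0]

print(dino_style_loss(agreeing_student, teacher_out, center))
print(dino_style_loss(disagreeing_student, teacher_out, center))
```

The low teacher temperature turns the teacher output into an almost one-hot soft class, so the student is rewarded for assigning the view to the same cluster.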

Practical considerations

I’m closing up by providing you with some ideas and tricks to make your life easier and more sane if you are experimenting with SSL methods:

- Start with Adam before the LARS optimizer. You can find a PyTorch implementation of LARS here.
- Normalize/standardize the data at the very end of your augmentation pipeline, as the transformations may destroy the normalization. It is recommended to use the global stats of the dataset for mean/std standardization.
- Start with a small model like ResNet18 and train it for at least 300 epochs.
- Find a bigger dataset from the same domain (distribution), if possible.
- Have some form of evaluation during self-supervised pre-training. Examples are k-NN (k=1), or freezing the backbone and training a single linear layer for 100 epochs every couple of epochs. The latter is called linear evaluation in the literature.
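As an example of the cheapest evaluation mentioned above, here is a minimal k-NN (k=1) sketch in plain Python. It assumes you have already extracted features with the frozen backbone; the function names, the toy 2-D features and the use of cosine similarity as the distance measure are illustrative assumptions.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two feature vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def knn1_accuracy(train_feats, train_labels, test_feats, test_labels):
    """Label each test feature with the label of its most similar train feature."""
    correct = 0
    for feat, label in zip(test_feats, test_labels):
        nearest = max(range(len(train_feats)),
                      key=lambda k: cosine_similarity(feat, train_feats[k]))
        correct += int(train_labels[nearest] == label)
    return correct / len(test_feats)


# Toy features standing in for the output of a frozen backbone.
train_feats = [[1.0, 0.1], [0.9, 0.0], [0.0, 1.0], [0.2, 0.9]]
train_labels = ["cat", "cat", "dog", "dog"]
test_feats = [[0.8, 0.2], [0.1, 0.7]]
test_labels = ["cat", "dog"]

accuracy = knn1_accuracy(train_feats, train_labels, test_feats, test_labels)
print(accuracy)
```

Because no training is involved, this check can run every few epochs and gives a quick signal on whether the features are becoming more useful for the downstream task.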

Conclusion

If you learned something from this article, I would greatly appreciate you sharing it with your friends & colleagues as well as on your social media. In a future article, I’ll attempt to run SimCLR on a small dataset like STL10 (100K unlabelled images). Finally, you can enjoy the Machine Learning Street Talk interview of the well-regarded Dr. Ishan Misra from Facebook AI Research to learn more about the topic.

Cited as:

@article{adaloglou2021ssl,
    title = "Grokking self-supervised (representation) learning: how it works in computer vision and why",
    author = "Adaloglou, Nikolas",
    journal = "https://theaisummer.com/",
    year = "2021",
    url = "https://theaisummer.com/self-supervised-representation-learning-computer-vision/"
}

References

[1] Chen, T., Kornblith, S., Norouzi, M., & Hinton, G. (2020, November). A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning (pp. 1597-1607). PMLR.

[2] Grill, J. B., Strub, F., Altché, F., Tallec, C., Richemond, P. H., Buchatskaya, E., … & Valko, M. (2020). Bootstrap your own latent: A new approach to self-supervised learning. arXiv preprint arXiv:2006.07733.

[3] Caron, M., Touvron, H., Misra, I., Jégou, H., Mairal, J., Bojanowski, P., & Joulin, A. (2021). Emerging properties in self-supervised vision transformers. arXiv preprint arXiv:2104.14294.
