If you open any introductory machine learning textbook, you will find the idea of input scaling. It is undesirable to train a model with gradient descent on non-normalized features.

In this article, we will review and understand the most common normalization methods. Different methods have been introduced for different tasks and architectures. We will attempt to associate the tasks with the methods, although some approaches are quite general.

Why?

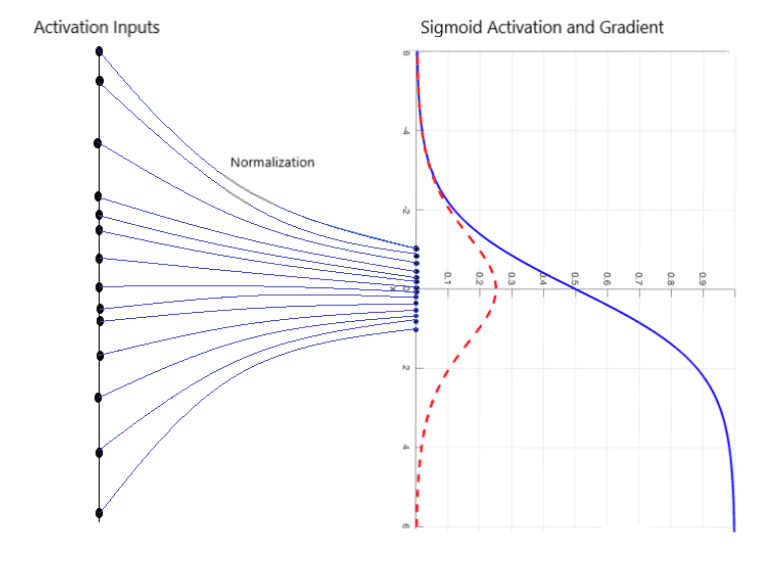

Let’s start with an intuitive example to understand why we want normalization inside any model.

Imagine what will happen if the input features lie in different ranges. Imagine that one input feature lies in the range [0, 1] and another in the range [0, 10000]. As a result, the model will simply ignore the first feature, given that the weights are initialized in a small and close range. You don’t even need exploding gradients to occur. Yep, that’s another problem you will face.
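
To make the scale argument concrete, here is a minimal NumPy sketch. The two features, the weight values and the sample count are illustrative assumptions, not taken from any real dataset. It compares how much each feature contributes to a linear pre-activation before and after standardization.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two hypothetical input features on very different scales.
small = rng.uniform(0.0, 1.0, size=1000)       # range [0, 1]
large = rng.uniform(0.0, 10000.0, size=1000)   # range [0, 10000]
X = np.stack([small, large], axis=1)           # shape [1000, 2]

# Weights initialized in a small and close range, as described in the text.
w = np.array([0.01, 0.01])

# Average absolute contribution of each feature to the pre-activation X @ w.
raw_contrib = np.abs(X * w).mean(axis=0)

# Standardize each feature to zero mean and unit variance.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)
std_contrib = np.abs(X_std * w).mean(axis=0)

print("raw contributions:         ", raw_contrib)
print("standardized contributions:", std_contrib)
```

Before scaling, the second feature dominates the sum by several orders of magnitude; after scaling, both features contribute at a comparable level.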

Similarly, we encounter the same issues inside the layers of deep neural networks. This concern is independent of the architecture (transformers, convolutional neural networks, recurrent neural networks, GANs).

If we think outside the box, any intermediate layer is conceptually the same as the input layer: it accepts features and transforms them.

To this end, we need to develop methods to train our models more effectively. Effectiveness can be evaluated in terms of training time, performance, and stability.

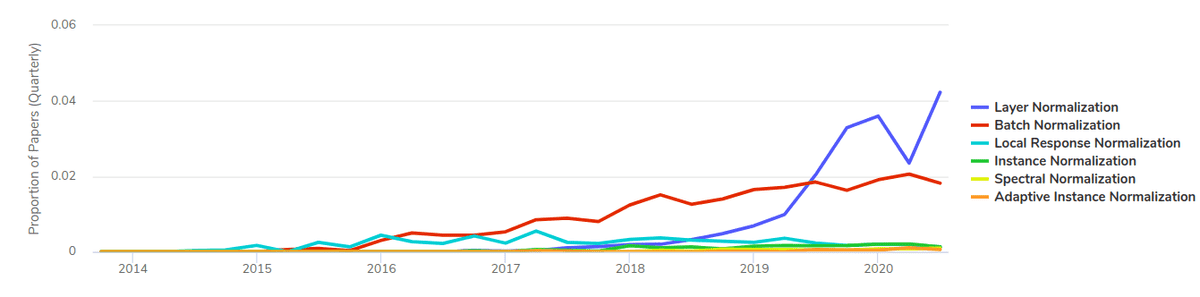

Below you can see a graph depicting the trends in normalization methods used by different papers over time.

Source: Papers with Code

To get a better grasp of all the fundamental building blocks of deep learning, we recommend the Coursera specialization.

Notations

Throughout this article, N will be the batch size, while H refers to the height, W to the width, and C to the feature channels. The Greek letter μ() refers to the mean and the Greek letter σ() refers to the standard deviation. The batch features are x with a shape of [N, C, H, W]. For the referenced style image I use the symbol y, while for the segmentation mask I use the symbol m or just mask. To recap:

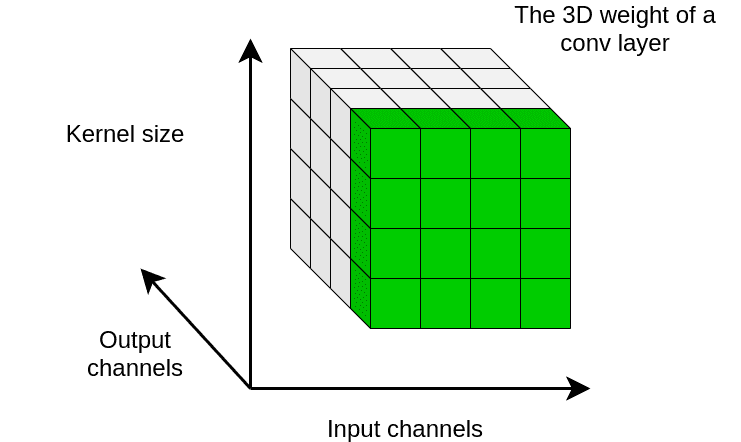

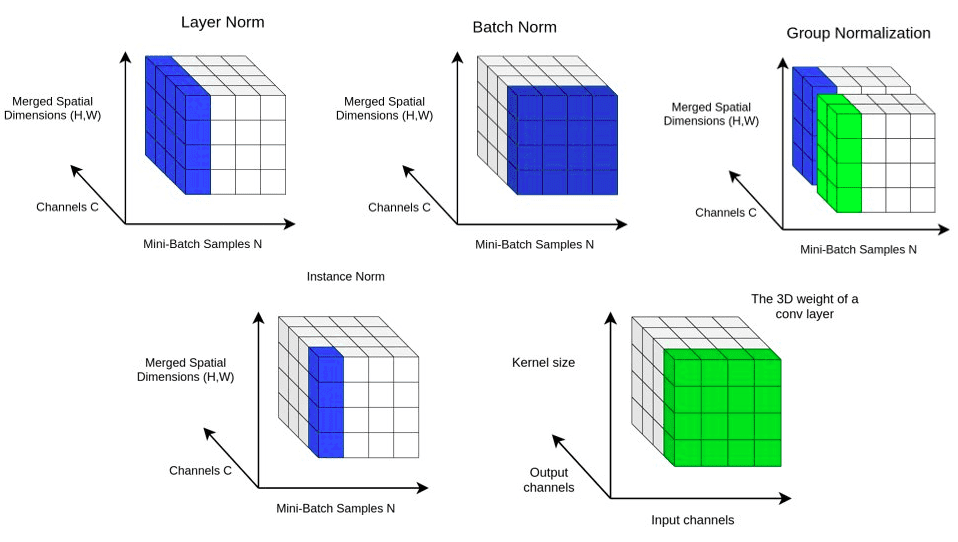

Besides, we will visualize the 4D activation maps x by merging the spatial dimensions. Now we have a 3D shape that looks like this:

A 3D visualization of the 4D tensor
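
As a small illustration of merging the spatial dimensions, the following NumPy sketch reshapes a [N, C, H, W] tensor into [N, C, H*W]. The sizes are arbitrary values chosen only for the example.

```python
import numpy as np

N, C, H, W = 2, 3, 4, 5   # arbitrary example sizes
x = np.arange(N * C * H * W, dtype=np.float64).reshape(N, C, H, W)

# Merge the two spatial axes into one, giving a 3D tensor [N, C, H*W].
x_3d = x.reshape(N, C, H * W)
print(x_3d.shape)  # (2, 3, 20)
```

Each row of the merged axis is simply the flattened feature map, so position (h, w) ends up at index h * W + w.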

Now, we are ready!

Batch normalization (2015)

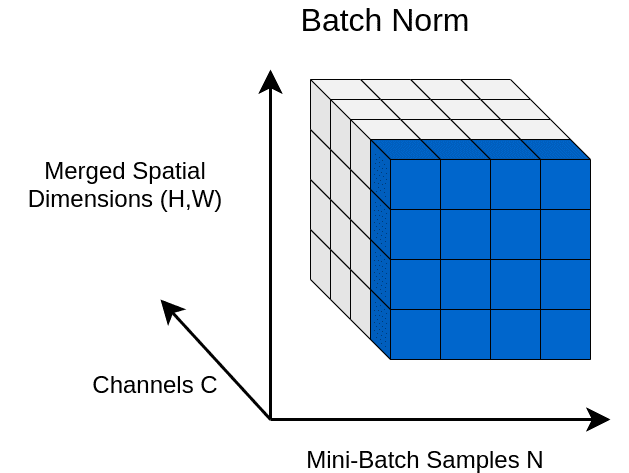

Batch Normalization (BN) normalizes the mean and standard deviation for each individual feature channel/map.

First of all, the mean and standard deviation of image features are first-order statistics. So, they relate to the global characteristics (such as the image style). In this way, we somehow blend the global characteristics. Such a strategy is effective when we want our representation to share these characteristics. This is the reason that we widely use BN in downstream tasks (e.g. image classification).

From a mathematical point of view, you can think of it as bringing the features of the image into the same range.

Image by MC.AI. It shows how batch norm brings the values into a compact range.

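Specifically, we demand that our features follow a Gaussian distribution with zero mean and unit variance. Mathematically, this can be expressed as:

$$BN(x) = \gamma \left( \frac{x - \mu(x)}{\sigma(x)} \right) + \beta$$

$$\mu_c(x) = \frac{1}{NHW} \sum_{n=1}^{N} \sum_{h=1}^{H} \sum_{w=1}^{W} x_{nchw}$$

$$\sigma_c(x) = \sqrt{ \frac{1}{NHW} \sum_{n=1}^{N} \sum_{h=1}^{H} \sum_{w=1}^{W} \left( x_{nchw} - \mu_c(x) \right)^2 + \epsilon }$$

Here ε is a small constant added for numerical stability.

Let’s see this operation visually: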

An illustration of Batch Norm.

Notably, the spatial dimensions, as well as the image batch, are averaged. This way, we concentrate our features in a compact Gaussian-like space, which is usually beneficial.

In fact, γ and β correspond to the trainable parameters that result in the linear/affine transformation, which is different for all channels. Specifically, γ and β are vectors with the channel dimensionality. The index c denotes the per-channel mean.

You can turn this option on or off in a deep learning framework such as PyTorch by setting affine = True/False in Python.
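
The per-channel normalization described above can be sketched in plain NumPy as follows. This is a minimal training-mode sketch under stated assumptions: the function name `batch_norm`, the `eps` value and the example tensors are illustrative, and the running statistics that frameworks keep for inference are left out. Passing no `gamma` and `beta` mimics switching the affine transformation off.

```python
import numpy as np

def batch_norm(x, gamma=None, beta=None, eps=1e-5):
    """Training-mode batch norm for x of shape [N, C, H, W]."""
    # Statistics are averaged over the batch and both spatial axes.
    mu = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mu) / np.sqrt(var + eps)
    if gamma is None or beta is None:
        return x_hat  # no affine transformation
    # gamma and beta are vectors of length C, broadcast per channel.
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(8, 4, 16, 16))
gamma = np.array([1.0, 2.0, 0.5, 1.0])
beta = np.array([0.0, 1.0, -1.0, 0.0])

y_plain = batch_norm(x)
y_affine = batch_norm(x, gamma, beta)
print(y_plain.mean(axis=(0, 2, 3)), y_plain.std(axis=(0, 2, 3)))
print(y_affine.mean(axis=(0, 2, 3)), y_affine.std(axis=(0, 2, 3)))
```

Without the affine part every channel ends up with roughly zero mean and unit standard deviation; with it, each channel is shifted to its β and scaled to its γ.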

Advantages and disadvantages of using batch normalization

Let’s see some advantages of BN:

- BN accelerates the training of deep neural networks.
- For every input mini-batch we calculate different statistics. This introduces some sort of regularization. Regularization refers to any form of technique/constraint that restricts the complexity of a deep neural network during training.
- Every mini-batch has a different mini-distribution. We call the change between these mini-distributions Internal Covariate Shift. BN was thought to eliminate this phenomenon. Later, Santurkar et al. [7] showed that this is not exactly the reason why BN works.
- BN also has a beneficial effect on the gradient flow through the network. It reduces the dependence of gradients on the scale of the parameters or of their initial values. This allows us to use much higher learning rates.
- In theory, BN makes it possible to use saturating nonlinearities by preventing the network from getting stuck, but we rarely use these kinds of activation functions.

What about disadvantages?

- Inaccurate estimation of batch statistics with a small batch size, which increases the model error. In tasks such as video prediction, segmentation and 3D medical image processing the batch size is usually too small. BN needs a sufficiently large batch size.
- Problems when the batch size varies. Example cases are training vs. inference, pretraining vs. fine-tuning, and backbone architecture vs. head.
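
The small-batch issue can be illustrated with a quick simulation. This is only a sketch under assumptions: the activations of one channel are modeled as samples from a standard normal population, and `spread_of_batch_means` is a hypothetical helper that measures how much the estimated batch mean fluctuates from batch to batch.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical activations of a single channel (true mean 0, true std 1).
population = rng.normal(size=100_000)

def spread_of_batch_means(batch_size, trials=2000):
    """Standard deviation of the batch-mean estimate over many random batches."""
    batches = rng.choice(population, size=(trials, batch_size))
    return batches.mean(axis=1).std()

spread_small = spread_of_batch_means(2)
spread_large = spread_of_batch_means(64)
print("batch size 2: ", spread_small)
print("batch size 64:", spread_large)
```

The estimate from tiny batches fluctuates far more than the one from larger batches, which is the noise that hurts BN when only a few samples fit in memory.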

It is a point of discussion whether the introduced regularization reduces the need for Dropout. Recent work [7] suggests that the combination of these two methods may be superior. They also provided some insights on how and why BN works. In short, they showed that BN makes the gradients more predictive. Their work was presented at NeurIPS 2018.

Synchronized Batch Normalization (2018)

As the training scale grew, some adjustments to BN became necessary. The natural evolution of BN is Synchronized BN (Synch BN). Synchronized means that the mean and variance are not updated in each GPU separately.

Instead, in multi-worker setups, Synch BN means that the mean and standard deviation are communicated across workers (GPUs, TPUs, etc.).

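Credit for this module belongs to Zhang et al. [6]. Let’s see the computation of mean and std as the calculation of these two sums:

$$\mu = \frac{1}{M} \sum_{i=1}^{M} x_i, \qquad \sigma^2 = \frac{1}{M} \sum_{i=1}^{M} (x_i - \mu)^2 = \frac{1}{M} \sum_{i=1}^{M} x_i^2 - \left( \frac{1}{M} \sum_{i=1}^{M} x_i \right)^2$$

where $M$ is the total number of values of a channel across all devices. They first calculate $\sum_i x_i$ and $\sum_i x_i^2$ individually on each device. Then the global sums are calculated by applying the reduce parallel programming technique. The concept of reduction in parallel processing is covered in Udacity’s parallel programming course.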
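
The two-step computation (local sums per device, then a reduction) can be simulated on a single machine. In this sketch the "devices" are just a Python list of shards and the reduction is an ordinary sum; a real multi-GPU setup would use a collective all-reduce instead. The shard sizes are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical setup: 4 devices, each holding a shard of shape [N_local, C, H, W].
shards = [rng.normal(2.0, 3.0, size=(2, 3, 8, 8)) for _ in range(4)]

# Step 1: every device computes its local per-channel sums and element count.
local = [
    (s.sum(axis=(0, 2, 3)), (s ** 2).sum(axis=(0, 2, 3)), s.shape[0] * s.shape[2] * s.shape[3])
    for s in shards
]

# Step 2: reduce (sum) the local results across devices.
total_sum = sum(item[0] for item in local)
total_sq = sum(item[1] for item in local)
count = sum(item[2] for item in local)

# Global statistics from the two reduced sums.
mean = total_sum / count
var = total_sq / count - mean ** 2
print(mean, var)
```

The result matches what a single device would compute on the concatenated batch, while only two small vectors per device need to be communicated.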

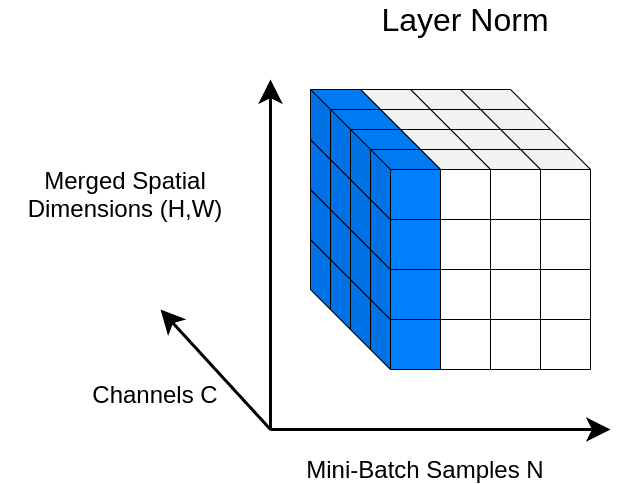

Layer normalization (2016)

In BN, the statistics are computed across the batch and the spatial dims. In contrast, in Layer Normalization (LN), the statistics (mean and variance) are computed across all channels and spatial dims. Thus, the statistics are independent of the batch. This layer was initially introduced to handle vectors (mostly the RNN outputs).

We can visually comprehend this with the following figure:

An illustration of Layer Norm.

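And to be honest, nobody talked about it until the Transformer paper came out. So when dealing with vectors with a batch size of N, you practically have 2D tensors of shape [N, K], where K is the vector dimension.

Since we don’t want to be dependent on the choice of batch and its statistics, we normalize with the mean and variance of each vector. The math:

$$\mu_n = \frac{1}{K} \sum_{k=1}^{K} x_{nk}$$

$$\sigma_n^2 = \frac{1}{K} \sum_{k=1}^{K} \left( x_{nk} - \mu_n \right)^2$$

$$\hat{x}_{nk} = \frac{x_{nk} - \mu_n}{\sqrt{\sigma_n^2 + \epsilon}}, \qquad LN_{\gamma, \beta}(x_n) = \gamma \hat{x}_n + \beta$$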

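Generalizing to 4D feature map tensors, we can take the mean across the spatial dimensions and across all channels, as illustrated below:

$$LN(x) = \gamma \left( \frac{x - \mu(x)}{\sigma(x)} \right) + \beta$$

$$\mu_n(x) = \frac{1}{CHW} \sum_{c=1}^{C} \sum_{h=1}^{H} \sum_{w=1}^{W} x_{nchw}$$

$$\sigma_n(x) = \sqrt{ \frac{1}{CHW} \sum_{c=1}^{C} \sum_{h=1}^{H} \sum_{w=1}^{W} \left( x_{nchw} - \mu_n(x) \right)^2 + \epsilon }$$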
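
A minimal NumPy sketch of layer normalization that covers both the vector case and the 4D case is shown below. The function name `layer_norm`, the `eps` value and the tensor sizes are assumptions for illustration; `gamma` and `beta` are optional and must broadcast against the normalized axes.

```python
import numpy as np

def layer_norm(x, gamma=None, beta=None, eps=1e-5):
    """Normalize every sample over all of its non-batch axes."""
    axes = tuple(range(1, x.ndim))              # (1,) for [N, K], (1, 2, 3) for [N, C, H, W]
    mu = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    x_hat = (x - mu) / np.sqrt(var + eps)
    if gamma is not None:
        x_hat = x_hat * gamma
    if beta is not None:
        x_hat = x_hat + beta
    return x_hat

rng = np.random.default_rng(0)
vectors = rng.normal(loc=3.0, size=(4, 10))     # e.g. RNN outputs, shape [N, K]
x4 = rng.normal(loc=3.0, size=(4, 3, 5, 5))     # feature maps, shape [N, C, H, W]

out_vec = layer_norm(vectors)
out4 = layer_norm(x4)
print(out_vec.mean(axis=1))
print(out4.mean(axis=(1, 2, 3)))
```

Because no statistic crosses the batch axis, normalizing a single sample on its own gives exactly the same result as normalizing it inside a larger batch.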

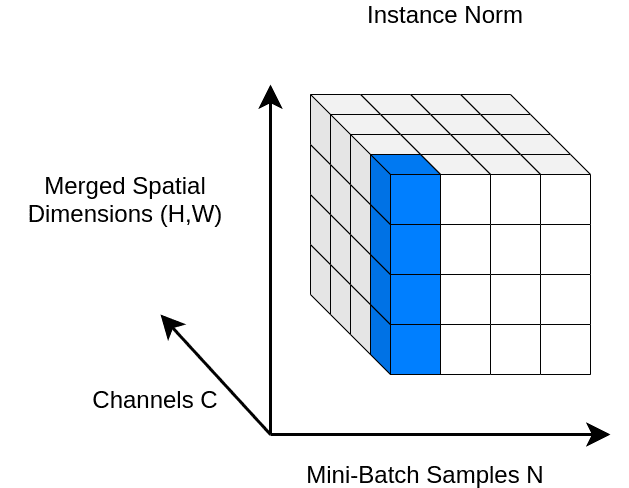

Instance Normalization: The Missing Ingredient for Fast Stylization (2016)

Instance Normalization (IN) is computed only across the features’ spatial dimensions. So it is independent for each channel and sample.

Literally, we just remove the sum over N in the previous equation compared to BN. The figure below depicts the process:

An illustration of Instance Norm.

Surprisingly, the affine parameters in IN can completely change the style of the output image. As opposed to BN, IN can normalize the style of each individual sample to a target style (modeled by γ and β). For this reason, training a model to transfer to a specific style is easier, because the rest of the network can focus its learning capacity on content manipulation and local details while discarding the original global ones (i.e. style information). For completeness, let’s check the math:

$$IN(x) = \gamma \left( \frac{x - \mu(x)}{\sigma(x)} \right) + \beta$$

$$\mu_{nc}(x) = \frac{1}{HW} \sum_{h=1}^{H} \sum_{w=1}^{W} x_{nchw}$$

$$\sigma_{nc}(x) = \sqrt{ \frac{1}{HW} \sum_{h=1}^{H} \sum_{w=1}^{W} \left( x_{nchw} - \mu_{nc}(x) \right)^2 + \epsilon }$$

By extending this idea and introducing a set that consists of multiple γ and β, one can design a network to model a plethora of finite styles, which is exactly the case of the so-called conditional IN [8].
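
The following NumPy sketch shows instance normalization together with the "set of multiple γ and β" idea. It is a rough illustration, not the implementation from [8]: the bank of four random (γ, β) pairs and the helper `conditional_instance_norm` are hypothetical, and in a real model these parameters would be learned per style.

```python
import numpy as np

def instance_norm(x, gamma, beta, eps=1e-5):
    """Normalize each (sample, channel) map over its spatial axes only."""
    mu = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 3, 8, 8))

# Hypothetical bank of affine parameters: one (gamma, beta) pair per style.
num_styles, C = 4, 3
gammas = rng.uniform(0.5, 2.0, size=(num_styles, C))
betas = rng.normal(size=(num_styles, C))

def conditional_instance_norm(x, style_id):
    """Select the affine parameters that belong to the requested style."""
    return instance_norm(x, gammas[style_id], betas[style_id])

out = conditional_instance_norm(x, style_id=2)
print(out.mean(axis=(2, 3)))
print(out.std(axis=(2, 3)))
```

After the call, every feature map carries the mean and standard deviation dictated by the selected style, regardless of the statistics it had before.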

But we are not only interested in stylization, or are we?

Weight normalization (2016)

Even though it is rarely mentioned, we will point out its principles. So, in Weight Normalization [2], instead of normalizing the activations x directly, we normalize the weights. Weight normalization reparametrizes the weights w (vector) of any layer in the neural network in the following way:

$$w = \frac{g}{\lVert v \rVert} v$$

We now have the magnitude $\lVert w \rVert = g$, independent of the parameters v. Weight normalization separates the norm of the weight vector from its direction without reducing expressiveness. Basically, the trainable weight vector is now v.
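
The separation of magnitude and direction is easy to check numerically. In this small sketch the helper name `weight_norm` and the example values of `v` and `g` are assumptions chosen for readability.

```python
import numpy as np

def weight_norm(v, g):
    """Reparametrize a weight vector: direction from v, magnitude from g."""
    return g * v / np.linalg.norm(v)

v = np.array([3.0, 4.0])   # direction parameters (norm 5)
g = 2.0                    # magnitude parameter

w = weight_norm(v, g)
print(w, np.linalg.norm(w))

# Rescaling v changes nothing: only its direction matters.
w_rescaled = weight_norm(10.0 * v, g)
print(w_rescaled)
```

The norm of `w` always equals `g`, and multiplying `v` by any positive constant leaves `w` unchanged, which is what decoupling the norm from the direction means in practice.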

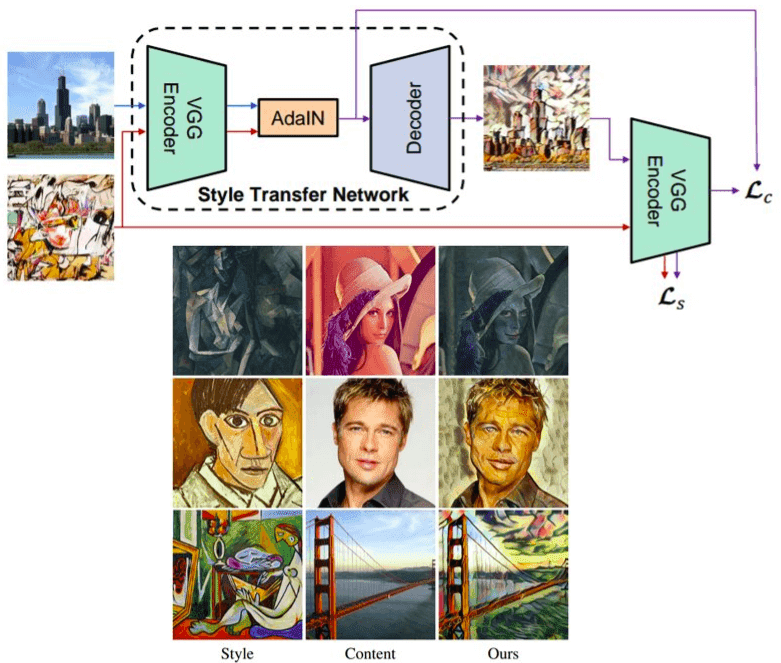

Adaptive Instance Normalization (2017)

Normalization and style transfer are closely related. Remember how we described IN. What if γ and β are injected from the feature statistics of another image y? In this way, we will be able to model any arbitrary style by just giving our desired feature image mean as β and standard deviation as γ from the style image y.

Adaptive Instance Normalization (AdaIN) receives an input image x (content) and a style input y, and simply aligns the channel-wise mean and variance of x to match those of y. Mathematically:

$$AdaIN(x, y) = \sigma(y) \left( \frac{x - \mu(x)}{\sigma(x)} \right) + \mu(y)$$

That’s all! So what can we do with just a single layer with this minor modification? Let us see!

Architecture and results using AdaIN. Borrowed from the original work

In the upper part, you see a simple encoder-decoder network architecture with an extra layer of AdaIN for style alignment. In the lower part, you see some results of this amazing idea! If you want to play around with this idea, the official code is available.
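
The AdaIN operation itself fits in a few lines. The sketch below is a NumPy approximation under assumptions: `adain`, the `eps` value and the random "content" and "style" feature maps are illustrative, and the encoder and decoder of the full architecture are not modeled.

```python
import numpy as np

def adain(x, y, eps=1e-5):
    """Align channel-wise statistics of content features x to style features y.

    x: [N, C, H, W] content features, y: [N, C, H2, W2] style features.
    """
    mu_x = x.mean(axis=(2, 3), keepdims=True)
    sigma_x = np.sqrt(x.var(axis=(2, 3), keepdims=True) + eps)
    mu_y = y.mean(axis=(2, 3), keepdims=True)
    sigma_y = np.sqrt(y.var(axis=(2, 3), keepdims=True) + eps)
    # No learned affine parameters: the style statistics play the role of gamma and beta.
    return sigma_y * (x - mu_x) / sigma_x + mu_y

rng = np.random.default_rng(0)
content = rng.normal(loc=0.0, scale=1.0, size=(1, 3, 8, 8))
style = rng.normal(loc=4.0, scale=2.0, size=(1, 3, 6, 6))

stylized = adain(content, style)
print(stylized.mean(axis=(2, 3)), style.mean(axis=(2, 3)))
```

The output keeps the spatial layout of the content features but takes over the per-channel mean and standard deviation of the style features. Note that the two inputs do not need the same spatial size.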

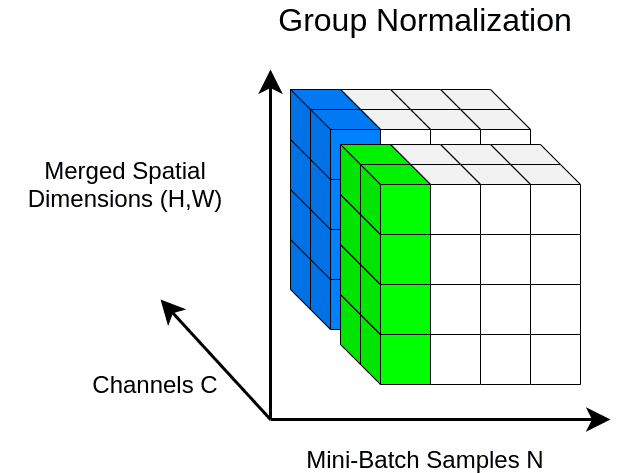

Group normalization (2018)

Group normalization (GN) divides the channels into groups and computes the first-order statistics within each group.

As a result, GN’s computation is independent of batch sizes, and its accuracy is more stable than that of BN over a wide range of batch sizes. Let’s just visualize it to make the idea crystal clear:

An illustration of group normalization

Here, I split the feature maps into 2 groups. The choice is arbitrary.

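For `groups=number of channels` we get instance normalization, while for `groups=1` the method reduces to layer normalization. The ugly math:

$$\mu_i = \frac{1}{|S_i|} \sum_{k \in S_i} x_k, \qquad \sigma_i = \sqrt{ \frac{1}{|S_i|} \sum_{k \in S_i} \left( x_k - \mu_i \right)^2 + \epsilon }$$

$$S_i = \left\{ k \;\middle|\; k_N = i_N,\ \left\lfloor \frac{k_C}{C/G} \right\rfloor = \left\lfloor \frac{i_C}{C/G} \right\rfloor \right\}$$

Here i and k index positions in the feature tensor, with $i_N$ and $i_C$ the batch and channel coordinates, and $S_i$ is the set of positions that share statistics with i.

Note that G is the number of groups, which is a hyper-parameter. C/G is the number of channels per group. Thus, GN computes µ and σ along the (H, W) axes and along a group of C/G channels.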
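
The grouping can be sketched with a single reshape in NumPy. This is a minimal version under assumptions: `group_norm` is an illustrative name, the affine parameters are omitted, and the tensor sizes are arbitrary. The two special cases mentioned in the text are computed at the end.

```python
import numpy as np

def group_norm(x, num_groups, eps=1e-5):
    """Group normalization for x of shape [N, C, H, W], without affine parameters."""
    N, C, H, W = x.shape
    assert C % num_groups == 0, "C must be divisible by the number of groups"
    # Split the channel axis into [G, C/G] and normalize over (C/G, H, W).
    xg = x.reshape(N, num_groups, C // num_groups, H, W)
    mu = xg.mean(axis=(2, 3, 4), keepdims=True)
    var = xg.var(axis=(2, 3, 4), keepdims=True)
    xg = (xg - mu) / np.sqrt(var + eps)
    return xg.reshape(N, C, H, W)

rng = np.random.default_rng(0)
x = rng.normal(loc=1.0, scale=2.0, size=(2, 6, 4, 4))

two_groups = group_norm(x, num_groups=2)    # the setting used in the figure
as_instance = group_norm(x, num_groups=6)   # groups = number of channels
as_layer = group_norm(x, num_groups=1)      # a single group
print(two_groups.shape)
```

With six groups for six channels every channel is normalized on its own, as in IN; with one group the statistics span all channels and spatial positions of a sample, as in LN.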

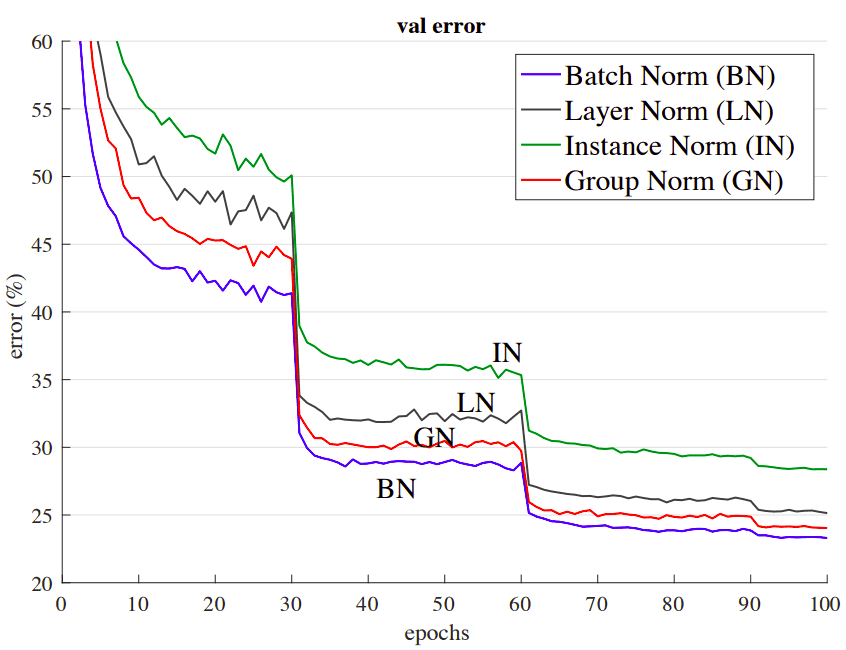

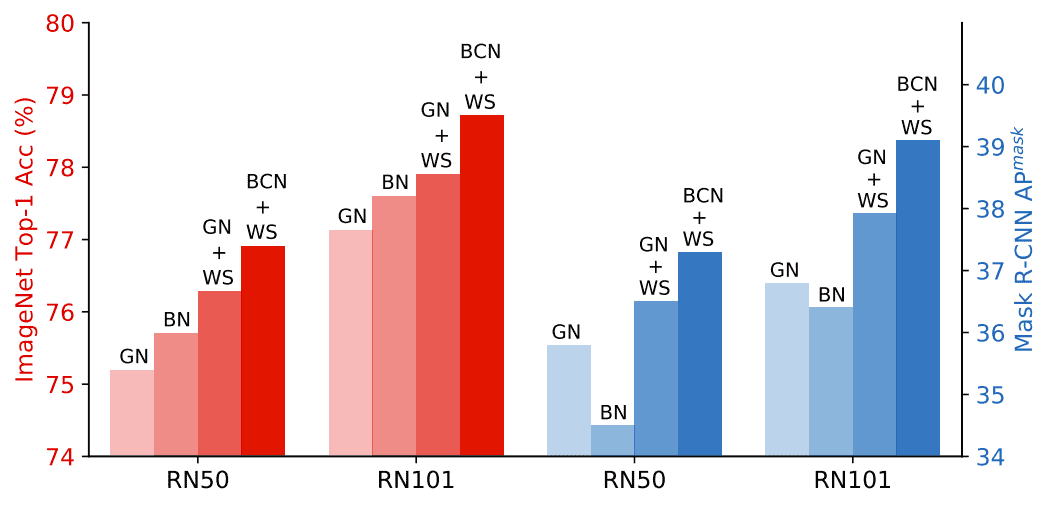

Finally, let’s see a plot of how these methods perform in the same architecture:

Comparison using a batch size of 32 images per GPU on ImageNet. Validation error vs. the number of training epochs is shown. The model is ResNet-50. Source: Group Normalization

The official oral paper presentation is also available from Facebook AI Research at ECCV 2018.

Weight Standardization (2019)

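Weight Standardization [12] is a natural evolution of Weight Normalization that we briefly discussed. Different from standard methods that focus on activations, WS considers the smoothing effects of weights. The ugly math:

$$\hat{W}_{i,j} = \frac{W_{i,j} - \mu_{W_{i,\cdot}}}{\sigma_{W_{i,\cdot}}}, \qquad y = \hat{W} * x$$

$$\mu_{W_{i,\cdot}} = \frac{1}{I} \sum_{j=1}^{I} W_{i,j}, \qquad \sigma_{W_{i,\cdot}} = \sqrt{ \frac{1}{I} \sum_{j=1}^{I} \left( W_{i,j} - \mu_{W_{i,\cdot}} \right)^2 + \epsilon }$$

Here i indexes the output channel, j runs over the I weights that feed it (input channels times kernel size), and * denotes convolution.

All this math is a fancy way to say that we are calculating the mean and std for each output channel individually. Here is a nice way to see through the math: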

An illustration of weight standardization

In essence, WS controls the first-order statistics of the weights of each output channel individually. In this way, WS aims to normalize gradients during back-propagation.
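
Standardizing the weights of each output channel can be sketched as follows. The function name `standardize_weights`, the `eps` value and the random kernel are assumptions for illustration; in a real layer the standardized kernel would then be used in the convolution in place of the raw one.

```python
import numpy as np

def standardize_weights(W, eps=1e-5):
    """Standardize a conv kernel of shape [C_out, C_in, kH, kW] per output channel."""
    mu = W.mean(axis=(1, 2, 3), keepdims=True)
    var = W.var(axis=(1, 2, 3), keepdims=True)
    return (W - mu) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
# Hypothetical 3x3 kernel with 8 output and 4 input channels.
W = rng.normal(loc=0.05, scale=0.1, size=(8, 4, 3, 3))

W_hat = standardize_weights(W)
print(W_hat.mean(axis=(1, 2, 3)))
print(W_hat.std(axis=(1, 2, 3)))
```

Each of the eight filters ends up with approximately zero mean and unit standard deviation, independently of the other filters.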

It is theoretically and experimentally validated that WS smooths the loss landscape by standardizing the weights in convolutional layers.

Theoretically, WS reduces the Lipschitz constants of the loss and the gradients. The core idea is to keep the convolutional weights in a compact space. Hence, WS smooths the loss landscape and improves training.

Remember that we saw a similar result in Wasserstein GANs. For the record, the authors combined WS with Group Normalization (GN) and achieved remarkable results. Some results from the official paper:

Comparing normalization methods on ImageNet and COCO. GN+WS outperforms both BN and GN by a large margin. Source: Weight standardization paper

Later on, GN + WS were applied with great success in transfer learning for visual tasks. For more info, check Kolesnikov et al. [11]. In the accompanying animation, batch norm is highlighted to show that by replacing the BN layers they can achieve better generalization.

SPADE (2019)

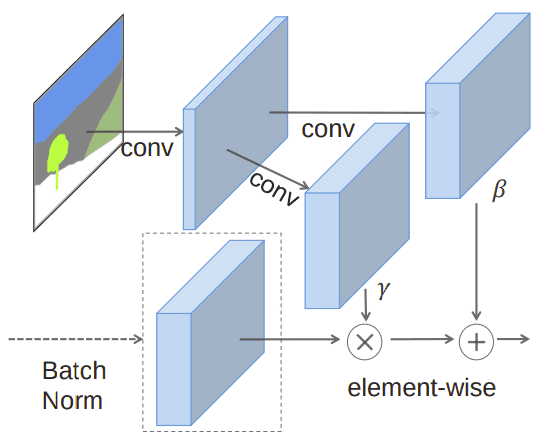

We saw how we can inject a style image into the normalization module. Let’s see what we can do with segmentation maps to enforce consistency in image synthesis. Let’s expand the idea of AdaIN a bit more. Again, we have 2 images: the segmentation map and a reference image.

How can we further constrain our model to account for the segmentation map?

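Similar to BN, we will first normalize with the channel-wise mean and standard deviation. But we will not rescale all the values in the channel with a scalar γ and β. Instead, we will make them tensors, which will be computed with convolutions based on the segmentation mask. The math:

$$\mu_c(x) = \frac{1}{NHW} \sum_{n=1}^{N} \sum_{h=1}^{H} \sum_{w=1}^{W} x_{nchw}$$

$$\sigma_c(x) = \sqrt{ \frac{1}{NHW} \sum_{n=1}^{N} \sum_{h=1}^{H} \sum_{w=1}^{W} \left( x_{nchw} - \mu_c(x) \right)^2 + \epsilon }$$

$$\hat{x}_{nchw} = \frac{x_{nchw} - \mu_c(x)}{\sigma_c(x)}$$

This step is similar to batch norm. $\hat{x}$ in the last equation is the normalized value. However, since we don’t want to lose the grid structure, we will not rescale all the values equally. Unlike existing normalization approaches, the new γ and β will be 3D tensors (not vectors!). Precisely, given a two-layer convolutional network with two outputs (usually called heads in the literature), applied to the segmentation mask m, we have:

$$\gamma(m) = \mathrm{Conv}_{\gamma}\big(\mathrm{ReLU}(\mathrm{Conv}_{s}(m))\big), \qquad \beta(m) = \mathrm{Conv}_{\beta}\big(\mathrm{ReLU}(\mathrm{Conv}_{s}(m))\big)$$

$$SPADE(x, m)_{nchw} = \gamma_{chw}(m) \, \hat{x}_{nchw} + \beta_{chw}(m)$$

where the subscripts s, γ and β only label the shared convolution and the two heads.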

We can visually illustrate this module like this:

The SPADE layer. The image is taken from the GauGAN paper from NVIDIA.
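
A heavily simplified NumPy sketch of the spatially-adaptive modulation is given below. Several things here are assumptions made to keep it short: the convolutions are 1x1 (written with `einsum`) rather than the larger kernels a real implementation would use, the weights are random instead of learned, and the names `conv1x1`, `spade` and `params` are hypothetical.

```python
import numpy as np

def conv1x1(x, weight, bias):
    """1x1 convolution: x [N, C_in, H, W], weight [C_out, C_in], bias [C_out]."""
    return np.einsum("oc,nchw->nohw", weight, x) + bias.reshape(1, -1, 1, 1)

def spade(x, mask, params, eps=1e-5):
    # Parameter-free normalization with per-channel batch statistics, as in BN.
    mu = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mu) / np.sqrt(var + eps)
    # Shared layer on the mask, followed by two heads predicting gamma and beta per pixel.
    hidden = np.maximum(conv1x1(mask, *params["shared"]), 0.0)
    gamma = conv1x1(hidden, *params["gamma"])
    beta = conv1x1(hidden, *params["beta"])
    return gamma * x_hat + beta, gamma, beta

rng = np.random.default_rng(0)
N, C, H, W = 2, 4, 8, 8
num_classes, hidden_dim = 3, 16

x = rng.normal(size=(N, C, H, W))
labels = rng.integers(0, num_classes, size=(N, H, W))        # segmentation labels
mask = np.eye(num_classes)[labels].transpose(0, 3, 1, 2)     # one-hot, [N, classes, H, W]

params = {
    "shared": (rng.normal(size=(hidden_dim, num_classes)), np.zeros(hidden_dim)),
    "gamma": (rng.normal(size=(C, hidden_dim)), np.ones(C)),
    "beta": (rng.normal(size=(C, hidden_dim)), np.zeros(C)),
}

out, gamma, beta = spade(x, mask, params)
print(out.shape, gamma.shape, beta.shape)
```

The modulation tensors have one value per channel and pixel, so regions with different labels are scaled and shifted differently. With 1x1 convolutions every pixel of the same class gets identical γ and β; larger kernels would additionally mix in the neighboring labels.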

To see how this approach was applied to semantic image synthesis with GANs, check our previous article.

Conclusion

Finally, let’s inspect all the methods side by side and try to analyze how they work.

An overview of the presented normalization methods

We presented the most famous in-layer normalization methods for training very deep models. If you liked our article, you can share it on your social media page to reach a broader audience.

Cited as:

@article{adaloglou2020normalization,
    title   = "In-layer normalization techniques for training very deep neural networks",
    author  = "Adaloglou, Nikolas",
    journal = "https://theaisummer.com/",
    year    = "2020",
    url     = "https://theaisummer.com/normalization/"
}

References

1. Ioffe, S., & Szegedy, C. (2015). Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167.
2. Salimans, T., & Kingma, D. P. (2016). Weight normalization: A simple reparameterization to accelerate training of deep neural networks. In Advances in Neural Information Processing Systems (pp. 901-909).
3. Ba, J. L., Kiros, J. R., & Hinton, G. E. (2016). Layer normalization. arXiv preprint arXiv:1607.06450.
4. Ulyanov, D., Vedaldi, A., & Lempitsky, V. (2016). Instance normalization: The missing ingredient for fast stylization. arXiv preprint arXiv:1607.08022.
5. Wu, Y., & He, K. (2018). Group normalization. In Proceedings of the European Conference on Computer Vision (ECCV) (pp. 3-19).
6. Zhang, H., Dana, K., Shi, J., Zhang, Z., Wang, X., Tyagi, A., & Agrawal, A. (2018). Context encoding for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 7151-7160).
7. Santurkar, S., Tsipras, D., Ilyas, A., & Madry, A. (2018). How does batch normalization help optimization? In Advances in Neural Information Processing Systems (pp. 2483-2493).
8. Dumoulin, V., Shlens, J., & Kudlur, M. (2016). A learned representation for artistic style. arXiv preprint arXiv:1610.07629.
9. Park, T., Liu, M. Y., Wang, T. C., & Zhu, J. Y. (2019). Semantic image synthesis with spatially-adaptive normalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 2337-2346).
10. Huang, X., & Belongie, S. (2017). Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision (pp. 1501-1510).
11. Kolesnikov, A., Beyer, L., Zhai, X., Puigcerver, J., Yung, J., Gelly, S., & Houlsby, N. (2019). Big transfer (BiT): General visual representation learning. arXiv preprint arXiv:1912.11370.
12. Qiao, S., Wang, H., Liu, C., Shen, W., & Yuille, A. (2019). Weight standardization. arXiv preprint arXiv:1903.10520.
