In Part 1 of the Deep Learning in Production course, we defined the goal of this article series, which is to convert a Python deep learning notebook into production-ready code that can be used to serve millions of users. Toward that end, we continue our series with a set of best practices for programming a deep learning model. These practices mostly concern how we can write organized, modularized, and extensible Python code.

You can imagine that most of them aren't exclusive to machine learning applications; they can be applied to all kinds of Python projects. But here we will see how to apply them in deep learning using a hands-on approach (so brace yourselves for some programming).

One last thing before we start, and something that I'll probably repeat a lot in this course: machine learning code is ordinary software and should always be treated as such. Therefore, like any ordinary code, it has a project structure, documentation, and design principles such as object-oriented programming.

Also, I'll assume that you have already set up your laptop and environment as we mentioned in Part 1 (if you haven't, feel free to do that now and come back).

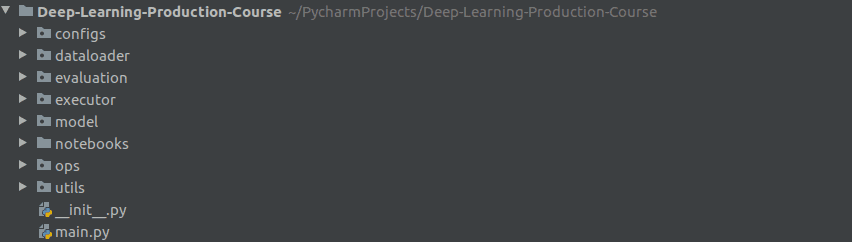

Project Structure

One very important aspect of writing code is how you structure your project. A good structure should obey the "Separation of concerns" principle, in the sense that each functionality should be a distinct component. This way, it can be easily modified and extended without breaking other parts of the code. Moreover, it can be reused in many places without the need to write duplicate code.

Tip: Writing the same code once is perfect, twice is kinda fine, but three times is not.

The way I like to organize most of my deep learning projects is something like this:

And that, of course, is my personal preference. Feel free to play around with this until you find what suits you best.

Python modules and packages

Notice that in the project structure I presented, each folder is a separate package that can be imported in other modules just by doing "import package". Here, we should make a quick distinction between what Python calls a module and what it calls a package. A module is simply a file containing Python code. A package, however, is like a directory that holds sub-packages and modules. For a directory to be treated as a regular package, it should contain an `__init__.py` file (even if it's empty); since Python 3.3, directories without one can still be imported as namespace packages, but an explicit `__init__.py` keeps the behavior predictable. Modules need no such file. Thus, in our case each folder is a package and contains an `__init__.py` file.
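To make the module/package distinction concrete, here is a small sketch that builds a throwaway copy of the layout in a temporary directory, giving each folder an empty `__init__.py`, and then imports a module from one of the packages. The folder names come from the list of packages below; the `config.py` module and its `CFG` contents are hypothetical placeholders, not files from the actual repo.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Package names taken from the project layout described in this article
PACKAGES = ["configs", "dataloader", "evaluation", "executor",
            "model", "notebooks", "ops", "utils"]


def scaffold_project(root: Path) -> None:
    """Creates one package per folder, each with an empty __init__.py."""
    for name in PACKAGES:
        package_dir = root / name
        package_dir.mkdir(parents=True, exist_ok=True)
        (package_dir / "__init__.py").touch()  # marks the folder as a regular package
    # A module is just a .py file; this hypothetical one lives inside the configs package
    (root / "configs" / "config.py").write_text('CFG = {"train": {"batch_size": 64}}\n')
    (root / "main.py").write_text("")  # empty entry point that would use the executor package


with tempfile.TemporaryDirectory() as tmp:
    project_root = Path(tmp)
    scaffold_project(project_root)
    sys.path.insert(0, str(project_root))  # make the project root importable
    importlib.invalidate_caches()  # files were created after the interpreter started
    try:
        config_module = importlib.import_module("configs.config")
        batch_size = config_module.CFG["train"]["batch_size"]
        # Packages expose a __path__ attribute; plain modules do not
        is_package = hasattr(importlib.import_module("configs"), "__path__")
    finally:
        sys.path.remove(str(project_root))

print(batch_size, is_package)  # 64 True
```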

In our example, we have 8 different packages:

- configs: in configs we define every single thing that can be configured and may be changed in the future. Good examples are training hyperparameters, folder paths, the model architecture, metrics, and flags.

A very simple config looks something like this:

```python
CFG = {
    "data": {
        "path": "oxford_iiit_pet:3.*.*",
        "image_size": 128,
        "load_with_info": True
    },
    "train": {
        "batch_size": 64,
        "buffer_size": 1000,
        "epoches": 20,
        "val_subsplits": 5,
        "optimizer": {
            "type": "adam"
        },
        "metrics": ["accuracy"]
    },
    "model": {
        "input": [128, 128, 3],
        "up_stack": {
            "layer_1": 512,
            "layer_2": 256,
            "layer_3": 128,
            "layer_4": 64,
            "kernels": 3
        },
        "output": 3
    }
}
```

- dataloader: this one is quite self-explanatory. All the data loading and data preprocessing classes and functions live here.

- evaluation: a collection of code that aims to evaluate the performance and accuracy of our model.

- executor: in this folder, we usually have all the functions and scripts that train the model or use it to predict something in different environments. And by different environments I mean executors for GPUs, executors for distributed systems, and so on. This package is our connection to the outer world and it's what our "main.py" will use.

- model: contains the actual deep learning code (we're talking TensorFlow, PyTorch, etc.).

- notebooks: includes all of our Jupyter/Colab notebooks in one place.

- ops: this one is not always needed, as it includes operations not related to machine learning, such as algebraic transformations, image manipulation techniques, or maybe graph operations.

- utils: utility functions that are used in more than one place, plus everything that doesn't fall into any of the above categories.

Now that we have our project well structured and all, we can begin to see how our code should look on a lower level.

Object-oriented programming in Python

The answer to that question is classes and everything that comes with them. Admittedly, Object-Oriented Programming (OOP) might not be the first thing that comes to mind when writing Python code (it definitely is when coding in Java), but you will be surprised by how easy it is to develop software when thinking in objects.

Tip: A good rule of thumb is to try to write Python the same way you would write Java (yup, I know that's not your usual advice, but you'll understand what I mean in time).

If we rewrite our UNet model in an object-oriented way, the result would be something like this:

```python
import tensorflow as tf


class UNet():
    def __init__(self, config):
        # Store the config first: everything below reads from it.
        # Config is the class introduced in the "Static and class methods" section.
        self.config = Config.from_json(config)
        self.base_model = tf.keras.applications.MobileNetV2(input_shape=self.config.model.input, include_top=False)
        self.batch_size = self.config.train.batch_size
        ...

    def load_data(self):
        """Loads and preprocesses data"""
        self.dataset, self.info = DataLoader().load_data(self.config.data)
        self._preprocess_data()

    def _preprocess_data(self):
        ...

    def _set_training_parameters(self):
        ...

    def _normalize(self, input_image, input_mask):
        ...

    def _load_image_train(self, datapoint):
        ...

    def _load_image_test(self, datapoint):
        ...

    def build(self):
        """Builds the Keras model"""
        # MobileNetV2 activations used as the encoder's skip connections
        layer_names = [
            'block_1_expand_relu',
            'block_3_expand_relu',
            'block_6_expand_relu',
            'block_13_expand_relu',
            'block_16_project',
        ]
        layers = [self.base_model.get_layer(name).output for name in layer_names]
        ...
        self.model = tf.keras.Model(inputs=inputs, outputs=x)

    def train(self):
        ...

    def evaluate(self):
        ...
```

Remember the code in the Colab notebook. Now look at this again. Do you start to understand what these practices accomplish?

Basically, you can see that the model is a class, each separate functionality is encapsulated within a method, and all the common variables are declared as instance variables.

As you can easily tell, it becomes much easier to modify the training functionality of our model, to change the layers, or to flip the default of a flag. On the other hand, when writing spaghetti code (programming slang for chaotic code), it's much more difficult to find out what does what, whether a change affects other parts of the code, and how to debug it.

As a result, we get maintainability, extensibility, and simplicity pretty much for free (always remember our principles).

Abstraction and Inheritance

However, using classes gives us much more than that. Abstraction and inheritance are two of the most important topics in OOP.

Using abstraction, we can declare the desired functionalities without dealing with how they will be implemented. This way, we can first think about the logic behind our code and then dive into programming every single part of it. The same pattern can easily be followed in deep learning code. To better understand what I'm saying, take a look at the code below:

```python
from abc import ABC, abstractmethod


class BaseModel(ABC):
    """Abstract Model class that is inherited by all models"""

    def __init__(self, cfg):
        # Config is the class introduced in the "Static and class methods" section
        self.config = Config.from_json(cfg)

    @abstractmethod
    def load_data(self):
        pass

    @abstractmethod
    def build(self):
        pass

    @abstractmethod
    def train(self):
        pass

    @abstractmethod
    def evaluate(self):
        pass
```

One can easily observe that the functions have no body. They're just declarations. Following the same logic, you can think of every functionality you're gonna need, declare it as an abstract function or class, and you're done. It's like having a contract of what the code should look like. That way you can first decide on the high-level implementation and then tackle each part in detail.

Consequently, that contract can now be used by other classes that "extend" our abstract class. This is called inheritance. The base class is inherited by the "child" class and immediately defines the structure of the child class. So, the new class is obligated to implement all the abstract functions as well. Of course, it can also have many other functions not declared in the abstract class.
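A quick way to see the "contract" in action is to try to instantiate classes that don't honor it. The sketch below uses a stripped-down, TensorFlow-free stand-in for `BaseModel`; the names `ToyBaseModel`, `IncompleteModel`, and `CompleteModel` are hypothetical. Python refuses to create an instance of any class that still has unimplemented abstract methods.

```python
from abc import ABC, abstractmethod


class ToyBaseModel(ABC):
    """Stripped-down stand-in for BaseModel: declares the contract only."""

    @abstractmethod
    def load_data(self):
        pass

    @abstractmethod
    def build(self):
        pass


class IncompleteModel(ToyBaseModel):
    # Implements load_data but "forgets" build
    def load_data(self):
        return [1, 2, 3]


class CompleteModel(ToyBaseModel):
    def load_data(self):
        return [1, 2, 3]

    def build(self):
        return "model built"


def can_instantiate(cls) -> bool:
    """Returns True if cls() succeeds, False if the ABC machinery blocks it."""
    try:
        cls()
        return True
    except TypeError:  # raised for classes with unimplemented abstract methods
        return False


print(can_instantiate(ToyBaseModel))     # False
print(can_instantiate(IncompleteModel))  # False
print(can_instantiate(CompleteModel))    # True
```

The error surfaces the moment someone tries to create the incomplete model, not halfway through a long training run.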

Look below at how we pass the BaseModel class in parentheses in the UNet class definition. That's all we need. Also, in our case, we need to call the init function of the parent class, which we accomplish with "super()". "super" is a built-in Python function that gives us access to the parent class, so "super().__init__(config)" calls the constructor (the function that initializes the object, aka the init) of the parent class. The rest of the code is normal deep learning code.

The main way to get abstraction in Python is the built-in abc module (Abstract Base Classes), which provides the ABC class and the @abstractmethod decorator used above.

```python
import tensorflow as tf


class UNet(BaseModel):
    def __init__(self, config):
        super().__init__(config)  # BaseModel parses the config into self.config
        self.base_model = tf.keras.applications.MobileNetV2(input_shape=self.config.model.input, include_top=False)
        ...

    def load_data(self):
        self.dataset, self.info = DataLoader().load_data(self.config.data)
        self._preprocess_data()
        ...

    def build(self):
        ...
        self.model = tf.keras.Model(inputs=inputs, outputs=x)

    def train(self):
        self.model.compile(optimizer=self.config.train.optimizer.type,
                           loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
                           metrics=self.config.train.metrics)

        model_history = self.model.fit(self.train_dataset, epochs=self.epoches,
                                       steps_per_epoch=self.steps_per_epoch,
                                       validation_steps=self.validation_steps,
                                       validation_data=self.test_dataset)

        return model_history.history['loss'], model_history.history['val_loss']

    def evaluate(self):
        predictions = []
        # self.dataset holds the raw tfds splits; iterate over the preprocessed,
        # batched test split, which yields (image, mask) pairs
        for image, mask in self.test_dataset.take(1):
            predictions.append(self.model.predict(image))

        return predictions
```

Moreover, you can imagine that the base class can be inherited by many children, and any of them can then be used through the same interface (that's called polymorphism). That way you can have many models with the same base model as a parent but with different logic. On another practical note, if a new developer joins your team, they can easily find out what their model should look like just by inheriting our abstract class.
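Here is a minimal, framework-free sketch of that idea. Two hypothetical toy models share the same abstract parent (`AbstractModel`, a simplified stand-in for `BaseModel`), and a single hypothetical `run_pipeline` helper, playing the role an executor would, drives either of them through the common interface without knowing which concrete model it received.

```python
from abc import ABC, abstractmethod
from typing import List


class AbstractModel(ABC):
    """Abstract parent: every model must provide these steps."""

    @abstractmethod
    def load_data(self) -> None: ...

    @abstractmethod
    def train(self) -> float: ...


class MeanModel(AbstractModel):
    """Toy model that 'learns' the mean of its data."""

    def load_data(self) -> None:
        self.data: List[float] = [1.0, 2.0, 3.0]

    def train(self) -> float:
        return sum(self.data) / len(self.data)


class MaxModel(AbstractModel):
    """Toy model with different logic: it 'learns' the maximum."""

    def load_data(self) -> None:
        self.data = [1.0, 2.0, 3.0]

    def train(self) -> float:
        return max(self.data)


def run_pipeline(model: AbstractModel) -> float:
    """Works with any AbstractModel child: it only relies on the shared interface."""
    model.load_data()
    return model.train()


for model in (MeanModel(), MaxModel()):
    print(type(model).__name__, run_pipeline(model))
# MeanModel 2.0
# MaxModel 3.0
```

Adding a third model means writing one new class; `run_pipeline` does not change.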

Static and class methods

Static and class methods are two more cool patterns that can simplify our code and make it less error-prone. In OOP we have classes and instances. The class is like the blueprint for creating objects, and objects are the instances of a class.

Class methods receive the class itself as their first argument (conventionally named cls), and they are often used as alternative constructors for creating new instances of the class.

Static methods receive neither the class nor an instance as an implicit first argument, so they cannot modify the class or instance state. Static methods are mostly used for utility functions that aren't gonna change.

Let's see how they can be used in our code:

Below we see how we can construct a config from a JSON-like dictionary (such as the CFG above). The classmethod "from_json" returns an actual instance of the Config class when we simply call "Config.from_json(CFG)". In other words, it's a constructor of our class. By doing this, we can have multiple constructors for the same class (we might, for example, want to create a config from a YAML file).

```python
import json


class Config:
    """Config class which contains data, train and model hyperparameters"""

    def __init__(self, data, train, model):
        self.data = data
        self.train = train
        self.model = model

    @classmethod
    def from_json(cls, cfg):
        """Creates config from json"""
        # Round-trip through JSON so that every nested dict becomes a
        # HelperObject (defined elsewhere in the project) with attribute access
        params = json.loads(json.dumps(cfg), object_hook=HelperObject)
        return cls(params.data, params.train, params.model)
```
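`HelperObject` is not shown in the article. One plausible minimal version, which is an assumption rather than the repo's actual code, simply copies the decoded dictionary into the object's attributes. Because `json.loads` calls `object_hook` on every decoded JSON object, innermost first, nested sections such as `train.optimizer` become attribute-accessible too, while lists stay plain lists:

```python
import json


class HelperObject:
    """Hypothetical helper: exposes the keys of a dict as attributes."""

    def __init__(self, dict_):
        self.__dict__.update(dict_)


class Config:
    """Config class which contains data, train and model hyperparameters"""

    def __init__(self, data, train, model):
        self.data = data
        self.train = train
        self.model = model

    @classmethod
    def from_json(cls, cfg):
        """Creates config from json"""
        params = json.loads(json.dumps(cfg), object_hook=HelperObject)
        return cls(params.data, params.train, params.model)


# A trimmed-down version of the CFG dictionary shown earlier
CFG = {
    "data": {"path": "oxford_iiit_pet:3.*.*", "image_size": 128},
    "train": {"batch_size": 64, "optimizer": {"type": "adam"}, "metrics": ["accuracy"]},
    "model": {"input": [128, 128, 3], "output": 3},
}

config = Config.from_json(CFG)
print(config.train.optimizer.type)  # adam
print(config.model.input)           # [128, 128, 3]
```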

Static methods, on the other hand, can be called on the class itself, without needing an instance of it. A perfect example in our case is the DataLoader class, where we simply want to load the data from a given path. Is there a reason to have a new DataLoader instance? Not really, because everything in this functionality is stable and nothing will ever change. When state does change, it's better to use an instance and instance methods.

```python
import tensorflow_datasets as tfds


class DataLoader:
    """Data Loader class"""

    @staticmethod
    def load_data(data_config):
        """Loads dataset from path"""
        return tfds.load(data_config.path, with_info=data_config.load_with_info)
```
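To see the two decorators side by side, here is a small, hypothetical sketch unrelated to TensorFlow (`ToyConfig`, `from_pairs`, `is_valid_key`, and `ExperimentConfig` are illustrative names only). The class method receives `cls`, so calling it on a subclass produces a subclass instance; the static method receives neither `cls` nor `self` and can be called directly on the class, with no instance needed.

```python
class ToyConfig:
    def __init__(self, params: dict):
        self.params = params

    @classmethod
    def from_pairs(cls, pairs):
        """Alternative constructor: builds an instance from (key, value) pairs."""
        return cls(dict(pairs))  # cls is whichever class the method was called on

    @staticmethod
    def is_valid_key(key: str) -> bool:
        """Stateless utility: touches neither the class nor an instance."""
        return key.isidentifier()


class ExperimentConfig(ToyConfig):
    """Hypothetical subclass; inherits from_pairs unchanged."""


cfg = ExperimentConfig.from_pairs([("batch_size", 64)])
print(type(cfg).__name__, cfg.params)     # ExperimentConfig {'batch_size': 64}
print(ToyConfig.is_valid_key("batch_size"))  # True (called on the class, no instance)
print(ToyConfig.is_valid_key("batch-size"))  # False
```

Because `from_pairs` builds the object through `cls` rather than a hard-coded class name, subclasses get a working constructor for free.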

Type Checking

Another cool and useful feature (borrowed from Java) is type checking. Type checking is the process of verifying and enforcing type constraints. And by types we mean whether a variable is a string, an integer, or an object. To be more precise, in Python we have type hints. Python does not enforce type hints, because it's a dynamically typed language. But we will see how to get around that. Type checking is essential because it can catch bugs and errors very early and can help you write better code overall.

It is very common when coding in Python to have moments where you wonder whether a variable is a string or an integer. You find yourself tracking it throughout the code, trying to figure out what type it is. And it's much harder to do when that code uses TensorFlow or PyTorch.

A very simple way to add type hints is this:

```python
def ai_summer_func(x: int) -> int:
    return x + 5
```

As you can see, we declare that both x and the result should be integers.

Note that this will not throw an error or an exception. It's just a hint. IDEs like PyCharm will automatically detect mismatches and show a warning. That way you can easily spot bugs and fix them as you're building your code.
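A quick way to convince yourself that hints are not enforced at runtime: the call below passes a float to `ai_summer_func`, and Python runs it happily. The hints are still stored on the function, which is what IDEs and static checkers read. The `checked_ai_summer_func` variant is a hypothetical sketch of adding an explicit runtime check by hand, if you really need one.

```python
from typing import get_type_hints


def ai_summer_func(x: int) -> int:
    return x + 5


# Hints are metadata only: a float goes through without any error
result = ai_summer_func(2.5)
print(result)  # 7.5

# The annotations are stored on the function for tools to inspect
print(get_type_hints(ai_summer_func))


def checked_ai_summer_func(x: int) -> int:
    """Same function with an explicit runtime check added by hand."""
    if not isinstance(x, int):
        raise TypeError(f"x must be an int, got {type(x).__name__}")
    return x + 5
```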

If you want to catch these kinds of errors, you can use a static type checker like Pytype. After installing it and adding type hints to your code, you can run something like the command below and it will show you all the type errors in your code. Pytype is a Google library and is used in many official TensorFlow codebases. For example:

```shell
$ pytype main.py
```

One important thing I want to mention here is that checking types in TensorFlow code is not easy. I don't want to get into too many details, but you can't simply declare the type of x as "tf.Tensor" and expect the checks to work. Type checking is great for simpler functions and basic data types, but when it comes to TensorFlow code things can get hard. Pytype can infer some types from your code, and it can resolve types such as Tensor or Module, but it doesn't always work as expected.

For completeness, linting tools such as Pylint can be great for finding type errors from your IDE. Also check this out: it's a code style checker specifically designed for TensorFlow code.

Documentation

Documenting our code is the single most important thing on this list, and the thing that most of us are guilty of not doing. Writing simple comments in our code can make the lives of our teammates, and of our future selves, much, much easier. It's even more important when we write deep learning code, because of the complex nature of our software. In the same sense, it's equally important to give proper, descriptive names to our classes, functions, and variables. Take a look at this:

```python
import tensorflow as tf


def n(self, ii, im):
    ii = tf.cast(ii, tf.float32) / 255.0
    im -= 1
    return ii, im
```

I'm 100% sure that you have no idea what it does.

Now look at this:

```python
import tensorflow as tf


def _normalize(self, input_image, input_mask):
    """Normalizes the input image

    Args:
        input_image (tf.Tensor): The input image
        input_mask (tf.Tensor): The image mask

    Returns:
        input_image (tf.Tensor): The normalized input image
        input_mask (tf.Tensor): The new image mask
    """
    input_image = tf.cast(input_image, tf.float32) / 255.0
    input_mask -= 1
    return input_image, input_mask
```

Could it BE any more descriptive? (in Chandler's voice)

The comments you can see above are called docstrings, and they are Python's way of documenting the responsibility of a piece of code. What I like to do is include a docstring at the beginning of a file/module, indicating the purpose of the file, under every class declaration, and inside every function.

There are many ways to format docstrings. I personally prefer the Google style, which looks like the code above.

The first line always states what the code does, and it's the only truly essential part (I personally try to always include it in my code). The other parts explain what arguments the function accepts (with types and descriptions) and what it returns. These can sometimes be omitted if they don't add much value.
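Docstrings are more than comments: Python keeps them on the object, so `help()`, IDEs, and documentation generators can read them. Below is a small, framework-free function documented in the Google style described above (`scale_to_unit` is a hypothetical pure-Python cousin of `_normalize`), followed by reading its summary line back with `inspect.getdoc`.

```python
import inspect
from typing import List


def scale_to_unit(pixels: List[int]) -> List[float]:
    """Scales 8-bit pixel values to the [0, 1] range.

    Args:
        pixels (List[int]): Pixel intensities between 0 and 255.

    Returns:
        List[float]: The same pixels divided by 255.
    """
    return [p / 255.0 for p in pixels]


print(scale_to_unit([0, 255]))  # [0.0, 1.0]
# The first line of the docstring is the one-sentence summary
print(inspect.getdoc(scale_to_unit).splitlines()[0])
```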

Combine them all together

All the aforementioned best practices can be combined in the same project, resulting in highly extensible and easily maintainable code. Check out our repo on GitHub to see the final result. The code provided in the repo is fully functional and can be used as a template for your own projects.

In this article, we explored some best practices that you can follow when programming deep learning applications. We saw how to structure our project, how object-oriented programming can make our code highly maintainable and extensible, and how to document your code in order to avoid future headaches.

But of course that's not all we can do. In the next article, we will dive deep into unit testing. We will see how to write unit tests for TensorFlow code, how to use mock objects to simplify your test cases, and why test coverage is an essential part of every piece of software that lives online. Finally, we will discuss some examples of which parts of your machine learning code are worth testing and which tests you absolutely need to write.

I'm sure you don't want to miss that. So stay tuned. Or even better, subscribe to our newsletter and sleep well at night, knowing that the article will be delivered to your inbox as soon as it's out.

Have a nice day. Or night.


* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.