Hello hello hello and welcome to the Deep Learning in Production course.

In this article series, our goal is dead simple. We're gonna start with a Colab notebook containing prototype deep learning code (i.e. a research project) and we're gonna deploy and scale it to serve millions or billions (ok, maybe I'm overexcited) of users.

We will incrementally explore the following concepts and ideas:

- how to structure and develop production-ready machine learning code,
- how to optimize the model's performance and memory requirements, and
- how to make it available to the public by setting up a small server on the cloud.

But that's not all of it. Afterwards, we need to scale our server to be able to handle the traffic as the userbase grows and grows.

So be prepared for some serious software engineering from the scope of machine learning. Actually, now that I'm thinking about it, a more suitable title for this series would be "Deep Learning and Software Engineering".

To clarify why Software Engineering is an undeniably essential part of deep learning, take Google Assistant as an example. Behind Google Assistant there is, without a doubt, an ingenious machine learning algorithm (probably a combination of BERT, LSTMs and God knows what else). But do you think that this amazing research alone is capable of answering the queries of millions of users at the same time? Absolutely not. There are dozens of software engineers behind the scenes who maintain, optimize, and build the system. This is exactly what we are about to discover.

One last thing before we begin. "Deep Learning in Production" isn't going to be one of those high-level, abstract articles that talk too much without practical value. Here we are going to go really deep into software, we'll analyze details that may seem too low level, we'll write a lot of code and we'll present the full deep learning software development cycle from start to finish. From a notebook to serving millions of users.

From research to production

But enough with the pitching (I hope you are convinced by now). Let's cut to the chase with an overview of the upcoming articles. Note that each bullet is not necessarily a separate article (it could be 2 or 3). I'm just outlining all the subjects we will touch on.

- Set up your laptop and environment.
- Best practices to structure your Python code and develop the model.
- Optimize the performance of the code in terms of latency, memory, etc.
- Train the model in the cloud.
- Build an API to serve the model.
- Containerize and deploy the model in the cloud.
- Scale it to handle loads of traffic.

Some of the technologies, frameworks and tools we will use along the way (in random order):

Python, Pycharm, Anaconda, Tensorflow, Git and Github, Flask, WSGI, Docker, Kubernetes, Google Cloud and probably much more.

Along the way, I will also give you some extra tips to better utilize the tools, improve your productivity and enhance your workflow.

Let's get started with some context first.

As the basis of our project, we will use an official Tensorflow tutorial in which the authors use a slightly modified U-Net to perform image segmentation. You can find the colab notebook in the official docs here or in our Github repo here.

I won't go into much detail, but basically semantic segmentation is the task of assigning a label to every pixel of an image based on its context. This is super useful because it lets computers understand what they see. And I'm confident you can think of many applications where it would be useful. Some of them include autonomous cars, robotics and medical imaging.
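To make "assigning a label to every pixel" concrete, here is a minimal pure-Python sketch. It assumes that a segmentation model outputs one score per class for every pixel. For each pixel, it picks the class with the highest score, which is an argmax over the class axis. The 2x2 "image", its three classes and all the scores are made-up toy values, not real model outputs.

```python
from typing import List

# scores[h][w][c]: the score of class c at pixel (h, w)
Scores = List[List[List[float]]]


def scores_to_mask(scores: Scores) -> List[List[int]]:
    """Assign each pixel the index of its highest-scoring class (argmax over classes)."""
    mask = []
    for row in scores:
        mask_row = []
        for pixel_scores in row:
            best_class = max(range(len(pixel_scores)), key=lambda c: pixel_scores[c])
            mask_row.append(best_class)
        mask.append(mask_row)
    return mask


# A toy 2x2 image with 3 hypothetical classes
toy_scores = [
    [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1]],
    [[0.2, 0.2, 0.6], [0.7, 0.2, 0.1]],
]
print(scores_to_mask(toy_scores))  # [[0, 1], [2, 0]]
```

The result is a "mask" with the same height and width as the input, where every entry is a class label rather than a color.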

A famous model that is used in image segmentation is the UNet. UNets are symmetric convolutional neural networks that consist of an encoder and a decoder. But instead of being a linearly shaped model, every layer of the encoder connects with a layer of the decoder via skip connections. The result is a U-shaped network, hence the name.

But since UNets are not our focus here, I'll point you to another article of ours in case you need more information. Check it out.

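```python
import tensorflow as tf
# pix2pix comes from the tensorflow_examples package used by the tutorial
from tensorflow_examples.models.pix2pix import pix2pix

# Encoder: a pretrained MobileNetV2 without its classification head
base_model = tf.keras.applications.MobileNetV2(input_shape=[128, 128, 3], include_top=False)

# The activations of these layers are used as skip connections
layer_names = [
    'block_1_expand_relu',   # 64x64
    'block_3_expand_relu',   # 32x32
    'block_6_expand_relu',   # 16x16
    'block_13_expand_relu',  # 8x8
    'block_16_project',      # 4x4
]
layers = [base_model.get_layer(name).output for name in layer_names]

# Feature extraction model (the "down" path of the U), kept frozen
down_stack = tf.keras.Model(inputs=base_model.input, outputs=layers)
down_stack.trainable = False

# Decoder: upsampling blocks (the "up" path of the U)
up_stack = [
    pix2pix.upsample(512, 3),  # 4x4 -> 8x8
    pix2pix.upsample(256, 3),  # 8x8 -> 16x16
    pix2pix.upsample(128, 3),  # 16x16 -> 32x32
    pix2pix.upsample(64, 3),   # 32x32 -> 64x64
]


def unet_model(output_channels):
    inputs = tf.keras.layers.Input(shape=[128, 128, 3])
    x = inputs

    # Downsampling through the model
    skips = down_stack(x)
    x = skips[-1]
    skips = reversed(skips[:-1])

    # Upsampling and establishing the skip connections
    for up, skip in zip(up_stack, skips):
        x = up(x)
        concat = tf.keras.layers.Concatenate()
        x = concat([x, skip])

    # Last layer of the model: 64x64 -> 128x128, one channel per class
    last = tf.keras.layers.Conv2DTranspose(
        output_channels, 3, strides=2,
        padding='same')

    x = last(x)

    return tf.keras.Model(inputs=inputs, outputs=x)
```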
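To see how the encoder and decoder line up, here is a small pure-Python sketch that tracks only the spatial resolution through the model above. It makes the following assumptions:

- The input is 128x128.
- The five feature maps in `layer_names` each halve the resolution of the previous one (64x64 down to 4x4).
- Each `pix2pix.upsample` block doubles the resolution.
- The final stride-2 `Conv2DTranspose` doubles it once more.

Channel counts are deliberately ignored. `unet_resolutions` is a hypothetical helper for illustration only.

```python
from typing import List, Tuple


def unet_resolutions(input_size: int = 128, num_skips: int = 5) -> List[Tuple[str, int]]:
    """Trace the spatial size (height == width) through the U-Net above.

    Assumptions: encoder outputs halve the resolution one after another,
    each upsample block doubles it, and the last stride-2 Conv2DTranspose
    doubles it once more.
    """
    skips = [input_size // 2 ** (i + 1) for i in range(num_skips)]  # 64, 32, 16, 8, 4
    x = skips[-1]  # bottleneck: the smallest feature map
    trace = [("bottleneck", x)]
    for skip in reversed(skips[:-1]):
        x *= 2  # one pix2pix.upsample block
        # Concatenate only works when both tensors share the same height and width
        if x != skip:
            raise ValueError(f"cannot concatenate {x}x{x} with {skip}x{skip}")
        trace.append(("upsample + concat", x))
    x *= 2  # final Conv2DTranspose with strides=2
    trace.append(("output", x))
    return trace


for step, size in unet_resolutions():
    print(f"{step:<18} {size}x{size}")
```

The trace ends at 128x128, the same size as the input, which is what a per-pixel prediction requires.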

If you are interested in more details about the UNet model and the architecture, you should check the official Tensorflow tutorial. It's really amazing. I think it's better not to go into details about the machine learning aspect, because here we are focusing on the programming and software part of Deep Learning.

As you will see, the official notebook also contains some data loading functionality, basic utility functions, the training process and code to predict the segmentation on the test data. But we will dive into them in time.

It's time. You spent hours reading research papers, experimenting on different data and testing the accuracy of different models, but you got it. After training your model locally, you've seen some pretty awesome results and you're convinced that your model is ready to go. What's next?

The next step is to take your experimentation code and migrate it into an actual Python project with proper structure, unit tests, static code analysis, parallel processing, etc.

But before that, we will need to set up our laptop. You've probably already done that, but for consistency's sake I'll describe the process to make sure we're on the same page and to familiarize ourselves with the tools I'm going to use in this course.

Setting up the environment for Deep Learning

I'm going to use Ubuntu 20.04 as my operating system and the standard Linux terminal. I highly recommend installing zsh instead of bash. The default Linux shell on Ubuntu is bash and you will be totally fine with it, but zsh is an extended shell that comes with some cool features such as line completion, spelling correction and command history.

You can see how to install it in the link (note that instead of using Homebrew as you would on a Mac, you can use apt-get, the standard package manager on Ubuntu and other Debian-based distributions). You can also take advantage of its amazing plugins such as zsh-syntax-highlighting and zsh-autosuggestions. Zsh will definitely come in handy when you want to switch Python virtual environments and when you're working with git and different branches. Below you can see how I set up my terminal (which is pretty minimal).

After setting up the terminal, it's time to download some basic software. Git is the first one on our list.

Git and Github

Git is an open source version control system. It is essential if you work with other developers on the same code, since it lets you track who changed what, revert the code to a previous state and let multiple people change the same file. Git is used by the majority of developers nowadays and is probably the single most important tool on the list.

You can install and configure git using the following commands:

```shell
sudo apt-get install git
git config --global user.name "AI Summer"
git config --global user.email "sergios@theaisummer.com"
```

You should also use Github to save your code and collaborate with other engineers. Github is a great tool to review code, to track what you need to do and to make sure that you won't lose your code no matter what. To use it, you will need to set up an SSH key so that you can push code from the terminal. Personally, I use both git and Github in pretty much all my projects (even when I'm the only developer).

```shell
ssh-keygen -t rsa -C "your_name@github.com"
cat ~/.ssh/id_rsa.pub
```

Then go to your GitHub account in the browser -> Settings -> SSH and GPG keys and add the public key printed by the previous command.

Finally, run the command below to make sure everything went well.

```shell
ssh -T git@github.com
```

I also recommend Github Desktop to interact with GitHub through a GUI instead of the command line, which can sometimes be quite tedious.

Tip: Github Desktop doesn't have an official Linux version, but this awesome repo works perfectly fine and it's what I personally use.

Next on our list is Anaconda.

Anaconda and Miniconda

Virtual environments let us isolate our deep learning environment from the rest of our system and test different library releases. A virtual environment isolates a Python installation together with all the libraries a project needs. That way you can have, for example, both Python 2.7 and 3.6 on the same machine. A good rule of thumb is to have one environment per project.

One of the most popular Python distributions out there is Anaconda. It is widely used by data scientists and machine learning engineers, since it comes prebaked with lots of popular ML libraries and tools and it natively supports virtual environments. It also comes with a package manager (called conda) and a GUI, so you can manage libraries from there instead of the command line. And it's what we will use. I will install the Miniconda version, which includes only the necessary stuff, as I don't want my computer to be cluttered with unnecessary libraries, but feel free to get the full version.

```shell
wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh
bash Miniconda3-latest-Linux-x86_64.sh
```

At this point we would normally create a conda environment and install some basic Python libraries. But we won't, because in the next step Pycharm will create one for us for free.

Pycharm

This is where many developers become very opinionated about which IDE/editor is the best. In my experience, it doesn't really matter which one you use, as long as it increases your productivity and helps you write and debug code faster. You can choose between VsCode, Atom, Emacs, Vim, Pycharm and more. In my case, Pycharm feels more natural since it offers some out-of-the-box functionality, such as integration with git and conda, unit testing and plugins like Cloud Code and Bash support.

You can download the free Community Edition from here and create a new project.

Tip: When you create a project, Pycharm will ask you to select your Python interpreter. In this step you can choose to create a new conda environment, and the IDE will find your conda installation and create one for you without you doing anything else. It will also activate it automatically when you start the integrated terminal. Throughout the course, I'm going to use Python 3.7.7, so if you want to follow along, it is better if we have the same version (any 3.7.x should work).
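Before going further, you might want to confirm that the interpreter you are running matches the course setup. Here is a small sanity-check sketch. It assumes the Python 3.7.x target stated above and relies on the fact that `conda activate` sets the `CONDA_DEFAULT_ENV` environment variable to the active environment's name. The function name `check_environment` is hypothetical.

```python
import os
import sys


def check_environment(expected=(3, 7), version_info=None, env=None):
    """Return a list of human-readable warnings about the current interpreter.

    expected: the (major, minor) Python version the course uses (3.7.x).
    version_info and env default to the running interpreter and os.environ;
    they are parameters only so that the function is easy to test.
    """
    version_info = tuple(version_info or sys.version_info)[:3]
    env = os.environ if env is None else env
    warnings = []
    if version_info[:2] != tuple(expected):
        found = ".".join(str(part) for part in version_info)
        warnings.append(f"Expected Python {expected[0]}.{expected[1]}.x, found {found}")
    # `conda activate` sets CONDA_DEFAULT_ENV to the active environment's name
    if not env.get("CONDA_DEFAULT_ENV"):
        warnings.append("No active conda environment detected")
    return warnings


if __name__ == "__main__":
    problems = check_environment()
    print("\n".join(problems) if problems else "Environment looks good")
```

Run it from Pycharm's integrated terminal. An empty list of warnings means that both the version and the environment look right.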

Once you have created your project and your conda environment, you'll probably want to install some Python libraries. There are two ways to do that:

- Open the terminal and run "conda install library_name" inside your env.
- Go to File -> Settings -> Project -> Project Interpreter and install from there using Pycharm.

Another cool feature of Pycharm is its integrated version control (VCS) functionality. It will detect the git configuration of your project (if it exists) and give you the ability to commit, pull, push and even open a PR (pull request) from the VCS menu tab, in case you don't want to use either the terminal or Github Desktop.

Other tools

Here are some other tools you might want to use, especially if you're part of a team. I'm not suggesting them because of an affiliate relationship or anything; I use all of them in my daily life/work.

- Slack is a great tool to communicate with your team, and it comes with a plethora of integrations such as Github and Google Calendar.
- Zenhub is a project management tool, especially designed for Github. Since it's not free, a good alternative is the built-in Projects functionality inside Github.
- Zoom for video calls and meetings.

Now we're all set. Our laptop is rock solid, our environment is ready for some Deep Learning (sort of) and our tools are installed and properly configured.

My favorite time is here: Time to write some code!

Naah, we still have a few things to talk about.

One parenthesis here: Do you know what distinguishes a good engineer from a great engineer? (and that's, of course, my personal opinion)

Their ability to plan and design the system before even touching the keyboard. Carefully thinking about the architecture, making informed decisions, discussing it with other engineers, and documenting things can save a lot of time down the line. A whole lot of time. Trust me on this. I'm guilty of skipping this more times than I'm proud to admit. That's why this time we'll do it the right way. So it turns out this won't be such a short parenthesis!

System Design for Deep Learning

One very important piece of advice I have learned over years of designing software is to always ask why. Keep in mind that machine learning is ordinary software and not some magic algorithm. It's plain simple software and should be treated as such, especially when you think in terms of production-level software.

So, why use Python? Why do we need a server? Why run on the cloud and not locally? Why do we need GPUs? Why write unit tests?

I will try to answer all these questions throughout the course, and I hope this will help you understand why some best practices are in fact best and why you should try to adopt them.

High-level architecture

Let's start with a high-level overview of what we want to do.

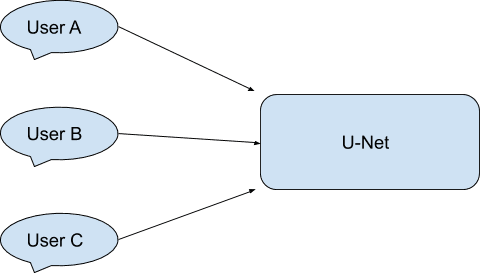

Let's assume that we have a website and, for some reason, we want to add an image segmentation feature to one of its pages. The user will navigate to that page and click on a button to upload an image. We will then perform the segmentation and display the result back to them.

Taken from the tensorflow tutorial

In terms of software, the flow is something like this:

1. The user uploads an image through the browser.
2. The browser sends the image to our backend.
3. The UNet predicts the segmented image.
4. We send it back to the user's browser, where it is rendered.

After looking at the above system, many questions come to mind:

- What will happen if many users query the model at the same time?
- What will happen if for some reason the model crashes?
- What if it contains a bug we haven't seen before?
- What if the model is too slow and the user has to wait too long to get a response?
- And how can we update our model?
- Can we retrain it on new data?
- Can we get some feedback on whether the model performs well?
- Can we see how fast or slow the model is?
- How much memory does it use?
- Where do we store the model weights?
- What about the training data?
- What if the user sends a malicious request instead of a proper image?
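The last question, malicious requests, is one we can start answering with a cheap check before anything reaches the model. Here is a minimal sketch. It assumes that the backend receives the raw bytes of the upload and accepts only PNG or JPEG files. It rejects oversized uploads and anything whose leading "magic bytes" don't match one of those formats. The 5 MB limit and the name `validate_upload` are hypothetical. A real service would still decode the image with a proper library and check its dimensions.

```python
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
JPEG_SIGNATURE = b"\xff\xd8\xff"
MAX_UPLOAD_BYTES = 5 * 1024 * 1024  # hypothetical 5 MB limit


def validate_upload(data: bytes) -> str:
    """Return the detected image type, or raise ValueError for suspicious input."""
    if not data:
        raise ValueError("empty upload")
    if len(data) > MAX_UPLOAD_BYTES:
        raise ValueError(f"upload too large: {len(data)} bytes")
    if data.startswith(PNG_SIGNATURE):
        return "png"
    if data.startswith(JPEG_SIGNATURE):
        return "jpeg"
    raise ValueError("not a PNG or JPEG image")


print(validate_upload(PNG_SIGNATURE + b"rest of the file"))  # png
try:
    validate_upload(b"<script>alert(1)</script>")
except ValueError as err:
    print("rejected:", err)  # rejected: not a PNG or JPEG image
```

Rejecting bad input at the edge is cheap, and it keeps the expensive model from ever seeing requests it cannot handle.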

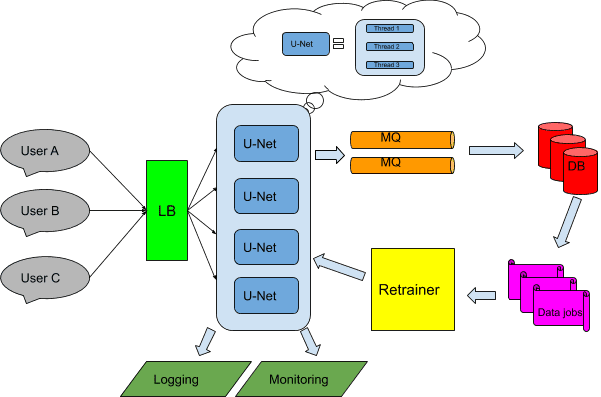

I can go on ceaselessly however I’m positive you get my level. Most of those questions will likely be answered within the following articles. That’s why in most real-life instances, the ultimate structure will likely be one thing like this:

Ok, we won't go that far. That's a whole startup up there. But we will cover many points, focusing on optimizing the software at first and touching on some of these components in our later articles.

Software design principles

But to develop our software, we need to keep in mind a rough idea of our end goal and what we want to achieve. Every system should be built based on some basic principles:

- Separation of concerns: The system should be modularized into different components, with each component being a separate maintainable, reusable and extensible entity.
- Scalability: The system needs to be able to scale as the traffic increases.
- Reliability: The system should continue to be functional even if there is a software or hardware failure.
- Availability: The system needs to continue operating at all times.
- Simplicity: The system should be as simple and intuitive as possible.

Given the aforementioned principles, let's discuss the above image:

Each user sends a request to our backend. To be able to handle all the simultaneous requests, we first need a Load Balancer (LB) to handle the traffic and route each request to an appropriate server instance.
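Load balancers can use several routing strategies. One of the simplest is round robin, where requests are handed to the instances in turn. The sketch below is a toy, in-process illustration of that idea only. The instance names and `make_instance` are hypothetical stand-ins. In practice, this job is done by a dedicated component (for example, a managed cloud load balancer) rather than by application code.

```python
import itertools
from typing import Callable, Dict


class RoundRobinBalancer:
    """Toy load balancer: send each request to the next instance in a fixed cycle."""

    def __init__(self, instances: Dict[str, Callable[[bytes], str]]):
        if not instances:
            raise ValueError("need at least one instance")
        self._instances = instances
        self._order = itertools.cycle(list(instances))

    def route(self, request: bytes) -> str:
        name = next(self._order)
        return self._instances[name](request)


def make_instance(name: str) -> Callable[[bytes], str]:
    # Stand-in for a model server; a real one would run the UNet on the image
    return lambda request: f"{name} handled {len(request)} bytes"


balancer = RoundRobinBalancer({n: make_instance(n) for n in ["unet-0", "unet-1", "unet-2"]})
for _ in range(4):
    print(balancer.route(b"image"))
```

The fourth request wraps around to `unet-0`. Round robin ignores how busy each instance is, so real balancers often offer load-aware strategies too.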

We maintain more than one instance of the model in order to achieve both scalability and availability. Note that these might be physical machines, virtual machines or Docker containers, and they are managed by a distributed system orchestrator (such as Kubernetes).

As you can probably see, each instance may have more than one thread or parallel process. We need that to make sure that the model is fast enough to return a response in real time.
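Inside a single instance, a pool of workers lets several requests be processed concurrently instead of one after another. Here is a minimal sketch using Python's `concurrent.futures.ThreadPoolExecutor`. `fake_predict` is a hypothetical stand-in for inference: it only sleeps to mimic latency. Threads help mainly when the work waits on I/O or runs in native code that releases the GIL. Pure-Python CPU-bound work would need processes instead.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_predict(image_id: int) -> str:
    """Hypothetical stand-in for model inference: sleeps to simulate latency."""
    time.sleep(0.1)  # sleeping releases the GIL, much like waiting on I/O
    return f"mask-{image_id}"


def serve(requests, workers: int):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map returns results in the same order as the input requests
        return list(pool.map(fake_predict, requests))


for workers in (1, 4):
    start = time.perf_counter()
    results = serve(range(8), workers)
    print(f"{workers} worker(s): {time.perf_counter() - start:.2f}s -> {results[:2]}")
```

With one worker, the eight simulated requests run back to back. With four workers, they overlap, so the total wall-clock time should drop noticeably.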

A major problem most machine learning systems face is retraining: for the model to stay up to date, you have to constantly update the model weights based on new data. In our case, this pipeline consists of:

- A database (DB) to save the requests, the responses and all relevant data
- A message queue (MQ) to send them to the database asynchronously (to keep the system reliable and available)
- Data jobs to preprocess and transform the data into the correct format so that it can be used by our model
- Retrainer instances that execute the actual training based on the saved data
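The point of the message queue is that the request handler only enqueues a record and returns immediately, while a separate consumer writes to the database at its own pace. Here is a single-process sketch of that pattern. The standard library's `queue.Queue` stands in for a real message broker, and an in-memory SQLite database stands in for the DB. The table schema and the fake prediction are hypothetical.

```python
import queue
import sqlite3
import threading

STOP = object()  # sentinel telling the consumer to shut down


def consumer(q: queue.Queue, conn: sqlite3.Connection) -> None:
    """Drain the queue and persist each record; runs in its own thread."""
    while True:
        item = q.get()
        if item is STOP:
            break
        conn.execute("INSERT INTO requests (image_id, mask) VALUES (?, ?)", item)
        conn.commit()


def handle_request(q: queue.Queue, image_id: str) -> str:
    """Request handler: predict, enqueue the record for storage, respond at once."""
    mask = f"mask-for-{image_id}"  # stand-in for the UNet prediction
    q.put((image_id, mask))        # returns immediately for an unbounded queue
    return mask


# check_same_thread=False lets the consumer thread use a connection created here;
# the main thread reads only after the consumer has been joined.
conn = sqlite3.connect(":memory:", check_same_thread=False)
conn.execute("CREATE TABLE requests (image_id TEXT, mask TEXT)")

q = queue.Queue()
worker = threading.Thread(target=consumer, args=(q, conn))
worker.start()

for image_id in ["cat.png", "dog.png"]:
    print(handle_request(q, image_id))

q.put(STOP)
worker.join()
print(conn.execute("SELECT COUNT(*) FROM requests").fetchone()[0])  # 2
```

If the database is slow or briefly down, user responses are not delayed: records simply wait in the queue. A real broker would also keep them safe across crashes, which this in-process queue does not.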

After retraining, the new version of the model will gradually replace all the UNet instances. That way we build versioning functionality for our deep learning models, where we always update the model to the latest version.
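Gradually replacing instances is commonly called a rolling update: instances are swapped a few at a time, so the rest keep serving traffic throughout. Orchestrators such as Kubernetes can do this for you. The toy sketch below only illustrates the idea. The version labels and `rolling_update` are hypothetical, and the sketch refuses any step that would leave no instance serving.

```python
from typing import Iterator, List


def rolling_update(instances: List[str], new_version: str,
                   max_unavailable: int = 1) -> Iterator[List[str]]:
    """Yield the fleet state after each step of a rolling update.

    instances: the current model version of each instance, e.g. ["v1", "v1", "v1"].
    At most `max_unavailable` instances are being replaced at any time.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    fleet = list(instances)
    for start in range(0, len(fleet), max_unavailable):
        batch = range(start, min(start + max_unavailable, len(fleet)))
        # Instances outside the batch keep serving while the batch is replaced
        if len(fleet) - len(batch) == 0:
            raise RuntimeError("this step would take every instance offline")
        for i in batch:
            fleet[i] = new_version
        yield list(fleet)


for state in rolling_update(["v1", "v1", "v1"], "v2"):
    print(state)
# ['v2', 'v1', 'v1']
# ['v2', 'v2', 'v1']
# ['v2', 'v2', 'v2']
```

Raising `max_unavailable` makes the rollout faster at the cost of less spare capacity during each step.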

Finally, we need some kind of monitoring and logging to have a complete picture of what's happening in our system, to catch errors quickly and to discover bottlenecks easily.

I'm pretty sure some of you feel I introduced a lot of new terms in this section. Don't worry. You aren't gonna need all of these until the later articles. But now we have a pretty clear picture of what we want to achieve and why we are going to need all the things I'm about to discuss in this course.

They will all address the principles I mentioned before.

As side material, I strongly suggest the TensorFlow: Advanced Techniques Specialization course by deeplearning.ai hosted on Coursera, which will give you a foundational understanding of Tensorflow.

Get ready for Software Engineering

In this article, I gave you two main tasks to finish while waiting for the next one:

- setting up your laptop and installing the tools that we will need throughout the course, and
- understanding the whys of a modern deep learning system.

The first one is a low-effort, sort of boring task that will increase your productivity and save you some time down the road, so we can focus solely on the software. The second requires some mental effort, because deep learning architectures are rather complex systems. But the main thing you need to remember is the 5 principles, as they will accompany us down the road.

We are all set up and ready to begin our journey towards deploying our UNet model and serving it to our users. As I said in the beginning, brace yourself to go deep into programming deep learning apps, to dive into details that you probably never thought of and, most of all, to enjoy the process.

If you are into this and you want to discover the software side of Deep Learning, join the AI Summer community to receive our next article right in your inbox.

See you then…


* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.