Visitor submit by José Hernández-Orallo, Professor at Technical College of Valencia

20 years in the past I began engaged on metrics of machine intelligence. By that point, throughout the glacial days of the second AI winter, few have been actually curious about measuring one thing that AI lacked fully. And only a few, reminiscent of David L. Dowe and I, have been curious about metrics of intelligence linked to algorithmic data idea, the place the fashions of interplay between an agent and the world have been sequences of bits, and intelligence was formulated utilizing Solomonoff’s and Wallace’s theories of inductive inference.

Within the meantime, seemingly dozens of variants of the Turing check have been proposed yearly, the CAPTCHAs have been launched and David confirmed how simple it’s to resolve some IQ assessments utilizing a quite simple program based mostly on a big-switch strategy. And, right this moment, a brand new AI spring has arrived, triggered by a blossoming machine studying subject, bringing a extra experimental strategy to AI with an rising variety of AI benchmarks and competitions (see a earlier entry on this weblog for a survey).

Contemplating this 20-year perspective, final yr was particular in some ways. The primary in a collection of workshops on evaluating general-purpose AI took off, echoing the rising curiosity within the evaluation of synthetic normal intelligence (AGI) programs, able to find various options for a spread of duties. Evaluating these programs is totally different, and more difficult, than the standard task-oriented analysis of particular programs, reminiscent of a robotic cleaner, a credit score scoring mannequin, a machine translator or a self-driving automotive. The thought of evaluating general-purpose AI programs utilizing videogames had caught on. The arcade studying setting (the Atari 2600 video games) or the extra versatile Video Sport Definition Language and related competitors grew to become more and more well-liked for the analysis of AGI and its current breakthroughs.

Final yr additionally witnessed the introduction of a distinct type of AI analysis platforms, reminiscent of Microsoft’s Malmö, GoodAI’s College, OpenAI’s Fitness center and Universe, DeepMind’s Lab, Fb’s TorchCraft and CommAI-env. Primarily based on a reinforcement studying (RL) setting, these platforms make it doable to create many various duties and join RL brokers by means of a normal interface. Many of those platforms are effectively fitted to the brand new paradigms in AI, reminiscent of deep reinforcement studying and a few open-source machine studying libraries. After 1000’s of episodes or tens of millions of steps towards a brand new job, these programs are in a position to excel, with often higher than human efficiency.

Regardless of the myriads of purposes and breakthroughs which were derived from this paradigm, there appears to be a consensus within the subject that the principle open drawback lies in how an AI agent can reuse the representations and expertise from one job to new ones, making it doable to study a brand new job a lot sooner, with a number of examples, as people do. This may be seen as a mapping drawback (often below the time period switch studying) or may be seen as a sequential drawback (often below the phrases gradual, cumulative, incremental, continuous or curriculum studying).

One of many key notions that’s related to this functionality of a system of constructing new ideas and expertise over earlier ones is often known as “compositionality”, which is effectively documented in people from early childhood. Programs are in a position to mix the representations, ideas or expertise which were discovered beforehand to be able to remedy a brand new drawback. As an illustration, an agent can mix the power of climbing up a ladder with its use as a doable manner out of a room, or an agent can study multiplication after studying addition.

For my part, two of the earlier platforms are higher fitted to compositionality: Malmö and CommAI-env. Malmö has all of the elements of a 3D recreation, and AI researchers can experiment and consider brokers with imaginative and prescient and 3D navigation, which is what many analysis papers utilizing Malmö have carried out to this point, as this can be a sizzling matter in AI in the intervening time. Nonetheless, to me, essentially the most fascinating characteristic of Malmö is constructing and crafting, the place brokers should essentially mix earlier ideas and expertise to be able to create extra complicated issues.

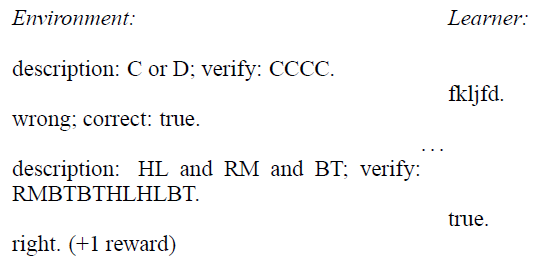

CommAI-env is clearly an outlier on this set of platforms. It isn’t a online game in 2D or 3D. Video or audio don’t have any function there. Interplay is simply produced by means of a stream of enter/output bits and rewards, that are simply +1, 0 or -1. Principally, actions and observations are binary. The rationale behind CommAI-env is to present prominence to communication expertise, but it surely nonetheless permits for wealthy interplay, patterns and duties, whereas “maintaining all additional complexities to a minimal”.

Once I was conscious that the Common AI Problem was utilizing CommAI-env for his or her warm-up spherical I used to be ecstatic. Contributors may concentrate on RL brokers with out the complexities of imaginative and prescient and navigation. After all, imaginative and prescient and navigation are essential for AI purposes, however they create many further problems if we need to perceive (and consider) gradual studying. As an illustration, two equal duties for which the feel of the partitions modifications may be seen as requiring increased switch effort than two barely totally different duties with the identical texture. In different phrases, this is able to be further confounding elements that may make the evaluation of job switch and job dependencies a lot more durable. It’s then a clever option to exclude this from the warm-up spherical. There will likely be events throughout different rounds of the problem for together with imaginative and prescient, navigation and different kinds of complicated embodiment. Beginning with a minimal interface to guage whether or not the brokers are in a position to study incrementally shouldn’t be solely a difficult however an necessary open drawback for normal AI.

Additionally, the warm-up spherical has modified CommAI-env in such a manner that bits are packed into 8-bit (1 byte) characters. This makes the definition of duties extra intuitive and makes the ASCII coding clear to the brokers. Principally, the set of actions and observations is prolonged to 256. However apparently, the set of observations and actions is similar, which permits many potentialities which are uncommon in reinforcement studying, the place these subsets are totally different. As an illustration, an agent with primitives reminiscent of “copy enter to output” and different sequence transformation operators can compose them to be able to remedy the duty. Variables, and different kinds of abstractions, play a key function.

This would possibly give the impression that we’re again to Turing machines and symbolic AI. In a manner, that is the case, and far in alignment to Turing’s imaginative and prescient in his 1950 paper: “it’s doable to show a machine by punishments and rewards to obey orders given in some language, e.g., a symbolic language”. However in 2017 we now have a spread of strategies that weren’t out there just some years in the past. As an illustration, Neural Turing Machines and different neural networks with symbolic reminiscence may be very effectively fitted to this drawback.

Not at all does this point out that the legion of deep reinforcement studying fanatics can not convey their equipment to this warm-up spherical. Certainly they gained’t be dissatisfied by this problem in the event that they actually work onerous to adapt deep studying to this drawback. They gained’t in all probability want a convolutional community tuned for visible sample recognition, however there are a lot of potentialities and challenges in tips on how to make deep studying work in a setting like this, particularly as a result of the less examples, the higher, and deep studying often requires many examples.

As a plus, the straightforward, symbolic sequential interface opens the problem to many different areas in AI, not solely recurrent neural networks however strategies from pure language processing, evolutionary computation, compression-inspired algorithms and even areas reminiscent of inductive programming, with highly effective string-handling primitives and its appropriateness for issues with only a few examples.

I believe that all the above makes this warm-up spherical a singular competitors. After all, since we haven’t had something related up to now, we’d have some surprises. It would occur that an sudden (and even naïve) method may behave significantly better than others (and people) or maybe we discover that no method is ready to do one thing significant at this time.

I’m desirous to see how this spherical develops and what the individuals are in a position to combine and invent to be able to remedy the sequence of micro and mini-tasks. I’m positive that we’ll study lots from this. I hope that machines will, too. And all of us will transfer ahead to the following spherical!

José Hernández-Orallo is a professor at Technical College of Valencia and creator of “The Measure of All Minds, Evaluating Pure and Synthetic Intelligence”, Cambridge College Press, 2017.

Again to the core of intelligence … to essentially transfer to the long run was initially printed in AI Roadmap Institute Weblog on Medium, the place individuals are persevering with the dialog by highlighting and responding to this story.