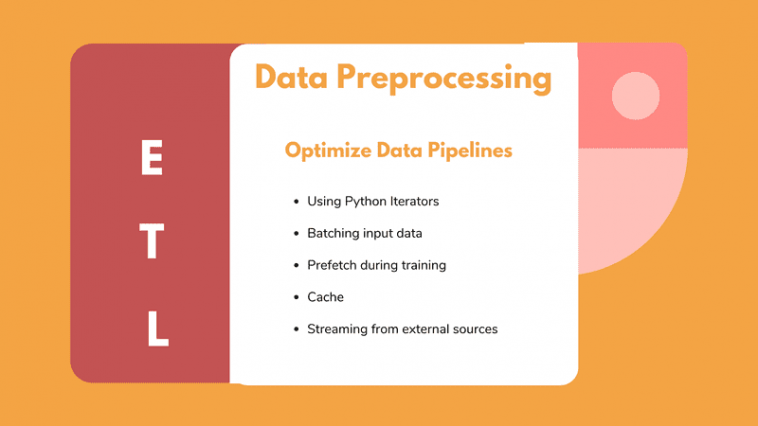

Data preprocessing is an integral part of building machine learning applications. However, most machine learning engineers don't spend the appropriate amount of time on it because sometimes it can be hard and tedious. When it comes to deep learning especially, the amount of data we have to manipulate makes it even more difficult to do so. And of course, it is not enough just to build a data pipeline; we also have to make it as efficient and as fast as possible.

As we saw in our previous article, data pipelines follow the ETL paradigm. ETL is an acronym that stands for extraction, transformation, loading. Last time we explored the first two parts (E, T) and saw how to use TensorFlow to extract data from multiple data sources and transform them into our desired format. We also talked about functional programming and how it can be very handy when building input pipelines because we can specify all of our transformations in the form of a chain.

This time, we are going to discuss the last part of the pipeline, called loading. Loading essentially means feeding our data into the deep learning model for training or inference. But we'll take it a step further, as we will also focus on how to make the pipeline highly performant in terms of speed and hardware utilization, using techniques such as batching, prefetching, and caching.

So without further ado, let's get started with loading. But before that, let me remind you of our initial problem throughout this article series. Tl;dr: we are trying to convert a Jupyter notebook that performs semantic segmentation on images into production-ready code and deploy it in the cloud. As far as the data we are using is concerned, they are a collection of pet images borrowed from Oxford University. Note that you can find our whole codebase so far in our GitHub repository.

OK let’s do it.

Loading

Loading essentially refers to passing the data into our model for training. In terms of code, it is as simple as writing:

self.model.fit(self.train_dataset, epochs=self.epoches, steps_per_epoch=self.steps_per_epoch, validation_steps=self.validation_steps, validation_data=self.test_dataset)

All we did here was call the “fit()” function of the Keras API, define the number of epochs, the number of steps per epoch, and the validation steps, and simply pass the data as an argument. Is that enough? Can I call off the article now? If only it was that easy.

Actually, let me remind us of our current pipeline so far.

self.dataset, self.info = DataLoader().load_data(self.config.data)

train = self.dataset['train'].map(lambda image: DataLoader._preprocess_train(image, image_size), num_parallel_calls=tf.data.experimental.AUTOTUNE)

train_dataset = train.shuffle(buffer_size)

As you can see, and as you might remember from the last article, we load our data using the TensorFlow Datasets library, we then use the “map()” function to apply some sort of preprocessing to each data point, and then we shuffle them. The preprocessing function resizes each data point, flips it, and normalizes the image. So you might think that, since the images are extracted from the data source and transformed into the right format, we can just go ahead and pass them into the fit() function. In theory, yes, this is correct. But we also need to take care of some other things.

Sometimes we don't just want to pass the data into our function, as we may care about having more explicit control of the training loop. To do that, we may need to iterate over the data so we can properly construct the training loop as we'd like.

Tip: Running a for-loop over a collection that holds the entire dataset (a plain Python list, for example) is almost always a bad idea, because everything has to be loaded into memory first. Instead, we want to use Python's iterators.

Iterators

An iterator is nothing more than an object that enables us to traverse a collection, usually a list. In our case, that collection is a dataset. The big advantage of iterators is lazy loading. Instead of loading the entire dataset into memory, the iterator loads each data point only when it's needed. Needless to say, this is what tf.data is using behind the scenes. But we can also do that manually.

In tf.data code we can have something like this:

dataset = tf.data.Dataset.range(2)

for element in dataset:
    train(element)

Or we can simply get the iterator using the “iter” function and then pull elements from it using the “get_next” function:

iterator = iter(dataset)

train(iterator.get_next())

Or we can use Python's built-in “next” function:

iterator = iter(dataset)

train(next(iterator))

We can also get a NumPy iterator from a TensorFlow Dataset object:

for element in dataset.as_numpy_iterator():
    train(element)
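To see what lazy loading means in practice, here is a minimal plain-Python sketch (it is not how tf.data is implemented). The `load_example` function and the `loaded` log are hypothetical stand-ins for an expensive read such as decoding an image; the only point is that nothing is loaded until `next` asks for it.

```python
loaded = []  # records which examples were actually read (illustration only)


def load_example(index):
    """Hypothetical stand-in for an expensive read, e.g. decoding an image."""
    loaded.append(index)
    return {"id": index, "pixels": [index] * 4}


def lazy_dataset(num_examples):
    """Generator: each example is produced only when the consumer asks for it."""
    for index in range(num_examples):
        yield load_example(index)


iterator = iter(lazy_dataset(1_000_000))
first = next(iterator)   # loads example 0 only
second = next(iterator)  # loads example 1 only

print(loaded)  # [0, 1]
```

Even though the dataset nominally has a million examples, only the two that were requested were ever read.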

We have many options, that's for sure. But we also care about performance.

Okay, I keep saying performance and performance, but I haven't really explained what that means. Performance in terms of what? And how do we measure performance? If I had to put it in a few words, I would say that performance is how fast the whole pipeline, from extraction to loading, is executed. If I had to dive a little deeper, I would say that performance is latency, throughput, ease of implementation, maintenance, and hardware utilization.
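Of those dimensions, throughput is the easiest one to put a number on. As a rough sketch, assuming all we want is "elements consumed per second", we could time a few passes over any Python iterable (a tf.data dataset can be iterated the same way in eager mode). The helper name `measure_throughput` is my own, not part of any library.

```python
import time


def measure_throughput(dataset, num_epochs=2):
    """Iterate over `dataset` num_epochs times and report elements per second."""
    start = time.perf_counter()
    count = 0
    for _ in range(num_epochs):
        for _element in dataset:
            count += 1
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse timers.
    per_second = count / elapsed if elapsed > 0 else float("inf")
    return count, elapsed, per_second


count, elapsed, per_second = measure_throughput(range(1000), num_epochs=2)
print(f"{count} elements in {elapsed:.4f}s ({per_second:.0f} elements/s)")
```

Running the same measurement before and after adding a technique such as batching or caching gives a quick, if crude, indication of whether it helped.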

You see, the thing is that data for deep learning is big. I mean really huge. Sometimes we aren't able to load all of them into memory, or each processing step might take far too long and the model has to wait until it's completed. Or we simply don't have enough resources to manipulate all of them.

In the previous article, we discussed a well-known trick to address some of these issues, called parallel processing, where we run the operation concurrently on our different CPU cores. Today we will mainly focus on some other techniques. The first one is called batching.

Batching

Batch processing has a slightly different meaning for a software engineer and a machine learning engineer. The former thinks of batching as a way to run high-volume, repetitive jobs in groups with no human interaction, while the latter thinks of it as the partitioning of data into chunks.

While in classical software engineering batching helps us avoid having computer resources idle and run the jobs when the resources are available, in machine learning batching makes the training much more efficient because of the way the stochastic gradient descent algorithm works.

I'm not going to go deep into many details, but in essence, instead of updating the weights after training on every single data point, we update the weights after every batch. This modification of the algorithm is called by many Batch Gradient Descent, although mini-batch gradient descent is the more precise name (for more details check out the link at the end).
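To make the "one update per batch" idea concrete, here is a toy sketch in plain Python. It fits a single weight `w` in `y = w * x` with a mean-squared-error loss; the data, the learning rate, and the function name `train_linear` are all assumptions chosen for illustration, not anything from our codebase.

```python
def train_linear(xs, ys, batch_size, learning_rate=0.05, epochs=20):
    """Fit y = w * x with mean-squared error, updating w once per batch."""
    w = 0.0
    updates = 0
    for _ in range(epochs):
        for start in range(0, len(xs), batch_size):
            batch_x = xs[start:start + batch_size]
            batch_y = ys[start:start + batch_size]
            # Gradient of mean((w*x - y)^2) with respect to w, averaged over the batch.
            grad = sum(2 * (w * x - y) * x for x, y in zip(batch_x, batch_y)) / len(batch_x)
            w -= learning_rate * grad
            updates += 1
    return w, updates


xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
ys = [3.0 * x for x in xs]  # toy data generated with a true weight of 3

w, updates = train_linear(xs, ys, batch_size=2)
print(w, updates)
```

With six data points and a batch size of 2, the loop performs 3 updates per epoch; with a batch size of 1 the same loop would perform 6.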

In TensorFlow and tf.data, creating batches is as easy as this:

train = train.batch(batch_size)

That way, after loading and manipulating our data, we can split them into small chunks so we can pass them into the model for training. Not only do we train our model using batch gradient descent, but we also apply all of our transformations on one batch at a time, avoiding loading all our data into memory at once. Please note that the batch size refers to the number of elements in each batch.
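For intuition, batching can be sketched in a few lines of plain Python. This is only an illustration of the idea, not tf.data's implementation; the `drop_remainder` flag is named after the parameter of the same name in `tf.data.Dataset.batch`, but the function itself is my own.

```python
from itertools import islice


def batch(iterable, batch_size, drop_remainder=False):
    """Yield lists of up to batch_size consecutive elements, lazily."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, batch_size))
        if not chunk:
            return
        if drop_remainder and len(chunk) < batch_size:
            return
        yield chunk


batches = list(batch(range(7), batch_size=3))
print(batches)  # [[0, 1, 2], [3, 4, 5], [6]]
```

Note that the last batch is smaller when the dataset size is not a multiple of the batch size, unless the remainder is explicitly dropped.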

Now pay attention to this: we load a batch, we preprocess it, and then we feed it into the model for training, in sequential order. Doesn't that mean that while the model is running, the whole pipeline stays idle, waiting for the training to be completed so it can begin processing the next batch? That's right. Ideally, we would want to do both of these operations at the same time. While the model is training on a batch, we can preprocess the next batch simultaneously.

But can we do that? Of course!

Prefetching

TensorFlow lets us prefetch the data while our model is being trained, using the prefetching function. Prefetching overlaps the preprocessing and model execution of a training step. While the model is executing training step n, the input pipeline is reading the data for step n+1. That way we can reduce not only the overall processing time but the training time as well.

train = train.prefetch(buffer_size=tf.data.experimental.AUTOTUNE)

For those who are more tech-savvy, using prefetching is like having a decoupled producer-consumer system coordinated by a buffer. In our case, the producer is the data processing and the consumer is the model. The buffer handles the transportation of the data from one to the other. Keep in mind that the buffer size should be equal to or less than the number of elements the model is expecting for training.
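The producer-consumer picture can be sketched with Python's standard `queue` and `threading` modules. This is a simplified illustration under my own assumptions (a single background thread, no error handling), not what tf.data does internally; the function name `prefetch` is just borrowed for readability.

```python
import queue
import threading

_SENTINEL = object()  # marks the end of the source


def prefetch(source, buffer_size=1):
    """Yield items from `source` while a background thread fills a bounded buffer."""
    buffer = queue.Queue(maxsize=buffer_size)

    def producer():
        for item in source:
            buffer.put(item)  # blocks while the buffer is full
        buffer.put(_SENTINEL)

    # Daemon thread so an abandoned consumer does not keep the program alive.
    threading.Thread(target=producer, daemon=True).start()

    while True:
        item = buffer.get()  # blocks until the producer has something ready
        if item is _SENTINEL:
            return
        yield item


preprocessed = (x * x for x in range(5))  # stand-in for a preprocessing step
results = list(prefetch(preprocessed, buffer_size=2))
print(results)  # [0, 1, 4, 9, 16]
```

While the consumer is busy with one item, the producer thread is already preparing the next ones, up to `buffer_size` items ahead.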

Caching

Another cool trick that we can use to increase our pipeline performance is caching. Caching is a way to temporarily store data in memory or in local storage to avoid repeating work such as reading and extraction. Since each data point will be fed into the model more than once (one time for each epoch), why not store it in memory? That's exactly what we can do using the caching function from tf.data:

train = train.cache()

So every transformation applied before the cache function is executed only on the first epoch. The caveat here is that we have to be very careful about the limitations of our resources, to avoid overloading the cache with too much data. However, if we have complex transformations, it is usually preferable to do them offline rather than executing them in a training job and caching the results.
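Here is a small plain-Python sketch of that behaviour, assuming an in-memory cache that is filled during the first complete pass. The `CachedDataset` class and the `expensive_transform` function are hypothetical names for illustration only; tf.data's own cache is more elaborate.

```python
class CachedDataset:
    """Replays elements from memory after the first full pass over the source."""

    def __init__(self, make_source):
        self._make_source = make_source  # callable returning a fresh iterable
        self._cache = None               # filled once the first pass completes

    def __iter__(self):
        if self._cache is not None:
            yield from self._cache
            return
        seen = []
        for element in self._make_source():
            seen.append(element)
            yield element
        self._cache = seen


transform_calls = []  # logs every call to the transformation (illustration only)


def expensive_transform(x):
    """Hypothetical costly preprocessing step."""
    transform_calls.append(x)
    return x * 10


dataset = CachedDataset(lambda: (expensive_transform(x) for x in range(4)))

epochs = [list(dataset) for _ in range(3)]
print(len(transform_calls))  # 4
```

Three epochs over four elements trigger only four calls to the transformation: everything upstream of the cache runs during the first epoch and is replayed from memory afterwards.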

At this point, I'd like to say that this is all you need to know about building data pipelines and making them as efficient as possible. Our whole pipeline is finally in place and it looks like this:

dataset, info = tfds.load(data_config.path, with_info=data_config.load_with_info)  # assumes load_with_info is True, so a (dataset, info) tuple is returned

train = dataset['train'].map(lambda image: DataLoader._preprocess_train(image, image_size), num_parallel_calls=tf.data.experimental.AUTOTUNE)

train_dataset = train.shuffle(buffer_size).batch(batch_size).cache().repeat()

model.fit(train_dataset)

However, before I let you go, I want to discuss another important topic that you may or may not need in your everyday coding life: streaming. In fact, with streaming we go back to the extraction phase of our pipeline, but I feel like I need to include it here for completeness.

Streaming

So first of all, what are we trying to solve here with streaming? And second, what is streaming?

There are use cases where we don't know the full size of our data, as they might come from an unbounded source. For example, we may acquire them from an external API, or we may extract them from the database of another service that we don't know many details about. Imagine, for example, that we have an Internet of Things application where we collect data from different sensors and apply some sort of machine learning to them. In this scenario, we don't really know the full size of the data, and we may say that we have an infinite source that will generate data forever. So how do we handle that, and how can we incorporate these data into a data pipeline?

Here is where streaming comes in really handy. But what is streaming?

Streaming is a method of transmitting or receiving data (over a computer network) as a steady, continuous flow, allowing playback to start while the rest of the data is still being received.

What happens behind the scenes is that the sender and the receiver open a connection that remains open for as long as they need. Then the sender sends very small chunks of our data through the connection, and the receiver gets them and reassembles them into their original form.

Can you now see how useful that can be for us? We can open a connection with an external data source and keep processing the data and training our model on them for as long as they come.
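In plain Python, an unbounded source maps naturally onto a generator that never ends. The sketch below is only an illustration: `sensor_stream` is a hypothetical stand-in for a real connection (it fabricates readings from a counter), and `windows` is my own helper that groups the stream into fixed-size chunks without ever needing to know its length.

```python
from itertools import count, islice


def sensor_stream():
    """Hypothetical unbounded source: yields one reading after another, forever."""
    for step in count():
        yield {"step": step, "temperature": 20.0 + (step % 5)}


def windows(stream, window_size):
    """Group a (possibly endless) stream into fixed-size windows, lazily."""
    while True:
        window = list(islice(stream, window_size))
        if not window:
            return
        yield window


stream = sensor_stream()
first_three = list(islice(windows(stream, window_size=4), 3))
print([[reading["step"] for reading in window] for window in first_three])
# [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11]]
```

The consumer decides how much to pull (three windows here); the source itself would happily keep going for as long as data arrive.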

To make our lives easier, there is an open-source library called TensorFlow I/O. TensorFlow I/O supports many different data sources not included in the original TensorFlow code, such as BigQuery and Kafka, and multiple formats like audio, medical images, and genomic data. And of course, the output is fully compatible with tf.data, so we can apply all the functions and tricks we talked about in the past two articles.

Let's see an example where our data come from Kafka. For those of you who don't know, Kafka is a high-performance, distributed messaging system that is widely used in the industry.

import tensorflow_io.kafka as kafka_io

dataset = kafka_io.KafkaDataset('topic', server="our server", group="our group")

dataset = dataset.map(...)

Don't get too hung up on the Kafka details. The essence is that it makes streaming so simple I want to cry from joy.

As side material, I strongly suggest the TensorFlow: Advanced Techniques Specialization course by deeplearning.ai hosted on Coursera, which will give you a foundational understanding of TensorFlow.

Conclusion

In the last two articles of the Deep Learning in Production series, we discovered how to build efficient data pipelines in TensorFlow using patterns like ETL and functional programming, and explored different techniques and tricks to optimize their performance. So I think at this point we're ready to say goodbye to data processing and continue with the actual training of our model.

Training may sound simple, and maybe you think that there's not much new stuff to learn here, but I can assure you that it's not the case. Have you ever trained your model on distributed systems? Have you used cloud computing to take advantage of its resources instead of draining your laptop? And what about GPUs?

These are only some of the topics we will cover later. If that sounds interesting, you are more than welcome to come aboard and join our AI Summer community by subscribing to our newsletter.

See you soon…

References

- cloud.google.com, Batch processing

- TensorFlow YouTube channel, Inside TensorFlow: tf.data + tf.distribute

- TensorFlow YouTube channel, tf.data: Fast, flexible, and easy-to-use input pipelines

- TensorFlow YouTube channel, Scaling TensorFlow data processing with tf.data

- tensorflow.org, Better performance with the tf.data API

- datacamp.com, Python Iterator Tutorial

- ruder.io, An overview of gradient descent optimization algorithms

- aws.amazon.com, What is Streaming Data?

- searchstorage.techtarget.com, Cache (computing)

* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.