- For a comprehensive list of all the papers and articles of this series, check our Git repo.

- For a hands-on course, we highly recommend Coursera’s brand-new GAN specialization.

Computer vision is indeed a promising application field for GANs. Until now, we focused on conditional and unconditional image generation. In the previous post, we covered a lot of aspects such as training with Wasserstein loss, understanding disentangled representations, modeling global and local structures with different strategies, progressive incremental training of GANs, etc. Nevertheless, deep learning in computer vision includes a variety of awesome tasks such as object generation, video generation, etc. We actually reached the point of megapixel resolution with progressive GANs for the first time. The question is: can we do better?

In this part, we will inspect 2K image and video synthesis, as well as large-scale conditional image generation. Our extensive analysis attempts to bridge the gaps that you may have from previous works in the field. We will revise a bunch of computer vision concepts such as object detection, semantic segmentation, and instance semantic segmentation. Basically, we would like to exploit all the available labels and highly accurate networks to maximize visual quality. That includes even the labels that can be generated from state-of-the-art deep learning models. Since 2018, GANs gained increased attention in the community due to their vast cool applications, especially in computer vision. Nevertheless, one easily grasps that since the introduction of progressive GANs at the end of 2017, NVidia started to own GANs in computer vision! We will analyze the following three foundational works:

pix2pixHD (High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs 2017)

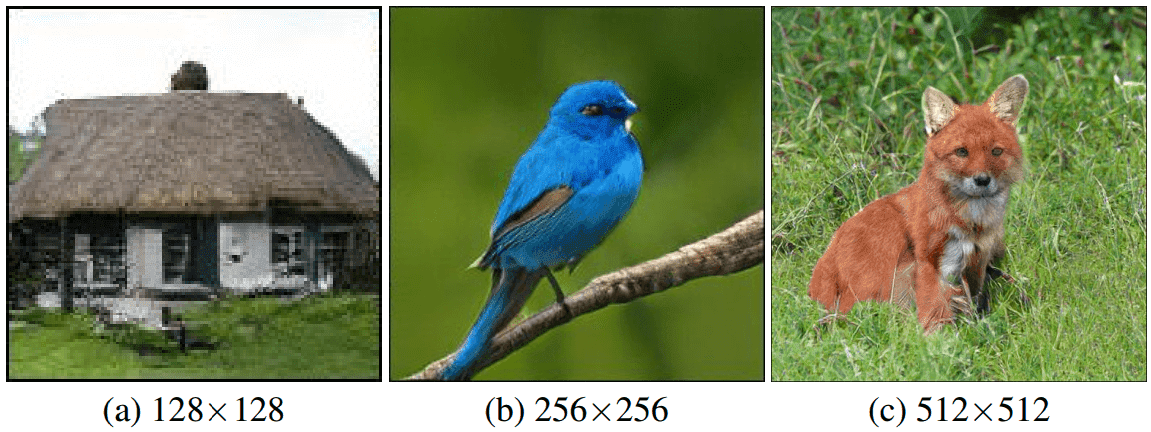

As you can imagine, this work extends the pix2pix model that we discussed in part 2. In essence, the pix2pix method adopts a symmetric u-net architecture for the generator and a patch-based discriminator. More importantly, the input to the discriminator is both the input image and the generated one (i.e. concatenation of the image with the semantic label). Nevertheless, the image resolution reaches up to 256 × 256, which is still low. It is widely known in computer vision that a multi-resolution pipeline is a well-established practice, with two-scale approaches dominating because it is often enough to model 2 scales.

But how did they manage to improve pix2pix in a multi-scale fashion?

Decomposing the generator

The generator G is decomposed into G1 and G2. G1 acts as the global generator network and G2 acts as the local enhancer network. G1 operates at a lower resolution, specifically half the resolution of G2 in each spatial dimension. At first glance, this looks close to the idea of progressive GANs, but instead of symmetrically adding higher-resolution layers for both G and D, they apply this idea just in the generator architecture.

G1 and G2 are first trained separately, and then G2 is trained together with G1, similar to a two-step progressive GAN with much bigger building blocks. As you will start to understand, more and more attention will be given to the careful design of the generator. A visual illustration can be seen below:

The generator of pix2pixHD, borrowed from the original publication.

Global Generator G1: In this direction, they adopted and built upon an architecture that was already successful at 512×512 resolution. G1 alone consists of three components:

- A convolutional front-end G1_front that decreases the spatial dimensions.

- A large set of residual blocks G1_res, where the heavy processing is performed.

- A transposed convolutional back-end G1_back that restores the spatial dimensions.

In this sequential manner, a segmentation map of resolution 1024×512 is passed through G1 to output an image of resolution 1024×512.

Local enhancer network G2: G2 follows the same 3-component hierarchy, with the first difference being that the resolution of the input is 2048×1024. Secondly, the input to the residual blocks G2_res is the residual connection (element-wise sum) of two feature maps:

- the output feature map of G2_front (left black rectangle in the figure), and

- the last feature map of G1_back.

In this way, the integration of global image information from G1 into G2 is achieved. Now, G2 can focus on learning the high frequencies that correspond to the local details.

Let’s move on to see the design modifications of D and the reasoning behind them.

Multi-scale discriminators

The motivation lies in the fact that the discriminator needs to have a large receptive field. To achieve this with convolutional layers, you can either add more layers, increase the kernel size, or use dilated convolutions. However, these choices come with a high computational cost and space complexity, which is intractable for such high-resolution image generation.

But let’s start our analysis with an observation. What would happen if we don’t use multiple discriminators? The authors highlight that without multi-scale discriminators, many repeated patterns are often observed. To this end, the authors use 3 discriminators (D1, D2, D3) that have an identical network structure but operate at different image scales.

In more detail, both the real and synthesized high-resolution images are downsampled by a factor of 2 and 4. Therefore, we now have 3 scales of image pairs, namely 2048×1024, 1024×512, and 512×256. This is usually referred to as an image pyramid in computer vision terms.
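
The image pyramid described above can be sketched in a few lines. This is only an illustrative NumPy sketch: the helper names `downsample2x` and `image_pyramid` are ours, and plain 2×2 average pooling is an assumption rather than the exact downsampling operator of the official implementation.

```python
import numpy as np

def downsample2x(img):
    """Halve height and width with 2x2 average pooling.

    img has shape (H, W, C); H and W are assumed to be even.
    """
    h, w, c = img.shape
    # Group pixels into non-overlapping 2x2 blocks and average each block.
    return img.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))

def image_pyramid(img, num_scales=3):
    """Return [full resolution, 1/2 resolution, 1/4 resolution, ...]."""
    pyramid = [img]
    for _ in range(num_scales - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid

# Usage: a small stand-in image; each level would feed one discriminator.
levels = image_pyramid(np.zeros((8, 16, 3)))
print([level.shape for level in levels])
```

Both the real and the synthesized image would go through the same pyramid, so that each discriminator sees a real/fake pair at its own scale.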

The discriminator D1 that operates on the coarsest scale (lowest resolution) has the largest receptive field relative to the image. Therefore, it perceives a more global view of the image. This can help guide the generator (especially the global generator G1) to ensure global consistency in the images. In the opposite direction, the discriminator D3 at the finest scale encourages the generator (especially the local enhancer G2) to model finer local details.

So, we now have a multi-scale G and D. Let’s now explore an exciting way to match their scales with a feature-based loss.

Multi-scale feature matching loss

Feature matching loss enables G to match the expected value of the features on an intermediate layer of the discriminator. This objective requires the generator to generate data that matches the statistics of the real data, here at multiple scales! By training the multi-scale discriminators in this fashion, we ask D1, D2, D3 to find the most discriminative features of real data versus the generated ones. Consequently, the multi-scale feature matching loss stabilizes the training, as G has to produce natural statistics at multiple scales. Let’s see the math:
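
Following the definition in the pix2pixHD paper, the feature matching loss for the k-th discriminator can be written as below. Here $D_k^{(i)}$ denotes the features of the i-th layer of $D_k$, and $N_i$ is the number of elements of that layer (the per-layer subscript on N is made explicit here).

```latex
\mathcal{L}_{FM}(G, D_k) =
\mathbb{E}_{(s, x)} \sum_{i=1}^{T} \frac{1}{N_i}
\left[ \left\| D_k^{(i)}(s, x) - D_k^{(i)}\big(s, G(s)\big) \right\|_1 \right]
```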

In the above equation, T is the number of layers of each discriminator and N is the number of elements of the feature map of each layer. Indexing D with k shows that this loss can be applied to all the discriminators. s is the input image (i.e. segmentation) and x is the real target data. Notice again that D has access to both input and hallucinated images. For 3 D’s we have three feature matching losses, operating at different scales.
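
To make the loss more concrete, here is a minimal NumPy sketch. It assumes that the intermediate features of each discriminator have already been extracted and are passed in as lists of arrays; the function names are hypothetical and not taken from the official code.

```python
import numpy as np

def feature_matching_loss(real_feats, fake_feats):
    """Feature matching loss for a single discriminator D_k.

    real_feats / fake_feats: one array per discriminator layer, holding the
    features of D_k for the pairs (s, x) and (s, G(s)) respectively.
    """
    loss = 0.0
    for f_real, f_fake in zip(real_feats, fake_feats):
        # The mean absolute difference equals (1 / N_i) * L1 norm,
        # where N_i is the number of elements of this layer.
        loss += np.abs(f_real - f_fake).mean()
    return loss

def multiscale_feature_matching_loss(real_per_d, fake_per_d):
    """Sum the per-discriminator losses over D1, D2, D3."""
    return sum(
        feature_matching_loss(r, f) for r, f in zip(real_per_d, fake_per_d)
    )

# Usage with toy "features" of two layers for one discriminator.
real = [np.ones((2, 2)), np.zeros(3)]
fake = [np.zeros((2, 2)), np.zeros(3)]
print(feature_matching_loss(real, fake))
```

In actual training, the discriminator features would be treated as fixed targets for this term, and only G would be updated to minimize it.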

Utilizing semantic occasion segmentation

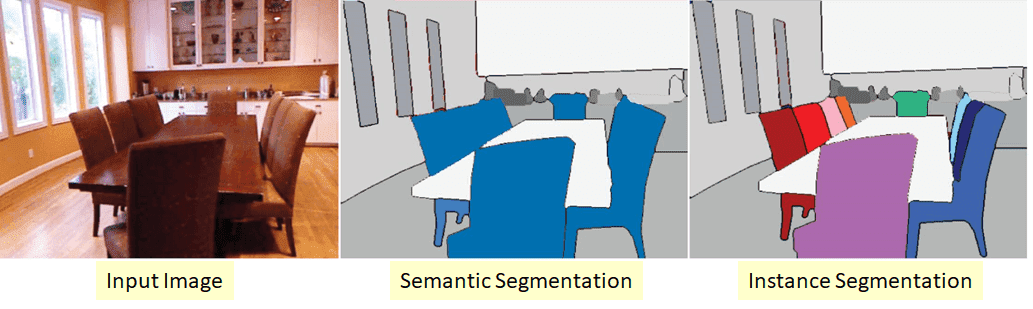

First, let’s begin by guaranteeing that we perceive the kinds of segmentation and detection:

-

Object Detection: We detect an object, normally with a bounding field(2-pixel places) in a picture.

-

Semantic Segmentation: We classify every pixel in a category(class), known as semantic labels (i.e. automobiles, bushes)

-

Occasion Segmentation: We prolong semantic segmentation by additional figuring out the category occasion it belongs to (i.e. Automobile 1, Automobile 2, Tree 1 Tree 2, and so on…) So, an instance-level segmentation map accommodates a distinctive class ID for every particular person class (object).

Taken from Silberman et. al 2014 (ECCV). Paper could be discovered right here hyperlink.

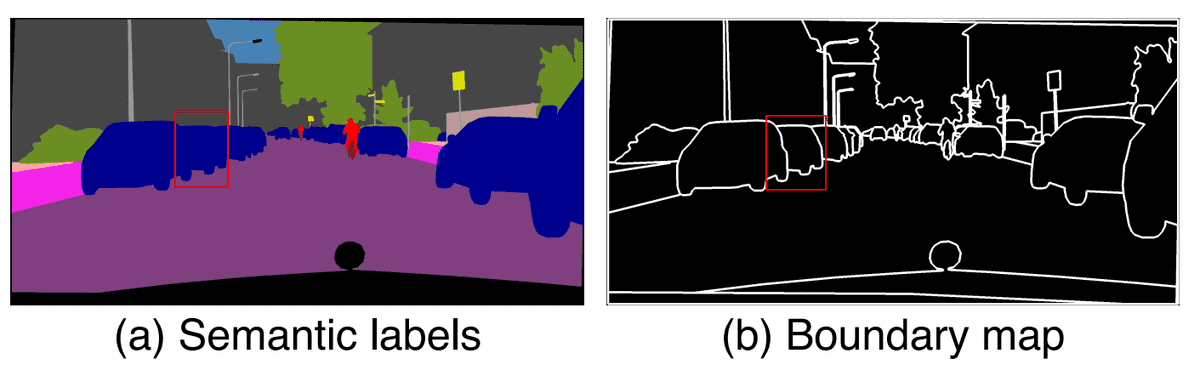

Based on the above, it is obvious that semantic segmentation maps don’t differentiate objects of the same class. The authors believe that the most crucial information that lies in the instance map is the object boundary. Let’s see how one can create such a boundary map.

In their implementation, a binary instance boundary map can be formed as simply as this: a pixel has a value of 1 if its object ID is different from any of its 4-neighbors, and 0 otherwise.
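
The rule above translates almost directly into array operations. The following NumPy sketch is our own illustration (the function name `instance_boundary_map` is hypothetical), assuming the instance map is a 2D integer array of object IDs:

```python
import numpy as np

def instance_boundary_map(inst):
    """Binary boundary map from an (H, W) map of object IDs.

    A pixel is set to 1 if its ID differs from any of its 4-neighbors
    (left, right, up, down), and to 0 otherwise.
    """
    inst = np.asarray(inst)
    edge = np.zeros(inst.shape, dtype=bool)

    # Horizontal neighbors: mark both pixels on each side of an ID change.
    diff_h = inst[:, 1:] != inst[:, :-1]
    edge[:, 1:] |= diff_h
    edge[:, :-1] |= diff_h

    # Vertical neighbors: same idea along the rows.
    diff_v = inst[1:, :] != inst[:-1, :]
    edge[1:, :] |= diff_v
    edge[:-1, :] |= diff_v

    return edge.astype(np.uint8)

# Usage: two adjacent "cars" with IDs 1 and 2 that share a semantic label.
inst = np.array([[1, 1, 2, 2],
                 [1, 1, 2, 2]])
print(instance_boundary_map(inst))
```

Only the pixels on both sides of the border between the two objects are marked, which is exactly the information that a semantic label map alone cannot provide.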

Boundary map from instance-level segmentation maps, taken from pix2pixHD

Let’s focus on the pink rectangle in the illustrated images. When we have a series of cars with the same semantic label, semantic segmentation is not enough. By using instance labels and looking at the neighbors, one can derive a binary boundary map.

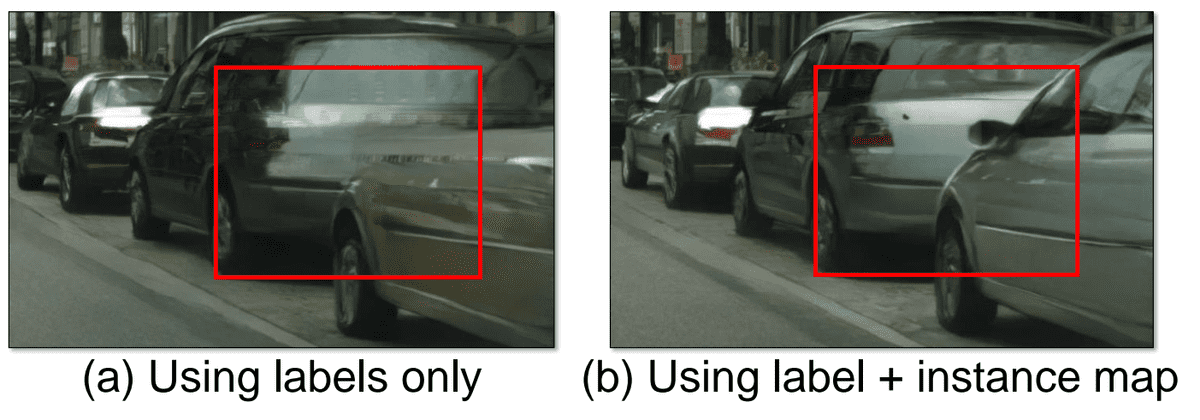

The instance boundary map is then concatenated with the one-hot vector representation of the semantic label map, and fed into the generator network as one more input channel. This method might seem simple and intuitive, but in practice, it works extremely well, as depicted below:

Exploiting hand-crafted instance-based boundary maps, taken from here

Learning an Instance-level Feature Embedding

Moving one step further, it is possible to learn and embed such maps in the input of the generator to increase diversity while ensuring image and instance-level consistency. Instead of feeding hand-crafted maps such as the binary boundary map, we can train a network to produce diverse feature embeddings. Let’s summarize the process of generating the low-dimensional features:

- We train an encoder E(x) to find a low-dimensional feature vector that corresponds to the ground truth for each instance in the image. Note that x is the real image.

- We add an instance-wise average pooling layer to the output of the encoder to compute the average feature for each object instance. This ensures that the features are consistent within each instance.

- The resulting average feature is broadcast to all the pixel locations of the specific instance. For example, all pixels that belong to car 1 will share a common average feature, which will be different from that of car 2.

- We replace G(s) with G(s | E(x)) and train the encoder jointly with the generators and discriminators.

- After training, we run the encoder on all instances of the training images and save the obtained features (offline!).

- For each semantic class (NOT instance) we perform K-means clustering on all features. So, we now have a set of K clusters that correspond to different representations of each class. One can think of it this way: each cluster encodes the features for a specific style of the same class.

- For inference, we can simply randomly pick one of the cluster centers and use it as the encoded continuous features.
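
The instance-wise average pooling and broadcasting steps from the list above can be sketched as follows. This is an illustrative NumPy version that operates on an already computed encoder output; the function name and the (H, W, C) layout are our assumptions, not the official implementation.

```python
import numpy as np

def instance_average_pool(features, inst):
    """Average encoder features inside each instance and broadcast them back.

    features: (H, W, C) output of the encoder E(x).
    inst:     (H, W) integer map of object IDs.
    Returns an (H, W, C) array where all pixels of one instance share
    the same (average) feature vector.
    """
    pooled = np.zeros_like(features, dtype=float)
    for inst_id in np.unique(inst):
        mask = inst == inst_id
        # features[mask] has shape (num_pixels, C); average over the pixels
        # and write the result back to every pixel of this instance.
        pooled[mask] = features[mask].mean(axis=0)
    return pooled

# Usage: one feature channel, two instances (IDs 1 and 2).
feats = np.array([[[1.0], [3.0]],
                  [[10.0], [20.0]]])
inst = np.array([[1, 1],
                 [2, 2]])
print(instance_average_pool(feats, inst)[..., 0])
```

The per-instance vectors collected this way over the training set are the features that would later be clustered per semantic class with K-means.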

All the above can be illustrated in practice in this image (road style and main car style are changed based on this method):

Results of pix2pixHD GAN by introducing instance-level feature embeddings

Finally, it is highlighted that pix2pixHD learns to embed the style of different objects, which can prove beneficial to other application domains where pre-trained models are not available. Further results and official code are provided on the official website.

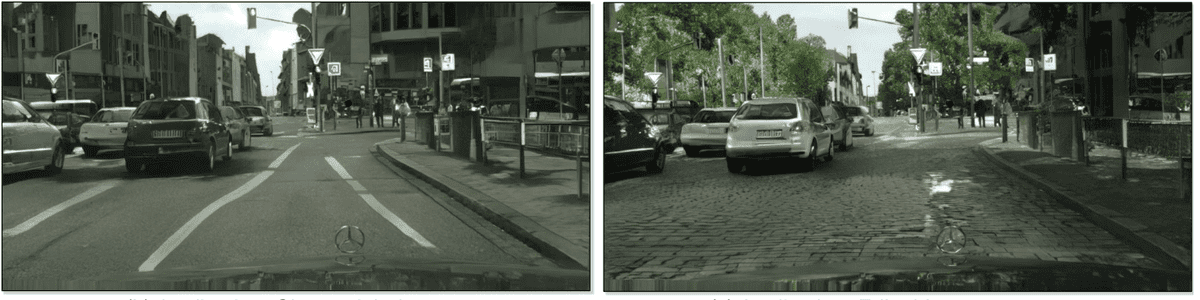

vid-to-vid (Video-to-Video Synthesis 2018)

We already saw a bunch of beautiful image generation results. But what about video generation? In this exciting work, the authors tackle the novel task of video-to-video generation.

Basically, we are conditioning on the previous video sequence as well as the corresponding segmentation maps. But let’s see why this task introduces some really difficult challenges. One may argue that you can just use the best high-resolution conditional image generation model, which is pix2pixHD, frame by frame.

Although this broad category of models achieves impeccable photo-realistic results, the resulting stacked video lacks temporal coherence. The roads, buildings, and trees may appear inconsistent across frames. The main reason that we observe these visual artifacts is that there is no constraint that ensures temporal consistency (across frames).

An awesome illustration of video incoherence is shown in the official video.

Formulating the problem in terms of machine learning

In video synthesis, we aim to learn a mapping that can convert an input video to an output video. Mathematically, given pairs of segmentation maps s and RGB videos x, we want to generate a sequence y conditioned on s so that: p(x|s) = p(y|s).

By expressing the problem as conditional sequence distribution matching, it is possible to learn the desired temporal coherence. To this end, the authors model the problem by factorizing p(y|s) as a product of per-frame conditional probabilities, each of which depends only on the last two timesteps.

We index the new timestep of the video by index 3, and the two previous timesteps as 1 and 2 throughout this tutorial. This notation is adopted to simplify the explanation. However, this approach can be generalized to take into account more frames.

More specifically, frames are produced sequentially given:

- the current segmentation mask (s3)

- the past two segmentation masks (s1, s2)

- the past two generated frames (x1, x2)

This conditional assumption is called the Markov assumption. It is basically the conditional independence assumption for sequences. Based on it, we use a deep network to learn this mapping, called F. Hence, we can obtain the final video by recursively applying F. Is this alone enough to produce state-of-the-art 2K resolution video generation? Of course not! Let’s see some more magical engineering.
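
The recursive application of F can be sketched as a simple loop. In this hedged Python sketch, `F` is a hypothetical callable standing in for the learned network, and we assume that the first two frames are already available (how they are obtained is outside the scope of the sketch).

```python
def synthesize_video(F, seg_maps, first_two_frames):
    """Generate a video frame by frame under a 2-step Markov assumption.

    F(s1, s2, s3, x1, x2) -> x3 is a stand-in for the learned generator.
    seg_maps: one segmentation map per timestep.
    first_two_frames: the two frames used to bootstrap the recursion.
    """
    frames = list(first_two_frames)
    for t in range(2, len(seg_maps)):
        # The new frame depends only on the current map, the two previous
        # maps, and the two previously generated frames.
        x3 = F(seg_maps[t - 2], seg_maps[t - 1], seg_maps[t],
               frames[t - 2], frames[t - 1])
        frames.append(x3)
    return frames

# Usage with scalars as toy "frames" and a toy F.
toy_F = lambda s1, s2, s3, x1, x2: 0.5 * (x1 + x2) + s3
video = synthesize_video(toy_F, seg_maps=[0, 0, 1, 1, 1],
                         first_two_frames=[0.0, 2.0])
print(video)
```

Note that each generated frame is fed back as input for the next steps, which is what allows the model to enforce consistency across time.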

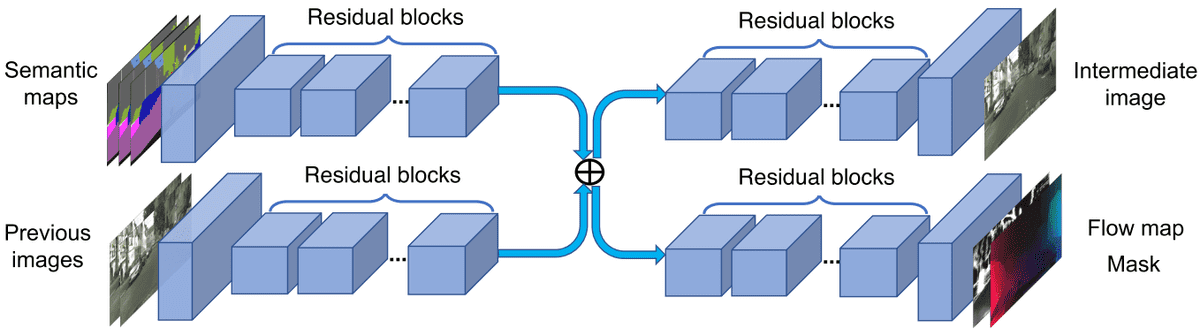

Generator: Exploiting optical flow

It is known that consecutive frames contain a significant amount of redundant information. How can we exploit this? If optical flow information (W) was available, it would be possible to estimate the next frame by a simple process called frame warping. If you are not familiar with optical flow concepts, you can quickly check here.

Now, we have a new problem. Optical flow warping is an estimation that fails in occluded areas but works well on the other parts of the image. Ideally, we would need an additional mask (M) to inform us about the occluded and non-occluded pixels so that we know where the optical flow can be used.

And even if all the above were possible, we would still need an image generator (H) to generate an image h, focusing on the occluded pixels.

Unfortunately, we don’t have this information. Fortunately, since we use deep learning models, it is possible to use pre-trained state-of-the-art models for W and M, namely Flownet2 and Mask-RCNN. These models produce w(x), the warped image of x given the optical flow network W, and m, the mask, respectively. It is worth mentioning that the produced masks are soft masks (continuous in a specified range). Therefore, the network slowly adds details by blending the warped pixels and the generated pixels.

Finally, we can express the problem like this:
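
In the notation of this section, and following the blending formulation of the vid2vid paper, the new frame can be written as a mask-weighted combination of the warped and the hallucinated image. The symbol $\odot$ for element-wise multiplication and the explicit definition $m' = 1 - m$ are spelled out here for clarity:

```latex
x_3 = m' \odot w(x_2) + m \odot h, \qquad m' = 1 - m
```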

where m’ is the inverse mask, w the warped image of x2, estimated from W, and h the hallucinated image from the generator H.
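
The blending step itself is a per-pixel convex combination. Below is a minimal NumPy sketch, assuming that the warped image, the hallucinated image, and a soft mask in [0, 1] are already given as arrays; the function name `blend_frame` is ours.

```python
import numpy as np

def blend_frame(warped, hallucinated, mask):
    """Blend the warped previous frame with the hallucinated image.

    mask is a soft occlusion mask in [0, 1]:
      mask = 0 -> trust the optical-flow warped pixel,
      mask = 1 -> use the pixel synthesized by the generator H.
    """
    mask = np.clip(mask, 0.0, 1.0)
    inverse_mask = 1.0 - mask
    return inverse_mask * warped + mask * hallucinated

# Usage on a tiny 2x2 single-channel example.
warped = np.full((2, 2), 10.0)
hallucinated = np.zeros((2, 2))
mask = np.array([[0.0, 1.0],
                 [0.5, 0.25]])
print(blend_frame(warped, hallucinated, mask))
```

Because the mask is soft, pixels are rarely taken entirely from one source, which is how details can be added gradually in partially occluded regions.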

An illustration of the generator components, taken from the original paper

All the above models M, W, and H use short residual skip connections. The generator is similar to that of pix2pixHD in order to cope with high-resolution images. A wonderful official repository is available from NVidia here.

Discriminator: facing the spatio-temporal nature

Since the problem is spatio-temporal, one way to design the discriminator is by dividing the problem into two sub-problems. It is known that you can use more than one discriminator, with each one focusing on a different sub-problem. In our case, the problem is decomposed into discriminating images and discriminating videos.

We will refer to the conditional image discriminator as DI and the conditional video discriminator as DV. Both follow the principle of PatchGAN from pix2pix, which means that the adversarial loss is calculated on patches of the input. This idea makes training faster and models detailed, localized high-frequency structures.

On one hand, DI is fed with a single pair of a segmentation mask and an image (video slice) and tries to distinguish the generated images. The pair is uniformly sampled from the video sequence. On the other hand, DV is conditioned on the flow to achieve temporal coherence. That’s why it is fed with pairs of a sequence of images and a sequence of optical flows. The patches are generated by choosing k consecutive frames from the sequence. The sampling technique is crucial here, as it makes computations more tractable by lowering the time and space complexity.
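
The two sampling schemes can be sketched with the standard library alone. This is an illustrative sketch under our own assumptions: the function names are hypothetical, and `flows[i]` is assumed to hold the optical flow between frame `i` and frame `i + 1`, so that k frames come with k - 1 flows.

```python
import random

def sample_image_pair(frames, seg_maps, rng=random):
    """Uniformly pick one (segmentation map, frame) pair for DI."""
    t = rng.randrange(len(frames))
    return seg_maps[t], frames[t]

def sample_video_clip(frames, flows, k, rng=random):
    """Pick k consecutive frames and the k - 1 flows between them for DV."""
    start = rng.randrange(len(frames) - k + 1)
    return frames[start:start + k], flows[start:start + k - 1]

# Usage with integers as stand-ins for frames, maps, and flows.
rng = random.Random(0)
frames, seg_maps, flows = list(range(10)), list(range(10)), list(range(9))
print(sample_image_pair(frames, seg_maps, rng))
print(sample_video_clip(frames, flows, k=3, rng=rng))
```

Feeding DV short clips instead of whole videos is what keeps the time and memory cost of the temporal discriminator manageable.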

Moreover, given ground truth optical flows, one can calculate an optical flow estimation loss to regularize training. Finally, the authors use the discriminator feature matching loss as well as the VGG feature matching loss, similar to pix2pixHD. These widely accepted techniques further regularize the training process and improve convergence speed and training stability. All the above can be combined into the total optimization criterion to tackle video synthesis. The official video that follows might convince you more:

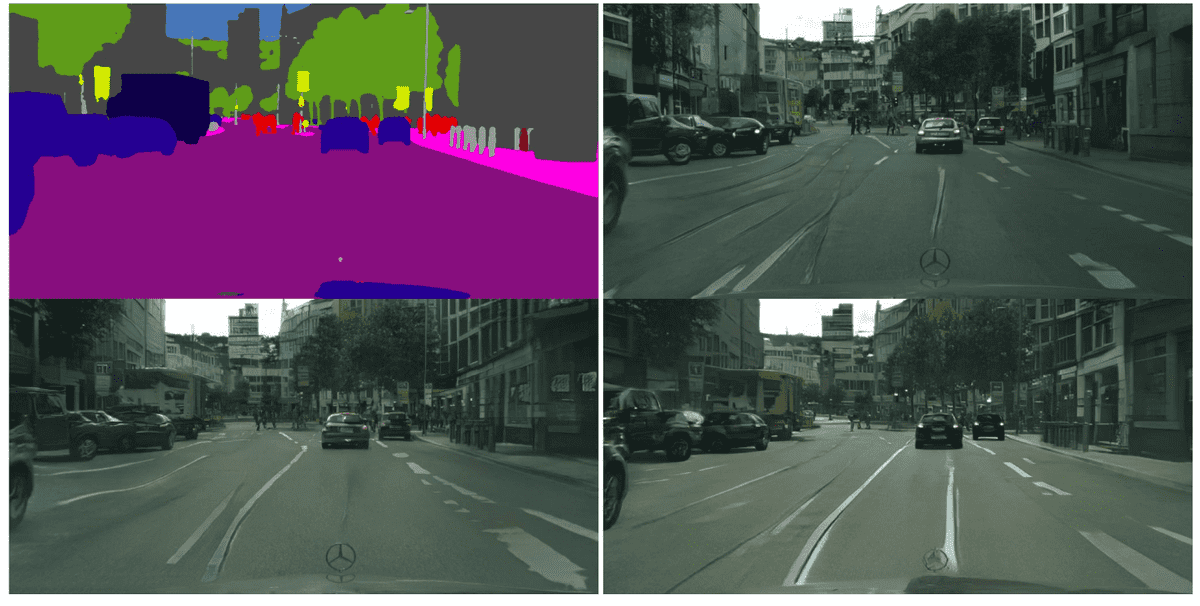

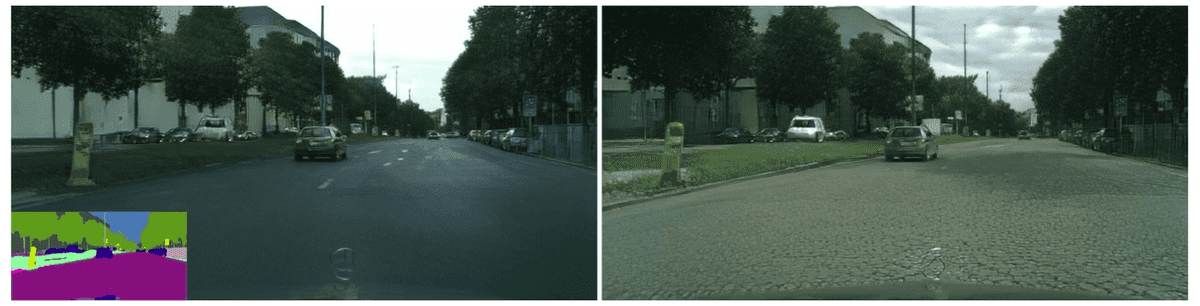

Further distilling performance from semantic segmentation masks

Semantic segmentation treats multiple objects of the same class as a single entity. So, it is possible to divide an image into foreground and background areas based on the semantic labels. For instance, buildings belong to the background, while cars belong to the foreground. The motivation behind this is that the background motion can be modeled as a global transformation. In other words, its optical flow can be estimated more accurately than that of the foreground. On the contrary, a foreground object often has a large motion, which makes optical flow estimation ineffective. Therefore, the network has to synthesize the foreground content from scratch. This additional prior knowledge improves the visual quality by a large margin, as you can see below (bottom left is the vid-to-vid model).

Results from vid-to-vid GAN, taken from the original work

What about instance semantic segmentation?

This process is heavily based on pix2pixHD. Instance segmentation treats multiple objects of the same class as distinct individual objects. How could you possibly add stochasticity to produce meaningful diversity in this huge model?

One way to do so is by producing a meaningful latent space. This is possible through feature embedding: in practice, we map each instance (car, building, street) to a feature space.

This is done via a convolutional layer followed by a custom instance-wise average pooling layer on the input image, to produce a different feature vector for each instance. But isn’t this a latent space vector that can be used to introduce stochasticity? Yes, it is! Therefore, to introduce stochasticity in the model (multimodal synthesis), the authors adopt the aforementioned feature embedding scheme of the instance-level semantic segmentation masks.

This latent feature embedding vector is fed along with the segmentation mask to F. In this way, one can fit a different distribution for each instance (e.g. Gaussian), so that one can simply sample from the desired instance distribution at test time to produce instance-wise meaningful diversity! The official code has been released here.

The road instance is changed in the video. Taken from the original work.

BigGAN (Large Scale GAN Training for High Fidelity Natural Image Synthesis 2018)

What would you do to improve a generative network, if you had all the computing power and time in the world? You would probably want to use them as effectively as possible with the best techniques that scale up well. We referred to megapixel resolution for unconditional generation, but what happens when we want to have class-conditional high-resolution image generation?

This work tackles conditional image generation at really large scales. It is well-known that instability and mode collapse are harder problems to solve in the class-conditional setup. Although the official TensorFlow implementation (with pre-trained weights) is available, I would not bet that you can deploy these models on a single GPU. By the way, another awesome accompanying PyTorch repo with more realistic requirements is provided here.

Summarizing large-scale modifications

Let’s briefly summarize the different techniques and tricks of this work.

- Incrementally scaling up to a maximum of 4 times the parameters compared to previous models.

- Scaling up the batch size up to 8 times (2048!) with a distributed training setup.

- Using an existing well-performing GAN, namely SA-GAN, and increasing its feature maps and depth.

- Employing the best normalization technique for GANs, namely spectral normalization.

- Using the effective hinge loss, described in detail here.

- Providing class information with an already established technique, called conditional batch norm with shared embedding, described here and here.

- Using orthogonal initialization for the parameters.

- Lowering the learning rates and making two D steps per G step.

- Adding a skip residual block structure to increase network depth effectively, because of the vanishing gradient problems.

- Last but not least, introducing the so-called truncation trick!
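
As a quick reminder of the hinge loss mentioned in the list above, here is a minimal NumPy sketch of the commonly used hinge formulation of the adversarial loss. It operates on raw (unbounded) discriminator outputs; the function names are ours, and this is a sketch of the loss values only, not of a training loop.

```python
import numpy as np

def d_hinge_loss(d_real, d_fake):
    """Discriminator hinge loss.

    d_real / d_fake: raw discriminator scores for real and generated samples.
    Real scores are pushed above +1 and fake scores below -1.
    """
    loss_real = np.mean(np.maximum(0.0, 1.0 - d_real))
    loss_fake = np.mean(np.maximum(0.0, 1.0 + d_fake))
    return loss_real + loss_fake

def g_hinge_loss(d_fake):
    """Generator loss: raise the discriminator score of generated samples."""
    return -np.mean(d_fake)

# Usage with toy scores.
d_real = np.array([2.0, 0.5])
d_fake = np.array([-2.0, 0.0])
print(d_hinge_loss(d_real, d_fake), g_hinge_loss(d_fake))
```

Samples that the discriminator already classifies with a margin larger than 1 contribute zero loss, so D focuses on the samples it still gets wrong or is unsure about.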

Increase batch size

Increasing the batch size provides better gradients for both networks. Applying only this particular modification of increasing the batch size by a factor of 8 improves the performance score (the Inception Score in the BigGAN paper) by 46%. One notable side effect is that the proposed model reaches its final performance in fewer iterations, but with more instabilities.

Increase the width

Moreover, by increasing the number of channels (feature maps) by 50%, the total number of trainable parameters is doubled. Batch norm with conditional shared embedding reduces time and space complexity and also improves the training speed. Consequently, the width increase leads to an additional 21% improvement.

Increase depth

To increase the network’s depth, they designed direct skip connections from the noise vector z to multiple generator layers, called skip-z. The intuition behind this design is to allow G to use the latent space z to directly influence features at different resolutions and levels of hierarchy. In the bigger models, they simply concatenate z with the conditional class vector. Amazingly, skip-z connections provide a performance improvement of around 4%, while speeding up training by 18%!

Truncation Trick

Given a vector z that is initially sampled from a normal distribution, one can inspect the magnitude of its values. The idea is to resample the values whose magnitude is above a fixed hardcoded threshold (e.g. 1), so that all of them fall inside the truncated range. This is performed only in the already trained model. Consequently, they experimentally observed that this minor change leads to a significant improvement in individual sample quality at the cost of reduced diversity. However, this trick can be problematic for some models. To face this issue, the authors try to constrain G to be smooth, so that the whole range of z maps to good output samples. That’s why they employ orthogonal regularization.
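
A minimal NumPy sketch of the truncation trick is shown below. It follows the description in the BigGAN paper (values with a magnitude above the threshold are resampled); the function name `truncated_z` and the simple resampling loop are our own illustrative choices.

```python
import numpy as np

def truncated_z(shape, threshold=1.0, rng=None):
    """Sample z ~ N(0, 1) and resample every entry with |z| > threshold."""
    rng = np.random.default_rng() if rng is None else rng
    z = rng.standard_normal(shape)
    out_of_range = np.abs(z) > threshold
    while out_of_range.any():
        # Redraw only the offending entries until all are within range.
        z[out_of_range] = rng.standard_normal(int(out_of_range.sum()))
        out_of_range = np.abs(z) > threshold
    return z

# Usage: a batch of 4 latent vectors of size 128, truncated at 0.5.
z = truncated_z((4, 128), threshold=0.5, rng=np.random.default_rng(0))
print(z.shape, float(np.abs(z).max()) <= 0.5)
```

A smaller threshold keeps z closer to the mode of the prior, which trades diversity for per-sample fidelity. The trick is applied only at sampling time, on an already trained generator.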

Results

All the aforementioned modifications lead to state-of-the-art class-conditional image generation, illustrated below:

A critical finding is that stability comes from the balanced interaction between G and D through the adversarial training process. Moreover, BigGAN interpolates successfully between same-class samples, suggesting that it does not simply memorize training data. Another important observation derived from this study is class leakage, wherein images from one class contain properties of another. This may be explained by the huge batch sizes. Finally, it is also worth noticing that classes that occupy a large portion of the dataset and are distinguished by their texture (e.g. dogs) are easier to generate. On the other hand, classes that occupy a limited portion and have more large-scale structure (e.g. crowds) are harder to generate.

Conclusion

In this tutorial, we inspected some of the top-performing GANs in computer vision for 2018. We provided a wide perspective of the best techniques in the field, emerging naturally from tackling real-world large-scale problems. We started with 2K image generation using multiple generators and discriminators. Then, we moved to video synthesis conditioned on past frames with guaranteed temporal consistency. Finally, we revisited class-conditional image generation on ImageNet, taking into account all the engineering tricks known in the field.

For our math-keen GAN readers, we encourage you to take a good look at spectral normalization in GANs, which we did not cover in this tutorial. Another related computer vision application of GANs is StarGAN, which is also quite interesting to investigate. There is no one size fits all. Find the best method that tackles your problem. We really hope that this tutorial series provides you with a clear overview of the field.

Still, there is an infinite set of directions to explore, as we will see in the next part.

For a hands-on video course we highly recommend Coursera’s brand-new GAN specialization. However, if you prefer a book with curated content so as to start building your own fancy GANs, start from the “GANs in Action” book! Use the discount code aisummer35 to get an exclusive 35% discount from your favorite AI blog.

Cited as:

@article{adaloglou2020gans,
    title   = "GANs in computer vision",
    author  = "Adaloglou, Nikolas and Karagiannakos, Sergios",
    journal = "https://theaisummer.com/",
    year    = "2020",
    url     = "https://theaisummer.com/gan-computer-video-synthesis/"
}

References

- Wang, T. C., Liu, M. Y., Zhu, J. Y., Tao, A., Kautz, J., & Catanzaro, B. pix2pixHD: High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs.

- Wang, T. C., Liu, M. Y., Zhu, J. Y., Liu, G., Tao, A., Kautz, J., & Catanzaro, B. (2018). Video-to-video synthesis. arXiv preprint arXiv:1808.06601.

- Brock, A., Donahue, J., & Simonyan, K. (2018). Large scale GAN training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096.

- Johnson, J., Alahi, A., & Fei-Fei, L. (2016, October). Perceptual losses for real-time style transfer and super-resolution. In European Conference on Computer Vision (pp. 694-711). Springer, Cham.

- Silberman, N., Sontag, D., & Fergus, R. (2014, September). Instance segmentation of indoor scenes using a coverage loss. In European Conference on Computer Vision (pp. 616-631). Springer, Cham.

- Ilg, E., Mayer, N., Saikia, T., Keuper, M., Dosovitskiy, A., & Brox, T. (2017). FlowNet 2.0: Evolution of optical flow estimation with deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 2462-2470).

- He, K., Gkioxari, G., Dollár, P., & Girshick, R. (2017). Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (pp. 2961-2969).

* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.