-

For a complete list of all the papers and articles in this series, check out our Git repo

-

For a hands-on course, we highly recommend Coursera's brand-new GAN specialization

An important lesson from my journey in GANs is that you simply cannot start learning deep learning from GANs. There is a huge amount of background knowledge needed to understand each design choice. Every paper has its own creativity, which derives from a fundamental understanding of how deep learning really works. Proposing general solutions in generative learning is extremely tough. But once you start to focus on specific tasks, creativity has no ceiling in the game of designing a GAN. This is one of the reasons we chose to focus on computer vision. After a number of reviews, you start to realize that the top papers we include begin to make sense. It is indeed like a puzzle.

So, let's try to solve it!

In the previous post, we discussed 2K image-to-image translation, video-to-video synthesis, and large-scale class-conditional image generation, namely pix2pixHD, vid2vid, and BigGAN. In this part, we will start with unconditional image generation on ImageNet, exploiting the latest advances in self-supervised learning. Finally, we will focus on style incorporation via adaptive instance normalization. To do so, we will revisit concepts of in-layer normalization that will prove quite useful in our understanding of GANs.

In the previous post, we discussed class-conditional GANs (BigGAN) as well as image-conditioned ones (pix2pixHD) at length. These methods have achieved high quality at large resolutions, namely 512×512 and 2048×1024, respectively. However, we did not discuss the problems one may face when trying to scale GANs in an unconditional setup.

Self-Supervised GANs via Auxiliary Rotation Loss (Chen et al. 2019) is one of the first works to bring ideas from the field of self-supervised learning into generative adversarial learning at large scale. Moreover, it is innovative in introducing the notion of forgetting in GANs, as well as a method to counter it. Unlabeled data are abundant compared to human-annotated datasets, which are fairly limited. Therefore, it is a direction we want to explore.

Before we start, let's clarify one thing that may seem vague at first: self-supervision targets the discriminator, so that it learns meaningful feature representations. Through adversarial training, the generator is also affected by the injected self-supervised guidance. Now, let us begin by understanding the notion of self-supervision.

1. What is self-supervised learning?

Typically, GANs are considered a form of unsupervised learning. But why? The reason is basically that all training data have the label "real", while the generated ones have the label "fake" (at least for training D).

Unsupervised means that we have no information about the data, so we cannot simply create a loss function and backpropagate. GANs work around this by labeling all the training data as real. So, we cast the problem as binary classification: real vs. fake.

Unlabeled data exist in large quantities. What if we could produce "accurate and cheap labels" from the data itself? Since we are focusing on computer vision, we mean generating labels from the images or videos. In other words, we set learning objectives properly (on unlabeled data) so as to get supervision from the data itself. That is what self-supervised learning is all about!

With self-supervised learning, one can guide the training process via an invented supervised loss function. It is worth noting that we don't actually care about the results of the task we design. Instead, our goal is to learn meaningful intermediate representations. The assumption is that the learned representation has captured semantic or structural information relevant to the task we want to solve.

In simple terms, self-supervision refers to generating fairly accurate and computationally cheap labels.

Accurate refers to the fact that we want to be almost sure that the label is correct. Some may wonder why we don't use well-trained models to predict some kind of downstream label from the image. This can indeed be a good choice, but only if the model achieves very high accuracy, which is not always the case. Some successful large-scale examples can be found in Xie et al. 2019.

Cheap refers to the fact that we don't use humans to annotate. Usually, the label is generated from the image or video itself, and in principle any transformation is suitable. The big question is how to choose the self-supervised task. That is where it gets really creative. In essence, we rely heavily on the assumption that the task we choose guides the network toward representations that are meaningful for our desired task, which in our case is unconditional image generation. Finally, keep in mind that a self-supervised task is usually referred to as a pretext or proxy task.

Self-supervised rotation baseline

Let's start by examining the baseline, purely self-supervised approach of Gidaris et al. In our setting, each image is rotated by one of the angles {0, 90, 180, 270} degrees, and D tries to predict which rotation has been applied to the real image. For every image, all four rotations are applied, as illustrated in the image below.

Image taken from the original work of Gidaris et al., ICLR 2018.

In this way, the model (D in our case) is guided to learn semantic features by recognizing rotations.
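To make the pretext task concrete, here is a minimal NumPy sketch of how rotation labels can be generated for free from unlabeled images. It assumes images stored as an [N, H, W, C] array with square H = W; the function name `make_rotation_batch` is ours and does not come from Gidaris et al. or Chen et al. With 16 input images it yields a 64-image rotated batch, matching the CIFAR-10 batch described in the experiments.

```python
import numpy as np

# Rotation angles of the pretext task (degrees); their index is the class label.
ANGLES = (0, 90, 180, 270)


def make_rotation_batch(images: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Rotate every image by each angle in ANGLES.

    images: array of shape [N, H, W, C] with H == W.
    Returns (rotated, labels) with shapes [4N, H, W, C] and [4N],
    where labels[i] is the index of the rotation applied to rotated[i].
    """
    rotated, labels = [], []
    for label, angle in enumerate(ANGLES):
        # np.rot90 rotates counter-clockwise in the plane of the spatial axes (1, 2);
        # k is the number of 90-degree steps.
        rotated.append(np.rot90(images, k=angle // 90, axes=(1, 2)))
        labels.append(np.full(len(images), label, dtype=np.int64))
    return np.concatenate(rotated), np.concatenate(labels)


rng = np.random.default_rng(0)
batch = rng.normal(size=(16, 32, 32, 3))  # e.g. 16 CIFAR-10-sized images
x_rot, y_rot = make_rotation_batch(batch)
print(x_rot.shape, y_rot.shape)  # (64, 32, 32, 3) (64,)
```

The labels cost nothing to produce and are always correct, which is exactly the "accurate and cheap" property discussed above.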

GAN instability from forgetting

During training, GAN instability may arise from the fact that the generator and discriminator learn in a non-stationary environment. Let's see this through the math:
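The equation itself is missing from this version of the post. For reference, the standard GAN minimax objective (as introduced by Goodfellow et al., 2014), written with the symbols used in the next paragraph, where P_G is the distribution of generated images, is:

```latex
\min_G \max_D V(G, D) =
  \mathbb{E}_{x \sim P_{data}}\left[\log D(x)\right]
  + \mathbb{E}_{x \sim P_G}\left[\log\left(1 - D(x)\right)\right]
```

Every update of G moves P_G, so the second expectation that D is trained on keeps shifting.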

In the well-known equation, you probably noticed that P_G changes during training. It is the distribution of generated (hallucinated) images, and this is where the term non-stationary training comes from. It is known that in non-stationary online environments such as GANs, models forget previous tasks [Kirkpatrick et al. 2017]. Online refers to the alternating gradient descent training of G and D.

Let's look at an interesting experiment that clarifies this concept.

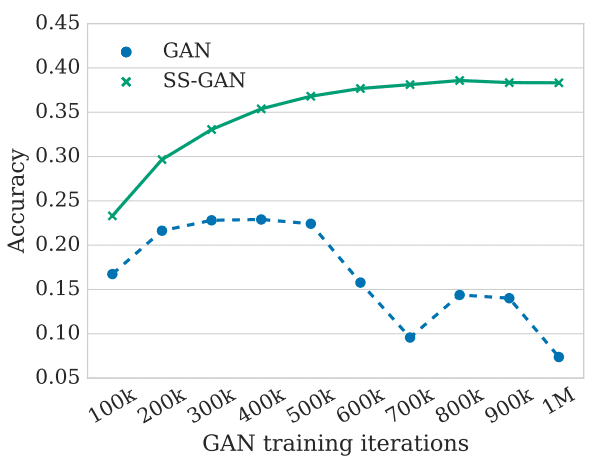

The authors trained the same model with and without self-supervision on ImageNet without labels (unconditional setup). As you can see in the figure, performance decreases after 500k iterations. This performance drop of the unconditional GAN indicates that information about the images (in this case the class label) is acquired and later forgotten. This is what we call forgetting, and it is related to training instability. By adding the right self-supervised task, information forgetting is limited.

Figure: Analyzing and understanding forgetting of D in the unconditional setup, taken from the original work.

One may wonder: how is this accuracy obtained? Every 100k iterations, they train offline a logistic regression classifier on the last feature maps of D. They then evaluate performance by classifying the 1000 ImageNet classes (or the 10 classes of CIFAR-10). As a matter of fact, ImageNet contains 1.3M images, so this phenomenon is quite intense.
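The evaluation protocol above is a so-called linear probe. Below is a minimal NumPy stand-in, not the authors' code: Gaussian clusters play the role of D's frozen last-layer features, and a plain multinomial logistic regression trained by gradient descent plays the role of the offline classifier. The names `train_linear_probe` and `probe_accuracy` are hypothetical.

```python
import numpy as np


def train_linear_probe(feats, labels, num_classes, lr=0.01, steps=200):
    """Fit a multinomial logistic regression on frozen features.

    feats: [N, D] features from a frozen network (synthetic here).
    labels: [N] integer class labels.
    Returns weights W [D, K] and bias b [K].
    """
    n, d = feats.shape
    W = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(steps):
        logits = feats @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n  # gradient of the mean cross-entropy w.r.t. logits
        W -= lr * feats.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b


def probe_accuracy(feats, labels, W, b):
    return float(np.mean(np.argmax(feats @ W + b, axis=1) == labels))


# Hypothetical "features": 10 well-separated clusters in 64 dimensions.
rng = np.random.default_rng(0)
num_classes, dim = 10, 64
centers = rng.normal(scale=3.0, size=(num_classes, dim))
labels = rng.integers(0, num_classes, size=2000)
feats = centers[labels] + rng.normal(size=(2000, dim))

W, b = train_linear_probe(feats[:1500], labels[:1500], num_classes)
acc = probe_accuracy(feats[1500:], labels[1500:], W, b)
```

Because only the linear layer is trained, the accuracy measures how much class information is linearly readable from D's features at a given checkpoint. A drop over training is the forgetting shown in the figure.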

Proposed solution: Collaborative Adversarial Training

As explained, G does not involve any rotation in its architecture. The generated images are only rotated in order to be fed into D. Apart from the adversarial zero-sum game, G and D collaborate on the auxiliary rotation task. Interestingly, D is trained to detect rotations based only on real data: even though generated images are also rotated, D's parameters are not updated by the rotation loss on them. Since G is encouraged to generate images that look like the real ones, it tends to produce rotation-detectable images. Consequently, D and G are collaborative with respect to the rotation task.

In more practical terms, the authors used a single D with two final heads, one for rotation detection and one for distribution discrimination (real vs. fake), Q_D and P_D respectively. Mathematically, this can be expressed as:
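The equation did not survive in this version of the post. Below is a reconstruction of the two objectives following Chen et al. 2019, written under our own sign convention: both G and D minimize their loss, V is the standard GAN value function that D maximizes, and Q_D(R = r | x^r) is the rotation head's predicted probability that x^r was rotated by r.

```latex
L_G = V(G, D) - \alpha \, \mathbb{E}_{x \sim P_G} \, \mathbb{E}_{r \sim R}\left[\log Q_D\left(R = r \mid x^r\right)\right]

L_D = -V(G, D) - \beta \, \mathbb{E}_{x \sim P_{data}} \, \mathbb{E}_{r \sim R}\left[\log Q_D\left(R = r \mid x^r\right)\right]
```

The rotation term on generated images (weighted by α) appears only in G's loss, and the one on real images (weighted by β) only in D's loss.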

In this equation, V is the well-known adversarial criterion, R is the set of possible rotations, r is the selected rotation, x^r is the image x rotated by r, and α, β are hyperparameters. Note that the G loss involves the rotation detection of the generated images. In this way, G learns to generate images whose representations in the feature space of D allow detecting rotations. However, this additional constraint does not guarantee convergence to the true data distribution. That is why α starts from a positive value around 0.2 and is slowly reduced to zero, while β is simply set to 1.
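A minimal NumPy sketch of how the two rotation terms enter the losses. Assumptions (ours, not the paper's code): the adversarial part uses the hinge loss mentioned in the experiments section, the rotation head outputs 4 logits per image, and α decays linearly from 0.2 to 0 over a hypothetical `decay_steps`; the text above only says that α is slowly reduced.

```python
import numpy as np


def rotation_ce(logits, labels):
    """Mean cross-entropy of the 4-way rotation head, i.e. -E[log Q_D(R = r | x^r)]."""
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())


def alpha_schedule(step, alpha0=0.2, decay_steps=100_000):
    """Hypothetical linear decay of alpha from alpha0 down to 0."""
    return alpha0 * max(0.0, 1.0 - step / decay_steps)


def ssgan_losses(d_real, d_fake, rot_logits_real, rot_labels_real,
                 rot_logits_fake, rot_labels_fake, step, beta=1.0):
    """Return (loss_D, loss_G); both are minimized.

    d_real, d_fake: scores of the real/fake head P_D for real and generated images.
    The rotation loss on REAL images trains D; the rotation loss on
    GENERATED images is only added to G's loss.
    """
    # Hinge adversarial losses, as in Miyato et al.
    loss_d_adv = np.mean(np.maximum(0.0, 1.0 - d_real)) + np.mean(np.maximum(0.0, 1.0 + d_fake))
    loss_g_adv = -np.mean(d_fake)
    loss_d = loss_d_adv + beta * rotation_ce(rot_logits_real, rot_labels_real)
    loss_g = loss_g_adv + alpha_schedule(step) * rotation_ce(rot_logits_fake, rot_labels_fake)
    return float(loss_d), float(loss_g)
```

Once α reaches zero, G is driven by the adversarial term alone, which is the point of the decay: the rotation constraint helps early on but should not bias the final distribution.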

Results and discussion

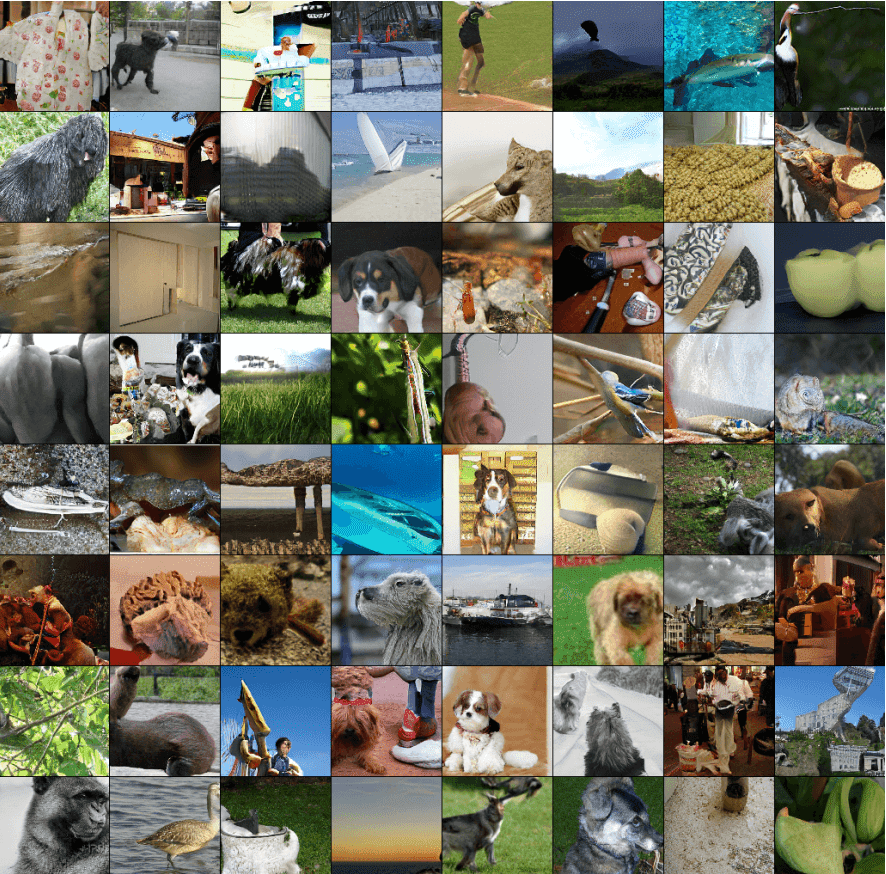

In the experiments, the authors used ResNet architectures for G and D, similar to Miyato et al., as well as their hinge loss to discriminate the real images. In addition, for smaller datasets like CIFAR-10 they used a batch size of 64, which is basically 16 images, each rotated by every angle in {0, 90, 180, 270}. For the experiments on ImageNet, they trained the model on 128 TPU cores, using a batch size of 2048 and images resized to 128×128 resolution. Let's see some images:

Randomly generated images from the model trained on ImageNet at 128×128 resolution, as reported in the original work.

The core finding is that, under the same training conditions, the self-supervised GAN closes the gap in natural image synthesis between unconditional and conditional models. In other words, self-supervised GANs can match equivalent conditional GANs on the task of image synthesis, without having access to labeled data.

One possible explanation for the worse performance of the baseline model (named Cond-GAN) is that it overfits the training data. One could check this by comparing the representation performance of Cond-GAN on the training data. The authors claim to have found a generalization gap, which possibly indicates overfitting.

In the opposite direction, when they remove the adversarial loss and keep only the rotation loss, the representation quality significantly decreases. This study indicates that the adversarial and rotation losses probably complement each other, in terms of both quantitative and qualitative results. Finally, we highlight that representation quality and image quality are related. This work shows with clear evidence that the idea of self-supervised GANs is not trivial, as the proposed model does learn powerful image representations.

StyleGAN (A Style-Based Generator Architecture for Generative Adversarial Networks, 2018)

Building on our understanding of GANs, instead of just generating images, we will now be able to control their style! How cool is that? But wait a minute. We already saw in part 1 (InfoGAN) that controlling image generation relies on disentangled representations. Let's see how one can design such a network step by step.

This work depends heavily on Progressive GANs, Adaptive Instance Normalization (AdaIN), and style transfer. We already covered Progressive GANs in the previous part, so let's dive into the rest before we focus on understanding this work.

Understanding feature-space normalization and style transfer

The human visual system is strongly attuned to image statistics. It is known that spatially invariant statistics, such as the channel-wise mean and variance, reliably encode the style of an image. Meanwhile, spatially varying features encode a specific instance.

Batch normalization

Batch Normalization (BN) normalizes the mean and standard deviation of each individual feature channel. The mean and standard deviation of image features are first- and second-order statistics that relate to global, visually salient characteristics such as style. By computing these statistics over all the images in the batch, we somehow mix their global characteristics. This strategy is great when we want our representation to share these characteristics, as in downstream tasks such as image classification. Mathematically, this can be expressed as:
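The equation did not survive extraction. A standard form, consistent with the symbols defined in the next paragraph (and with Ioffe & Szegedy 2015), is shown below, where ε is a small constant for numerical stability:

```latex
\mathrm{BN}(x) = \gamma \left( \frac{x - \mu(x)}{\sigma(x)} \right) + \beta

\mu_c(x) = \frac{1}{NHW} \sum_{n=1}^{N} \sum_{h=1}^{H} \sum_{w=1}^{W} x_{nchw}

\sigma_c(x) = \sqrt{ \frac{1}{NHW} \sum_{n=1}^{N} \sum_{h=1}^{H} \sum_{w=1}^{W} \left( x_{nchw} - \mu_c(x) \right)^2 + \epsilon }
```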

N is the number of images in the batch, H the height, and W the width. The Greek letter μ() denotes the mean and σ() the standard deviation. γ and β are the trainable parameters of the linear (affine) transformation, which differ per channel; specifically, γ and β are vectors with the channel dimensionality. The batch features x have shape [N, C, H, W], where the index c denotes the channel over which the statistics are computed. Notably, the averaging runs over the spatial dimensions as well as over the image batch. This way, we concentrate our features in a compact space, which is usually beneficial.

However, in terms of style and global characteristics, all samples in the batch share the same learned parameters, namely γ and β. Therefore, BN can be intuitively understood as normalizing a batch of images to be centered around a single style. Still, the convolutional layers are able to learn some intra-batch style variations, so each sample may still end up with a different style. This is undesirable, for example, if you want to transfer all images to the same shared style (e.g., Van Gogh style).

But what if we don't mix the characteristics of the feature batch?

Instance normalization

Unlike the BN layer, Instance Normalization (IN) computes the statistics only across the spatial dimensions of the features, again independently for each channel (and each sample). In fact, we just remove the sum over N in the previous equation. Surprisingly, it has been shown experimentally that the affine parameters in IN can completely change the style of the output image. As opposed to BN, IN can normalize the style of each individual sample to a target style (modeled by γ and β). As a result, training a model to transfer to a specific style is easier, because the rest of the network can focus its learning capacity on content manipulation and local details, while discarding the original global ones (i.e., the style information).
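The only difference between BN and IN is the set of axes over which μ and σ are computed. This minimal NumPy sketch (function names are ours) makes that explicit for [N, C, H, W] features:

```python
import numpy as np


def batch_norm(x, gamma, beta, eps=1e-5):
    """x: [N, C, H, W]. Statistics are shared across the batch (axes N, H, W)."""
    mu = x.mean(axis=(0, 2, 3), keepdims=True)
    sigma = np.sqrt(x.var(axis=(0, 2, 3), keepdims=True) + eps)
    return gamma[None, :, None, None] * (x - mu) / sigma + beta[None, :, None, None]


def instance_norm(x, gamma, beta, eps=1e-5):
    """x: [N, C, H, W]. Statistics are per sample and per channel (axes H, W only)."""
    mu = x.mean(axis=(2, 3), keepdims=True)
    sigma = np.sqrt(x.var(axis=(2, 3), keepdims=True) + eps)
    return gamma[None, :, None, None] * (x - mu) / sigma + beta[None, :, None, None]


rng = np.random.default_rng(0)
# Four "images" whose channels have very different per-sample means and scales (styles).
x = (rng.normal(size=(4, 3, 8, 8)) * rng.uniform(0.5, 5, size=(4, 3, 1, 1))
     + rng.normal(scale=10, size=(4, 3, 1, 1)))
gamma, beta = np.ones(3), np.zeros(3)

bn, inn = batch_norm(x, gamma, beta), instance_norm(x, gamma, beta)
# After IN, every sample/channel has ~zero mean; after BN only the batch average does.
print(np.abs(inn.mean(axis=(2, 3))).max())
print(np.abs(bn.mean(axis=(2, 3))).max())
```

After IN, each sample's per-channel statistics are fully determined by γ and β, which is why these parameters alone can set the output style.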

In this way, by introducing a set of multiple γ and β pairs, one can design a network that models a plethora of (finitely many) styles, which is exactly the case of conditional instance normalization.
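Conditional instance normalization can be sketched as IN with a lookup table of (γ, β) pairs, one row per style. In this minimal NumPy version the class name and the random initialization are ours; in a real network the tables would be learned jointly with the rest of the model.

```python
import numpy as np


class ConditionalInstanceNorm:
    """Instance normalization with one (gamma, beta) pair per style."""

    def __init__(self, num_styles, num_channels, eps=1e-5, seed=0):
        rng = np.random.default_rng(seed)
        # Placeholder parameters; these would be trainable in practice.
        self.gamma = 1.0 + 0.1 * rng.normal(size=(num_styles, num_channels))
        self.beta = 0.1 * rng.normal(size=(num_styles, num_channels))
        self.eps = eps

    def __call__(self, x, style_ids):
        """x: [N, C, H, W]; style_ids: [N] integers selecting a style per sample."""
        mu = x.mean(axis=(2, 3), keepdims=True)
        sigma = np.sqrt(x.var(axis=(2, 3), keepdims=True) + self.eps)
        g = self.gamma[style_ids][:, :, None, None]  # [N, C, 1, 1]
        b = self.beta[style_ids][:, :, None, None]
        return g * (x - mu) / sigma + b


cin = ConditionalInstanceNorm(num_styles=5, num_channels=3)
x = np.random.default_rng(1).normal(size=(2, 3, 8, 8))
y = cin(x, style_ids=np.array([0, 4]))  # sample 0 gets style 0, sample 1 gets style 4
```

The limitation is visible in the constructor: the number of styles is fixed in advance, which is what AdaIN removes.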

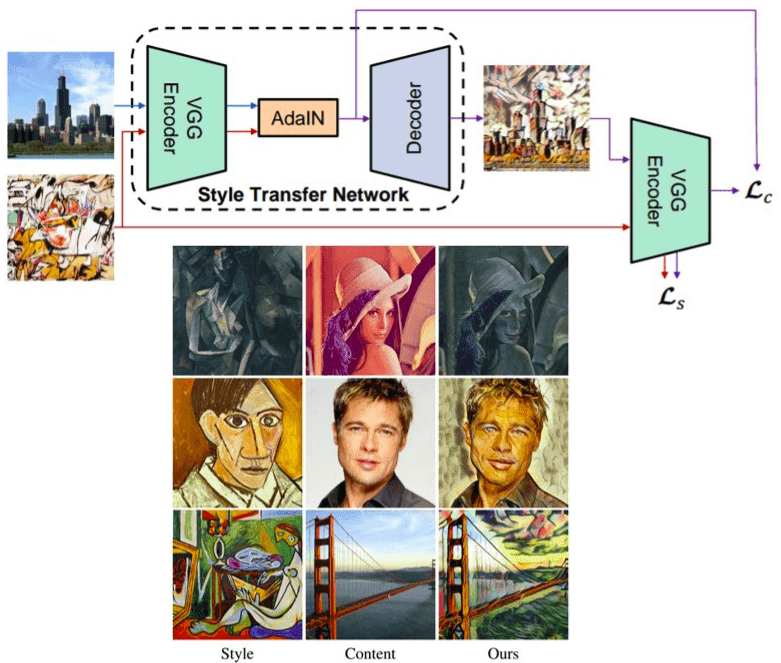

Adaptive Instance Normalization (AdaIN)

The idea of transferring the style of another image now starts to feel natural. What if γ and β are injected from the feature statistics of another image? In this way, we can model any arbitrary style by simply using the mean of the desired style features as β and their standard deviation as γ. AdaIN does exactly that: it receives a content input x and a style input y, and simply aligns the channel-wise mean and variance of x to match those of y. Mathematically:
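The formula is missing here. As given by Huang & Belongie (2017), AdaIN has no learnable affine parameters; it plugs in the style input's statistics, which, like μ(x) and σ(x), are computed per sample and per channel over the spatial dimensions, exactly as in instance normalization:

```latex
\mathrm{AdaIN}(x, y) = \sigma(y) \left( \frac{x - \mu(x)}{\sigma(x)} \right) + \mu(y)
```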

That's all! So what can we do with just a single layer and this minor modification? Let us see!

Architecture and results using AdaIN. Borrowed from the original work.

In the upper part, you see a simple encoder-decoder architecture with an extra AdaIN layer for style alignment. In the lower part, you see some results of this amazing idea! To summarize, AdaIN performs style transfer (in the feature space) by aligning the channel-wise mean and standard deviation (μ and σ), at no extra cost in terms of complexity. If you want to play around with this idea, code is available here (official) and here (unofficial).
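The AdaIN layer itself fits in a few lines. This is a minimal NumPy sketch on [N, C, H, W] feature maps; the random arrays are stand-ins for what an encoder would produce from the content and style images, and no encoder or decoder is implemented here.

```python
import numpy as np


def adain(content, style, eps=1e-5):
    """Adaptive Instance Normalization on [N, C, H, W] feature maps.

    Normalizes each content channel to zero mean / unit std over (H, W),
    then rescales and shifts it with the style features' per-channel std and mean.
    """
    c_mu = content.mean(axis=(2, 3), keepdims=True)
    c_sigma = np.sqrt(content.var(axis=(2, 3), keepdims=True) + eps)
    s_mu = style.mean(axis=(2, 3), keepdims=True)
    s_sigma = np.sqrt(style.var(axis=(2, 3), keepdims=True) + eps)
    return s_sigma * (content - c_mu) / c_sigma + s_mu


rng = np.random.default_rng(0)
content_feats = rng.normal(size=(1, 4, 16, 16))          # stand-in for encoder(content image)
style_feats = 3.0 * rng.normal(size=(1, 4, 8, 8)) + 2.0  # stand-in for encoder(style image)
out = adain(content_feats, style_feats)
```

Note that the style features may have a different spatial size than the content features, since only their channel-wise statistics are used. The spatial layout of the output comes entirely from the content.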

The style-based generator

Let's return to our original goal of understanding StyleGAN. Basically, in this work NVIDIA completely nailed our understanding and design of most GAN generators. Let's see how.

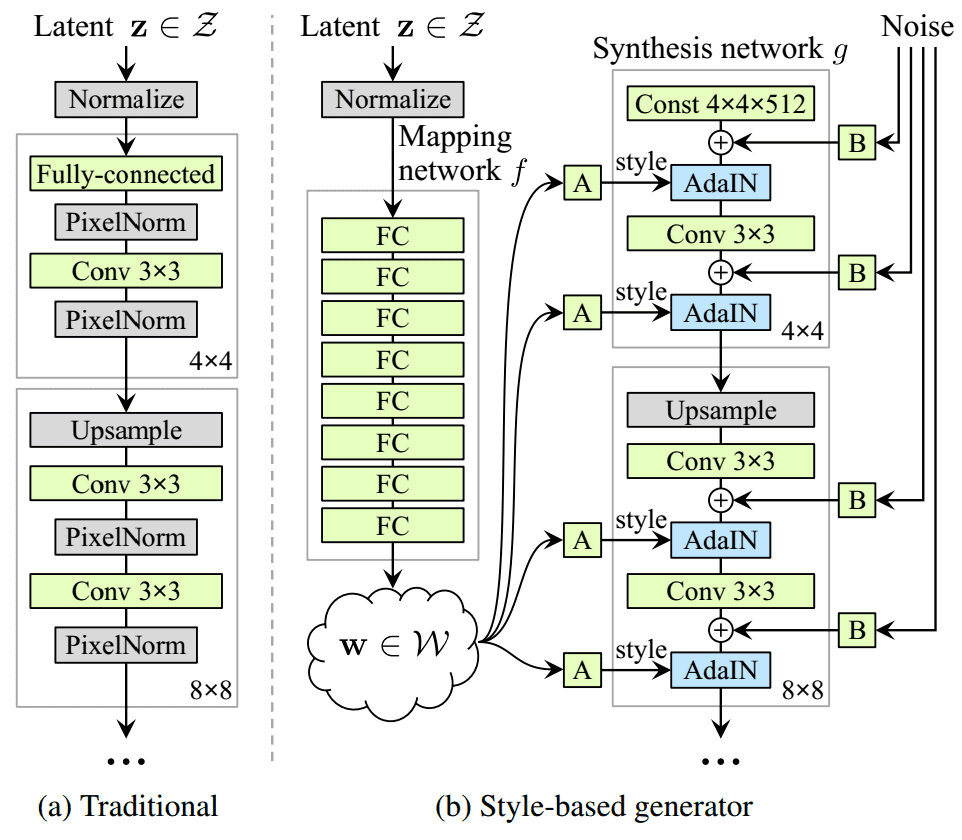

In a typical GAN generator, the sampled input latent vector z is projected and reshaped, so that it can be further processed by transposed convolutions or by upsampling layers with or without convolutions. Here, instead, the latent vector is transformed by a series of fully connected layers, the so-called mapping network f! This results in another learned vector w, which lives in the so-called intermediate latent space W. But why would anyone do that?

The awesome idea of a style-based generator. Taken from the original StyleGAN paper.

Mapping network f

The main reason for this choice is that the intermediate latent space W does not have to support sampling according to any fixed distribution; its sampling density is induced by the learned, continuous mapping. This mapping f "unwraps" the space of W, so it implicitly enforces a kind of disentangled representation, meaning that the factors of variation become more linear. The authors argue that it is easier to generate realistic images from a disentangled representation than from an entangled one. With this totally unsupervised trick, we at least expect W to be less entangled than the Z space. Let's move on to the block labeled A in the figure.
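A minimal NumPy sketch of the mapping network f, assuming the configuration reported in the StyleGAN paper: 512-dimensional z and w and 8 fully connected layers. The leaky ReLU slope of 0.2 and the normalization of z at the input follow our understanding of the official implementation and should be treated as assumptions. The weights are random placeholders, since training is out of scope.

```python
import numpy as np


def pixel_norm(z, eps=1e-8):
    """Normalize each latent vector to unit average squared magnitude."""
    return z / np.sqrt(np.mean(z ** 2, axis=1, keepdims=True) + eps)


def leaky_relu(x, slope=0.2):
    return np.where(x >= 0, x, slope * x)


def init_mapping_network(dim=512, num_layers=8, seed=0):
    """Random (He-scaled) weights for an 8-layer MLP; in StyleGAN they are learned."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(scale=np.sqrt(2.0 / dim), size=(dim, dim)), np.zeros(dim))
            for _ in range(num_layers)]


def mapping_network(z, layers):
    """f: Z -> W, mapping z [N, dim] to w [N, dim]."""
    h = pixel_norm(z)
    for weight, bias in layers:
        h = leaky_relu(h @ weight + bias)
    return h


layers = init_mapping_network()
z = np.random.default_rng(1).normal(size=(4, 512))
w = mapping_network(z, layers)
print(w.shape)  # (4, 512)
```

Nothing forces w to follow a Gaussian: its distribution is whatever the learned f induces, which is exactly the freedom described above.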

A block: the style features

Then, the vector w is passed through a learned affine transformation (A), whose output is split in half into two sub-vectors (y_s and y_b in the paper). These correspond to the style feature statistics (scale and bias) of the adaptive instance normalization (AdaIN) operations. AdaIN is placed after each convolutional layer of the synthesis network, and each such block is used to model a different style variation in the same image.
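A minimal NumPy sketch of an A block feeding AdaIN, following the paper's formula AdaIN(x_i, y) = y_s,i (x_i - μ(x_i)) / σ(x_i) + y_b,i. The parameter values are hypothetical placeholders; initializing the scale bias to 1 (so that styles start close to identity) is our assumption.

```python
import numpy as np


def style_affine(w, weight, bias):
    """Learned affine transform 'A': w [N, D] -> (y_s, y_b), each [N, C]."""
    y = w @ weight + bias              # [N, 2C]
    y_s, y_b = np.split(y, 2, axis=1)  # first half: scales, second half: biases
    return y_s, y_b


def adain_with_style(x, y_s, y_b, eps=1e-8):
    """Normalize each channel of x [N, C, H, W], then apply the style scale and bias."""
    mu = x.mean(axis=(2, 3), keepdims=True)
    sigma = np.sqrt(x.var(axis=(2, 3), keepdims=True) + eps)
    return y_s[:, :, None, None] * (x - mu) / sigma + y_b[:, :, None, None]


rng = np.random.default_rng(0)
N, D, C = 2, 512, 64
w = rng.normal(size=(N, D))
# Hypothetical parameters of A (learned in StyleGAN).
weight = rng.normal(scale=0.01, size=(D, 2 * C))
bias = np.concatenate([np.ones(C), np.zeros(C)])
y_s, y_b = style_affine(w, weight, bias)
x = rng.normal(size=(N, C, 8, 8))
out = adain_with_style(x, y_s, y_b)
```

Each layer has its own A, so the same w is "specialized" into a different style for every layer.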

B block: noise

Additionally, the authors provide G with explicit noise inputs, as a direct means of modeling stochastic details. The B blocks are single-channel images of uncorrelated Gaussian noise. They are added as noise images to each layer of the synthesis network, and each single noise image is broadcast to all feature maps.

Synthesis network g

The synthesis network g consists of blocks ranging from 4×4 to 1024×1024, similar to the baseline architecture of Progressive Growing GANs. To move from one block to the next, the spatial dimension is doubled by bilinear upsampling layers; similarly, bilinear downsampling is performed in D. The blocks are added incrementally, based on the intuition that the network first learns coarse, low-resolution information. In this way, the new blocks model finer details.

Now, let's look inside the synthesis block. A fundamental difference is that the initial low-dimensional input of network g is a learned constant tensor of 4×4×512, instead of a projected and reshaped random sampling vector.

Apart from the initial block, each block starts with an upsampling layer that doubles the spatial resolution. Then, convolution layers follow. After each convolution, 2D per-pixel noise is added to model stochasticity. Finally, the magic AdaIN layer injects the style, derived from the learned intermediate latent vector w.
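The pieces can be put together in a toy NumPy forward pass: a constant 4×4 input, then (noise, AdaIN), conv, (noise, AdaIN), followed by an 8×8 block of upsample and two (conv, noise, AdaIN) layers. This is a sketch under several assumptions: tiny channel counts instead of 512, nearest-neighbour instead of bilinear upsampling, random placeholder parameters, a leaky ReLU that the figure does not show, and learned per-channel noise scales (the paper's B blocks) fixed to 0.1. All helper names are ours.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of [N, C, H, W] (the paper uses bilinear)."""
    return x.repeat(2, axis=2).repeat(2, axis=3)


def conv3x3(x, weight):
    """Naive 'same' 3x3 convolution. x: [N, Cin, H, W], weight: [Cout, Cin, 3, 3]."""
    n, _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (0, 0), (1, 1), (1, 1)))
    out = np.zeros((n, weight.shape[0], h, w))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("nchw,oc->nohw", xp[:, :, dy:dy + h, dx:dx + w], weight[:, :, dy, dx])
    return out


def adain(x, y_s, y_b, eps=1e-8):
    mu = x.mean(axis=(2, 3), keepdims=True)
    sigma = np.sqrt(x.var(axis=(2, 3), keepdims=True) + eps)
    return y_s[:, :, None, None] * (x - mu) / sigma + y_b[:, :, None, None]


def make_layer(rng, c, w_dim):
    """Hypothetical random parameters for one conv + noise (B) + style (A) layer."""
    return {
        "conv": rng.normal(scale=np.sqrt(2.0 / (9 * c)), size=(c, c, 3, 3)),
        "noise_scale": np.full(c, 0.1),
        "a_weight": rng.normal(scale=0.01, size=(w_dim, 2 * c)),
        "a_bias": np.concatenate([np.ones(c), np.zeros(c)]),
    }


def styled_layer(x, w, p, rng, do_conv=True):
    if do_conv:
        x = conv3x3(x, p["conv"])
    # B: one single-channel noise image, broadcast to all channels with per-channel scales.
    noise = rng.normal(size=(x.shape[0], 1, x.shape[2], x.shape[3]))
    x = x + p["noise_scale"][None, :, None, None] * noise
    x = np.where(x >= 0, x, 0.2 * x)  # leaky ReLU (assumed activation)
    # A: affine map of w into per-channel style scales and biases, then AdaIN.
    y_s, y_b = np.split(w @ p["a_weight"] + p["a_bias"], 2, axis=1)
    return adain(x, y_s, y_b)


rng = np.random.default_rng(0)
N, C, W_DIM = 2, 8, 16  # tiny sizes for illustration (StyleGAN uses 512)
w = rng.normal(size=(N, W_DIM))
const = np.ones((1, C, 4, 4))  # learned constant in StyleGAN; fixed here

# 4x4 block: constant input -> (noise, AdaIN) -> conv -> (noise, AdaIN)
x = np.broadcast_to(const, (N, C, 4, 4))
x = styled_layer(x, w, make_layer(rng, C, W_DIM), rng, do_conv=False)
x = styled_layer(x, w, make_layer(rng, C, W_DIM), rng)
# 8x8 block: upsample, then two (conv, noise, AdaIN) layers
x = upsample2x(x)
for _ in range(2):
    x = styled_layer(x, w, make_layer(rng, C, W_DIM), rng)
print(x.shape)  # (2, 8, 8, 8)
```

Note that z never enters the synthesis network directly: every sample starts from the same constant, and all per-sample variation comes from w (through A) and from the noise (through B).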

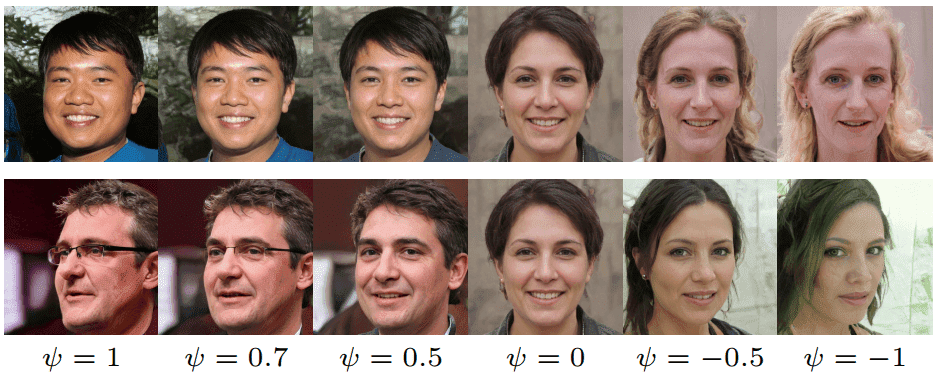

Style mixing and truncation tricks

Instead of truncating the latent vector z as in BigGAN, they apply truncation in the intermediate latent space W. This is done as w' = E(w) + ψ · (w - E(w)), where E(w) = E(f(z)) and E denotes the expected value. The important parameter that controls sample quality is ψ. As it approaches 0, the sampled faces converge to the mean face of the dataset. Truncation in W space seems to work well, as illustrated in the image below:

Taken from the original StyleGAN paper

As perfectly described in the original paper: "It is interesting that various high-level attributes often flip between the opposites, including viewpoint, glasses, age, coloring, hair length, and often gender."
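The truncation trick in W takes one line once E(w) is known. In this NumPy sketch, the stand-in mapping `f` is hypothetical, and E(w) is estimated by averaging many mapped samples (a running average tracked during training would serve the same purpose).

```python
import numpy as np


def truncate(w, w_avg, psi):
    """Truncation trick in W: w' = w_avg + psi * (w - w_avg).

    psi = 1 leaves w unchanged; psi = 0 collapses every sample to w_avg
    (the 'average face'); 0 < psi < 1 trades diversity for quality.
    """
    return w_avg + psi * (w - w_avg)


rng = np.random.default_rng(0)
A = rng.normal(size=(16, 16)) / 4.0


def f(z):
    """Hypothetical stand-in for the mapping network: a leaky-ReLU of a linear map."""
    h = z @ A
    return np.where(h >= 0, h, 0.2 * h)


# E(w) = E_z[f(z)], estimated from many samples.
w_avg = f(rng.normal(size=(10_000, 16))).mean(axis=0)
w = f(rng.normal(size=(4, 16)))
w_truncated = truncate(w, w_avg, psi=0.7)
```

Each truncated latent is pulled toward w_avg along a straight line, so its distance to the average shrinks by exactly the factor ψ.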

Another trick that was introduced is style mixing. Sampling two latent codes from Z, they generate two intermediate latent vectors, w1 and w2, corresponding to two styles. w1 is applied before the crossover point and w2 after it, where the crossover point is a randomly selected layer of the synthesis network. This regularization trick prevents the network from assuming that adjacent styles are correlated. A percentage of the generated training images (90% in the paper) use this trick, with the crossover point chosen at random each time.
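Style mixing amounts to choosing, per layer, which latent feeds that layer's A block. In this minimal NumPy sketch, the function name is ours, and 18 style layers corresponds to two per resolution from 4×4 to 1024×1024.

```python
import numpy as np


def mix_styles(w1, w2, num_layers, rng, mixing_prob=0.9):
    """Return per-layer latents [num_layers, D] for one image.

    With probability mixing_prob, pick a random crossover layer k:
    layers < k use w1 and layers >= k use w2. Otherwise all layers use w1.
    """
    per_layer = np.repeat(w1[None, :], num_layers, axis=0)
    if rng.random() < mixing_prob:
        k = rng.integers(1, num_layers)  # crossover in [1, num_layers - 1], so both styles are used
        per_layer[k:] = w2
    return per_layer


rng = np.random.default_rng(0)
w1, w2 = rng.normal(size=512), rng.normal(size=512)
styles = mix_styles(w1, w2, num_layers=18, rng=rng)  # row i feeds the A block of layer i
```

Because the switch can happen at any layer, no layer can rely on its neighbours having received the same style.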

Looking through the design choices of the style-based generator

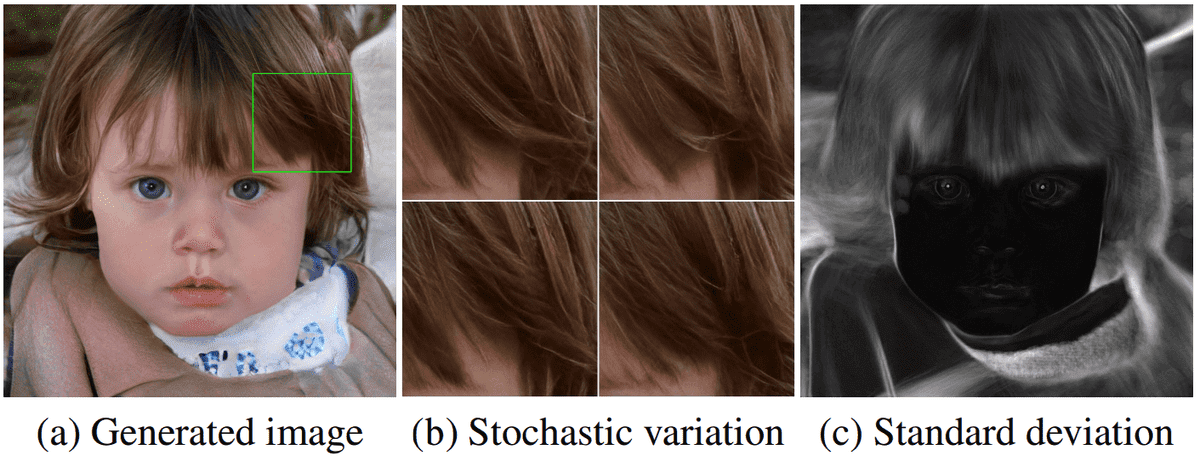

Each injected style and noise input (blocks A and B) is localized in the network. This means that modifying a specific subset of styles or noises is expected to affect only certain aspects of the image.

Style: Let's see the reason for this localization, starting with style. We saw in detail that the AdaIN operation first normalizes each channel to zero mean and unit variance, and then applies the style-based scales and biases. In this way, the feature statistics for the next convolution are modified. Put simply, the previous statistics (styles) are discarded at the next AdaIN layer. Thus, each style controls only one convolution before being overridden by the next AdaIN operation.

Noise: In a traditional generator, the latent vector z is fed only at the input of the network, so the input layer is the only source of stochastic variation. This is considered sub-optimal, because the network has to invent a way to generate spatially varying pseudo-random numbers from earlier activations, which consumes the generator's learning capacity.

By adding per-pixel noise after each convolution, the authors show experimentally that the effect of noise is localized in the network. Fresh noise is available at every layer, similar to BigGAN, and thus there is no incentive to generate the stochastic effects from earlier activations.

Moreover, each localized style still affects the entire image. Since AdaIN is applied to whole feature maps, global effects such as pose and lighting can be controlled. More importantly, they are coherent, because style is a global attribute. In parallel, the noise is added independently to each pixel and is thus ideally suited to controlling small, local stochastic variations. As a consequence, the global (style) and local (noise) effects are learned without additional, explicit guidance to the network.

All of the above is illustrated below:

Taken from the original StyleGAN paper

On the left, we have the generated image. In the middle, four different noise realizations are applied to a specific sub-region. On the right, the standard deviation over a large set of samples with different noise can be observed.

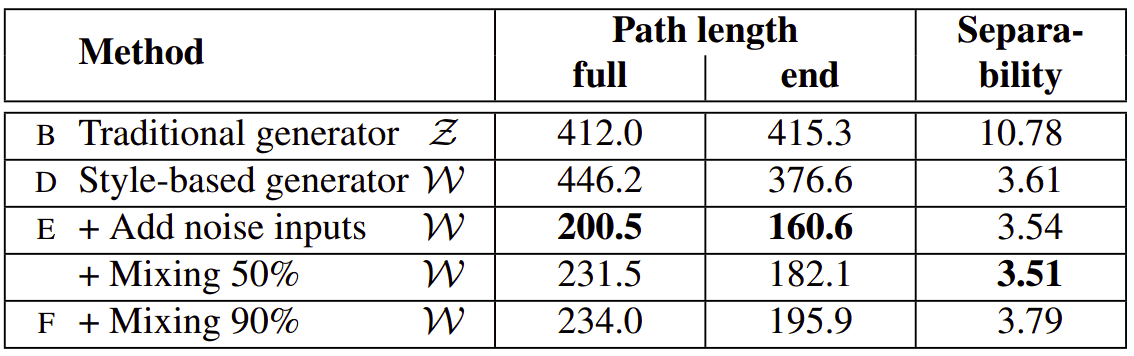

Quantifying disentanglement of spaces

The awesomeness comes from the fact that they were also able to quantify the disentanglement of spaces for the first time! Because if you can't count it, it doesn't exist! To this end, they introduce two new ways of quantifying disentanglement.

Perceptual path length

If you don't feel comfortable with entangled and disentangled representations, you can revisit InfoGAN. In very simple terms, entangled means mixed, while disentangled means encoded but separable in some way. I like to think of disentangled representations as a kind of decoded, lower-dimensional information about the data.

Let us suppose that we have two latent space vectors and we want to interpolate between them. How could we possibly tell whether this "walk in the latent space" corresponds to an entangled or a disentangled representation? Intuitively, a less curved latent space should result in a perceptually smoother transition, as observed in the image.

Interpolation of latent-space vectors can tell us a lot. For instance, non-linear, non-smooth, and sharp changes in the image may appear. What would you call such a representation? For example, features that are absent in both endpoints may appear in the middle of a linear interpolation path. This is a sign of a messy world, namely an entangled representation.

The quantification is expressed in terms of a small step ε in the latent space. If we subdivide a latent-space interpolation path into small segments, we can measure a distance between two steps, namely t and t+ε, where t ∈ [0,1]. However, it is more meaningful to measure the distance on the generated images, so the distance is measured in image space. One can then accumulate the distances over all the steps needed to walk between two random latent samples z1 and z2. Specifically, in this work they measure the pairwise distance between two VGG network embeddings. Mathematically, this can be described as (slerp denotes spherical interpolation):
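The formula did not survive here. As defined in the StyleGAN paper, the perceptual path length in Z is the expectation below, with z1, z2 ~ P(z), t ~ U(0, 1), G = g ∘ f the full generator, d the VGG-based perceptual distance, and ε a small step (10^-4 in the paper). For W, slerp is replaced by linear interpolation (lerp) between f(z1) and f(z2), and the generator is g alone.

```latex
l_Z = \mathbb{E}\left[ \frac{1}{\epsilon^2} \, d\Big( G\big(\mathrm{slerp}(z_1, z_2; t)\big),\; G\big(\mathrm{slerp}(z_1, z_2; t + \epsilon)\big) \Big) \right]
```

The 1/ε² factor reflects that d behaves like a squared distance, so the quantity stays finite as ε shrinks.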

Interestingly, they found that adding noise roughly halves the path length (average distance), while style mixing increases it slightly (+10%). Furthermore, this measurement shows that the 8-layer fully connected architecture clearly produces an intermediate latent space W that is more disentangled than Z.
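To make the perceptual path length concrete, here is a Monte Carlo sketch in NumPy. The `generator` and `distance` arguments are placeholders: in the paper they are the StyleGAN generator and a VGG-based perceptual distance, while the usage below plugs in an identity "generator" and a squared Euclidean distance purely for illustration.

```python
import numpy as np


def slerp(a, b, t):
    """Spherical interpolation between rows of a and b ([N, D]) at fractions t ([N]).

    Inputs are first normalized to unit length, so the path lies on the unit sphere.
    """
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    omega = np.arccos(np.clip(np.sum(a * b, axis=1), -1.0, 1.0))[:, None]  # angle between a and b
    t = t[:, None]
    return (np.sin((1.0 - t) * omega) * a + np.sin(t * omega) * b) / np.sin(omega)


def perceptual_path_length(generator, distance, num_samples, dim, rng, eps=1e-4):
    """Estimate E[d(G(slerp(z1, z2; t)), G(slerp(z1, z2; t + eps))) / eps^2]."""
    z1 = rng.normal(size=(num_samples, dim))
    z2 = rng.normal(size=(num_samples, dim))
    t = rng.uniform(0.0, 1.0, size=num_samples)
    img_a = generator(slerp(z1, z2, t))
    img_b = generator(slerp(z1, z2, t + eps))
    return float(np.mean(distance(img_a, img_b) / eps ** 2))


def identity(z):
    return z  # hypothetical stand-in for G


def sq_dist(p, q):
    return np.sum((p - q) ** 2, axis=1)  # stand-in for the VGG-based distance


ppl = perceptual_path_length(identity, sq_dist, 2000, 512, np.random.default_rng(0))
```

With these stand-ins the estimate should be close to (π/2)², about 2.47, because random high-dimensional directions are nearly orthogonal. A generator that changes the image abruptly along the path would yield a much larger value.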

Linear separability

Let’s see how this works.

-

We start by generating 200K images with z ∼ P(z) and classifying them with an auxiliary classification network, which gives a label Y.

-

We keep the 50% of samples with the highest confidence score. This results in 100K high-confidence, auto-labeled (Y) latent-space vectors: z for Progressive GAN and w for StyleGAN.

-

We fit a linear SVM to predict the label Y based only on the latent-space point (z for Progressive GAN, w for StyleGAN) and classify the points by this hyperplane.

-

We compute the conditional entropy H(Y | X), where X represents the classes predicted by the SVM and Y the classes determined by the classifier.

-

We calculate the separability score as exp(Σ_i H(Y_i | X_i)), summing over all the given attributes of the dataset, i.e. we fit one model per attribute. Note that the CelebA dataset contains 40 attributes, such as gender.
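The last two steps can be sketched in NumPy as follows. We assume the SVM predictions X are already available (for example from a linear SVM such as scikit-learn's `LinearSVC`), so only the entropy and score computations are shown; we use natural logarithms, matching the exp in the score. The function names are ours.

```python
import numpy as np


def conditional_entropy(y, x):
    """H(Y | X) in nats for discrete label arrays y (classifier) and x (SVM prediction)."""
    h = 0.0
    for xv in np.unique(x):
        mask = x == xv
        p_x = mask.mean()
        _, counts = np.unique(y[mask], return_counts=True)
        p_y_given_x = counts / counts.sum()
        h -= p_x * np.sum(p_y_given_x * np.log(p_y_given_x))
    return float(h)


def separability_score(per_attribute_pairs):
    """exp(sum_i H(Y_i | X_i)) over attributes; each item is a (y_i, x_i) pair."""
    return float(np.exp(sum(conditional_entropy(y, x) for y, x in per_attribute_pairs)))


y = np.array([0, 0, 1, 1])
x_perfect = y.copy()              # SVM agrees with the classifier: H = 0
x_random = np.array([0, 1, 0, 1])  # SVM carries no information about y: H = log 2
score = separability_score([(y, x_perfect), (y, x_random)])
```

A perfectly linearly separable attribute contributes 0 to the sum, so the ideal score is exp(0) = 1, and every uninformative attribute multiplies the score up.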

Quantitative results can be seen in the following table:

Taken from the original StyleGAN paper

In essence, the conditional entropy H tells how much additional information is required to determine the true class of a sample, given the SVM label X. An ideal linear SVM would let us know Y with full certainty, resulting in an entropy of 0. A high entropy value suggests high uncertainty, meaning the label from the linear SVM is not informative at all. Put simply, the lower the entropy H, the better.

As a final note, you can check your understanding by watching the official accompanying video.

Conclusion

If you have followed our GANs in computer vision article series, you will probably have reached the point of believing that GANs are unstoppable. Personally speaking, GAN engineering and its solutions never cease to amaze me. In this article, we highlighted the importance of self-supervision and the interesting directions that emerge from it. Then, we saw some exciting and careful generator design that injects the style of a reference image via adaptive instance normalization. StyleGAN is indeed one of the most innovative works in the field. Finally, we highlighted the proposed disentanglement metrics (perceptual path length and linear separability), which lead us to more and more advanced concepts in this series.

I really do hope that our co-learners are able to keep up with this. A great deal of work from our team goes into making this series simple and precise. As always, we focus on intuition, and we hope you feel encouraged to start experimenting with GANs. If you would like to start experimenting with a bunch of models to reproduce state-of-the-art results, you should definitely check out this open-source library in TensorFlow or this one in PyTorch.

That being said, get ready for the next part.

We will likely be again for extra!

For a hands-on video course, we highly recommend Coursera's brand-new GAN specialization. However, if you prefer a book with curated content to start building your own fancy GANs, start with the "GANs in Action" book! Use the discount code aisummer35 to get an exclusive 35% discount from your favorite AI blog.

Cited as:

@article{adaloglou2020gans,
  title = "GANs in computer vision",
  author = "Adaloglou, Nikolas and Karagiannakos, Sergios",
  journal = "https://theaisummer.com/",
  year = "2020",
  url = "https://theaisummer.com/gan-computer-vision-style-gan/"
}

References

- Karras, T., Laine, S., & Aila, T. (2019). A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 4401-4410).

- Ioffe, S., & Szegedy, C. (2015). Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167.

- Ulyanov, D., Vedaldi, A., & Lempitsky, V. (2016). Instance normalization: The missing ingredient for fast stylization. arXiv preprint arXiv:1607.08022.

- Xie, Q., Hovy, E., Luong, M. T., & Le, Q. V. (2019). Self-training with Noisy Student improves ImageNet classification. arXiv preprint arXiv:1911.04252.

- Chen, T., Zhai, X., Ritter, M., Lucic, M., & Houlsby, N. (2019). Self-supervised GANs via auxiliary rotation loss. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 12154-12163).

- Kirkpatrick, J., Pascanu, R., Rabinowitz, N., Veness, J., Desjardins, G., Rusu, A. A., … & Hassabis, D. (2017). Overcoming catastrophic forgetting in neural networks. Proceedings of the National Academy of Sciences, 114(13), 3521-3526.

- Miyato, T., Kataoka, T., Koyama, M., & Yoshida, Y. (2018). Spectral normalization for generative adversarial networks. arXiv preprint arXiv:1802.05957.

* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.