Training is undoubtedly the most important part of developing a machine learning application. It's when you start realizing whether or not your model is worth it, what your hyperparameters should look like and what you need to change in your architecture. In general, most machine learning engineers spend quite some time on training, experimenting with different models, tuning their architecture and discovering the best metrics and losses for their problem.

In this article, we're continuing the Deep Learning in Production series by building a model trainer for the segmentation example we have been using so far. I thought that it would be nice this time, instead of outlining the basic topics and software engineering principles, to go through the whole development lifecycle step by step. So, we will program the entire Trainer class as we would do in our day-to-day job. It is also a great opportunity to apply all the best practices that we talked about in the series. We are going to explore how we can build high-performance and highly maintainable software in real time.

So be prepared for a lot of code this time. Without further ado, let's get started.

Building a training loop in Tensorflow

First things first. Let's remember our code so far. All we have in our colab notebook by now is boilerplate Keras code, which includes the model compilation and fit.

    self.model.compile(optimizer=self.config.train.optimizer.type,
                       loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
                       metrics=self.config.train.metrics)

    LOG.info('Training started')

    model_history = self.model.fit(self.train_dataset, epochs=self.epoches,
                                   steps_per_epoch=self.steps_per_epoch,
                                   validation_steps=self.validation_steps,
                                   validation_data=self.test_dataset)

    return model_history.history['loss'], model_history.history['val_loss']

A few things to note here before we proceed. Since we get most of our hyperparameters from a config file, I think it would be useful to know exactly what we are using here. In particular, we choose "SparseCategoricalCrossentropy" as our loss, the Adam optimizer and "SparseCategoricalAccuracy" as our main metric.
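To make the config lookup concrete, here is a minimal sketch of how a nested config could expose values such as config.train.optimizer.type. The CFG dictionary, its values and the to_namespace helper are assumptions for illustration, not necessarily the config used in the series.

```python
import json
from types import SimpleNamespace

# Hypothetical config: the keys mirror the attribute paths used in the
# compile call above, the values are illustrative assumptions.
CFG = {
    "train": {
        "epoches": 20,
        "optimizer": {"type": "adam"},
        "metrics": ["accuracy"],
    }
}


def to_namespace(cfg):
    """Convert a nested dict into objects that support dot access."""
    return json.loads(json.dumps(cfg), object_hook=lambda d: SimpleNamespace(**d))


config = to_namespace(CFG)
print(config.train.optimizer.type)
print(config.train.metrics)
```

Keeping these values in one place means the training code never hard-codes a hyperparameter, which makes experiments easier to reproduce.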

Also note that I'm using Python 3.7 and Tensorflow 2.0. To see my full setup and follow along, you can revisit the first article of the series, where we set up our laptop and outlined a high-level overview of our problem and end goal.

To keep things simple, I think we can replace the above code in the train function inside our model class with the code below:

    optimizer = tf.keras.optimizers.Adam()
    loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
    metrics = tf.keras.metrics.SparseCategoricalAccuracy()

    trainer = Trainer(self.model, self.train_dataset, loss, optimizer, metrics, self.epoches)
    trainer.train()

All I'm doing here is defining the optimizer, the loss and the metrics, and passing them along with the model and the dataset to a trainer class called "Trainer". Once we create a new instance of the class, we can call the train method, triggering the beginning of our training.

A good practice is to try to keep the class unaware of how the other components of the application, such as the model and the data, are built. Each class should have a sole purpose and perform only a single thing without depending on other elements.

This is what we call separation of concerns, and it is a vital concept to ensure the maintainability and scalability of our software.
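As a rough illustration of why this matters, the sketch below shows a class that receives its collaborators through the constructor. Because it knows nothing about how they are built, it can be exercised with simple stand-ins. MiniTrainer and the lambda stand-ins are hypothetical and not part of the series' code.

```python
class MiniTrainer:
    """Hypothetical trainer that only uses what it is handed."""

    def __init__(self, model, data, loss_fn):
        self.model = model      # any callable: inputs -> predictions
        self.data = data        # any iterable of (inputs, labels) pairs
        self.loss_fn = loss_fn  # any callable: (labels, predictions) -> float

    def mean_loss(self):
        # The class has one job: run the model over the data and score it.
        losses = [self.loss_fn(labels, self.model(inputs))
                  for inputs, labels in self.data]
        return sum(losses) / len(losses)


# Stand-ins: a "model" that doubles its input and an absolute-error loss.
double = lambda x: 2 * x
abs_error = lambda y_true, y_pred: abs(y_true - y_pred)

trainer = MiniTrainer(double, [(1, 2), (2, 5), (3, 6)], abs_error)
print(trainer.mean_loss())
```

Swapping the stand-ins for a real model, dataset and loss requires no change to the class itself, which is the point of the design.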

So, let's define our class in a separate file. I usually have a folder named executors and include there all basic ML functionalities such as training, validation and prediction, but you are obviously free to do whatever you like. Each trainer depends on only six things: model, input data, loss function, optimizer, metric and the number of epochs.

    class Trainer:
        def __init__(self, model, input, loss_fn, optimizer, metric, epoches):
            self.model = model
            self.input = input
            self.loss_fn = loss_fn
            self.optimizer = optimizer
            self.metric = metric
            self.epoches = epoches

Nothing fancy here, as you can see. Inside the Trainer class, we also need a train function, which will have the overall training functionality, and a train_step function that will contain only a single training step.

Most of the time, it's preferred to have a custom training loop instead of relying on high-level APIs such as Keras, because we are able to tune every little detail and have full control over the process.

In the train_step function, we perform the actual training of a single batch. First, we need to get the trainable variables, aka the model weights, and extract the input and the label from the batch.

    trainable_variables = self.model.trainable_variables
    inputs, labels = batch

Then we need to feed the input into the model and calculate the loss based on the labels and the prediction of the Unet.

    with tf.GradientTape() as tape:
        predictions = self.model(inputs)
        step_loss = self.loss_fn(labels, predictions)

We are using "tf.GradientTape()" from Tensorflow to record the operations of this step so that the gradients can be computed from them. We can then pass the gradients to the optimizer and update the weights accordingly.

    grads = tape.gradient(step_loss, trainable_variables)
    self.optimizer.apply_gradients(zip(grads, trainable_variables))

In essence, we are running the backpropagation algorithm by taking advantage of the APIs provided by Tensorflow. For a very low-level understanding of how backpropagation works, you can check our article on building a neural network from scratch.
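To see what the tape and the optimizer are doing for us, here is a framework-free sketch of the same three stages (forward pass, gradient, weight update) for a one-weight linear model with a squared-error loss. The gradient is derived by hand, and the learning rate and data are arbitrary assumptions. In the Trainer, tf.GradientTape and the optimizer do this for every weight of the Unet.

```python
def sgd_step(w, x, y, lr=0.1):
    """One manual training step for the model pred = w * x."""
    pred = w * x                    # forward pass
    loss = (pred - y) ** 2          # squared-error loss
    grad = 2 * (pred - y) * x       # d(loss)/dw, derived by hand
    new_w = w - lr * grad           # plain gradient-descent update
    return new_w, loss


w = 0.0
losses = []
for _ in range(20):
    w, loss = sgd_step(w, x=1.0, y=3.0)
    losses.append(loss)

print(round(w, 3), losses[0], losses[-1])
```

The loss shrinks at every step and the weight moves towards 3.0, the value that maps the input 1.0 to the target exactly.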

Finally, we need to update our metric and return the step loss and the predictions to be consumed by the train function:

    self.metric.update_state(labels, predictions)
    return step_loss, predictions

And in the end we have something like this:

    def train_step(self, batch):
        trainable_variables = self.model.trainable_variables
        inputs, labels = batch

        with tf.GradientTape() as tape:
            predictions = self.model(inputs)
            step_loss = self.loss_fn(labels, predictions)

        grads = tape.gradient(step_loss, trainable_variables)
        self.optimizer.apply_gradients(zip(grads, trainable_variables))
        self.metric.update_state(labels, predictions)

        return step_loss, predictions

Now let's go to the train method. The train method will simply be a for-loop that iterates over the number of epochs and a secondary for-loop inside that trains every batch (this is our training step).

    def train(self):
        for epoch in range(self.epoches):
            LOG.info(f'Start epoch {epoch}')

            for step, training_batch in enumerate(self.input):
                step_loss, predictions = self.train_step(training_batch)
                LOG.info("Loss at step %d: %.2f" % (step, step_loss))

            # Accuracy accumulated by the metric over the batches seen so far
            train_acc = self.metric.result()
            LOG.info("Training acc over epoch: %.4f" % float(train_acc))

As I mentioned before, we simply have two for-loops and a lot of logging. Providing logs is vital so we can have a clear picture of what's going on inside the computational graph. This way, we are able to stop or continue the training based on the info we receive from them and to recognize errors and bugs immediately. The LOG variable is a constant defined at the top of the file which initializes a logging util.

    LOG = get_logger('trainer')

For more information on this and on how to use logs in a deep learning application, see our previous article of the series, which is dedicated to debugging and logging.
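For readers who have not seen that article, a get_logger helper could look roughly like the sketch below, built on Python's standard logging module. The format string and the log level are assumptions, and the helper used in the series may differ.

```python
import logging
import sys


def get_logger(name, level=logging.INFO):
    """Return a named logger that writes formatted records to stdout."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    if not logger.handlers:  # avoid attaching a second handler on repeated calls
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(name)s %(levelname)s: %(message)s"))
        logger.addHandler(handler)
    return logger


LOG = get_logger('trainer')
LOG.info('Training started')
```

Calling get_logger with the same name twice returns the same logger object, so every module that asks for 'trainer' shares one configuration.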

A couple of observations before we proceed. First of all, the input is a Tensorflow dataset (tf.data) and, as you can see, we can iterate over it as we would for a normal array or list. We have also covered that in a previous article on data preprocessing. Secondly, I'm sure you noticed that we capture both the loss and the accuracy throughout the entire program. This is not just to provide logs but also to enable visualization of our training using Tensorboard. More on that shortly.

Thirdly, we need a way to save the state of our training periodically because deep learning models can train for a lot of time. And I mean a lot of time. To avoid losing our weights and to be able to reuse a trained model afterwards, we need to incorporate some sort of checkpoints. Luckily, there is already built-in functionality for that.

Training checkpoints

Saving the current state in checkpoints is actually quite easy. All we have to do is define a checkpoint manager and an initial checkpoint to continue the training from there. If it is the first time we train the model, this will be empty; otherwise it will be loaded from an external folder. So in our init function, we have:

    self.checkpoint = tf.train.Checkpoint(optimizer=optimizer, model=model)
    # max_to_keep is a required argument; 3 is an arbitrary choice here
    self.checkpoint_manager = tf.train.CheckpointManager(self.checkpoint, './tf_ckpts', max_to_keep=3)

The checkpoint is defined by the optimizer and the model, while the checkpoint manager is defined by the initial checkpoint and a folder to save them in. And to save the current state, all we have to do is:

    save_path = self.checkpoint_manager.save()
    LOG.info(f'Saved checkpoint: {save_path}')

We usually place this at the end of each epoch or after a subset of them is completed.
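One way to express "at the end of each epoch or after a subset of them" is a small predicate that the training loop consults. The sketch below is an assumption about how this could be wired, and should_checkpoint and its every parameter are hypothetical names. Inside the Trainer, the branch that passes the check would call self.checkpoint_manager.save().

```python
def should_checkpoint(epoch, total_epochs, every=1):
    """True on every `every`-th epoch (counting from 1) and always on the last one."""
    is_last = epoch == total_epochs - 1
    return (epoch + 1) % every == 0 or is_last


# Which of 10 epochs (numbered 0..9) would be saved when saving every 4 epochs?
saved_at = [epoch for epoch in range(10)
            if should_checkpoint(epoch, total_epochs=10, every=4)]
print(saved_at)
```

Always saving on the last epoch guards against losing the final weights when the number of epochs is not a multiple of the interval.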

Saving the trained model

Once the entire training is finished, we want to store our trained model so we can use it to serve actual users. Again, this is quite straightforward in Tensorflow and can be done in a couple of lines:

    self.model_save_path = 'saved_models/'
    save_path = os.path.join(self.model_save_path, "unet/1/")
    tf.saved_model.save(self.model, save_path)

And of course, loading a saved model couldn't be that hard either:

    model = tf.saved_model.load(save_path)
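The trailing "1" in the path above reads like a version number. Assuming that is the intent, a small helper can build the export directory so that each new model lands in its own folder. export_path and its arguments are hypothetical names, and the result can be passed to tf.saved_model.save as before.

```python
import os


def export_path(base_dir, model_name, version):
    """Build '<base_dir>/<model_name>/<version>' for a saved model."""
    return os.path.join(base_dir, model_name, str(version))


save_path = export_path('saved_models', 'unet', 1)
print(save_path)
```

Bumping the version argument for each export keeps older models on disk, which makes it possible to roll back if a new one misbehaves.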

Visualizing the training with Tensorboard

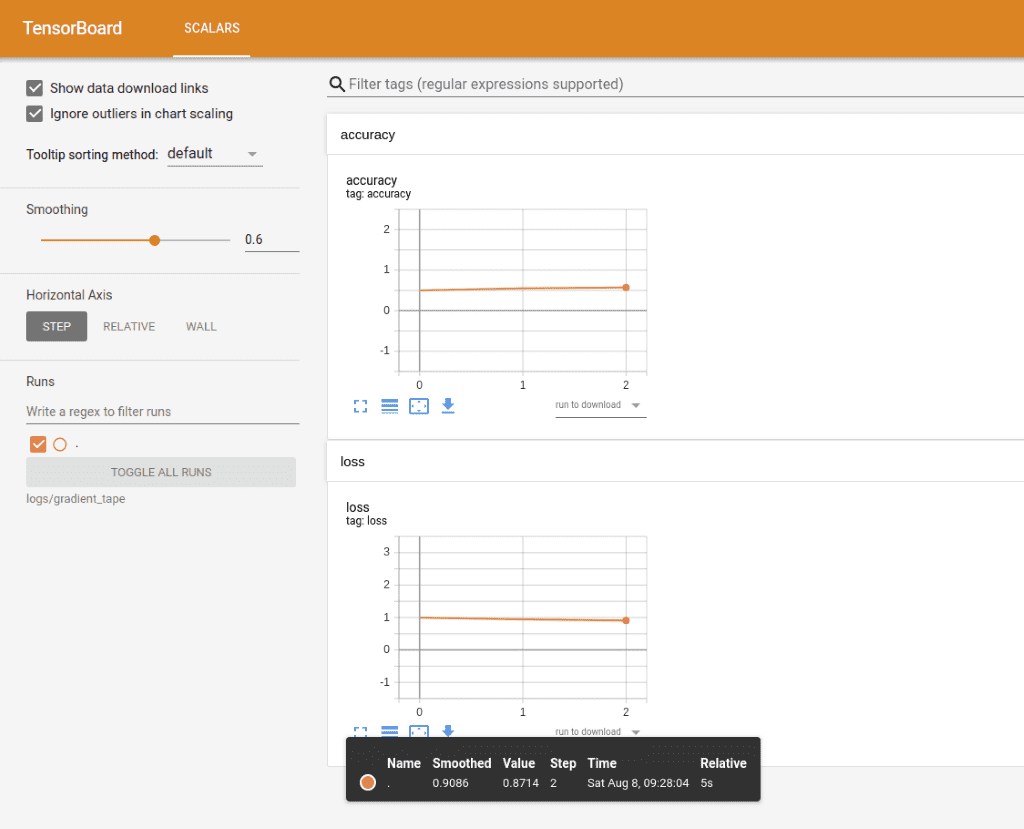

If you are a visual person like me, logs are not the easiest thing to look at when trying to get a sense of how your training is proceeding. It would be much better to have a way to visualize the process and look at charts instead of lines and lines of logs. If you aren't aware of such a tool, Tensorboard is a way to plot the metrics captured during training and create beautiful graphs for them. It's also a very good way to see the computational graph and get a high-level picture of our entire architecture. And it's also a piece of cake to use (surprisingly, huh?).

tf.summary is a very elegant way to write our metrics into logs which can later be used by Tensorboard. We can build a summary writer using an external path like this:

    self.train_log_dir = 'logs/gradient_tape/'
    self.train_summary_writer = tf.summary.create_file_writer(self.train_log_dir)

And at the end of each epoch, we can use this writer to save the current metrics, as in the example below:

    def _write_summary(self, loss, epoch):
        with self.train_summary_writer.as_default():
            tf.summary.scalar('loss', loss, step=epoch)
            tf.summary.scalar('accuracy', self.metric.result(), step=epoch)

Some things to notice here:

- tf.summary.scalar writes the metrics to the writer's log file under the hood
- The logs are actually saved in a binary format, so we can't really read them
- We can either pass the metric as an argument to the function (as we do for the loss) or we can utilize tf.metrics, which stores the state inside the computational graph

For the latter, we can update the metric inside the graph using:

    self.metric.update_state(labels, predictions)

And then get the current state by:

    self.metric.result()

where:

    self.metric = tf.keras.metrics.SparseCategoricalAccuracy()
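To make the update_state/result pattern less of a black box, here is a framework-free sketch of a stateful accuracy metric with the same two methods. It is only an approximation of what SparseCategoricalAccuracy does (compare the highest-scoring class of each prediction with an integer label), and the class name and toy data are assumptions.

```python
class RunningSparseAccuracy:
    """Accumulates correct/total counts across batches."""

    def __init__(self):
        self.correct = 0
        self.total = 0

    def update_state(self, labels, predictions):
        # predictions holds one list of class scores per sample
        for label, scores in zip(labels, predictions):
            predicted_class = max(range(len(scores)), key=scores.__getitem__)
            self.correct += int(predicted_class == label)
            self.total += 1

    def result(self):
        return self.correct / self.total if self.total else 0.0


metric = RunningSparseAccuracy()
metric.update_state([0, 1], [[0.9, 0.1], [0.8, 0.2]])  # first batch: 1 of 2 correct
metric.update_state([1, 1], [[0.3, 0.7], [0.1, 0.9]])  # second batch: 2 of 2 correct
print(metric.result())
```

Because the counts live inside the object, result() reflects every batch seen since the metric was created rather than only the latest one.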

Once we have the summary written, we can spin up Tensorboard on our localhost by executing the following command:

$ tensorboard --logdir logs/gradient_tape

And behold:

Tensorboard will continue to plot the metrics while the training is running. I highly recommend spending some time playing with it, so you can have a clear sense of all the amazing stuff we can do. It will be very helpful for your future projects, believe me.

Before we wrap up this article, I would like to mention one thing that we haven't touched upon, and that is validation. Most of the time, after we perform some sort of training, we apply a validation procedure so we can be sure that our results are correct and our model is not overfitted. Exactly the same principles as the ones we talked about so far apply here. We would still have a test and a test_step function and we would still use tf.metrics, logs and Tensorboard. In fact, it is not that different from training in 90% of the cases. The only difference is that we wouldn't need to compute the gradients.
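A validation step along those lines might look like the sketch below: the same forward pass, loss and metric update as train_step, but with no gradient tape and no optimizer. It is written as a plain function (named validation_step here, playing the role of test_step) so that it can run with simple stand-ins. With the real Trainer, model, loss_fn and metric would be the Keras objects, and training=False is the flag Keras models accept to run in inference mode. The stand-ins themselves are hypothetical.

```python
def validation_step(model, loss_fn, metric, batch):
    """Evaluate one batch without touching the weights."""
    inputs, labels = batch
    predictions = model(inputs, training=False)  # forward pass only
    step_loss = loss_fn(labels, predictions)
    metric.update_state(labels, predictions)
    return step_loss, predictions


# Stand-ins so the sketch runs without Tensorflow.
class CountingMetric:
    def __init__(self):
        self.seen = 0

    def update_state(self, labels, predictions):
        self.seen += len(labels)


def fake_model(inputs, training=False):
    return [2 * x for x in inputs]


def mean_abs_error(labels, predictions):
    return sum(abs(l - p) for l, p in zip(labels, predictions)) / len(labels)


metric = CountingMetric()
loss, preds = validation_step(fake_model, mean_abs_error, metric, ([1, 2], [2, 5]))
print(loss, preds, metric.seen)
```

Using a separate metric instance for validation keeps its running state from mixing with the training accuracy.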

Conclusion

We saw how to build a custom trainer from scratch, along with some best practices to keep it maintainable and extensible. In parallel, we dived into some Tensorflow tricks to make the code much smaller and much simpler. Let me tell you here that this is exactly the thought process I would follow in a real-life project, and the code that will run in production will be almost identical to this one. I hope you find this type of article useful, and please let us know if you would like to see more of them. Of course, there are always ways and techniques to optimize even further, but I think you got the gist of it by now. For the full code, feel free to check our Github repository.

The next two articles are also going to be focused on training and how to optimize it. We're gonna see how to distribute our training across GPUs and multiple machines. Then, we will present a full example of how to run a training job in the cloud.

I just realized that this is the seventh article of the series and I don't see it being over anytime soon. We still have a lot of things to discuss before we finally have our model deployed and used by millions of users. I hope that you will still be with me then.

Until then, have fun and enjoy learning AI.
