When it comes to training a big deep learning model, there are many obstacles that we need to overcome. First, we need to acquire lots and lots of data. Training also depends on other steps, such as fine-tuning our architecture and hyperparameters, measuring accuracy, and building some sort of logging and visualization. We should also keep track of progress, and much more. However, one of the most frequent struggles is the lack of hardware resources. Most of us don't own an NVIDIA Titan RTX or have access to a cluster of PCs, so we are forced to wait hours and hours on each training iteration to evaluate our model.

I also understand that we are tempted to buy a high-end GPU. And to be honest with you, I have already tried it. However, there is a much easier way to train our models, and I am sure you are aware of it. It's called the cloud. Cloud providers such as Google Cloud, Amazon Web Services and Microsoft Azure are excellent examples of low-cost, high-end infrastructure, and their offerings are often targeted at machine learning applications.

In today's article, we will take our previously built UNet model that performs image segmentation, deploy it in Google Cloud and run a full training job there. If you remember from our last article of the series, we developed a custom training loop in TensorFlow. The goal is to take that code almost unchanged and run it on a Google Cloud instance.

What is an instance, you may wonder? We will get there, don't worry.

Regarding the structure of the article, I will take it step by step and explain important topics and details while outlining all the necessary instructions to reach our end goal.

Shall we start?

Cloud computing

I am sure you know what cloud computing is, but for consistency reasons, let's give a high-level definition.

Cloud computing is the on-demand delivery of IT resources via the internet. Instead of buying and maintaining physical servers and data centers, we can access infrastructure such as compute power and storage from cloud providers.

About 90% of all the companies in the world use some form of cloud service today. I hope that is enough to convince you about the power of the cloud. And the most astonishing thing is that you literally have access to a huge variety of different systems and applications that would be unimaginable to maintain on your own.

Woooah, am I proper?

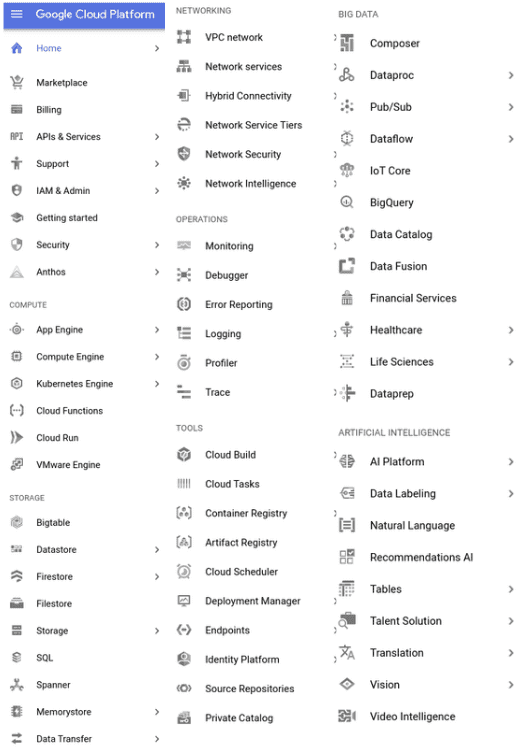

For our use case, we will need only one of all those services, called Compute Engine. Compute Engine lets us use virtual machine instances hosted on Google's servers and maintained by Google.

A virtual machine (VM) is an emulation of a computer system. Virtual machines are based on computer architectures and provide the functionality of a physical computer. Their implementations may involve specialized hardware, software, or a combination of them.

So, in essence, we borrow a small PC on Google's servers, install whatever operating system and software we want (that is, build a virtual machine) and do remotely whatever we would do on our own local laptop. It's that simple.

OK, now that we know the basics, let's proceed with a hands-on approach. If you haven't created a Google account by now, feel free to do so. All you have to do is go to the Google Cloud website, register an account (a credit card is required for security reasons, but it won't be charged for at least a year or until you surpass the free quotas) and enjoy a full $300 of free credit (at least at the time of writing this post).

One thing I forgot to mention is that in most cases the cloud follows a pay-as-you-go pricing model, meaning that we get charged depending on how many resources we use.
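To make pay-as-you-go a bit more concrete, here is a minimal sketch of the arithmetic. The hourly rates below are hypothetical placeholders, not real Google Cloud prices, and the `estimate_cost` helper is made up for illustration; always check the provider's pricing page for actual numbers.

```python
def estimate_cost(hours, vm_rate_per_hour, gpu_rate_per_hour=0.0, free_credit=0.0):
    """Return (gross_cost, billed_amount) for a single training run.

    gross_cost    : what the resources cost before any credit is applied
    billed_amount : what remains to be paid once the free credit is used up
    """
    gross_cost = hours * (vm_rate_per_hour + gpu_rate_per_hour)
    billed_amount = max(0.0, gross_cost - free_credit)
    return gross_cost, billed_amount


# Hypothetical rates (assumptions for illustration only):
# a small VM at $0.05/hour plus one GPU at $0.45/hour, used for 20 hours,
# with the $300 free credit mentioned above still available.
gross, billed = estimate_cost(
    hours=20, vm_rate_per_hour=0.05, gpu_rate_per_hour=0.45, free_credit=300.0
)
print(f"gross cost: ${gross:.2f}, billed after credit: ${billed:.2f}")
```

The point is simply that cost scales with usage time, so shutting down an idle instance matters as much as choosing a cheap one.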

Creating a VM instance

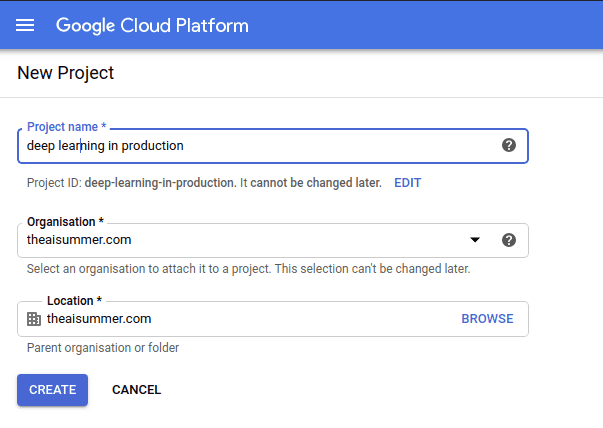

Once you have a Google Cloud account, it's time to create a brand new project to host our application by clicking “New project” at the top left and naming it whatever you like.

When the project is initialized, we can navigate to Compute Engine > VM instances from the sidebar on the left and create a new VM.

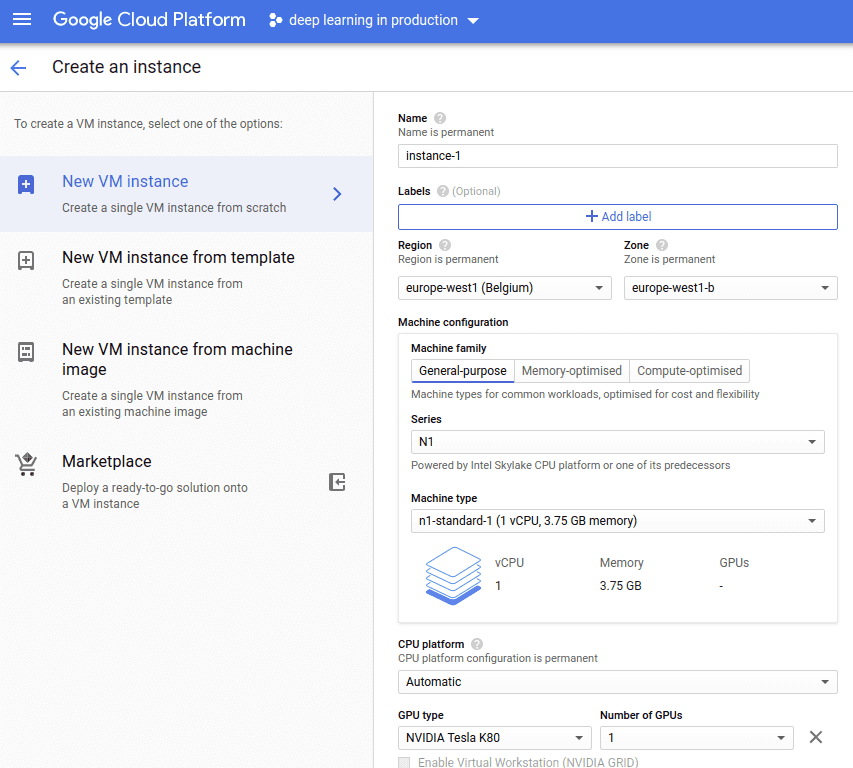

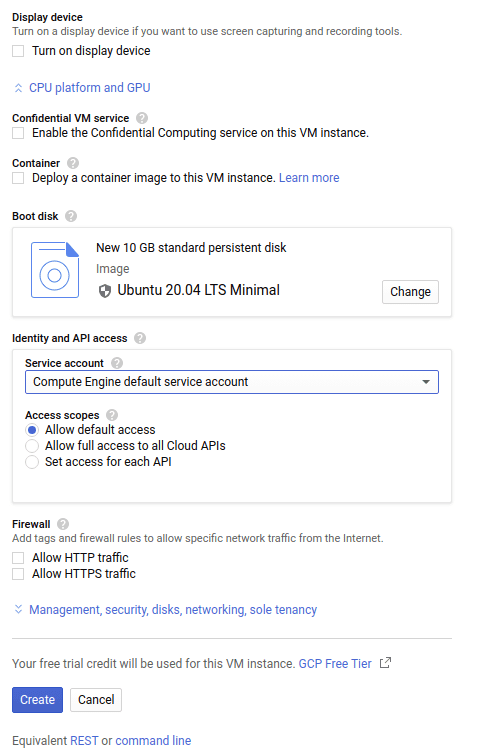

As you can see in the image above, you can customize the instance in any fashion you like. You can choose your CPU and your RAM, add a GPU, and make it as high-performing as you need. I am going to keep things simple here and select a standard CPU with 3.75 GB of memory and an Nvidia Tesla K80. And of course you can pick your own OS. I will use Ubuntu's 20.04 minimal image with a 10 GB disk size.
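The same configuration can also be expressed as a `gcloud compute instances create` command instead of clicking through the UI. The sketch below only builds and prints the command. The instance name, the zone, the `n1-standard-1` machine type (which comes with 3.75 GB of memory) and the `ubuntu-minimal-2004-lts` image family are my assumptions about how the choices above map to flags, and GPU availability varies by zone and over time, so treat it as a starting point rather than a verified recipe.

```python
import shlex


def build_create_command(name, zone,
                         machine_type="n1-standard-1",
                         gpu_type="nvidia-tesla-k80", gpu_count=1,
                         image_family="ubuntu-minimal-2004-lts",
                         image_project="ubuntu-os-cloud",
                         disk_size_gb=10):
    """Build (but do not run) a gcloud command that creates a GPU VM."""
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        f"--accelerator=type={gpu_type},count={gpu_count}",
        # Instances with GPUs cannot live-migrate, so they must be
        # terminated during host maintenance events.
        "--maintenance-policy=TERMINATE",
        f"--image-family={image_family}",
        f"--image-project={image_project}",
        f"--boot-disk-size={disk_size_gb}GB",
    ]


# "training-instance" and "us-central1-a" are illustrative values.
command = build_create_command("training-instance", zone="us-central1-a")
print(shlex.join(command))
```

Printing the command first makes it easy to review before pasting it into a terminal or passing the list to `subprocess.run(command, check=True)`.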

You can also select some other things, like deploying a container to the instance or allowing traffic from the Internet, but let's not deal with them right now.

Connecting to the VM instance

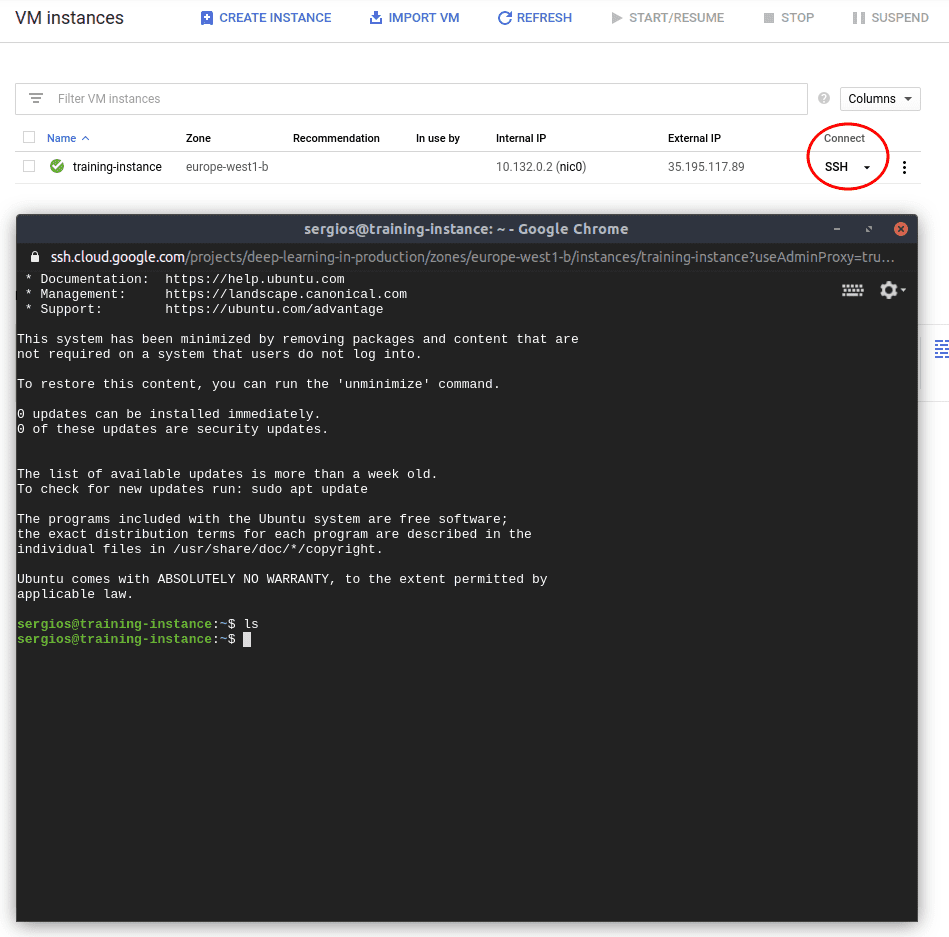

OK great, we have our instance up and running. It's time to start using it. We can connect to it using standard SSH by clicking the SSH button. This will open a new browser window and give us access to the Google machine.

As you can see, we literally have terminal access to a remote machine on Google's servers, and as you can imagine, we can install whatever we want, as we would normally do on our laptop.

The next step is to transfer our data and code to the remote machine from our laptop. Google Cloud uses a specialized command called “gcloud” that handles many things, such as authentication (using SSH under the hood). A similar command exists in almost all cloud providers, so the following steps have pretty much the same logic.

SSH is a protocol that uses encryption to secure the connection between a client and a server, allowing us to safely connect to a remote machine.

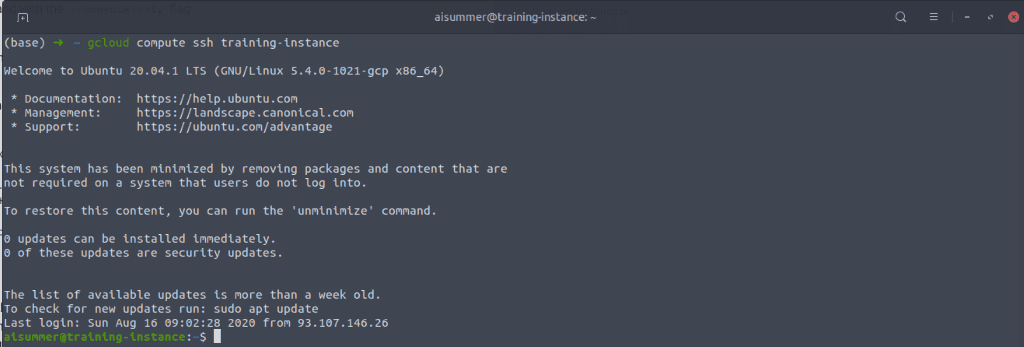

You can very easily download and install the Google Cloud SDK following the excellent official docs. Actually, it's quite straightforward and it's only 5 commands you need to run. After that, we can very easily connect to the instance from our local terminal using:

gcloud compute ssh training-instance

And we are now inside the remote instance from our terminal. How cool is that?

Note that we can close the connection and return to the local terminal by typing “exit”.

Transferring files to the VM instance

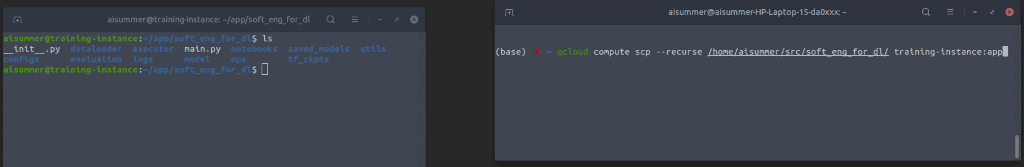

To transfer the files from our local system to the remote instance, we can use the “gcloud compute scp” command. I will transfer the whole project to the instance so we can run the training exactly the same way we did locally. Given that it's going to be a one-time training and not a recurrent job, there is no need to minimize our code and send only the necessary files.

gcloud compute scp --recurse /home/aisummer/src/soft_eng_for_dl/ training-instance:app

As you can see, in the left terminal we are connected to the gcloud instance and we list all of our recently transferred files using the “ls” command, while in the right terminal we are inside our local system.

*The files you see are the whole project developed through the article series. For more details, check our previous articles or our GitHub repo.

Running the training remotely

Now all we have to do is simply execute main.py in the left terminal and the training will start immediately. Yeah, I know that we forgot to install all the necessary dependencies such as Python, TensorFlow and so on, but I trust that you can do that without my help. Just install Python and pip with apt and “pip install” all the libraries.

sudo apt install python3-pip

pip install -r requirements.txt

Tip: Google Cloud provides many ready-to-use instances for various use cases. When we create the instance, we can choose a premade template that contains all of our essential libraries and languages.

Once the training is complete, we can transfer back the saved model weights and perhaps the logs, and I guess we are done. Note that, as we did locally in the previous article, we can again monitor the logs during training and we can even set up TensorBoard. As I have told you many times by now, we can literally do anything we would do on our own laptop, but with a lot more resources and ready-made solutions.

gcloud compute scp --recurse training-instance:app /home/aisummer/src/soft_eng_for_dl/
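If you find yourself repeating the upload and download steps, they can be wrapped in a small script. This is only a sketch: the `build_scp_command` helper is hypothetical, and the instance name `training-instance` and the local path are illustrative values. It prints the two commands rather than running them, so nothing is transferred until you decide to execute them.

```python
import shlex


def build_scp_command(source, destination, recurse=True):
    """Build (but do not run) a `gcloud compute scp` command.

    Remote locations use the INSTANCE:PATH form, local ones are plain paths.
    """
    command = ["gcloud", "compute", "scp"]
    if recurse:
        # Needed when copying a whole directory tree.
        command.append("--recurse")
    command += [source, destination]
    return command


LOCAL_PROJECT = "/home/aisummer/src/soft_eng_for_dl/"  # illustrative local path
REMOTE_APP = "training-instance:app"                    # illustrative instance:path

# Local -> remote before training, remote -> local once training is done.
upload = build_scp_command(LOCAL_PROJECT, REMOTE_APP)
download = build_scp_command(REMOTE_APP, LOCAL_PROJECT)

for command in (upload, download):
    print(shlex.join(command))
```

To actually run one of them, pass the list to `subprocess.run(upload, check=True)` on a machine where the Google Cloud SDK is installed and authenticated.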

What about the training data?

Those who have an innate talent for observing the details might have noticed that I deliberately haven't mentioned data up to this point. Until now, I assumed that we bake the data into our application and upload them to the VM instance alongside the code. So when the training kicks off, it reads the pet images from a local path. Of course, that's not very efficient and should be avoided.

A better option might be to store the data in the cloud as well, but outside of our compute environment. All cloud providers have a storage solution that enables us to do exactly that. AWS calls it S3, Azure calls it Azure Storage and Google calls it Cloud Storage.

Object storage is a computer data storage architecture that manages data as distinct units called objects, as opposed to other architectures that manage data as hierarchical files or blocks. Each object has the data, some form of metadata and a globally unique identifier. Cloud storage provides security, scalability, flexible management and resilience.

Objects are organized in buckets, and a project can contain multiple buckets. For example, in our case we can create a bucket called “deep-learning-in-production-data” and save all of our pet images there. And that's exactly what we are going to do.
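In Google Cloud Storage, an object is usually addressed with a `gs://bucket/object-name` URI. As a small illustration of the bucket/object split, the sketch below pulls the two parts out of such a URI using only the standard library. The `split_gcs_uri` helper and the object name in the example are made up for illustration.

```python
from urllib.parse import urlparse


def split_gcs_uri(uri):
    """Split a gs:// URI into (bucket_name, object_name)."""
    parsed = urlparse(uri)
    if parsed.scheme != "gs" or not parsed.netloc:
        raise ValueError(f"not a valid gs:// URI: {uri!r}")
    # The bucket is the "host" part; everything after the first slash is
    # the object name. Slashes inside it are just part of the name, since
    # object storage has no real directories.
    return parsed.netloc, parsed.path.lstrip("/")


# The object name below is a hypothetical example.
bucket, object_name = split_gcs_uri(
    "gs://deep-learning-in-production-data/oxford_iiit_pet/image_001.jpg"
)
print("bucket:", bucket)
print("object:", object_name)
```

What looks like a folder (`oxford_iiit_pet/`) is only a prefix of the object name, which is why buckets can hold a flat, very large collection of objects.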

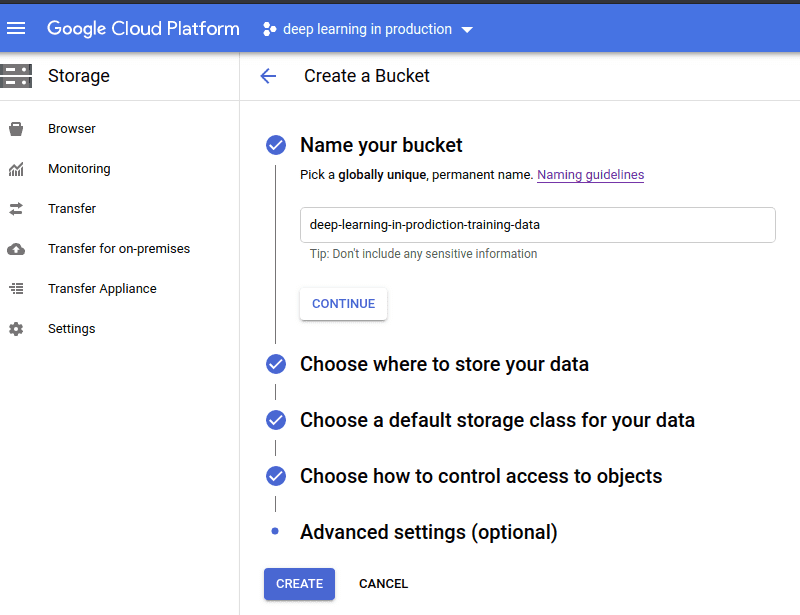

We can create a bucket very easily by navigating to the storage browser and clicking “create bucket” to open the bucket creation form shown below.

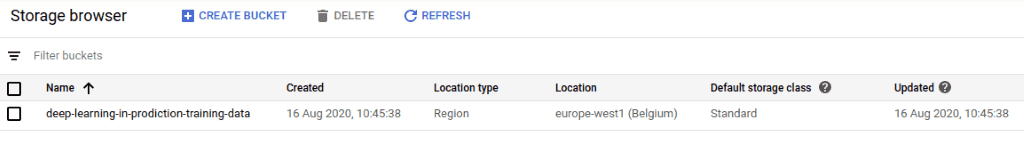

We can of course have many buckets to organize our data as we want. Each has a distinct name, a location, a storage class and, of course, all of our objects.

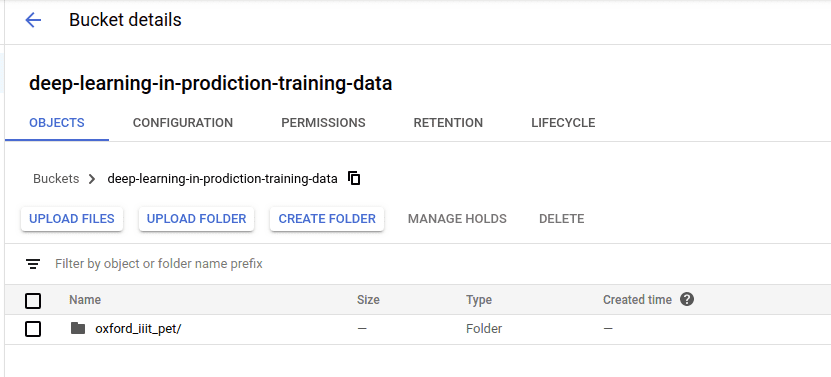

When the bucket is created, we can upload an object directly from the UI. We can absolutely do all of that using the terminal or even HTTP requests, but I personally see no value in doing it.

Cool. As you can see, we have our data uploaded and it's time to use them for training. This can be done very easily using tf.data and input pipelines, as we explained in more detail in a previous article about input pipelines.

filenames = "gs://deep-learning-in-prodiction-training-data/oxford_iiit_pet/*"

filepath = tf.io.gfile.glob(filenames)

dataset = tf.data.TFRecordDataset(filepath)

Or we can use TFDS (TensorFlow Datasets) and do something like this:

tfds.load(name="oxford_iiit_pet", data_dir="gs://deep-learning-in-prodiction-training-data/")

And that's it. We incorporate this small change into our input pipeline code, redeploy our application to the instance and it will automatically stream the data from Cloud Storage into our model during training. Finally, keep in mind that cloud storage is ridiculously cheap.

Conclusion

Today I tried to give you an overview of how easy it is to train a deep learning model and store your data in the cloud. Sometimes trying to run things locally and spending a lot of money buying hardware and optimizing your PC is just not worth it, especially when there are so many ready-to-use solutions out there. If you ask me, I use cloud infrastructure on a daily basis and prefer it for most of my projects because of its simplicity.

Admittedly, that's true not only for training ML models but also for serving them. As we will see in the next articles, it's very straightforward to deploy a service and use it to serve our users, as the cloud provides things like security, scalability and ease of maintenance. It's not just about the hardware it provides; it's about the abstraction it gives us to focus on the things that matter. We aim our focus at our machine learning model instead of how to build and optimize our infrastructure.

I hope that by now you have become more familiar with cloud services, and I would suggest spending more time playing around, especially if you want to continue with us in this series. Because we will use it. A lot!

If you liked this article, you can show your support by sharing it!

As side material, I strongly suggest the TensorFlow: Advanced Techniques Specialization course by deeplearning.ai hosted on Coursera, which will give you a foundational understanding of TensorFlow.

Stay tuned for more AI articles.

* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.