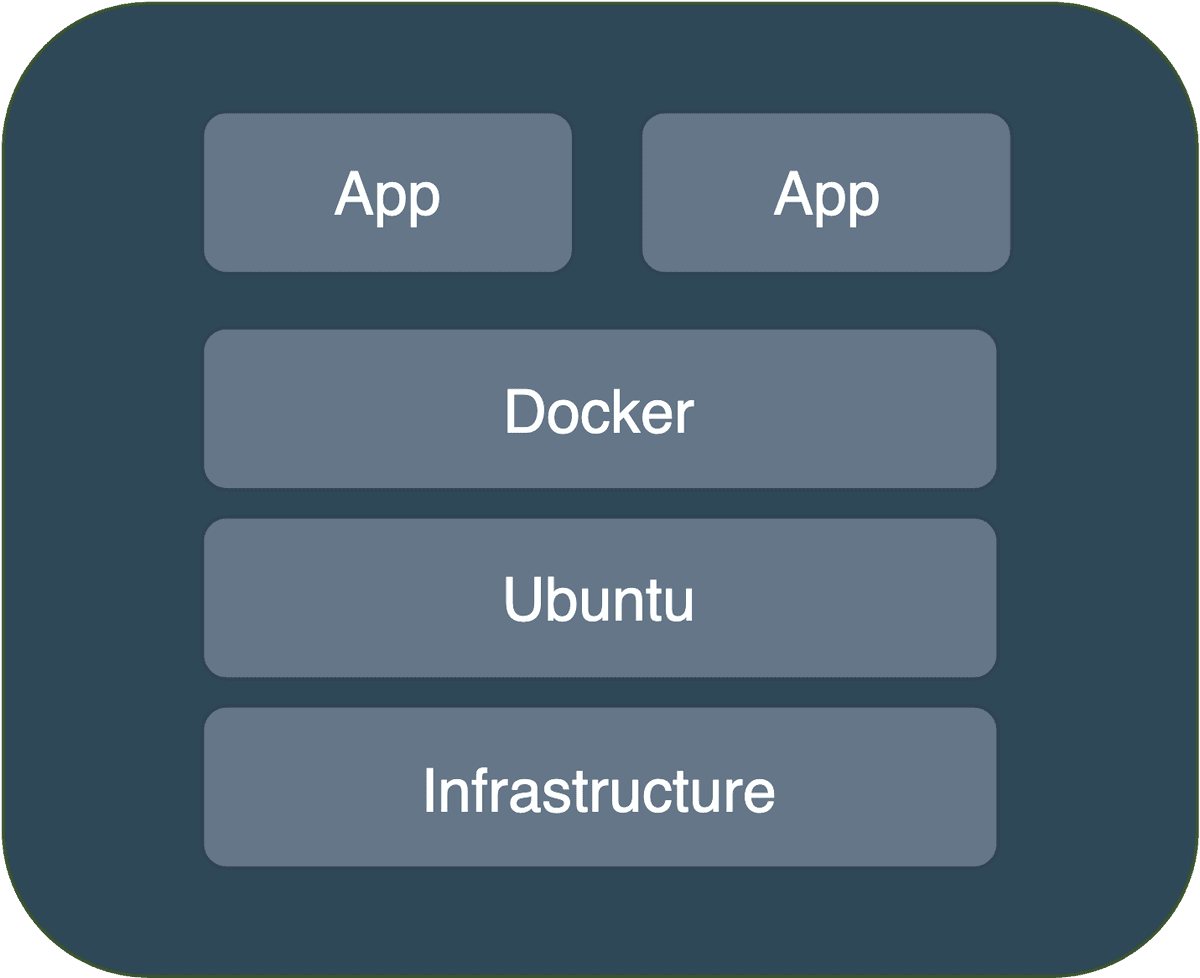

Containers have become the standard way to develop and deploy applications today, with Docker and Kubernetes dominating the field. This is especially true in machine learning, and for good reason! Containers provide the flexibility to experiment with different frameworks, versions, and GPUs with minimal overhead. Besides, they eliminate discrepancies between the development and the production environment, they are lightweight compared to virtual machines, and they can easily be scaled up or down.

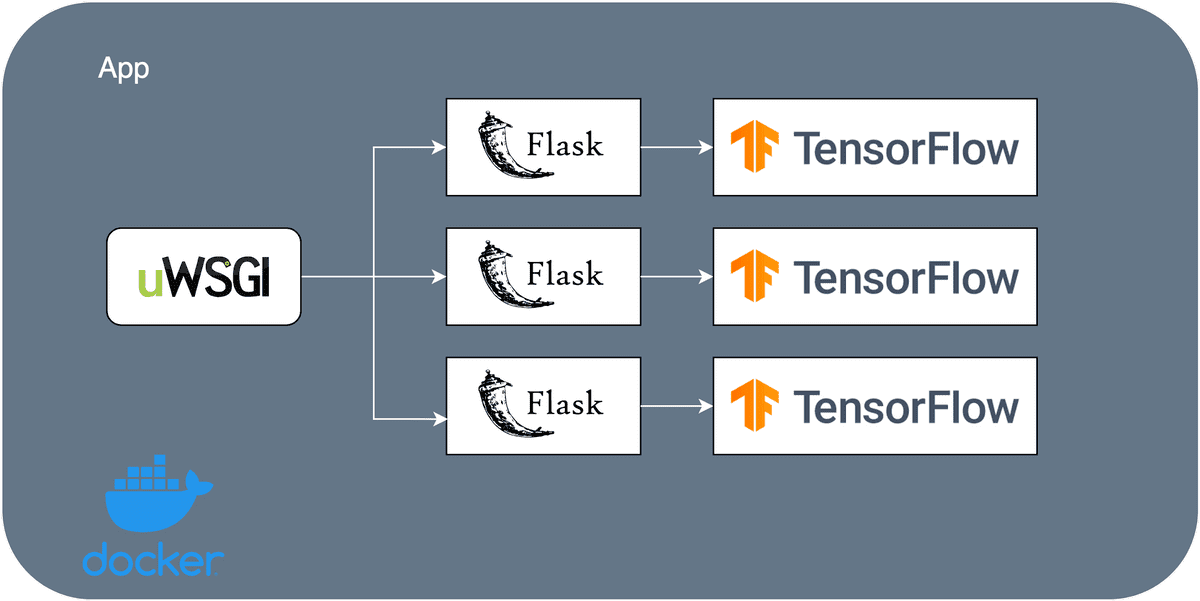

In this article, we will containerize our Deep Learning application using Docker. Our application consists of a Tensorflow model that performs image segmentation, Flask, uWSGI for serving purposes, and Nginx for load balancing. For those who haven't followed this article series, I suggest having a look at the previous articles.

For this purpose, we will build a Docker image that packages our Deep Learning/Flask code, an image for Nginx, and we will combine them using Docker Compose. I'm sure that some don't entirely understand what this means, so I hope to make it crystal clear through this article.

If all that sounds interesting to you, hop in. Remember that our ultimate goal is to deploy our model into the cloud and scale it to millions of users.

What’s a Container?

A container is a standard unit of software that packages the code and all its dependencies so that the application runs quickly and reliably from one computing environment to another.

This simply means that we can run the exact same software in any environment regardless of the operating system and hardware.

As you might have guessed, it immediately solves problems such as missing dependencies, enables easy collaboration between developers, and provides isolation from other applications.

And of course, it eliminates a statement that dates back to the beginning of time:

“It works on my machine, I don't understand why it doesn't work here.”

Containers also run on top of the operating system's kernel and they don't “replicate” the operating system as virtual machines do, and therefore they are far more lightweight and portable.

What’s Docker?

Docker has become so incredibly popular over the past years that it essentially became synonymous with containers. The two terms are used interchangeably. I was tempted to mention a few other options but honestly, there is no point at this time.

Docker is an open-source platform as a service for building, deploying, and managing containerized applications.

Moreover, it comes with a very powerful Command Line Interface (CLI), a desktop User Interface (UI) for those who prefer a visual approach (Docker Desktop), as well as a collection of thousands of ready-to-use container images (Docker Hub).

OK, let's start using Docker in our example. It's easier to explore more of its advantages along the way.

How to set up Docker?

The first thing we need to do is install the Docker engine on our machine. Because it is more than a simple “apt-get install” command and because I won't do as good a job as the official Docker documentation, I will prompt you to go there and come back once you finish the installation (make sure to follow all the steps).

Don't worry, I'll wait.

Done? Okay, let's move on.

If everything went well you should be able to execute the following command on a terminal and start a sample container:

$ docker run ubuntu

Here, “ubuntu” is a container image that includes a minimal Ubuntu installation. With “docker run IMAGE”, we can spin up the container.

A Docker image is nothing more than a template that contains a set of instructions for creating a container. It includes the elements needed to run the application as a container, such as code, config files, environment variables, and libraries. If the image is deployed to a Docker environment it can then be executed as a Docker container.

If we would like to enter the container, we can run the exact same command with an “-it” argument.

$ docker run -it ubuntu

Once we've done that, a bash terminal that gives us access to the container appears. Here we can do whatever the heck we want. We can install things, execute software, and do almost everything we do in a normal system. To exit the terminal, just type “exit”.

root@5bb91d6c145d:/# ls

bin boot dev etc home lib lib32 lib64 libx32 media mnt opt proc root run sbin srv sys tmp usr var

root@5bb91d6c145d:/#

To inspect all running containers, we have the “docker ps” command:

$ docker ps

The same container can be pulled from another environment or system and it will have the exact same software and dependencies.
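
If you would rather drive these commands from a script than type them, here is a minimal sketch in Python. The helper names `docker_run_command` and `list_running_containers` are my own and not part of Docker; they only assemble and run the same `docker run` and `docker ps` commands shown above.

```python
import shutil
import subprocess


def docker_run_command(image, interactive=False):
    """Build the argument list for `docker run`, optionally with -it."""
    command = ["docker", "run"]
    if interactive:
        command.append("-it")
    command.append(image)
    return command


def list_running_containers():
    """Return the output of `docker ps`, or None if Docker is not installed."""
    if shutil.which("docker") is None:
        return None
    result = subprocess.run(["docker", "ps"], capture_output=True, text=True)
    return result.stdout


print(docker_run_command("ubuntu"))
print(docker_run_command("ubuntu", interactive=True))
```

Passing the command as a list (instead of one string) avoids shell quoting problems once image names and arguments get longer.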

OK, what's next?

How to build a deep learning Docker image?

Enough fooling around. It's time to build our Tensorflow/Flask/uWSGI image that contains our Unet model.

But before that, we need to know which dependencies are necessary to include in the image.

If you followed along with the series and developed the whole app inside a virtual environment with only the essential libraries, we can do:

$ pip freeze > requirements.txt

This command will take all of the libraries installed inside the virtual environment and write them in a requirements.txt file alongside their versions. A few handpicked libraries inside the file are:

Flask==1.1.2

uWSGI==2.0.18

Tensorflow==2.2.0

Tip: An important detail to know here is that we probably don't need to declare all these libraries because most of them are dependencies of other libraries. If I had to guess I'd say that including only these three would be sufficient, but don't take my word for granted.

And actually, we won't even need Tensorflow because we will use an existing Tensorflow image from Docker Hub that already contains it. More on that shortly.
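
To make the tip concrete, here is a small sketch that trims a frozen requirements list down to the handful of packages we actually want to pin. The function `keep_top_level` is a hypothetical helper of mine, and the `Jinja2` line is an invented example of a transitive dependency, not something taken from the real file.

```python
def keep_top_level(requirement_lines, wanted):
    """Keep only the pinned lines whose package name is in `wanted`."""
    wanted_lower = {name.lower() for name in wanted}
    kept = []
    for line in requirement_lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name = line.split("==")[0].strip()
        if name.lower() in wanted_lower:
            kept.append(line)
    return kept


frozen = [
    "Flask==1.1.2",
    "Jinja2==2.11.2",  # hypothetical transitive dependency, for illustration
    "uWSGI==2.0.18",
    "Tensorflow==2.2.0",
]

# Tensorflow is left out on purpose: the base image will provide it.
print(keep_top_level(frozen, ["flask", "uwsgi"]))
```

Whether the trimmed list is really enough is something to verify by building the image and running the app, not something this snippet can tell you.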

The next step is to restructure our application so that all the code needed to run an inference lives inside a single folder. This is due to the fact that we can't have the Docker container read from multiple folders and modules scattered around our system. Let's remind ourselves of our application first.

We have the Flask app written in Python here:

    import os
    import traceback

    from flask import Flask, jsonify, request

    from unet_inferrer import UnetInferrer

    app = Flask(__name__)

    # Endpoint path, host and port can be overridden through environment variables
    APP_ROOT = os.getenv('APP_ROOT', '/infer')
    HOST = "0.0.0.0"
    PORT_NUMBER = int(os.getenv('PORT_NUMBER', 8080))

    # Load the model once at startup so every request reuses it
    u_net = UnetInferrer()


    @app.route(APP_ROOT, methods=["POST"])
    def infer():
        # The client sends the image as JSON under the 'image' key
        data = request.json
        image = data['image']
        return u_net.infer(image)

Apart from the inferrer class, I don't see any other dependencies, so let's include that one as well.

    import tensorflow as tf


    class UnetInferrer:
        def __init__(self):
            self.image_size = 128
            # Folder that holds the exported SavedModel
            self.saved_path = 'unet'
            self.model = tf.saved_model.load(self.saved_path)
            self.predict = self.model.signatures["serving_default"]

        def preprocess(self, image):
            # Resize to the model's input size and scale pixels to [0, 1]
            image = tf.image.resize(image, (self.image_size, self.image_size))
            return tf.cast(image, tf.float32) / 255.0

        def infer(self, image=None):
            tensor_image = tf.convert_to_tensor(image, dtype=tf.float32)
            tensor_image = self.preprocess(tensor_image)
            # Add a batch dimension of 1 in front of (height, width, channels)
            shape = tensor_image.shape
            tensor_image = tf.reshape(tensor_image, [1, shape[0], shape[1], shape[2]])
            pred = self.predict(tensor_image)['conv2d_transpose_4']
            pred = pred.numpy().tolist()
            return {'segmentation_output': pred}
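
For readers who want to see what the scaling and batching steps do to the data without installing Tensorflow, here is a plain-Python sketch on nested lists. It deliberately skips the resize step, and the helper names (`scale_pixels`, `add_batch_dimension`, `shape_of`) and the tiny image are made up for the demo.

```python
def scale_pixels(image):
    """Divide every pixel value by 255.0 so it falls in [0, 1]."""
    return [[[value / 255.0 for value in pixel] for pixel in row] for row in image]


def add_batch_dimension(image):
    """Wrap a single (height, width, channels) image into a batch of one."""
    return [image]


def shape_of(nested):
    """Compute the shape of a regular nested list."""
    shape = []
    while isinstance(nested, list):
        shape.append(len(nested))
        nested = nested[0]
    return shape


tiny_image = [[[0, 255, 51], [255, 0, 0]]]  # a 1x2 RGB image, made up for the demo
batch = add_batch_dimension(scale_pixels(tiny_image))
print(shape_of(batch))
```

The extra leading dimension is what the `tf.reshape` call adds: the serving signature expects a batch of images, even when the batch holds a single one.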

Again, besides Tensorflow I can't see any other dependency, so I guess we're done here.

Oh oh, wait a second, I forgot the saved model.

We should of course include all the trained variables inside the folder as well. And I forgot something else too: the uWSGI config file. So we have our trained Tensorflow model, our Flask application, our uWSGI configuration, and the requirements.txt file. Now we are all set and our folder structure looks like this:

    app/
        unet/
        app.ini
        requirements.txt
        service.py
        unet_inferrer.py

Now that we have our “app” folder, it's time to write our Dockerfile.

Dockerfiles are contracts that provide all the necessary steps Docker needs to take while building an image.

They can vary from five lines of simple commands to hundreds. The great thing about Dockerfiles is that Docker won't execute the whole thing every time. Once the image is built, on any subsequent build it will rerun only the changed line and the commands below it. Therefore we can experiment with different libraries and don't have to wait every time for the entire image to be built.
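
The caching rule can be pictured with a few lines of Python. This is only a toy model under a simplifying assumption: it compares instruction text line by line, whereas the real build cache also looks at the contents of the files copied into the image. The function name `steps_to_rebuild` and the example lines are mine.

```python
def steps_to_rebuild(previous_steps, current_steps):
    """Return the steps that must run again after an edit.

    Simplified rule: everything before the first differing line comes from
    the cache, everything from that line onward is executed again.
    """
    first_change = 0
    for old, new in zip(previous_steps, current_steps):
        if old != new:
            break
        first_change += 1
    return current_steps[first_change:]


previous = [
    "FROM ubuntu",
    "WORKDIR /app",
    "RUN pip install flask",
    "CMD python service.py",
]
# Only the third line is edited, so the first two steps stay cached.
current = previous[:2] + ["RUN pip install flask uwsgi"] + previous[3:]
print(steps_to_rebuild(previous, current))
```

This is also why it pays to put the slow, rarely changing steps near the top of a Dockerfile and the frequently edited ones near the bottom.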

So let's open an empty text file and start building our Dockerfile.

Every Docker image needs to be built on top of another image. This can be a very primitive one such as “ubuntu”, with only the base OS files and basic libraries (the kernel itself is shared with the host), or a high-level one like “TensorFlow”, which is the one we will use today.

FROM tensorflow/tensorflow:2.0.0

Tensorflow provides a variety of images for different versions and different environments (CPU vs GPU). “tensorflow/tensorflow” is the name of the image while “2.0.0” is the tag. Tags are used to differentiate versions of the same image.

Alternatively, we could use a basic Linux image and install all of our dependencies ourselves, but most of the time this is not preferred because the official images are highly optimized in terms of memory consumption and build time.

WORKDIR /app

The WORKDIR line sets the working directory inside the container to the /app folder so that any commands or code executions will happen there. And of course, we need to copy our local folder inside the container, which we can do like this:

ADD . /app

All of our files are now present inside the container, so we should be able to install all the required Python libraries.

RUN pip install -r requirements.txt

Did you notice that we haven't installed Python so far? The reason is that the “TensorFlow” image already contains Python3, pip, and other necessary libraries. To have a peek inside the image, you can visit the Docker Hub website.

Finally, as everything is in place, we can start the uWSGI server with the CMD command. The CMD command is not invoked at build time but at runtime, when the container starts.

CMD ["uwsgi", "app.ini"]
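
Putting the five instructions together, the whole Dockerfile is tiny. As a convenience, here is a sketch that writes it to disk from Python; the helper `write_dockerfile` is my own invention, while the instructions are exactly the ones discussed above.

```python
from pathlib import Path

DOCKERFILE_LINES = [
    "FROM tensorflow/tensorflow:2.0.0",
    "WORKDIR /app",
    "ADD . /app",
    "RUN pip install -r requirements.txt",
    'CMD ["uwsgi", "app.ini"]',
]


def write_dockerfile(folder):
    """Write the Dockerfile into `folder` and return its path."""
    path = Path(folder) / "Dockerfile"
    path.write_text("\n".join(DOCKERFILE_LINES) + "\n")
    return path


# Show the assembled file without touching the disk
print("\n".join(DOCKERFILE_LINES))
```

Calling `write_dockerfile("app")` would place the file next to service.py, which is where the build command expects to find it.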

Writing Dockerfiles is not always as straightforward as you may imagine. For more advanced usage, I would recommend spending some time going through the documentation.

If we haven't missed anything, we can now jump back to the terminal, build the image, and let Docker execute all these steps in order. I will name the image “deep-learning-production” and give it a “1.0” tag to differentiate it from future versions.

$ docker build -t deep-learning-production:1.0 .

Be aware that this may take a while because the TensorFlow image is quite big, so we have to be patient. If everything went fine you should see:

Successfully built SOME_RANDOM_ID

Successfully tagged deep-learning-production:1.0

How to run a deep learning Docker container?

It's finally time to run our container and fire up our server inside it:

$ docker run --publish 80:8080 --name dlp deep-learning-production:1.0

Two things to notice here:

- The “publish” argument will expose the 8080 port of the container to the 80 port of our local system. Basically, every request coming to localhost:80 will be routed to port 8080 inside the container. And remember that 8080 is the uWSGI listening port.
- A good practice is to specify a name when running a container. Otherwise, we need to use the random ID generated by Docker to refer to the container, and this can be quite cumbersome.
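
The order of the two numbers in the “publish” argument is easy to mix up, so here is a tiny sketch that spells it out. `parse_publish` is a hypothetical helper that only handles the simple HOST:CONTAINER form used above; Docker accepts richer forms that this sketch ignores.

```python
def parse_publish(mapping):
    """Split a HOST:CONTAINER port string such as "80:8080" into two ints."""
    host_port, container_port = mapping.split(":")
    return int(host_port), int(container_port)


host_port, container_port = parse_publish("80:8080")
print(f"localhost:{host_port} -> container port {container_port}")
```

The left number is always the port on our machine, the right one is the port the process listens on inside the container.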

If the container started successfully, we will see logs of spawned uWSGI workers, and we can test it by running the client script we built in the previous article and sending an image to the server for inference.
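
In case you don't have the client script at hand, here is a stand-in sketch using only the standard library. It is not the script from the previous article; it simply assumes the defaults shown earlier: the “/infer” route, the “image” key in the JSON body, and the “segmentation_output” key in the response.

```python
import json
import urllib.request


def build_request(image, url="http://localhost:80/infer"):
    """Create a POST request whose JSON body carries the image as nested lists."""
    body = json.dumps({"image": image}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_image(image):
    """Send the image to the running container and return the predicted mask."""
    with urllib.request.urlopen(build_request(image)) as response:
        return json.loads(response.read())["segmentation_output"]
```

With the container up, `send_image(pixels)` would post a nested list of pixel values and hand back the mask as a nested list. The `Content-Type` header matters because Flask only parses `request.json` when the body is declared as JSON.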

Is everything working perfectly? I'm sure it is.

Sum up

So instead of running the server locally, we have developed a container that:

- includes all the necessary dependencies,
- is fully isolated from the rest of our system,
- can be moved intact to another machine, or deployed in the cloud.

I hope that by now you see the flexibility and the simplicity containers provide.

To pass the container to another machine, we can either push the image to a Docker registry so that other people can pull it, or we can simply pass the Dockerfile (together with the app folder) and let the other developer build the image. And we can be sure that everything will be 100% the same.

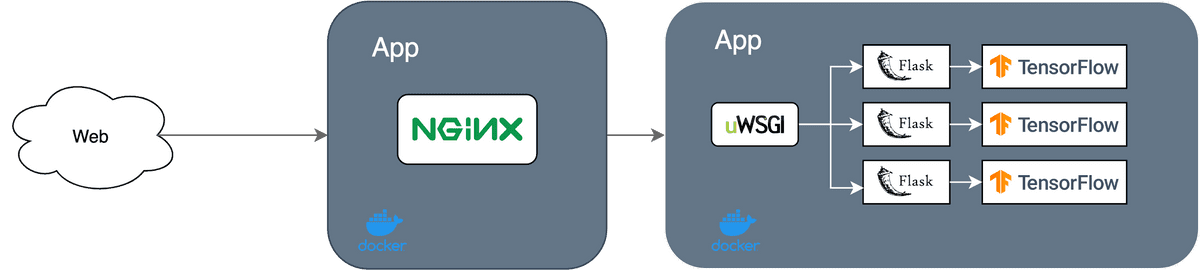

How to set up an Nginx container?

Following the same principles, we can build the Nginx container, which will sit in front of the uWSGI server as a reverse proxy. Again we have to move all the files inside a folder (in this case it is only the Nginx config), construct the Dockerfile, then build and run the image.

The Nginx config (nginx.conf) has the structure below (more details in the previous article):

    server {
        listen 80;

        location / {
            include uwsgi_params;
            uwsgi_pass app:660;
        }
    }

The config essentially tells us that the Nginx server will listen on port 80 and route all the outside requests coming there to the uWSGI server (app) on port 660 via the uwsgi protocol.

Remember that in the uWSGI config file (app.ini), we have to change the listening port from the HTTP port 8080 to a socket port (660) to make it work with Nginx.

    [uwsgi]
    module = service
    socket = :660
    callable = app
    die-on-term = true
    processes = 1
    master = false
    vacuum = true
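
Since the port now has to match in two different files, a quick sanity check can save some head-scratching. The sketch below reads the ini text with Python's `configparser`, which is close enough to uWSGI's own ini format for this simple file but is not the parser uWSGI uses. The helpers `socket_port` and `upstream_port` are names I made up.

```python
import configparser

APP_INI = """
[uwsgi]
module = service
socket = :660
callable = app
die-on-term = true
processes = 1
master = false
vacuum = true
"""


def socket_port(ini_text):
    """Extract the port number from the `socket` option of the [uwsgi] section."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    socket_value = parser["uwsgi"]["socket"]  # ":660"
    return int(socket_value.rsplit(":", 1)[1])


def upstream_port(uwsgi_pass_target):
    """Extract the port from an nginx target such as "app:660"."""
    return int(uwsgi_pass_target.rsplit(":", 1)[1])


print(socket_port(APP_INI) == upstream_port("app:660"))
```

If the two numbers ever drift apart, Nginx will answer with a bad gateway error because nothing is listening where it forwards the requests.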

The Nginx Dockerfile will be quite simple because it does only two things. It pulls the original Nginx image and it replaces the default configuration file with our custom one:

FROM nginx

RUN rm /etc/nginx/conf.d/default.conf

COPY nginx.conf /etc/nginx/conf.d/

And that's it. This time there is no point in running the container alone because it doesn't do anything by itself. Instead, we need to combine these two containers into a single system so they can run simultaneously.

Which brings us to the last part: Docker Compose.

How to define and run multi-container Docker applications using Docker Compose?

Docker Compose is a tool for running multiple containers as a single service.

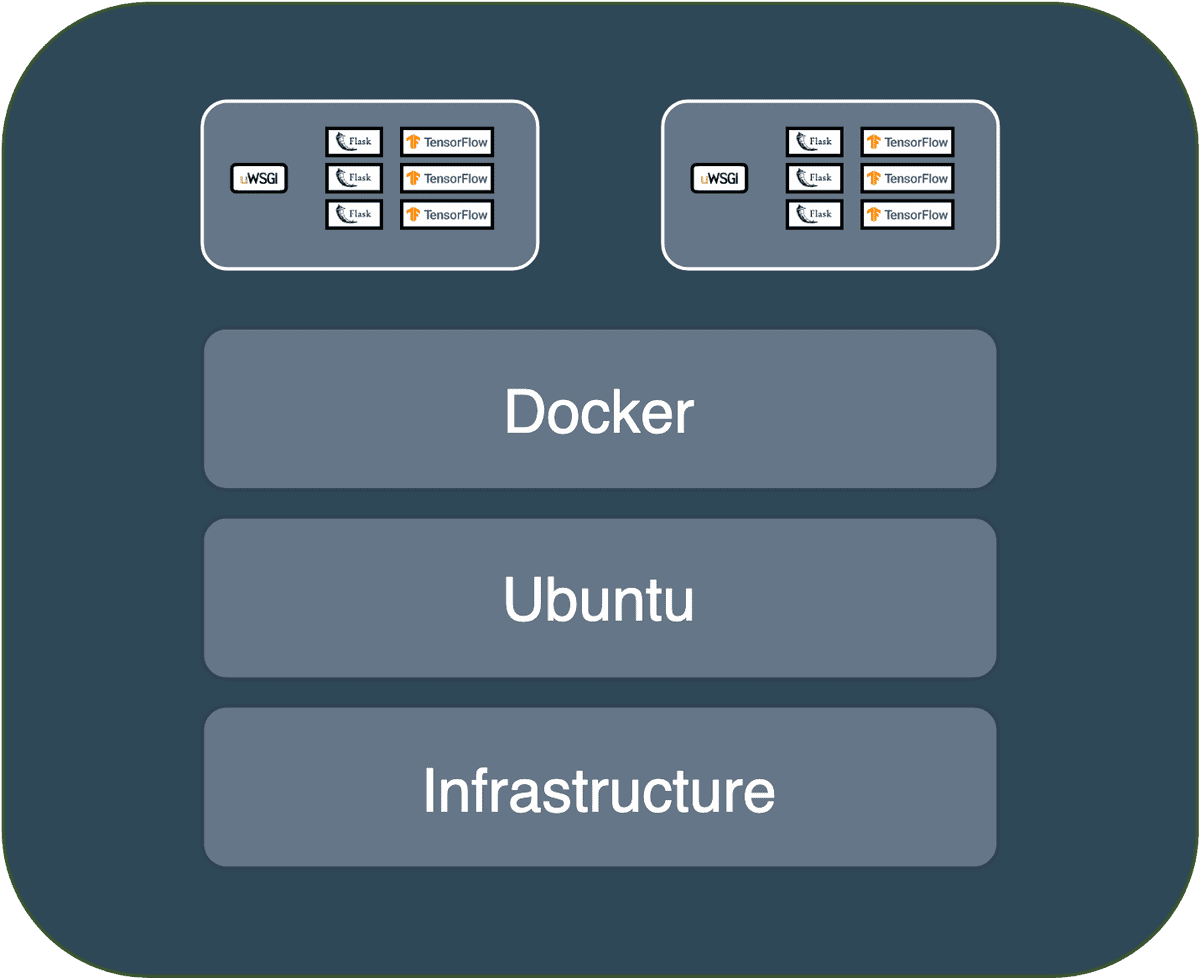

You might be wondering why we need to have multiple containers. Can't we run everything in a single container? For example, wouldn't it be nice to have both Nginx and uWSGI inside the same container?

The answer is no! Because we would lose the ability to have many uWSGI instances but only one Nginx instance. Plus the container would become enormous.

Imagine the use case where our application consists of a database, a backend, a frontend, messaging systems, and task queues. We can't possibly run everything in a single container. This is where Docker Compose comes into play.

From the developer's perspective, Docker Compose is nothing more than a configuration file in which we define all the containers and how they interact with each other.

We can transfer our entire service to another machine by simply moving the Dockerfiles and the docker-compose file and building it there.

Before we begin, we need to install Docker Compose. Again, it's best to follow the instructions in the original docs.

To define the configuration, we create a “docker-compose.yml” file that declares our two containers and the network interactions.

    version: "3.7"

    services:
      app:
        build: ./app
        container_name: deep-learning-production
        restart: always
        expose:
          - 660

      nginx:
        build: ./nginx
        container_name: nginx
        restart: always
        ports:
          - "80:80"

The first thing we need to set up is the Docker Compose version and the main services. Here we have an app service containing the Tensorflow model and the uWSGI server, and the Nginx service.

Each service has a “build” parameter that declares the relative folder path where the service lives and instructs Docker to look there when building the image. Each one also has a custom container name. Inside the Compose network, other services reach a service through its service name (the key under “services”). For example, the nginx config has the following line:

uwsgi_pass app:660

which matches the name of the uWSGI service declared in the yaml file.
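
To double-check that wiring, here is a sketch that mirrors the compose file as a plain Python dictionary (so no YAML library is needed) and verifies that a target such as “app:660” points at a declared service and an exposed port. The dictionary is a hand-written copy of the file above and `check_upstream` is a hypothetical helper.

```python
COMPOSE = {
    "version": "3.7",
    "services": {
        "app": {
            "build": "./app",
            "container_name": "deep-learning-production",
            "restart": "always",
            "expose": [660],
        },
        "nginx": {
            "build": "./nginx",
            "container_name": "nginx",
            "restart": "always",
            "ports": ["80:80"],
        },
    },
}


def check_upstream(compose, target):
    """Check that "service:port" names a declared service and an exposed port."""
    service_name, port = target.split(":")
    service = compose["services"].get(service_name)
    if service is None:
        return False
    return int(port) in service.get("expose", [])


print(check_upstream(COMPOSE, "app:660"))
```

A typo in either the service name or the port makes the check fail, which is exactly the kind of mistake that is tedious to debug once the containers are running.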

We also need to restart the service whenever the container exits due to failure, to make sure that the services are always up, and finally, we need to define our ports (which honestly is the most important part here).

The uWSGI service has to open the 660 port to listen for requests from the Nginx server. If you remember, we defined the exact same socket port inside our app.ini file. Note that the port will be opened inside Docker and it will not be exposed to the outer world.

The Nginx service has to open the 80 port to the outside world and map all requests coming there to the 80 port of the container. The first 80 port is chosen arbitrarily, but the second 80 port is the listening port declared in the Nginx config:

    server {
        listen 80;
        ...

So the flow here is as follows:

- Requests from the outer world arrive at port 80 of our environment,
- they are mapped to the Nginx container's port 80,
- they are routed to the uWSGI port 660,
- uWSGI calls the Flask endpoint and executes the Tensorflow inference,
- uWSGI sends back the response through the same pipeline.
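
The hops listed above can be written down as a small lookup table, which makes it easy to see where each port number comes from. This is only a toy illustration: the names “host”, “nginx”, and “app” are labels for the three stops, and `trace` is a made-up helper, not anything Docker provides.

```python
HOPS = {
    ("host", 80): ("nginx", 80),   # ports: "80:80" in docker-compose.yml
    ("nginx", 80): ("app", 660),   # uwsgi_pass app:660 in nginx.conf
}


def trace(start):
    """Follow the hop table from `start` until no further hop is defined."""
    path = [start]
    while path[-1] in HOPS:
        path.append(HOPS[path[-1]])
    return path


print(trace(("host", 80)))
```

The response simply travels the same list in reverse order.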

We can finally build the Docker network with both services using

$ docker-compose build

and start the Docker containers with

$ docker-compose up

The moment of truth is here. Let's try to hit our network using our client (and make sure that we are using the correct ports).

We run the script with the command below and wait…

python client.py

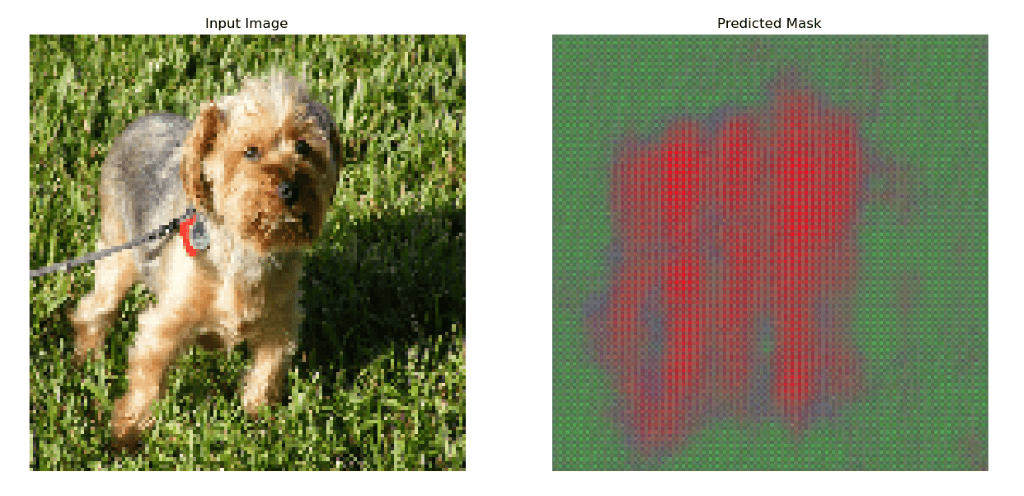

Did it work? It worked on my laptop!! Everything seems to work perfectly and I got back the segmentation mask of a Yorkshire terrier. Awesomeeeeeeeee!!!

Note that you can find the entire code in our Github repository (including code from the previous articles).

As side material, there is an excellent course on Cloud computing offered by the University of Illinois: Cloud Computing Specialization: Cloud Computing Applications, Part 1: Cloud Systems and Infrastructure

What's next?

I have to admit that the last couple of articles were quite technical and we dove into many details somewhat unrelated to machine learning. But this is just part of being a machine learning engineer. Sometimes we will have to deal with stuff like this if we want our models to be used in the real world.

Let's appreciate what we have accomplished so far.

- We transformed our deep learning model into a web application using Flask
- We served the application with uWSGI
- We hid it behind an Nginx reverse proxy for load balancing and security
- We containerized both services using Docker and Docker Compose
- We finally executed the entire system locally.

So what's the next step? Obviously, we want to make the application visible to the world. And to do that we will have to deploy it in the cloud and expose it to the web. Therefore, in the next articles, we will see how to deploy and serve the application in Google Cloud and how to use Kubernetes to orchestrate the containers, monitor them, and scale them up to serve millions of users.

Tchau…


* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.