The famous paper “Attention is all you need” in 2017 changed the way we think about attention. With enough data, matrix multiplications, linear layers, and layer normalization we can perform state-of-the-art machine translation.

Nevertheless, 2020 was definitely the year of transformers! From natural language they have now moved into computer vision tasks. How did we go from attention to self-attention? Why does the transformer work so damn well? What are the critical components for its success?

Read on and find out!

In my opinion, transformers are not so hard to grasp. It is the combination of all the surrounding concepts that may be confusing, including attention. That’s why we will slowly build around all the fundamental concepts.

With Recurrent Neural Networks (RNNs) we used to treat sequences sequentially to keep the order of the sentence in place. To satisfy that design, each RNN component (layer) needs the previous (hidden) output. As such, stacked LSTM computations were performed sequentially.

Until transformers came out! The fundamental building block of a transformer is self-attention. To begin with, we need to get over sequential processing, recurrency, and LSTMs!

How?

By simply changing the input representation!


Representing the input sentence

Sets and tokenization

The transformer revolution started with a simple question: Why don’t we feed the entire input sequence? No dependencies between hidden states! That might be cool!

As an example, take the sentence “Hello, I love you”, which is split into its individual words.

This processing step is usually called tokenization and it is the first of three steps before we feed the input into the model.

So instead of a sequence of elements, we have a set.

Sets are a collection of distinct elements, where the arrangement of the elements in the set does not matter.

In other words, the order is irrelevant. We denote the input set as $X = \{x_1, x_2, \ldots, x_N\}$, where $X \in \mathbb{R}^{N \times d_{in}}$. The elements of the sequence are referred to as tokens.

After tokenization, we project words in a distributed geometrical space, or simply build word embeddings.

Word Embeddings

In general, an embedding is a representation of a symbol (word, character, sentence) in a distributed low-dimensional space of continuous-valued vectors.

Words are not discrete symbols. They are strongly correlated with each other. That’s why when we project them in a continuous Euclidean space we can find associations between them.

Then, depending on the task, we can push word embeddings further away or keep them close together.

Ideally, an embedding captures the semantics of the input by placing semantically similar inputs close together in the embedding space.
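To make the first two steps concrete, here is a minimal sketch of tokenization followed by an embedding lookup. The whitespace tokenizer, the toy vocabulary built from a single sentence, and the embedding size of 8 are all assumptions for illustration; real systems use learned subword tokenizers and much larger vocabularies.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

sentence = "Hello I love you"
tokens = sentence.lower().split()  # naive whitespace tokenization (assumption)

# Hypothetical toy vocabulary built from this one sentence.
vocab = {word: idx for idx, word in enumerate(sorted(set(tokens)))}
token_ids = torch.tensor([vocab[t] for t in tokens])  # shape: (4,)

d_model = 8  # tiny embedding size, chosen only for readability
embedding = nn.Embedding(num_embeddings=len(vocab), embedding_dim=d_model)

x = embedding(token_ids)  # one trainable vector per token, shape: (4, d_model)
print(tokens, tuple(x.shape))
```

The embedding table starts out random; it only captures semantics after training on a task.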

In natural language, we can find similar word meanings or even similar syntactic structures (e.g. objects get clustered together). In any case, when you project them in 2D or 3D space you can visually identify some clusters.

To gain a practical understanding of word embeddings, try playing around with this notebook.

Moving on, we will devise a neat trick to provide some notion of order in the set.

Positional encodings

When you convert a sequence into a set (tokenization), you lose the notion of order.

Can you find the order of words (tokens) from the sequence: “Hello I love you”? Probably yes! But what about 30 unordered words?

Remember, machine learning is all about scale. The neural network certainly cannot understand any order in a set.

Since transformers process sequences as sets, they are, in theory, permutation invariant.

Let’s help them have a sense of order by slightly altering the embeddings based on the position. Formally, positional encoding is a set of small constants, which are added to the word embedding vector before the first self-attention layer.

So if the same word appears in a different position, the actual representation will be slightly different, depending on where it appears in the input sentence.


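In the transformer paper, the authors came up with the sinusoidal function for the positional encoding. The sine function tells the model to pay attention to a particular wavelength $\lambda$. Given a signal $y(x) = \sin(kx)$, the wavelength is $\lambda = \frac{2\pi}{k}$. In our case, the argument of the sinusoid is the position $pos$ in the sentence, and the wavelength depends on the embedding dimension index $i$. The index $i$ is also used to distinguish between even and odd dimensions.

Mathematically:

$$PE_{(pos, 2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

$$PE_{(pos, 2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

For the record, $d_{model} = 512$, which is the dimensionality of the embedding vectors.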

A 2D visualization of a positional encoding. Image from The Transformer Family by Lil’Log

This is in contrast to recurrent models, where we have an order but we are struggling to pay attention to tokens that are not close enough.
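The sinusoidal encoding from the transformer paper can be sketched in a few lines. The function name `sinusoidal_positional_encoding`, the maximum length of 50, and the random stand-in embeddings are assumptions for illustration; the sketch also assumes an even `d_model`.

```python
import math
import torch

def sinusoidal_positional_encoding(max_len: int, d_model: int) -> torch.Tensor:
    """Return a (max_len, d_model) matrix of sinusoidal positional encodings."""
    position = torch.arange(max_len, dtype=torch.float32).unsqueeze(1)  # (max_len, 1)
    # 1 / 10000^(2i / d_model) for i = 0 .. d_model/2 - 1, computed in log space
    div_term = torch.exp(
        torch.arange(0, d_model, 2, dtype=torch.float32) * (-math.log(10000.0) / d_model)
    )
    pe = torch.zeros(max_len, d_model)
    pe[:, 0::2] = torch.sin(position * div_term)  # even dimensions use sine
    pe[:, 1::2] = torch.cos(position * div_term)  # odd dimensions use cosine
    return pe

pe = sinusoidal_positional_encoding(max_len=50, d_model=512)
word_embeddings = torch.randn(50, 512)  # stand-in for real word embeddings
x = word_embeddings + pe                # added before the first self-attention layer
```

Because the encoding is a fixed function of the position, it needs no training and can be precomputed once.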

Fundamental concepts of the Transformer

This section provides some necessary background. Feel free to skip it and jump straight to self-attention if you already feel comfortable with the concepts.

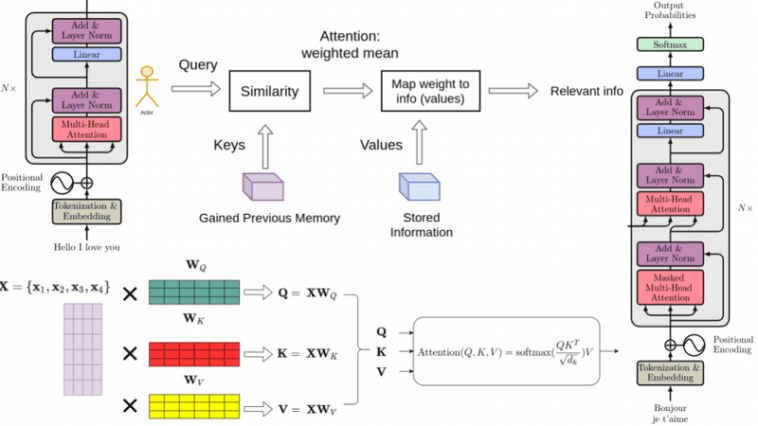

Feature-based attention: The Key, Value, and Query

Key-value-query concepts come from information retrieval systems. I found it extremely helpful to clarify these concepts first.

Let’s start with an example of searching for a video on YouTube.

When you search (query) for a particular video, the search engine will map your query against a set of keys (video title, description, etc.) associated with possible stored videos. Then the algorithm will present you the best-matched videos (values). This is the foundation of content/feature-based lookup.

Bringing this idea closer to the transformer’s attention: in single-video retrieval, the attention is the choice of the video with the maximum relevance score.

But we can relax this idea. To this end, the main difference between attention and retrieval systems is that we introduce a more abstract and smooth notion of ‘retrieving’ an object. By defining a degree of similarity (weight) between our representations (videos for YouTube) we can weight our query.

Instead of choosing where to look according to the position within a sequence, we now attend to the content that we want to look at!

So, by moving one step forward, we further split the data into key-value pairs.

We use the keys to define the attention weights to look at the data and the values as the information that we will actually get.

For the so-called mapping, we need to quantify similarity, which we will see next.

Vector similarity in high dimensional spaces

In geometry, the inner vector product is interpreted as a vector projection. One way to define vector similarity is by computing the normalized inner product. In low dimensional space, such as 2D, this would correspond to the cosine value.

Mathematically:

$$sim(a, b) = \cos(a, b) = \frac{a \cdot b}{|a| \, |b|} = \frac{1}{s} \, a \cdot b$$

where $s = |a| \, |b|$ is the scaling factor.

We can associate the similarity between vectors that represent anything (e.g. animals) by calculating the scaled dot product, namely the cosine of the angle.

In transformers, this is the most basic operation and is handled by the self-attention layer as we’ll see.
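As a quick sanity check of the formula, the following sketch computes the cosine similarity by hand and compares it with PyTorch's built-in `torch.nn.functional.cosine_similarity`. The three toy vectors and their names are made up for illustration.

```python
import torch
import torch.nn.functional as F

def cosine_sim(a: torch.Tensor, b: torch.Tensor) -> torch.Tensor:
    """Dot product scaled by the product of the two vector magnitudes."""
    return torch.dot(a, b) / (a.norm() * b.norm())

cat = torch.tensor([1.0, 2.0, 0.0])
kitten = torch.tensor([2.0, 4.0, 0.0])  # same direction as `cat`, different length
car = torch.tensor([0.0, 0.0, 3.0])     # orthogonal to `cat`

print(cosine_sim(cat, kitten).item())   # close to 1: same direction
print(cosine_sim(cat, car).item())      # 0: nothing in common
print(F.cosine_similarity(cat, kitten, dim=0).item())  # built-in equivalent
```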

Self-Attention: The Transformer encoder

What is self-attention?

“Self-attention, sometimes called intra-attention, is an attention mechanism relating different positions of a single sequence in order to compute a representation of the sequence.” ~ Ashish Vaswani et al. [2] from Google Brain.

Self-attention enables us to find correlations between different words of the input indicating the syntactic and contextual structure of the sentence.

Let’s take the input sequence “Hello I love you” for example. A trained self-attention layer will associate the word “love” with the words “I” and “you” with a higher weight than the word “Hello”. From linguistics, we know that these words share a subject-verb-object relationship and that’s an intuitive way to understand what self-attention will capture.

In practice, the Transformer uses 3 different representations: the Queries, Keys and Values of the embedding matrix. This can easily be done by multiplying our input $X$ with 3 different weight matrices $W_Q$, $W_K$ and $W_V$. In essence, it is just a matrix multiplication on the original word embeddings. The resulting dimension $d_k$ will be smaller than the embedding dimension $d_{model}$:

$$Q = X W_Q, \quad K = X W_K, \quad V = X W_V$$

Having the Query, Value and Key matrices, we can now apply the self-attention layer as:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$

In the original paper, the scaled dot-product attention was chosen as a scoring function to represent the correlation between two words (the attention weight). Note that we can also utilize another similarity function. The $\frac{1}{\sqrt{d_k}}$ is here simply as a scaling factor that keeps the dot products from growing too large, which would push the softmax into regions with very small gradients.

Following the database-query paradigm we introduced before, the term $QK^T$ simply finds the similarity of the searching query with an entry in a database. Finally, we apply a softmax function to get the final attention weights as a probability distribution.

Remember that we have distinguished the Keys ($K$) from the Values ($V$) as distinct representations. Thus, the final representation is the self-attention matrix $\text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)$ multiplied with the Value ($V$) matrix.

Personally, I like to think of the attention matrix as where to look and the Value matrix as what I actually want to get.
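A minimal single-head sketch of scaled dot-product self-attention might look like the following. The toy sizes (4 tokens, `d_model = 16`, `d_k = 8`) and the names `to_q`, `to_k`, `to_v` are assumptions for illustration, and batching is left out for clarity.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

n_tokens, d_model, d_k = 4, 16, 8   # toy sizes (assumptions)
x = torch.randn(n_tokens, d_model)  # word embeddings + positional encodings

# Three separate trainable projections for queries, keys and values.
to_q = nn.Linear(d_model, d_k, bias=False)
to_k = nn.Linear(d_model, d_k, bias=False)
to_v = nn.Linear(d_model, d_k, bias=False)

def self_attention(x: torch.Tensor):
    q, k, v = to_q(x), to_k(x), to_v(x)                # each (n_tokens, d_k)
    scores = q @ k.transpose(-2, -1) / math.sqrt(d_k)  # (n_tokens, n_tokens)
    weights = scores.softmax(dim=-1)                   # "where to look": rows sum to 1
    return weights @ v, weights                        # "what we get": (n_tokens, d_k)

out, attn = self_attention(x)
print(tuple(out.shape), tuple(attn.shape))
```

Each row of `attn` is a probability distribution over all tokens of the sentence, including the token itself.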

Notice any differences from vector similarity?

First, we have matrices instead of vectors and consequently matrix multiplications. Second, we don’t scale down by the vector magnitude but by $\sqrt{d_k}$, the square root of the key dimension. Unlike the vector magnitudes, it is a constant that does not depend on the input, so it stays the same even though sentence size varies 🙂

What would we do next?

Normalization and short skip connections, similar to processing a tensor after convolution or recurrency.

Short residual skip connections

In language, there is a significant notion of a wider understanding of the world and our ability to combine ideas. Humans extensively utilize these top-down influences (our expectations) to combine words in different contexts. In a very rough manner, skip connections give a transformer a tiny ability to allow the representations of different levels of processing to interact.

With the forming of multiple paths, we can “pass” our higher-level understanding of the last layers to the previous layers. This allows us to re-modulate how we understand the input. Again, this is the same idea as human top-down understanding, which is nothing more than expectations.

For a more detailed and general overview, consult our article on skip connections.

Layer Normalization

Next, let’s open the Layer Norm black box.

In Layer Normalization (LN), the mean and variance are computed across channels and spatial dims. In language, each word is a vector. Since we are dealing with vectors we only have one spatial dimension.

In a 4D tensor with merged spatial dimensions, we can visualize this with the following figure:

An illustration of Layer Norm.
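For word vectors, a common way to apply Layer Norm in PyTorch is `nn.LayerNorm` over the last (feature) dimension, so every token is normalized with its own statistics. The sketch below, with made-up tensor sizes, checks the built-in layer against a manual computation.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(2, 4, 16)  # (batch, tokens, features), toy sizes

layer_norm = nn.LayerNorm(16)  # normalizes over the last (feature) dimension
y = layer_norm(x)

# Manual version: mean and variance are computed per token, over its features only.
mean = x.mean(dim=-1, keepdim=True)
var = x.var(dim=-1, unbiased=False, keepdim=True)
y_manual = (x - mean) / torch.sqrt(var + layer_norm.eps)

# At initialization the learnable scale is 1 and the shift is 0, so both agree.
print(torch.allclose(y, y_manual, atol=1e-5))
```

Unlike batch normalization, the result for one sentence does not depend on the other sentences in the batch.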

After applying a normalization layer and forming a residual skip connection we are here:


Even though this could be a stand-alone building block, the creators of the transformer add another MLP (see below) on top and renormalize it along with another skip connection.

The MLP: 2 linear layers + non-linear activation

Here, I want to clarify the linear transformation layer. There are a lot of fancy ways to say trainable matrix multiplication: linear layer (PyTorch), dense layer (Keras), feed-forward layer (old ML books), fully connected layer. For this tutorial, we will simply say linear layer which is:

$$y = xW^T + b$$

Where $W$ is a matrix and $y$, $x$, $b$ are vectors.

In fact, they add two linear layers with dropout and non-linearities in between. This is usually referred to as MLP, which stands for multilayer perceptron.

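    import torch.nn as nn

    dim = 512
    dim_linear_block = 512 * 4  # inner dimension, 4x the model dimension
    dropout = 0.1  # dropout probability; 0.1 is the base-model value in the paper

    mlp = nn.Sequential(
        nn.Linear(dim, dim_linear_block),  # project up to the wider space
        nn.ReLU(),  # nn.Sequential needs a module instance, not the class
        nn.Dropout(dropout),
        nn.Linear(dim_linear_block, dim),  # project back to the model dimension
        nn.Dropout(dropout),
    )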

The main intuition is that they project the output of self-attention into a higher dimensional space (4x in the paper). This is thought to help against bad initializations and rank collapse. We will depict it in the diagrams simply as Linear.

This is the encoder part of the transformer with N such building blocks, as depicted below:


Actually this is almost the encoder of the transformer. There is one difference: multi-head attention.

The core building block: Multi-head attention and parallel implementation

In the original paper, the authors expand on the idea of self-attention to multi-head attention. In essence, we run through the attention mechanism several times.

Each time, we map the independent set of Key, Query, Value matrices into different lower dimensional spaces and compute the attention there (the output is called a “head”). The mapping is achieved by multiplying each matrix with a separate weight matrix, denoted as $W_i^K, W_i^Q \in \mathbb{R}^{d_{model} \times d_k}$ and $W_i^V \in \mathbb{R}^{d_{model} \times d_k}$.

To compensate for the extra complexity, the output vector size is divided by the number of heads. Specifically, in the vanilla transformer, they use $d_{model} = 512$ and $h = 8$ heads, which gives us vector representations of size 64. Now, the model has multiple independent paths (ways) to understand the input.

The heads are then concatenated and transformed using a square weight matrix $W^O \in \mathbb{R}^{d_{model} \times d_{model}}$, since $d_{model} = h \, d_k$.

Putting it all together we get:

$$\text{MultiHead}(Q, K, V) = \text{Concat}(\text{head}_1, \ldots, \text{head}_h) \, W^O$$

where again:

$$\text{head}_i = \text{Attention}(Q W_i^Q, K W_i^K, V W_i^V)$$

Since heads are independent from each other, we can perform the self-attention computation in parallel on different workers.

But why go through all this trouble?

The intuition behind multi-head attention is that it allows us to attend to different parts of the sequence differently each time. This practically means that:

- The model can better capture positional information because each head will attend to different segments of the input. The combination of them will give us a more robust representation.

- Each head will capture different contextual information as well, by correlating words in a unique manner.

To quote the original paper [2]:

“Multi-head attention allows the model to jointly attend to information from different representation subspaces at different positions. With a single attention head, averaging inhibits this.”

We will depict Multi-head self-attention in our diagrams like this:


To get your mind around multi-head attention, feel free to check out our PyTorch implementation using the einsum notation.
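For readers who prefer plain tensor operations over einsum, here is a compact sketch of multi-head self-attention. It is not the implementation referenced above: the class name `MultiHeadSelfAttention` and the fused `to_qkv` projection (which stacks all per-head weight matrices into one linear layer) are illustrative choices, and masking and dropout are omitted.

```python
import math
import torch
import torch.nn as nn

class MultiHeadSelfAttention(nn.Module):
    def __init__(self, d_model: int = 512, heads: int = 8):
        super().__init__()
        assert d_model % heads == 0, "d_model must be divisible by the number of heads"
        self.heads = heads
        self.d_k = d_model // heads  # 512 / 8 = 64 features per head
        # One fused projection holding every head's query, key and value weights.
        self.to_qkv = nn.Linear(d_model, 3 * d_model, bias=False)
        self.w_o = nn.Linear(d_model, d_model, bias=False)  # the square output matrix

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, n, _ = x.shape
        q, k, v = self.to_qkv(x).chunk(3, dim=-1)  # each (b, n, d_model)
        # Split the feature dimension into heads: (b, heads, n, d_k)
        q, k, v = (
            t.reshape(b, n, self.heads, self.d_k).transpose(1, 2) for t in (q, k, v)
        )
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_k)  # (b, heads, n, n)
        out = scores.softmax(dim=-1) @ v                        # (b, heads, n, d_k)
        # Concatenate the heads back into one vector per token.
        out = out.transpose(1, 2).reshape(b, n, self.heads * self.d_k)
        return self.w_o(out)

mhsa = MultiHeadSelfAttention()
y = mhsa(torch.randn(2, 10, 512))  # (batch, tokens, d_model)
print(tuple(y.shape))
```

All heads are computed in a single batched matrix multiplication, which is one way to exploit their independence on a single device.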

Sum up: the Transformer encoder

To process a sentence we need these 3 steps:

- Word embeddings of the input sentence are computed simultaneously.

- Positional encodings are then applied to each embedding resulting in word vectors that also include positional information.

- The word vectors are passed to the first encoder block.

Each block consists of the following layers in the same order:

- A multi-head self-attention layer to find correlations between all pairs of words in a sentence.

- A normalization layer

- A residual connection around the previous two sublayers

- An MLP (remember: 2 linear layers + non-linear activation)

- A second normalization layer

- A second residual connection

Note that the above block can be replicated several times to form the Encoder. In the original paper, the encoder is composed of 6 identical blocks.


A small detail: Even though the original paper states that the normalization layer is applied after the skip connection, in practice the exact opposite is common. This is usually termed “prenorm”: the normalization is applied to the input of each sublayer, before the skip connection is added. On the other hand, when Layer norm is used after the skip connection it is called “postnorm”. My advice is don't bother much about it at this stage.
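The encoder block summarized above, including the prenorm/postnorm choice, can be sketched as follows. This is an illustrative assembly built on PyTorch's `nn.MultiheadAttention`, not the reference implementation; the class name `EncoderBlock`, the `prenorm` flag and the default hyperparameters are assumptions.

```python
import torch
import torch.nn as nn

class EncoderBlock(nn.Module):
    def __init__(self, dim=512, heads=8, dim_linear_block=2048, dropout=0.1, prenorm=False):
        super().__init__()
        self.prenorm = prenorm
        self.attn = nn.MultiheadAttention(dim, heads, dropout=dropout, batch_first=True)
        self.norm_1 = nn.LayerNorm(dim)
        self.norm_2 = nn.LayerNorm(dim)
        self.mlp = nn.Sequential(
            nn.Linear(dim, dim_linear_block), nn.ReLU(), nn.Dropout(dropout),
            nn.Linear(dim_linear_block, dim), nn.Dropout(dropout),
        )

    def _attend(self, x):
        # Self-attention: queries, keys and values all come from the same input.
        out, _ = self.attn(x, x, x, need_weights=False)
        return out

    def forward(self, x):
        if self.prenorm:
            # Prenorm: normalize the sublayer input, then add the skip connection.
            x = x + self._attend(self.norm_1(x))
            return x + self.mlp(self.norm_2(x))
        # Postnorm (as in the original paper): add the skip connection, then normalize.
        x = self.norm_1(x + self._attend(x))
        return self.norm_2(x + self.mlp(x))

encoder = nn.Sequential(*[EncoderBlock() for _ in range(6)])  # 6 identical blocks
encoder.eval()  # disable dropout for a deterministic forward pass
with torch.no_grad():
    y = encoder(torch.randn(2, 10, 512))  # (batch, tokens, dim)
print(tuple(y.shape))
```

The input and output shapes match, which is what makes stacking an arbitrary number of blocks possible.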

Let’s see what might be different in the decoder part.

Transformer decoder: what is different?

The decoder consists of all the aforementioned components plus two novel ones. As before:

- The output sequence is fed in its entirety and word embeddings are computed

- Positional encodings are again applied

- And the vectors are passed to the first Decoder block

Each decoder block includes:

- A Masked multi-head self-attention layer

- A normalization layer followed by a residual connection

- A new multi-head attention layer (known as Encoder-Decoder attention)

- A second normalization layer and a residual connection

- A linear layer and a third residual connection

The decoder block appears again 6 times. The final output is transformed through a final linear layer and the output probabilities are calculated with the standard softmax function.


The output probabilities predict the next token in the output sentence. How? In essence, we assign a probability to each word in the French language and we simply keep the one with the highest score.

To put things into perspective, the original model was trained on the WMT 2014 English-French dataset consisting of 36M sentences and 32000 tokens.
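The final linear layer, softmax, and "keep the highest score" step can be sketched like this. The random `decoder_out` tensor stands in for a real decoder output and `to_logits` is a hypothetical name for the final linear layer; picking the argmax is the simplest (greedy) strategy.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
d_model, vocab_size = 512, 32000  # vocabulary size as quoted above
to_logits = nn.Linear(d_model, vocab_size)  # the final linear layer

decoder_out = torch.randn(1, 3, d_model)  # (batch, tokens generated so far, d_model)

# Only the last position is needed to predict the next token.
probs = to_logits(decoder_out[:, -1]).softmax(dim=-1)  # (batch, vocab_size)
next_token_id = probs.argmax(dim=-1)                   # greedy choice
print(tuple(probs.shape), next_token_id.shape)
```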

While most concepts of the decoder are already familiar, there are two more that we need to discuss. Let’s start with the Masked multi-head self-attention layer.

Masked Multi-head attention

In case you haven’t realized, in the decoding stage, we predict one word (token) after another. In NLP problems like machine translation, sequential token prediction is unavoidable. As a result, the self-attention layer needs to be modified in order to consider only the output sentence that has been generated so far.

In our translation example, the input of the decoder on the third pass will be “Bonjour”, “je”, …

As you can tell, the difference here is that we don’t know the whole sentence because it hasn’t been produced yet. That’s why we need to disregard the unknown words. Otherwise, the model would just copy the next word! To achieve this, we mask the next word embeddings (by setting them to $-\infty$).

Mathematically we have:

$$\text{MaskedAttention}(Q, K, V) = \text{softmax}\left(\frac{QK^T + M}{\sqrt{d_k}}\right)V$$

where the matrix $M$ (mask) consists of zeros and $-\infty$.

Zeros will become ones with the exponential while negative infinities become zeros.

This effectively has the same effect as removing the corresponding connection. The remaining principles are exactly the same as the encoder’s attention. And once again, we can implement them in parallel to speed up the computations.

Obviously, the mask will change for every new token we compute.
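A small sketch of the masking trick, assuming a single head and no batching. The helper names `causal_mask` and `masked_attention` are illustrative; the mask is added after scaling, which is equivalent to the formula above because $-\infty$ stays $-\infty$ and zero stays zero under division by a constant.

```python
import math
import torch

def causal_mask(n: int) -> torch.Tensor:
    """(n, n) matrix with 0 on and below the diagonal and -inf above it."""
    return torch.triu(torch.full((n, n), float("-inf")), diagonal=1)

def masked_attention(q, k, v):
    d_k = q.size(-1)
    scores = q @ k.transpose(-2, -1) / math.sqrt(d_k)
    # Adding -inf before the softmax gives future positions a weight of exactly 0.
    weights = (scores + causal_mask(q.size(-2))).softmax(dim=-1)
    return weights @ v, weights

torch.manual_seed(0)
q = k = v = torch.randn(4, 8)  # 4 tokens, 8 features (toy sizes)
out, weights = masked_attention(q, k, v)
print(weights)  # lower-triangular: token t only attends to tokens 0..t
```

During training the full mask lets all positions of the output sentence be processed in one pass, while still hiding the future from each of them.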

Encoder-Decoder attention: where the magic happens

This is actually where the decoder processes the encoded representation. The output representation generated by the encoder is passed to another attention layer alongside the result of the previous Masked Multi-head attention block.

The intuition behind the encoder-decoder attention layer is to combine the input and output sentence. The encoder’s output encapsulates the final embedding of the input sentence. It is like our database. So we will use the encoder output to produce the Key and Value matrices. On the other hand, the output of the Masked Multi-head attention block contains the so far generated new sentence and is represented as the Query matrix in the attention layer. Again, it is the “search” in the database.

The encoder-decoder attention is trained to associate the input sentence with the corresponding output word.

It will eventually determine how related each English word is with respect to the French words. This is essentially where the mapping between English and French is happening.

Notice that the output of the last block of the encoder will be used in each decoder block.
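The query/key/value roles in encoder-decoder attention can be illustrated with PyTorch's `nn.MultiheadAttention`. The random tensors below are stand-ins for a real encoder output and a real masked self-attention output, and the token counts are made up.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
d_model, heads = 512, 8
cross_attn = nn.MultiheadAttention(d_model, heads, batch_first=True)

encoder_out = torch.randn(1, 4, d_model)  # e.g. 4 English tokens from the last encoder block
decoder_x = torch.randn(1, 3, d_model)    # e.g. 3 French tokens generated so far

# Queries come from the decoder; keys and values come from the encoder ("database").
out, weights = cross_attn(query=decoder_x, key=encoder_out, value=encoder_out)
print(tuple(out.shape), tuple(weights.shape))
```

The returned `weights` has one row per output token and one column per input token, which is the English-French association described above.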

Intuitions on why transformers work so damn well

- Distributed and independent representations at each block: Each transformer block has $h = 8$ contextualized representations. Intuitively, you can think of it as the multiple feature maps of a convolution layer that capture different features from the image. The difference with convolutions is that here we have multiple views (linear reprojections) to other spaces. This is of course possible by initially representing words as vectors in a Euclidean space (and not as discrete symbols).

- The meaning heavily depends on the context: This is exactly what self-attention is all about! We associate relationships between word representations expressed by the attention weights. There is no notion of locality since we naturally let the model make global associations.

- Multiple encoder and decoder blocks: With more layers, the model makes more abstract representations. Similar to stacking recurrent or convolution blocks we can stack multiple transformer blocks. The first block associates word-vector pairs, the second pairs of pairs, the third pairs of pairs of pairs, and so on. In parallel, the multiple heads focus on different segments of the pairs. This is analogous to the receptive field but in terms of pairs of distributed representations.

- Combination of high and low-level information: with skip-connections of course! They enable top-down understanding to flow back with the multiple gradient paths that flow backward.

Self-attention VS linear layers VS convolutions

What is the difference between attention and a feedforward layer? Don't linear layers do exactly the same operations to an input vector as attention?

Good question! The answer is no if you delve deep into the concepts.

You see, the values of the self-attention weights are computed on the fly. They are data-dependent dynamic weights because they change dynamically in response to the data (fast weights).

For example, each word in the translated sequence (Bonjour, je t’aime) will attend differently with respect to the input.

On the other hand, the weights of a feedforward (linear) layer change very slowly with stochastic gradient descent. In convolutions, we further constrict the (slow) weight to have a fixed size, namely the kernel size.
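The contrast between fast and slow weights can be seen in a tiny experiment. This is a simplified sketch: the helper `attention_weights` uses the inputs directly as queries and keys (no learned projections), and the sizes are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
d = 8
linear = nn.Linear(d, d, bias=False)

def attention_weights(x: torch.Tensor) -> torch.Tensor:
    # Simplest possible case: queries and keys are the inputs themselves.
    return (x @ x.T / d ** 0.5).softmax(dim=-1)

x1, x2 = torch.randn(4, d), torch.randn(4, d)  # two different "sentences"

w_before = linear.weight.clone()
_ = linear(x1), linear(x2)  # the same weight matrix is applied to both inputs
a1, a2 = attention_weights(x1), attention_weights(x2)

print(torch.equal(w_before, linear.weight))  # slow weights: unchanged by the forward pass
print(torch.allclose(a1, a2))                # fast weights: recomputed for each input
```

The linear layer's matrix only moves when an optimizer updates it, whereas the attention matrix is a new function of every input.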

Conclusion

If you felt that you gained a new insight from this article, we kindly ask you to share it with your colleagues, friends, or on your social page. As a follow-up reading, check out how you can implement a transformer with multi-head self-attention from scratch!

Acknowledgments

For the visualizations, I used the awesome repo of Renato Negrinho. Most of my enlightenment on the transformer architecture came from the lecture of Felix Hill [1]. It is one of the very few resources where you can learn more about intuitions rather than pure math.

Cited as

@article{adaloglou2021transformer,
  title = "Transformers in Computer Vision",
  author = "Adaloglou, Nikolas",
  journal = "https://theaisummer.com/",
  year = "2021",
  howpublished = {https://github.com/The-AI-Summer/self-attention-cv},
}

References

[1] DeepMind’s deep learning videos 2020 with UCL, Lecture: Deep Learning for Natural Language Processing, Felix Hill

[2] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., … & Polosukhin, I. (2017). Attention is all you need. In Advances in neural information processing systems (pp. 5998-6008).

[3] Stanford CS224N: NLP with Deep Learning, Winter 2019, Lecture 14 – Transformers and Self-Attention

[4] CS480/680 Lecture 19: Attention and Transformer Networks by Pascal Poupart

[5] Neural Machine Translation and Models with Attention – Stanford
