Why should I care about Kubernetes? I don't want to be a DevOps Engineer. I'm just interested in Machine Learning and building models. I'm sure that many folks have this thought in their minds. While this is partially true, I believe that having a basic understanding of Infrastructure and DevOps is a must for many practitioners. Why?

Because models are useless unless they are deployed into production. And even this is not enough. We then have to maintain them. We need to care about things such as scalability, A/B testing, and retraining. MLOps is an ongoing effort to find a standardized solution that solves all of them. Kubernetes seems to be the common denominator for many of those solutions, and that's why I think it would be beneficial for many to write this article.

Here we will explore:

- What is Kubernetes?
- The basic principles behind it
- Why it might be the best option to deploy Machine Learning applications
- What features it provides to help us maintain and scale our infrastructure
- How to set up a simple Kubernetes cluster in Google cloud

As far as Google cloud is concerned, it's an obvious choice because Kubernetes was originally built by a team at Google, and Google Kubernetes Engine (GKE) is highly coupled with it (more on GKE later).

What is Kubernetes?

In a previous article, we discussed what containers are and what advantages they provide over classic methods such as Virtual Machines (VMs). To sum it up, containers give us isolation, portability, easy experimentation, and consistency over different environments. Plus, they are far more lightweight than VMs. If you are convinced about using containers and Docker, Kubernetes will come as a natural choice.

Kubernetes is a container orchestration system that automates deployment, scaling, and management of containerized applications.

In other words, it helps us handle multiple containers with the same or different applications using declarative configurations (config files).

Because of my Greek origin, I couldn't hold myself from telling you that Kubernetes means helmsman or captain in Greek. It is the person/system that controls the whole ship and makes sure that everything is working as expected.

Why use Kubernetes

But why use Kubernetes at all?

- It manages the entire container lifecycle, from creation to deletion
- It provides a high level of abstraction using configuration files
- It maximizes hardware utilization
- It is an Infrastructure as Code (IaC) framework, which means that everything is an API call, whether it's scaling containers or provisioning a load balancer

Beyond the high-level abstractions it provides, it also comes with some very important features that we use in our everyday ML DevOps life:

- Scheduling: it decides where and when containers should run
- Lifecycle and health: it ensures that all containers are up at all times and it spins up new ones when an old container dies
- Scaling: it provides an easy way to scale containers up or down, manually or automatically (autoscaling)
- Load balancing
- Logging and monitoring systems
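To make the "lifecycle and health" idea a bit more concrete, here is a minimal Python sketch of a reconciliation loop: we declare how many pods we want, and a controller keeps nudging the actual state towards it. `ToyCluster` and `reconcile` are hypothetical names invented for this illustration; they are not part of Kubernetes, whose real controllers are far more involved.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyCluster:
    """Hypothetical stand-in for a cluster: it only remembers which pods are alive."""
    pods: set = field(default_factory=set)
    _ids: count = field(default_factory=count)

    def start_pod(self) -> str:
        # Give every new pod a fresh, never-reused name.
        name = f"pod-{next(self._ids)}"
        self.pods.add(name)
        return name


def reconcile(cluster: ToyCluster, desired_replicas: int) -> None:
    """Bring the number of live pods back to the declared (desired) count."""
    while len(cluster.pods) < desired_replicas:
        cluster.start_pod()
    while len(cluster.pods) > desired_replicas:
        cluster.pods.pop()


cluster = ToyCluster()
reconcile(cluster, desired_replicas=3)  # initial rollout: pod-0, pod-1, pod-2
cluster.pods.discard("pod-1")           # a container dies
reconcile(cluster, desired_replicas=3)  # the controller notices and replaces it
print(sorted(cluster.pods))             # ['pod-0', 'pod-2', 'pod-3']
```

The point of the sketch is that we never say "start a pod"; we only state the desired count and let the loop figure out the difference.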

If you aren't sure we need all of them, make sure to check the previous article of the series, where we go through a journey to scale a machine learning application from 1 to millions of users.

Honestly, the trickiest part about Kubernetes is its naming of the different components. Once you get a grasp of it, everything will just click in your mind. In fact, it's not that different from our standard software systems.

Kubernetes basics

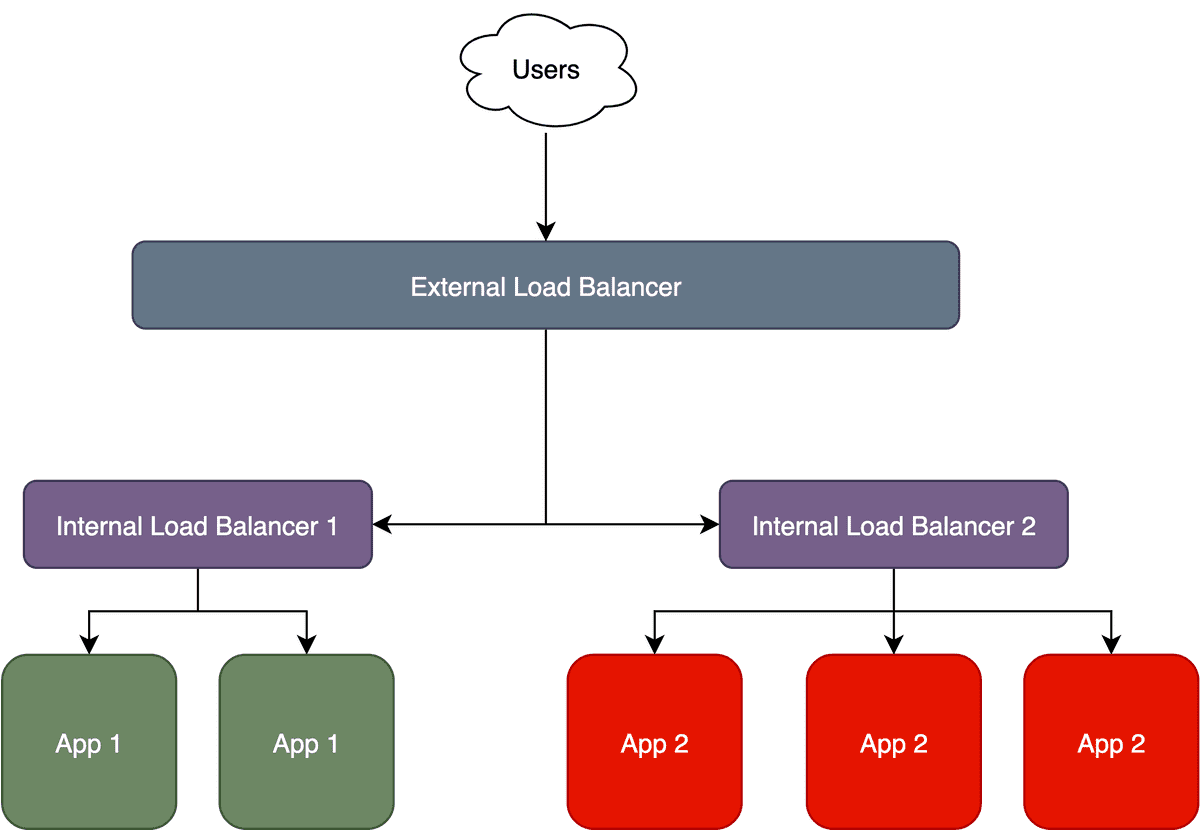

In modern web applications, we usually have a server that is exposed to the web and is hit by different clients. Traditionally, a server is either a physical machine or a VM instance. When we have increased traffic, we add another instance of the server and manage the load using a load balancer. But we might also have many servers hosting different applications in the same system. Then we need an external load balancer to route the requests to the different internal load balancers, which will finally send them to the correct instance, as illustrated below:

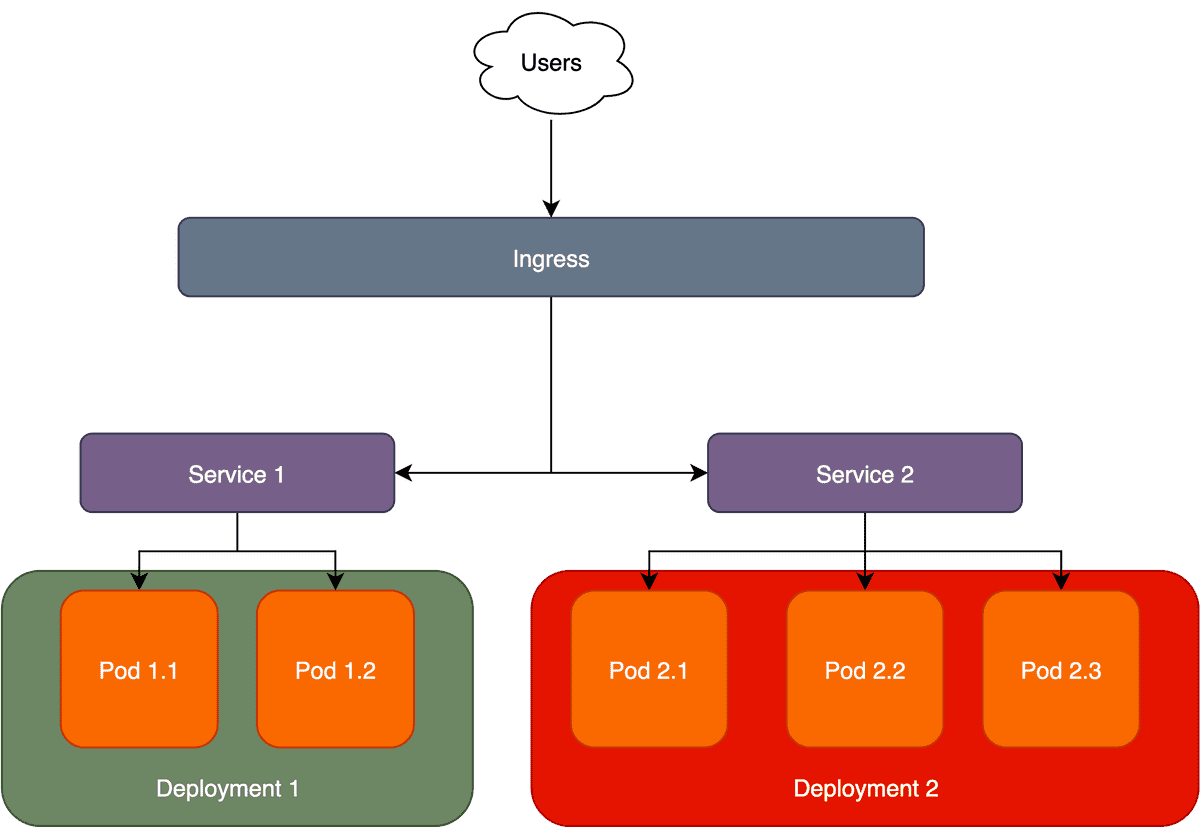

Kubernetes does the exact same thing but calls everything with different names. The internal load balancer is called a Service and the external load balancer is called Ingress. The different apps are the Deployments, while the application containers are called Pods.

A node is a physical or virtual machine that runs many pods (a pod wraps one or more containers), and a cluster is simply a collection of nodes. And to make things more confusing, a physical cluster can be split into several virtual clusters, which are called namespaces.

I know there are too many new terms, but things are actually quite simple if you keep in mind the mapping of the different definitions I just mentioned.

| Without Kubernetes | With Kubernetes |
|---|---|
| Containers | Pod |
| Internal Load Balancer | Service |
| External Load Balancer | Ingress |
| Application | Deployment |
| Physical Machine | Node |
| Cluster of Machines | Cluster |
| Virtual Cluster | Namespace |

Let's see how things work in practice to hopefully make more sense of it. To do that, we will deploy our containerized application in Google cloud using the Google Kubernetes Engine (GKE). For those who are new to the series, our application consists of a Tensorflow model that performs image segmentation. Don't forget that we already built a Docker image, so everything is in place to get started.

What is Google Kubernetes Engine (GKE): basics and installation

Google Kubernetes Engine (GKE) is a Google cloud service that provides an environment and APIs to manage Kubernetes applications deployed in Google's infrastructure. Conceptually, it is similar to what Compute Engine is for VMs. In fact, GKE runs on top of Compute Engine instances, but that's another story.

The first thing we need to take care of is how we interact with Google cloud and GKE. We have three options here:

- Use Google cloud's UI directly (Google cloud console)
- Use Google's integrated terminal, known as cloud shell
- Or handle everything from our local terminal

For educational purposes, I will go with the third option. To configure our local terminal to interact with Kubernetes and GKE, we need to install two tools: the Google cloud SDK (gcloud) and the Kubernetes CLI (kubectl).

Remember that in Kubernetes everything is an API call? Well, we can use those two tools to do pretty much everything.

We want to create a cluster? We can. Increase the number of pods? See the logs? You betcha.

To install gcloud, one can follow the exceptional official documentation. For kubectl, the official Kubernetes docs are quite good as well. Neither should take long, as each is only a couple of commands.

GKE is managed inside a Google cloud project, so we can either create a new one or use an existing one.

And now for the good stuff.

How to deploy a machine learning application in Google cloud with Google Kubernetes Engine?

First, we wish to create a brand new cluster.e can try this just by working:

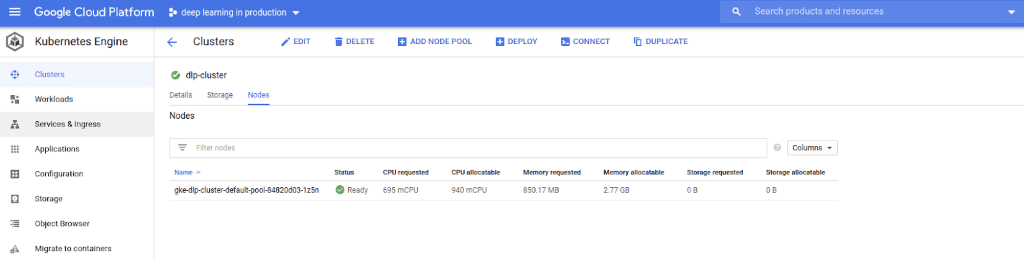

$ gcloud container clusters create CLUSTER_NAME --num-nodes=1

Sure, it is that straightforward. Confirm that the cluster is created accurately utilizing the Google Cloud Console. We should always see one thing like this:

Notice that I selected to make use of just one node on this cluster to maintain issues easy.

The next thing is to configure kubectl to work with GKE and the cluster we just created. If we initialized gcloud correctly, we should be good to go by running:

$ gcloud container clusters get-credentials CLUSTER_NAME

After that, every kubectl command will be automatically mapped to our GKE cluster. Our cluster and nodes are initialized and waiting for our application to be deployed.

Kubernetes defines Deployment as a specific term that represents an object that manages an application, typically by running pods. So to deploy any application, we need to create a Deployment. Each Deployment is associated with a Docker image.

In GKE's case, the Docker image needs to be placed in the Google Container Registry (Google's repository of Docker images) so that GKE can pull it and run the container.

To push an image to the Container Registry:

$ HOSTNAME=gcr.io

$ PROJECT_ID=deep-learning-production

$ IMAGE=dlp

$ TAG=0.1

$ SOURCE_IMAGE=deep-learning-in-production

$ docker tag ${SOURCE_IMAGE} ${HOSTNAME}/${PROJECT_ID}/${IMAGE}:${TAG}

$ docker push ${HOSTNAME}/${PROJECT_ID}/${IMAGE}:${TAG}

The above commands will simply tag the local image "deep-learning-in-production" with its registry path and upload it, layer by layer, to the remote registry. To make sure that it's been pushed, have a look at the uploaded images using:

$ gcloud container images list-tags [HOSTNAME]/[PROJECT_ID]/[IMAGE]
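The registry path used in the tag and push commands follows a fixed pattern, `HOSTNAME/PROJECT_ID/IMAGE:TAG`. As a small sketch (the helper name `image_reference` is made up for illustration), this is how the pieces fit together:

```python
def image_reference(hostname: str, project_id: str, image: str, tag: str) -> str:
    """Assemble a registry path of the form HOSTNAME/PROJECT_ID/IMAGE:TAG."""
    return f"{hostname}/{project_id}/{image}:{tag}"


# Values taken from the shell variables defined earlier in this section.
print(image_reference("gcr.io", "deep-learning-production", "dlp", "0.1"))
# gcr.io/deep-learning-production/dlp:0.1
```

The same string is what we later hand to Kubernetes as the image of our Deployment.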

Alternatively, we can let Cloud Build build the image from the current directory and push it to the registry in one step:

$ gcloud builds submit --tag gcr.io/deep-learning-in-production/app .

Creating a Deployment

Following that, we can now create a new Kubernetes Deployment. We can either do this with a simple kubectl command

$ kubectl create deployment deep-learning-production --image=gcr.io/dlp-project/deep-learning-in-production:1.0

or we can create a new configuration file (deployment.yaml) to be more precise with our options.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deep-learning-production
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: dlp
      template:
        metadata:
          labels:
            app: dlp
        spec:
          containers:
          - name: dlp
            # kubectl does not expand shell variables: put the full image path here
            image: gcr.io/$PROJECT_ID/$IMAGE
            ports:
            - containerPort: 8080
            env:
            - name: PORT
              value: "8080"

What did we define here? In a few words, the above file tells Kubernetes that it needs to create a Deployment object named "deep-learning-production" with three distinct pods (replicas). The pods will contain our previously built image and will expose port 8080 to receive traffic. For more details on how to structure the yaml file, consult the Kubernetes docs.
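Since the config is just structured data, we can also generate it programmatically. The following is a rough sketch, not the way the article's project does it: a hypothetical helper `deployment_manifest` builds the same Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The image path is an assumed example value.

```python
import json


def deployment_manifest(name, image, replicas=3, port=8080, app_label="dlp"):
    """Build a Deployment manifest as a plain dictionary (illustrative helper)."""
    labels = {"app": app_label}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels of the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": app_label,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Environment values must be strings.
                            "env": [{"name": "PORT", "value": str(port)}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest(
    "deep-learning-production",
    "gcr.io/deep-learning-production/dlp:0.1",  # assumed image path
)
print(json.dumps(manifest, indent=2))
```

Generating manifests like this can be handy when the image tag or replica count comes out of a training or CI pipeline.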

Isn't it great that we can do all of this using a simple config file? No ssh into the instance, no installing a bunch of libraries, no searching the UI for the right button. Just a small config file.

By applying the deployment.yaml config as shown below, the Deployment will automatically be created:

$ kubectl apply -f deployment.yaml

We can now monitor our deployment with kubectl:

$ kubectl get deployments

which will list all available deployments

NAME READY UP-TO-DATE AVAILABLE AGE

deep-learning-production 1/1 1 1 40s

Same for the pods

$ kubectl get pods

As we can see, all 3 pods are up and running

NAME READY STATUS RESTARTS AGE

deep-learning-production-57c495f96c-lblrk 1/1 Running 0 40s

deep-learning-production-57c495f96c-sdfui 1/1 Running 0 40s

deep-learning-production-57c495f96c-plgio 1/1 Running 0 40s

Creating a Service/load balancer

Given that we have many pods running, we should have a load balancer in front of them. Again, Kubernetes calls internal load balancers Services. Services provide a single point of access to a set of pods, and they should always be used because pods are ephemeral and can be restarted without any warning. To create a Service, a configuration file is once again all we need.

    apiVersion: v1
    kind: Service
    metadata:
      name: deep-learning-production-service
    spec:
      type: LoadBalancer
      selector:
        app: dlp
      ports:
      - port: 80
        targetPort: 8080

As you can see, we define a new Service of type LoadBalancer, which targets the pods carrying the label "app: dlp" (the selector field has to match the labels we set in the pod template of the deployment.yaml file). It also exposes port 80 and maps it to the 8080 target port of our pods (which we defined before in the deployment.yaml file).
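How a Service finds its pods is worth a tiny illustration, because a selector that does not match the pod labels silently routes to nothing. Below is a minimal sketch of equality-based label matching; the function `selects` and the second pod are hypothetical, while the `app: dlp` label is the one set in the pod template of our Deployment.

```python
def selects(selector: dict, pod_labels: dict) -> bool:
    """A pod is selected when it carries every key/value pair of the selector."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


pods = {
    "deep-learning-production-57c495f96c-lblrk": {"app": "dlp"},
    "some-other-pod": {"app": "other"},  # hypothetical pod of another app
}

matching = [name for name, labels in pods.items() if selects({"app": "dlp"}, labels)]
print(matching)  # ['deep-learning-production-57c495f96c-lblrk']

# A selector that uses the Deployment's name instead of its pod label matches nothing.
mismatched = [
    name for name, labels in pods.items()
    if selects({"app": "deep-learning-production"}, labels)
]
print(mismatched)  # []
```

If a Service ever shows no endpoints, comparing its selector against the pod labels in this way is usually the first thing to check.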

We apply the config

$ kubectl apply -f service.yml

and we get the standing of our service:

$ kubectl get services

As you can observe below, the "deep-learning-production" service has been created and is exposed on an external IP through the predefined port. Note that the "kubernetes" service is the default service run by Kubernetes itself, and it's something we won't deal with.

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

deep-learning-production LoadBalancer 10.39.243.226 34.77.224.151 80:30159/TCP 75s

kubernetes ClusterIP 10.39.240.1 <none> 443/TCP 35m

If we hit this API with a client, we should get back a response from one of the pods containing the Tensorflow model. And in case you didn't notice it, we just deployed our scalable application into the cloud.
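For completeness, here is roughly what such a client could look like in Python using only the standard library. Everything about the request shape is an assumption made for illustration: the `/infer` path, the JSON payload with an `image` field, and the helper name `build_inference_request` are not defined anywhere in this article, so adapt them to whatever the deployed application actually expects.

```python
import json
import urllib.request


def build_inference_request(external_ip: str, pixels: list) -> urllib.request.Request:
    """Prepare a POST request for the Service's external IP (hypothetical API shape)."""
    body = json.dumps({"image": pixels}).encode("utf-8")  # assumed payload format
    return urllib.request.Request(
        url=f"http://{external_ip}:80/infer",  # assumed endpoint path
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# The IP is the EXTERNAL-IP column of the service listing above.
request = build_inference_request("34.77.224.151", [[0, 0], [255, 255]])
print(request.get_method(), request.full_url)

# To actually send it (requires the cluster to be up):
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(response.read())
```

The client only ever talks to the Service's address; which of the three pods answers is decided by the load balancer.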

Putting it all together

To sum up, all we have to do to deploy an app is:

- Create a cluster of machines to run it
- Set up a Kubernetes Deployment
- Set up a Service

The above 3 steps will handle the creation of pods and all the networking between the different components and the external world.

Now you may wonder: were all of the above necessary? Couldn't we create a VM instance and push the container there? Wouldn't it be much simpler?

Maybe it would, to be honest.

But the reason we chose Kubernetes is what comes after the deployment of our app. Let's have a look around and see what goodies Kubernetes gives us and how it can help us grow the application as more and more users join. And most importantly: why is it especially useful for machine learning applications?

Why use Kubernetes (again)?

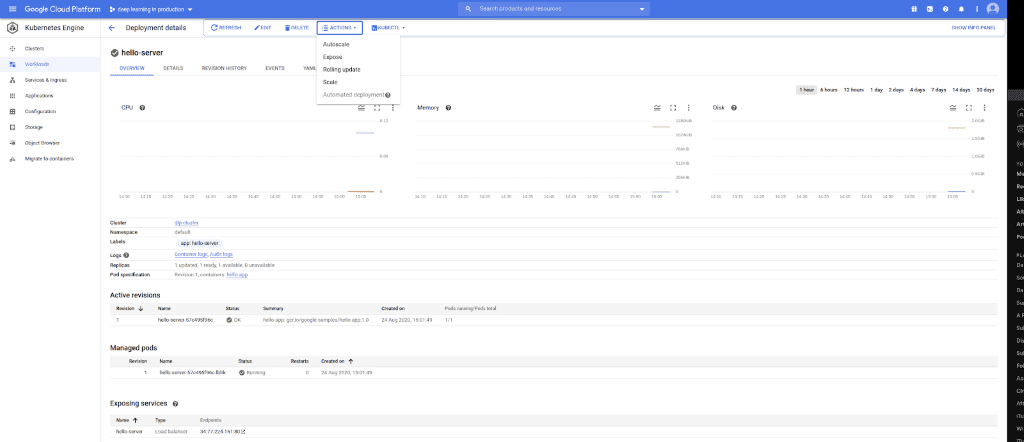

In this section, I will use a more visual approach. We will explore Google Kubernetes Engine's UI in order to discover some of the functionalities it provides. Here is what the inside of GKE looks like.

You may have to squint a little, but you can see that it gives us an easy way to manage everything. We can directly see all of our services, our pods, the number of replicas, as well as some nice visualizations of CPU, memory, and disk utilization. That way we can be aware of our app's performance at all times. But that's not all. Let's zoom in a little.

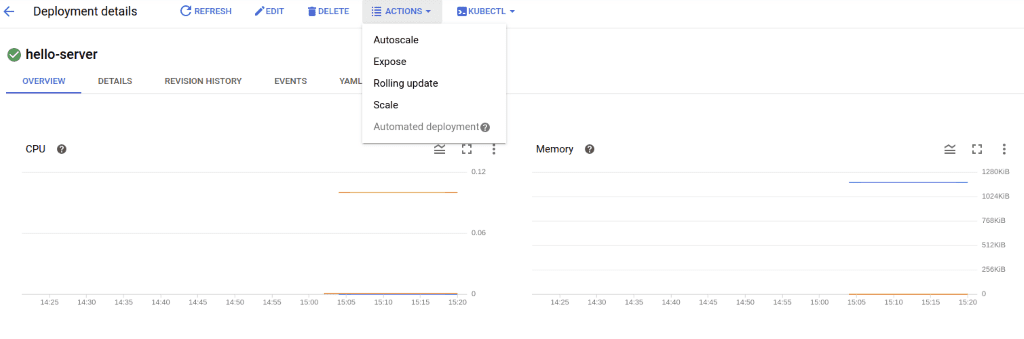

In the dropdown menu, there are some actions that are available regarding our Kubernetes setup.

Scaling the application

Starting from the bottom, we can manually scale the number of pods inside the deployment. For example, if we observe a big spike in the number of requests, we can increase the replicas of pods. As a result, the application's response time will gradually start to decline again. Of course, we can do so from the terminal as well. So we almost effortlessly solve a major problem right here: scalability. As we grow, we can add more and more replicas hidden behind the service, which will handle all the load balancing and routing.

$ kubectl scale deployment DEPLOYMENT_NAME --replicas 4

You may also have noticed that there is an autoscale button. That's right. Kubernetes and GKE let us define autoscaling behavior a priori. We can instruct GKE to track a few metrics, such as response time or request rate, and then increase the number of pods when they pass a predefined threshold. Kubernetes supports two kinds of autoscaling:

- Horizontal Pod Autoscaling (HPA), and
- Vertical Pod Autoscaling (VPA).

HPA creates new replicas to scale the application. In the example below, HPA targets CPU utilization and autoscales when it becomes larger than 50%. Note that we need to define a minimum and a maximum number of replicas to keep things under control.

$ kubectl autoscale deployment DEPLOYMENT_NAME --cpu-percent=50 --min=1 --max=10

Alternatively, we can describe the autoscaler in a configuration file. To track our HPA:

$ kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

dlp Deployment/dlp 0%/50% 1 10 3 61s
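To get a feeling for how HPA picks a replica count, the Kubernetes documentation describes the core rule as desired = ceil(current replicas × current metric / target metric), bounded by the minimum and maximum we configured. The Python sketch below implements only that rule; the real controller additionally applies a tolerance band, readiness checks, and stabilization windows, which are left out here. The function name is made up for illustration.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas, max_replicas):
    """Simplified HPA rule: scale proportionally to the metric, then clamp to the bounds."""
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, raw))


# Same settings as the command above: target 50% CPU, between 1 and 10 replicas.
print(desired_replicas(3, 90, 50, 1, 10))   # 6  (ceil of 5.4)
print(desired_replicas(3, 0, 50, 1, 10))    # 1  (clamped to the minimum)
print(desired_replicas(8, 100, 50, 1, 10))  # 10 (clamped to the maximum)
```

This also shows why the bounds matter: without the maximum, a metric spike could request an arbitrary number of pods.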

In a similar manner, we can configure VPA.

In my experience though, HPA should be preferred most of the time, because scaling out is usually better than scaling up.

With HPA, we even managed to eliminate the need for a human to be present at all times, so we can sleep better at night.

Updating the application (with a new Deep Learning model)

When we want to update our application with a new Docker image (perhaps to use a new model, or a retrained one), we can perform a rolling update. A rolling update means that Kubernetes will gradually replace all the running pods, 1 by 1, with the new version. At the same time, it ensures that there will be no drop in performance. In the example below, we replace the Docker image with a newer version.

$ kubectl set image deployment dlp dlp_image=dlp_image:2.0
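The idea behind a rolling update can be sketched in a few lines of Python. This is a deliberately simplified toy, assuming pods are swapped in place and at most `max_unavailable` pods change per step; the actual Deployment controller works with surge and unavailability budgets and waits for new pods to become ready. The function name and version strings are illustrative.

```python
def rolling_update(pods: list, new_version: str, max_unavailable: int = 1):
    """Yield the pod versions after each step, replacing a few pods at a time."""
    pods = list(pods)  # work on a copy, leave the caller's list untouched
    for start in range(0, len(pods), max_unavailable):
        for index in range(start, min(start + max_unavailable, len(pods))):
            pods[index] = new_version
        yield list(pods)


steps = list(rolling_update(["1.0", "1.0", "1.0"], "2.0"))
for step in steps:
    print(step)
# ['2.0', '1.0', '1.0']
# ['2.0', '2.0', '1.0']
# ['2.0', '2.0', '2.0']
```

At every step most pods are still serving requests, which is why users do not notice the switch to the new model.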

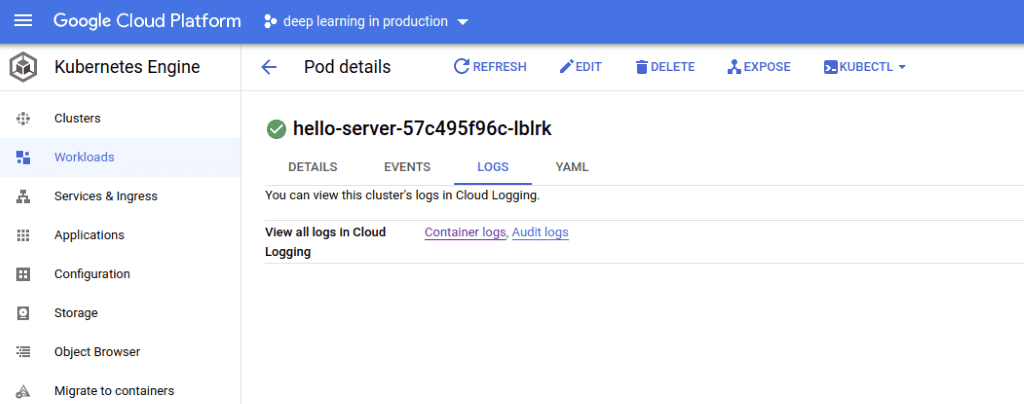

Monitoring the application

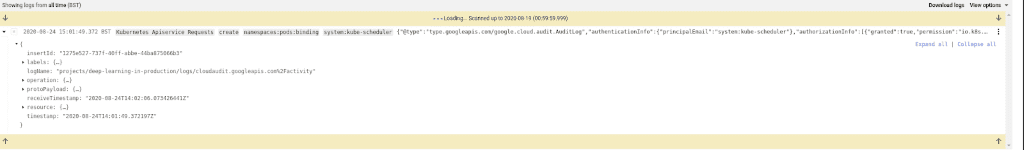

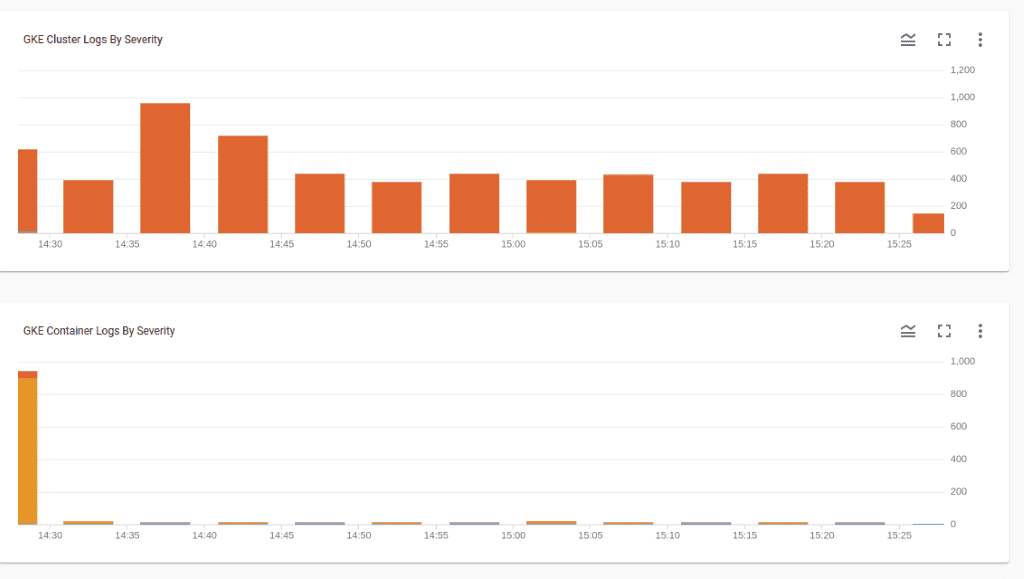

In order to optimize our infrastructure and keep track of the model's accuracy, we also need to have some form of monitoring and logging. GKE comes automatically configured with Google Cloud's monitoring system, so we can easily access all the logs.

If we click on the container logs inside a specific pod, we can navigate to Google Cloud's "Cloud Logging" service and debug our app for errors.

Moreover, we have access to some pretty cool visualizations and charts to help us monitor things better.

Running a (training) job

Running jobs is another integral part of modern applications. In the case of machine learning, the most typical example is a training job, where we train (or retrain) our model in the cloud on a set of data. Again, with Kubernetes, it becomes just another yaml file.

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: train-job
    spec:
      template:
        metadata:
          name: train-job
        spec:
          containers:
          - name: train-dlp
            image: training_image
            command: ["python"]
            args: ["train.py", "-model", "unet", "-data", "gs://deep-learning-in-prodiction-training-data/oxford_iiit_pet/"]
          restartPolicy: Never
      # number of retries before the Job is marked as failed
      backoffLimit: 4

Once we apply the config file, Kubernetes will spin up the necessary pods and run the training job. We can even configure things such as the parallelism of multiple jobs and the number of retries after failures. More details on how to run jobs can be found in the docs.
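The `command` and `args` fields of the Job are simply the command line that runs inside the container. As a sketch of the receiving side, a `train.py` entry point could parse them as shown below. The actual script is not part of this article, so the defaults and help texts are assumptions; only the two flag names and their values come from the manifest.

```python
import argparse


def parse_args(argv=None):
    """Parse the flags that the Job manifest passes to train.py (illustrative sketch)."""
    parser = argparse.ArgumentParser(description="Train a segmentation model.")
    parser.add_argument("-model", default="unet", help="name of the architecture to train")
    parser.add_argument("-data", required=True, help="location of the training data")
    return parser.parse_args(argv)


# Equivalent to: python train.py -model unet -data gs://.../oxford_iiit_pet/
args = parse_args([
    "-model", "unet",
    "-data", "gs://deep-learning-in-prodiction-training-data/oxford_iiit_pet/",
])
print(args.model, args.data)
```

Keeping all run-specific values in flags means a retraining run with another dataset only needs a different `args` list in the yaml file, not a new image.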

Note: In general, the GKE docs are very well written and you can find almost anything there!

Using Kubernetes with GPUs

Deep learning without GPUs is like ice cream with low-fat milk (decent but not awesome). To use Kubernetes with GPUs, all we have to do is:

- Create nodes equipped with NVIDIA GPUs
- Install the necessary drivers
- Install the CUDA library, and that's it

Everything else will stay exactly the same. Adding GPUs to an existing cluster is a bit trickier than creating a new one, but again the official documentation has everything we need.

Model A/B testing

Another common deep learning specific use case is model A/B testing, in the form of running multiple versions of the same model simultaneously. By doing that, we can keep track of which one performs better and keep only the best models in production. There are multiple ways to accomplish that using Kubernetes.

Here is what I would do:

I would create a separate Service and Deployment to host the second version of the model, and I would hide both services behind an external load balancer (or an Ingress, as Kubernetes calls it).

The first part is pretty straightforward, and it's exactly what we did before. The second part is slightly more difficult. I won't go into many details about Ingress because it's not an easy concept to grasp. But let's discuss a few things.

In essence, an Ingress object defines a set of rules for routing HTTP traffic inside a cluster. An Ingress object is associated with one or more Service objects, each of which is associated with a set of Pods. Under the hood, it is effectively a load balancer, with all the standard load balancing features (single point of entry, authentication, and well... load balancing).

To create an Ingress object, we should build a new config file with a structure like the one that follows:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: dlp-ingress
    spec:
      rules:
      - http:
          paths:
          - path: /model1
            pathType: Prefix
            backend:
              service:
                name: model1
                port:
                  number: 8080
          - path: /model2
            pathType: Prefix
            backend:
              service:
                name: model2
                port:
                  number: 8081

The Ingress load balancer will route the requests to the different services based on the path of the request. All requests coming to the /model1 endpoint will be served by the first service and all requests to /model2 by the second service (FYI, this is called Fanout in engineering terms).
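Path-based fanout is easy to picture as a lookup from URL prefix to backend. The toy router below mirrors the two rules of the Ingress config; it is only meant to illustrate the matching, since a real Ingress controller does much more (TLS, host rules, health checks). `ROUTES` and `route` are names invented for this sketch.

```python
# (path prefix, (service name, service port)) pairs, mirroring the Ingress rules.
ROUTES = [
    ("/model1", ("model1", 8080)),
    ("/model2", ("model2", 8081)),
]


def route(path: str):
    """Return the backend whose prefix matches the request path, or None."""
    for prefix, backend in ROUTES:
        # Match whole path segments, so "/model10" does not hit "/model1".
        if path == prefix or path.startswith(prefix + "/"):
            return backend
    return None


print(route("/model1/predict"))  # ('model1', 8080)
print(route("/model2"))          # ('model2', 8081)
print(route("/other"))           # None
```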

Now let's have a look at the client side. There, I would send each request randomly to a different path. This can be achieved by a simple random number generator. We can of course build more complex logic according to our needs.
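A minimal sketch of that client-side split could look as follows. The helper `pick_path` and the 50/50 default are assumptions for illustration; the seed is fixed only to make the example reproducible.

```python
import random
from collections import Counter


def pick_path(rng: random.Random, share_model2: float = 0.5) -> str:
    """Choose the endpoint for one request; share_model2 is the fraction sent to /model2."""
    return "/model2" if rng.random() < share_model2 else "/model1"


rng = random.Random(0)  # fixed seed, only for reproducibility of the example
counts = Counter(pick_path(rng) for _ in range(10_000))
print(counts)  # roughly an even split between the two paths
```

Changing `share_model2` to something like 0.1 turns the same code into a cautious rollout, where only a small share of the traffic reaches the new model.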

Wrapping up

Okay, I think I will end this now because we covered a lot of stuff. But be certain that I could keep going on and on, finding new machine learning use cases and exploring whether Kubernetes would be able to solve them. In the majority of cases, I'm positive that we could find a decent solution.

This whole article may sound like a Kubernetes advertisement, but my true goal is to motivate you to try it out and to give you a rough overview of its features and capabilities. Note that some of them are tightly coupled with Google cloud, but in general, they apply to any cloud provider.

A final conclusion is that deploying an application (even if it's a Deep Learning model) is not something fundamentally hard. The hardest part comes afterwards, when we need to maintain it, scale it, and add new features to it. Fortunately, nowadays there are plenty of sophisticated tools to help us along the way and make our lives easier. As a mental exercise, try to imagine how you would have done all of this ten years ago.

In case you haven't realized by now, this entire article series is an effort to look outside the machine learning bubble and all the cool algorithms that come with it. It is vital that we understand the external systems and infrastructure required to run them and make them available to the public.

If you have found the articles useful so far, don't forget to subscribe to our newsletter because we have a lot more to cover. AI Summer will continue to provide original educational content around Machine Learning.

And we have a few more surprises coming up for all of you who want to learn AI, so stay tuned...
