Here is my story: I recently gave a university tutoring class to MSc students on deep learning. Specifically, it was about training their first multi-layer perceptron (MLP) in PyTorch. I was really surprised by their questions as beginners in the field. At the same time, I resonated with their struggles and reflected back on being a beginner myself. That's what this blog post is all about.

If you are used to NumPy or TensorFlow, or if you want to deepen your understanding of deep learning with a hands-on coding tutorial, hop in.

We will train our very first model, called a Multi-Layer Perceptron (MLP), in PyTorch while explaining the design choices. Code is available on GitHub.

Shall we start?

Imports

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim
    import torchvision
    import torchvision.transforms as transforms
    import numpy as np
    import matplotlib.pyplot as plt
    from tqdm import tqdm  # progress bar used in the training loop

The torch.nn package contains all the required layers to train our neural network. The layers need to be instantiated first and then called using their instances. During initialization we specify all our trainable components. The weights typically live in a class that inherits the torch.nn.Module class. Alternatives include the torch.nn.Sequential or the torch.nn.ModuleList class, which also inherit the torch.nn.Module class. Layer classes typically start with a capital letter even if they don't have any trainable parameters, so feel free to declare them in __init__ just like the trainable ones, for instance an nn.ReLU() next to an nn.Linear().
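As a minimal sketch of the instantiate-then-call pattern (the class name TinyBlock and the layer sizes are made up for illustration and are not part of the tutorial code):

```python
import torch
import torch.nn as nn

class TinyBlock(nn.Module):  # hypothetical name, for illustration only
    def __init__(self):
        super().__init__()
        # Layers are instantiated once, whether they are trainable or not.
        self.linear = nn.Linear(8, 4)  # has a weight matrix and a bias
        self.relu = nn.ReLU()          # no trainable parameters

    def forward(self, x):
        # The instances are then called like functions.
        return self.relu(self.linear(x))

block = TinyBlock()
out = block(torch.randn(2, 8))
num_params = sum(p.numel() for p in block.parameters())
print(out.shape, num_params)
```

Only nn.Linear contributes to the parameter count here; nn.ReLU is registered as a submodule but owns no weights.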

The torch.nn.functional module contains all the functions that can be called directly without prior initialization. Most torch.nn modules have a corresponding function in the functional module, for example nn.ReLU and F.relu.

A very useful function that I often use is F.normalize.
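To make the module/functional correspondence concrete, here is a small sketch. The input values are arbitrary, and p=2 with dim=1 is just one common way to call F.normalize (row-wise L2 normalization):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.tensor([[3.0, -4.0], [0.0, 2.0]])

# Module form (instantiate, then call) vs. functional form (call directly).
relu_module_out = nn.ReLU()(x)
relu_functional_out = F.relu(x)

# F.normalize rescales each row to unit L2 norm (p=2 along dim=1).
unit_rows = F.normalize(x, p=2, dim=1)
print(unit_rows)
print(unit_rows.norm(dim=1))
```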

Device: GPU

Students despise using the GPU. They don't see any reason to, since they are only using tiny toy datasets. I advise them to think in terms of scaling up the models and the data, but I can see it's not that obvious at first. My solution was to assign them to train a resnet18 on a 100K image dataset in Google Colab.

    device = 'cuda:0' if torch.cuda.is_available() else 'cpu'
    print('device:', device)

There is one and only one reason we use the GPU: speed. The same model can be trained much, much faster on a high-end GPU.

However, we want to have the option to switch to CPU execution of our notebook/script, by declaring a "device" variable at the top.

Why? Well, for debugging!

It's quite common to have GPU-related errors that are actually simple logical errors, but because the code is executed on the GPU, PyTorch is not able to trace back the error properly. Examples may include slicing errors, like assigning a tensor of the wrong shape to a slice of another tensor.

The solution is to run the code on the CPU instead. You will probably get a more accurate error message.

GPU message example:

    RuntimeError: CUDA error: device-side assert triggered
    CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.
    For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

CPU message example:

    Index 256 is out of bounds
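A tiny sketch of why the CPU is friendlier for debugging: an out-of-range index on a CPU tensor raises an ordinary Python IndexError at the offending line. The helper name lookup_on_cpu is hypothetical, and the exact wording of the message may differ between PyTorch versions.

```python
import torch

def lookup_on_cpu(index):
    # The tensor lives on the CPU, so the error is raised synchronously
    # at the line that caused it.
    table = torch.arange(10.0)
    try:
        return table[index].item()
    except IndexError as err:
        return f"IndexError: {err}"

print(lookup_on_cpu(3))
print(lookup_on_cpu(256))
```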

Image transforms

We will use an image dataset called CIFAR10, so we will need to specify how the data will be fed into the network.

    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize(mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)),
    ])

Usually images are read from memory as Pillow images or as NumPy arrays. We thus need to convert them to tensors. I won't go into details about what PyTorch tensors are here. The important thing to know is that we can track the gradients of a tensor and move it to the GPU. NumPy arrays and Pillow images do not provide GPU support.

Input normalization brings the values around zero. One value for the mean and std is provided for each channel. If you provide only one value for the mean or std, PyTorch is smart enough to copy the value for all channels (transforms.Normalize(mean=0.5, std=0.5)).

After ToTensor the images are in the range [0, 1]. After subtracting 0.5 and dividing by 0.5 the new range will be [-1, 1].
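The range arithmetic can be checked with plain tensors. This sketch re-implements the (x - mean) / std formula by hand rather than calling torchvision, and the sample intensities are arbitrary:

```python
import torch

def normalize(img, mean=0.5, std=0.5):
    # Same arithmetic as transforms.Normalize: (x - mean) / std
    return (img - mean) / std

img = torch.tensor([0.0, 0.25, 0.5, 1.0])  # intensities after ToTensor
out = normalize(img)
print(out.min().item(), out.max().item())
```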

Assuming that the weights are also initialized around zero, that's quite beneficial. In practice, it makes the training much easier to optimize. In deep learning we like to have our values around zero because the gradients are much more stable (predictable) in this range.

Why we need input normalization

If the images were in the [0, 255] range, that would disrupt the training much more severely. Why? Assuming that the weights are initialized around 0, the output of the layer would be mostly dominated by large values, hence the large image intensities. That means that the weights will only be influenced by the large input values.

To convince you, I wrote a small script for that:

    x = torch.tensor([1., 1., 255.])
    w = torch.tensor([0.1, 0.1, 0.1], requires_grad=True)
    target = torch.tensor(10.0)
    for i in range(100):
        with torch.no_grad():
            w.grad = torch.zeros(3)  # reset the stored gradient
        l = target - (x*w).sum()     # toy objective: target minus prediction
        l.backward()
        w = w - 0.01 * w.grad        # gradient step with learning rate 0.01
    print(f"Final weights {w.detach().numpy()}")

Which outputs:

    Final weights [ 0.11 0.11 2.65]

In essence, only the weight that corresponds to the large input value changes noticeably.
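The same effect can be seen directly in the gradients. The sketch below is my own variation, not the author's script: it swaps in a squared-error loss and a hypothetical helper weight_gradients, and it reads the gradient from a leaf tensor (reassigning w inside a loop, as in w = w - lr * w.grad, creates a non-leaf tensor).

```python
import torch

def weight_gradients(x, w, target):
    # Squared error of a single linear unit: (target - x.w)^2
    w = w.detach().clone().requires_grad_(True)
    loss = (target - (x * w).sum()) ** 2
    loss.backward()
    return w.grad

x = torch.tensor([1.0, 1.0, 255.0])
w = torch.tensor([0.1, 0.1, 0.1])
grad = weight_gradients(x, w, torch.tensor(10.0))
ratio = grad / grad[0]  # gradient size relative to the first weight
print(ratio)
```

For a linear unit the gradient with respect to each weight is proportional to its input, so the weight attached to the intensity 255 receives a gradient 255 times larger than the others.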

The CIFAR10 image dataset class

    trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform)
    valset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)

PyTorch provides a couple of toy datasets for experimentation. Specifically, CIFAR10 has 50K training RGB images of size 32×32 and 10K test samples. By specifying the boolean train argument we get the train and test split respectively. Data will be downloaded to the root path. The specified transforms will be applied while getting the data. For now we are just rescaling the image intensities to [-1, 1].

The three data splits in machine learning

Typically we have 3 data splits: the train, validation and test set. The main difference between the validation and test set is that the test set will be seen only once. The validation metrics can be used to reliably track the performance during training, even though the model's parameters are not directly optimized on the validation data. However, we use the validation data to choose hyperparameters such as learning rate, batch size and weight decay (aka L2 regularization).
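The tutorial code uses the CIFAR10 test split as its validation set. If a separate validation split is wanted, one possible approach (an assumption on my side, not something the tutorial does) is to carve it out of the training data with torch.utils.data.random_split. A random stand-in dataset replaces CIFAR10 here so the sketch runs without a download:

```python
import torch
from torch.utils.data import TensorDataset, random_split

# Stand-in dataset: 1000 fake samples with 10 features and one class label each.
full_train = TensorDataset(torch.randn(1000, 10), torch.randint(0, 10, (1000,)))

# Hold out 10% for validation; a fixed seed keeps the split reproducible.
generator = torch.Generator().manual_seed(0)
train_subset, val_subset = random_split(full_train, [900, 100], generator=generator)
print(len(train_subset), len(val_subset))
```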

How to access this data?

Visualize images and understand label representations

    def imshow(img, i, mean, std):
        # Invert the normalization so the colors look natural again
        unnormalize = transforms.Normalize((-mean / std), (1.0 / std))
        plt.subplot(1, 10, i+1)
        npimg = unnormalize(img).numpy()
        # Channels-first (C, H, W) to channels-last (H, W, C) for matplotlib
        plt.imshow(np.transpose(npimg, (1, 2, 0)))

    img, label = trainset[0]
    print(f"Images have a shape of {img.shape}")
    print(f"There are {len(trainset.classes)} with labels: {trainset.classes}")
    plt.figure(figsize = (40,20))
    for i in range(10):
        imshow(trainset[i][0], i, mean=0.5, std=0.5)
    print(f"Label {label} which corresponds to {trainset.classes[label]} will be converted to one-hot encoding by F.one_hot(torch.tensor(label),10)) as: ", F.one_hot(torch.tensor(label),10))

Here is the output:

    Images have a shape of torch.Size([3, 32, 32])
    There are 10 with labels: ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

Example images from the CIFAR10 dataset

Each image label will be assigned one class id:

id=0 → airplane

id=1 → automobile

id=2 → bird

...

The class indices will be converted to one-hot encodings. You can do this manually once, to be 100% sure what it means, by calling F.one_hot(torch.tensor(label), 10), which prints:

    Label 6 which corresponds to frog will be converted to one-hot encoding by F.one_hot(torch.tensor(label),10)) as: tensor([0, 0, 0, 0, 0, 0, 1, 0, 0, 0])
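As a standalone sketch of the one-hot conversion (no dataset needed; the label 6 is taken from the frog example above):

```python
import torch
import torch.nn.functional as F

label = 6  # class id of "frog" in CIFAR10
one_hot = F.one_hot(torch.tensor(label), num_classes=10)
recovered = one_hot.argmax().item()  # argmax maps the encoding back to the class id
print(one_hot, recovered)
```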

The DataLoader class

    train_loader = torch.utils.data.DataLoader(trainset, batch_size=256, shuffle=True)
    val_loader = torch.utils.data.DataLoader(valset, batch_size=256, shuffle=False)

The standard practice is to use only a batch of images instead of the whole dataset at each step. That's why the DataLoader class stacks together a number of images with their corresponding labels in a single batch at each step.

It is crucial to know that the training data must be randomly shuffled.

This way, the data indices are randomly shuffled at each epoch. Thus, each batch of images is representative of the data distribution of the whole dataset. Machine learning heavily relies on the i.i.d. assumption, which means independent and identically distributed sampled data. This implies that the validation and test set should be sampled from the same distribution as the train set.

Let's summarize the dataset/dataloader part:

    print("List of label names are:", trainset.classes)
    print("Total training images:", len(trainset))
    img, label = trainset[0]
    print(f"Example image with shape {img.shape}, label {label}, which is a {trainset.classes[label]} ")
    print(f'The dataloader contains {len(train_loader)} batches of batch size {train_loader.batch_size} and {len(train_loader.dataset)} images')
    imgs_batch, labels_batch = next(iter(train_loader))
    print(f"A batch of images has shape {imgs_batch.shape}, labels {labels_batch.shape}")

The output of the above code is:

    List of label names are: ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']
    Total training images: 50000
    Example image with shape torch.Size([3, 32, 32]), label 6, which is a frog
    The dataloader contains 196 batches of batch size 256 and 50000 images
    A batch of images has shape torch.Size([256, 3, 32, 32]), labels torch.Size([256])
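The 196 batches come from rounding up 50000 / 256; the last batch is simply smaller. The sketch below reproduces that bookkeeping on a random stand-in dataset of 1000 images (my choice, to avoid the CIFAR10 download):

```python
import math
import torch
from torch.utils.data import DataLoader, TensorDataset

dataset = TensorDataset(torch.randn(1000, 3, 32, 32), torch.randint(0, 10, (1000,)))
loader = DataLoader(dataset, batch_size=256, shuffle=True)

num_batches = len(loader)          # number of batches per epoch
num_samples = len(loader.dataset)  # number of individual samples
batch_sizes = [labels.shape[0] for _, labels in loader]
print(num_batches, num_samples, batch_sizes)
```

With the default drop_last=False the final, incomplete batch is kept, which is why the batch count is a ceiling rather than a floor.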

Building a variable-size MLP

    class MLP(nn.Module):
        def __init__(self, in_channels, num_classes, hidden_sizes=[64]):
            super().__init__()
            assert len(hidden_sizes) >= 1, "specify at least one hidden layer"
            layers = nn.ModuleList()
            layer_sizes = [in_channels] + hidden_sizes
            # One Linear + ReLU pair per hidden layer
            for dim_in, dim_out in zip(layer_sizes[:-1], layer_sizes[1:]):
                layers.append(nn.Linear(dim_in, dim_out))
                layers.append(nn.ReLU())
            self.layers = nn.Sequential(*layers)
            self.out_layer = nn.Linear(hidden_sizes[-1], num_classes)

        def forward(self, x):
            # Flatten each image to a vector: (batch, C, H, W) -> (batch, C*H*W)
            out = x.view(x.shape[0], -1)
            out = self.layers(out)
            out = self.out_layer(out)
            return out

Since we inherit the torch.nn.Module class, we need to define the __init__ and forward functions. __init__ has all the layers appended to the nn.ModuleList(). A ModuleList is just a list that is aware that all of its elements are modules of the torch.nn package. Then we pass all the elements of the list to torch.nn.Sequential. The asterisk (*) unpacks the list, so that each layer is passed as a separate argument, like:

    torch.nn.Sequential( nn.Linear(1,2), nn.ReLU(), nn.Linear(2,5), ... )

When there are no skip connections within a block of layers and there is only one input and one output, we can just pass everything to the torch.nn.Sequential class. As a result, we will not have to repeatedly specify that the output of the previous layer is the input to the next one.

During forward we will just call it once:

    y = self.layers(x)

That makes the code much more compact and easy to read. Even if the model includes other forward paths formed by skip connections, the sequential part can be nicely packed like this.
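A quick sanity check of the idea is to build the stack, feed a fake batch and count the parameters. This is a compact sketch: build_mlp is a hypothetical helper that mirrors the MLP class above but uses nn.Flatten instead of x.view, and the batch is random data.

```python
import torch
import torch.nn as nn

def build_mlp(in_features, hidden_sizes, num_classes):
    sizes = [in_features] + list(hidden_sizes)
    layers = []
    for dim_in, dim_out in zip(sizes[:-1], sizes[1:]):
        layers += [nn.Linear(dim_in, dim_out), nn.ReLU()]
    layers.append(nn.Linear(sizes[-1], num_classes))
    # The asterisk unpacks the list into separate arguments.
    return nn.Sequential(nn.Flatten(), *layers)

model = build_mlp(3 * 32 * 32, [128], 10)
logits = model(torch.randn(4, 3, 32, 32))  # fake batch of 4 CIFAR-sized images
num_params = sum(p.numel() for p in model.parameters())
print(logits.shape, num_params)
```

With one hidden layer of 128 units the count is 3072·128 + 128 weights and biases for the hidden layer plus 128·10 + 10 for the output layer.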

Writing the validation loop

    def validate(model, val_loader, device):
        model.eval()
        criterion = nn.CrossEntropyLoss()
        correct = 0
        loss_step = []
        with torch.no_grad():
            for inp_data, labels in val_loader:
                labels = labels.view(labels.shape[0]).to(device)
                inp_data = inp_data.to(device)
                outputs = model(inp_data)
                val_loss = criterion(outputs, labels)
                predicted = torch.argmax(outputs, dim=1)
                correct += (predicted == labels).sum()
                loss_step.append(val_loss.item())
        # Accuracy over all samples, loss averaged over batches
        val_acc = (100 * correct / len(val_loader.dataset)).cpu().numpy()
        val_loss_epoch = torch.tensor(loss_step).mean().numpy()
        return val_acc, val_loss_epoch

Assuming we have a classification task, our loss will be categorical cross entropy. If you want to dive into why we use this loss function, take a look at maximum likelihood estimation.

During validation/test time, we need to make sure of two things. First, no gradients should be tracked, since we are not updating the parameters at this stage. Second, the model must behave as it would at test time. Dropout is a great example: during training each activation is zeroed with probability p, while at test time it behaves like an identity function (y = x).

- with torch.no_grad(): can be used to make sure we are not tracking gradients.
- model.eval() automatically changes the behavior of our layers to the test behavior. We need to call model.train() to undo its effect.
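The difference between the two modes is easy to observe on a single dropout layer. In this sketch p=0.5 and the all-ones input are arbitrary choices for illustration:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

drop.train()
train_out = drop(x)  # entries are zeroed at random, survivors are scaled by 1 / (1 - p)

drop.eval()
with torch.no_grad():
    eval_out = drop(x)  # identity in eval mode

print(train_out.unique(), torch.equal(eval_out, x))
```

The rescaling of the surviving activations during training keeps the expected value of the output equal to the input, which is what allows dropout to become a plain identity at test time.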

Next we need to move the data to the GPU. We keep using the variable device to be able to switch between GPU and CPU execution.

outputs = model(inp_data) calls the forward function and computes the unnormalized output prediction. People usually refer to the unnormalized predictions of the model as logits. Make sure you don't get lost in the jargon jungle.

The logits are normalized with softmax and the loss is computed. During the same call (criterion(outputs, labels)) the target class indices are treated as one-hot encodings, so we never have to build the one-hot vectors ourselves.
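To see what that single call computes, the sketch below writes the same quantity out by hand (log-softmax, explicit one-hot targets, mean over the batch) and compares it to nn.CrossEntropyLoss. The logits and labels are random placeholders:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)          # unnormalized predictions for 4 samples
labels = torch.tensor([6, 0, 3, 9])  # class indices, not one-hot vectors

loss_builtin = nn.CrossEntropyLoss()(logits, labels)

# The same loss by hand: log-softmax, one-hot targets, mean over the batch.
log_probs = F.log_softmax(logits, dim=1)
one_hot = F.one_hot(labels, num_classes=10).float()
loss_manual = -(one_hot * log_probs).sum(dim=1).mean()
print(loss_builtin.item(), loss_manual.item())
```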

Here is a thing that many students get confused about: how to compute the accuracy of the model. We have only seen how to compute the cross entropy loss. Well, the answer is rather simple: take the argmax of the logits. This gives us the prediction. Then, we count how many of the predictions are equal to the targets.

The model will learn to assign higher probabilities to the target class. But in order to compute the accuracy we need to see how many of the maximum probabilities belong to the correct class. For that one can use predicted = torch.max(outputs, dim=1)[1] or predicted = torch.argmax(outputs, dim=1). torch.max() returns a tuple of the max values and their indices, and we are only interested in the latter.
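A worked example on four hand-written logit rows (the numbers are made up) shows that both calls give the same predictions and how the accuracy follows from them:

```python
import torch

logits = torch.tensor([[2.0, 0.1, 0.3],
                       [0.2, 1.5, 0.1],
                       [0.3, 0.2, 0.9],
                       [1.1, 0.4, 0.2]])
labels = torch.tensor([0, 1, 2, 1])

predicted = torch.argmax(logits, dim=1)
_, predicted_via_max = torch.max(logits, dim=1)  # (values, indices)

accuracy = 100 * (predicted == labels).sum().item() / labels.shape[0]
print(predicted.tolist(), accuracy)
```

Three of the four rows have their largest logit at the labelled class, so the accuracy is 75%.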

Another interesting thing is the value.item() call. This method can only be used on tensors that hold a single value, like the loss. For larger tensors we usually do something like t.detach().cpu().numpy(). Detach makes sure no gradients are tracked. Then we move the tensor back to the CPU and convert it to a NumPy array.
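Both conversions side by side, as a small sketch with arbitrary values:

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)
loss = (w ** 2).sum()  # a tensor holding a single value

loss_value = loss.item()            # plain Python float
w_array = w.detach().cpu().numpy()  # NumPy array without gradient tracking
print(type(loss_value), type(w_array))
```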

Finally, notice the difference between len(val_loader) and len(val_loader.dataset). len(val_loader) returns the total number of batches the dataset was split into. len(val_loader.dataset) is the number of data samples.

Writing the training loop

    def train_one_epoch(model, optimizer, train_loader, device):
        model.train()
        criterion = nn.CrossEntropyLoss()
        loss_step = []
        correct, total = 0, 0
        for (inp_data, labels) in train_loader:
            labels = labels.view(labels.shape[0]).to(device)
            inp_data = inp_data.to(device)
            outputs = model(inp_data)
            loss = criterion(outputs, labels)
            # Backpropagation and parameter update
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            # Bookkeeping only, no gradients needed
            with torch.no_grad():
                _, predicted = torch.max(outputs, 1)
                total += labels.size(0)
                correct += (predicted == labels).sum()
                loss_step.append(loss.item())
        loss_curr_epoch = np.mean(loss_step)
        train_acc = (100 * correct / total).cpu()
        return loss_curr_epoch, train_acc

    def train(model, optimizer, num_epochs, train_loader, val_loader, device):
        best_val_loss = 1000
        best_val_acc = 0
        model = model.to(device)
        dict_log = {"train_acc_epoch":[], "val_acc_epoch":[], "loss_epoch":[], "val_loss":[]}
        pbar = tqdm(range(num_epochs))
        for epoch in pbar:
            loss_curr_epoch, train_acc = train_one_epoch(model, optimizer, train_loader, device)
            val_acc, val_loss = validate(model, val_loader, device)
            msg = (f'Ep {epoch}/{num_epochs}: Accuracy: Train:{train_acc:.2f} Val:{val_acc:.2f} '
                   f'|| Loss: Train {loss_curr_epoch:.3f} Val {val_loss:.3f}')
            pbar.set_description(msg)
            # Keep the per-epoch metrics for plotting later on
            dict_log["train_acc_epoch"].append(train_acc)
            dict_log["val_acc_epoch"].append(val_acc)
            dict_log["loss_epoch"].append(loss_curr_epoch)
            dict_log["val_loss"].append(val_loss)
        return dict_log

model.train() switches the layers (e.g. dropout, batch norm) back to their training behaviour.

The main difference is that backpropagation and the update rule come into play here via:

    loss = criterion(outputs, labels)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

First, loss must always be a scalar. Second, each trainable parameter has an attribute called grad. This attribute is a tensor of the same shape as the parameter, and it is where the gradients are stored. By calling optimizer.zero_grad() we go through all the parameters and reset their gradient values to zero. In pseudocode:

    for param in parameters:
        param.grad = 0

Why? Because the new gradients must be computed during loss.backward(). During a backward call the gradients are computed and added to the previously existing values.

    for param, new_grad in zip(parameters, new_gradients):
        param.grad = param.grad + new_grad

That gives a lot of flexibility with respect to how often we update our model. This can be useful, for instance, to train with a bigger batch size than our hardware allows, a technique called gradient accumulation.
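Here is a minimal sketch of gradient accumulation on a toy linear model. The model, the data and the choice of two micro-batches are assumptions for illustration; the point is only that gradients from several backward calls add up in .grad.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(5, 1)
criterion = nn.MSELoss()
x, y = torch.randn(8, 5), torch.randn(8, 1)

# Reference: gradient of the full batch of 8 samples.
model.zero_grad()
criterion(model(x), y).backward()
full_grad = model.weight.grad.clone()

# Accumulation: two micro-batches of 4, each loss divided by the number of micro-batches.
model.zero_grad()
for x_part, y_part in zip(x.chunk(2), y.chunk(2)):
    (criterion(model(x_part), y_part) / 2).backward()  # gradients add up in .grad
accumulated_grad = model.weight.grad.clone()

same = torch.allclose(full_grad, accumulated_grad, atol=1e-6)
print(same)
```

Dividing each micro-batch loss by the number of micro-batches makes the accumulated gradient match the mean-reduced loss of the large batch; the optimizer step would then be taken once after the loop.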

In most cases we need to update the parameters at each step. Thus, the new gradients must be stored after the values from the previous batch have been cleared.

Computing the gradients is not the same as updating the parameters. We need to go through all the model's parameters once again and apply the update rule with optimizer.step(), like:

    for param in parameters:
        param = param - lr * param.grad
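For plain SGD (no momentum, no weight decay) the pseudocode above can be checked against the real optimizer. The toy model, the learning rate and the loss are placeholders; the training script later in this tutorial additionally uses momentum and weight decay, which change the update rule.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)
lr = 0.1
model = nn.Linear(3, 1)
optimizer = optim.SGD(model.parameters(), lr=lr)  # plain SGD

loss = model(torch.randn(4, 3)).pow(2).mean()
optimizer.zero_grad()
loss.backward()

# What the update rule predicts for each parameter.
expected = [p.detach() - lr * p.grad for p in model.parameters()]

optimizer.step()
matches = all(torch.allclose(p, e) for p, e in zip(model.parameters(), expected))
print(matches)
```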

The rest is the same as in the validation function. Both losses and accuracies per epoch are stored in a dictionary for plotting later on.

Putting it all together

    in_channels = 3 * 32 * 32
    num_classes = 10
    hidden_sizes = [128]
    epochs = 50
    lr = 1e-3
    momentum = 0.9
    wd = 1e-4
    # Fall back to the CPU when no GPU is available
    device = 'cuda:0' if torch.cuda.is_available() else 'cpu'
    model = MLP(in_channels, num_classes, hidden_sizes).to(device)
    optimizer = optim.SGD(model.parameters(), lr=lr, momentum=momentum, weight_decay=wd)
    dict_log = train(model, optimizer, epochs, train_loader, val_loader, device)

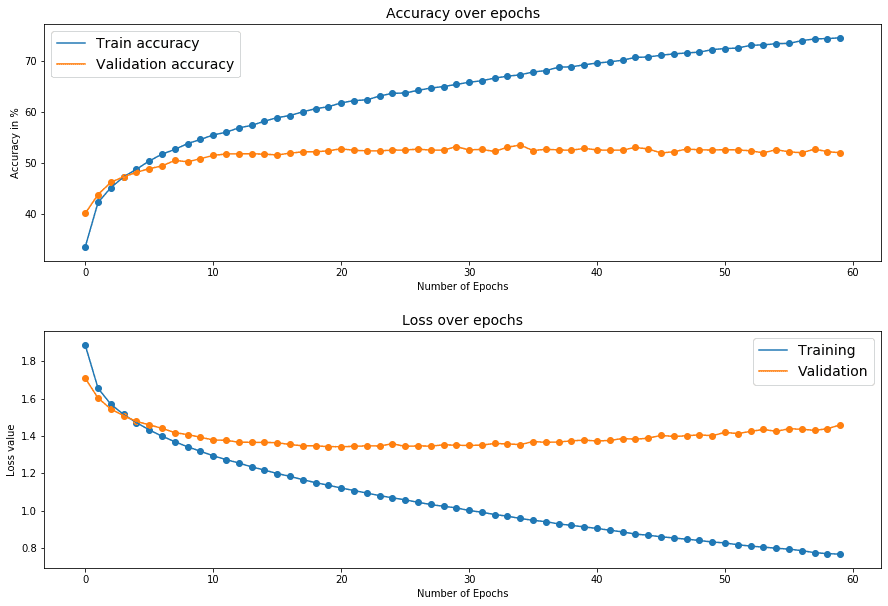

Best validation accuracy: 53.52% on CIFAR10 using a two-layer MLP.

Losses and accuracies during training.

Design choices

So how do you design and train an MLP neural network?

- Batch size: very small batch sizes, typically < 8, may lead to unstable training or even failure because of numerical issues. The default in a PyTorch DataLoader is 1, so make sure to always specify the batch size! Once a student was complaining that training took 3 hours (instead of 5 minutes) because he forgot to specify the batch size. Use multiples of 32 for maximum GPU utilization, if possible.
- Independent and Identically Distributed (IID): batches should ideally follow the IID assumption, so make sure to always shuffle your training data, unless you have a very specific reason not to.
- Always go from simple to complex design choices when designing models. In terms of model architecture and size, this translates to starting from a small network. Go big when you think that performance saturates. Why? Because a small model may already perform sufficiently well for your use case. In the meantime, you save tons of time, since smaller models train faster. Imagine that in a real-life scenario you will need to train your model multiple times to figure out the best setup, or even retrain it as more data becomes available.
- Always shuffle your training data. Don't shuffle the validation and test set.
- Design flexible model implementations. Even though we start small and use only one hidden layer, there is nothing stopping us from going big. Our model implementation supports as many layers as we want. In practice, I have rarely seen an MLP with more than 3 layers and more than 4096 dimensions.
- Increase model dimensions in multiples of 32. The optimization space is insanely huge, so make sensible choices, like taking the hardware (GPU) into account.
- Add regularization after you identify overfitting and not before.
- If you have no idea about the model size, start by overfitting a small subset of data without augmentations (check torch.utils.data.Subset).
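For the last point, a small subset can be taken with torch.utils.data.Subset. The sketch uses a random stand-in dataset and an arbitrary subset size of 512; with CIFAR10 one would pass trainset instead.

```python
import torch
from torch.utils.data import DataLoader, Subset, TensorDataset

# Stand-in for the real training set, to keep the sketch download-free.
full_dataset = TensorDataset(torch.randn(1000, 3, 32, 32), torch.randint(0, 10, (1000,)))

# Keep only the first 512 samples and train on those until the loss collapses.
small_train = Subset(full_dataset, range(512))
small_loader = DataLoader(small_train, batch_size=64, shuffle=True)
print(len(small_train), len(small_loader))
```

If a model cannot drive the training loss close to zero on such a tiny subset, that usually hints at a bug in the pipeline or at a model that is too small.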

To convince you even more, here is an online tutorial in which someone used 3 hidden layers on CIFAR10 and achieved the same validation accuracy as us (~53%).

Conclusion & where to go next

Is our classifier good enough?

Well, yes! Compared to a random guess (1/10), we are able to get the correct class more than 50% of the time.

Is our classifier good compared to a human?

No, human-level image recognition on this dataset would easily be more than 90%.

What is our classifier lacking?

You will find out in the next tutorial.

Please note the entire code is available on GitHub. Stay tuned!

At this point you need to implement your own models on new datasets. An example: try to improve your classifier even more by adding regularization to prevent overfitting. Post your results on social media and tag us along.

Finally, if you feel like you need a structured project to get your hands dirty, consider these extra resources:

Or you can try our very own course: Introduction to Deep Learning & Neural Networks
