Have you ever been stuck on an error for way too long? I remember once when I spent over 2 weeks on a silly little typo that didn’t crash the program but returned inexplicable results. I literally couldn’t sleep because of this. Because I’m 100% certain that this has happened to you as well, in this 4th episode of the “Deep Learning in Production” series we are focusing on how to debug Deep Learning code and how to use logging to catch bugs and errors before deploying our model. We will use Tensorflow to showcase some examples (following the example of image segmentation we have built over the past 3 articles) but the exact same principles apply also to PyTorch and all the other AI frameworks.

As I said in the introduction of the series, Machine Learning is ordinary software and should always be treated like one. And one of the most essential parts of the software development lifecycle is debugging. Proper debugging can help eliminate future pains when our algorithms are up and running and used by real users, and can make our system as robust and reliable as our users expect it to be. It is also integral in the early stages of coding to speed up the development of our algorithm.

How to debug Deep Learning?

Deep Learning debugging is more difficult than debugging normal software for several reasons:

- Poor model performance doesn’t necessarily mean bugs in the code
- The iteration cycle (building the model, training, and testing) is quite long
- Training/testing data can also have errors and anomalies
- Hyperparameters affect the final accuracy
- It’s not always deterministic (e.g. probabilistic machine learning)
- Static computation graph (e.g. Tensorflow and CNTK)

Based on the above, the best way to start thinking about debugging is to simplify the ML model development process as much as possible. And I mean simplify to a ridiculous level. When experimenting with our model, we should start from a very simple algorithm, with only a handful of features, and gradually keep expanding by adding features and tuning hyperparameters while keeping the model simple. Once we find a satisfactory set of features, we can start increasing our model’s complexity, keep track of the metrics, and continue incrementally until the results are satisfactory for our application.

But even then bugs and anomalies might occur. Actually, they will definitely occur. When they do, our next step is to take advantage of Python’s debugging capabilities.

Python debugger (Pdb)

The Python debugger is part of the standard Python library. The debugger is essentially a program that can monitor the state of our own program while it is running. The most important feature of any debugger is the breakpoint. We can set a breakpoint anywhere in our code and the debugger will stop the execution at this exact point and give us access to the values of all the variables at that point as well as the traceback of Python calls.
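To make the idea concrete, here is a minimal sketch of setting a breakpoint directly from code with Python's built-in `breakpoint()` (available since Python 3.7). The function `mean_pixel_value` and its input are hypothetical and exist only for illustration.

```python
def mean_pixel_value(pixels):
    """Hypothetical helper, used only to illustrate a breakpoint."""
    total = 0
    for value in pixels:
        # The debugger stops right after this call and opens a (Pdb) prompt.
        # Handy commands: `p total` prints a variable, `n` runs the next line,
        # `s` steps into a call, `c` continues to the next breakpoint, `q` quits.
        breakpoint()
        total += value
    return total / len(pixels)

# Calling mean_pixel_value([0, 128, 255]) from a terminal drops into Pdb.
# Setting the environment variable PYTHONBREAKPOINT=0 turns every
# breakpoint() call into a no-op without touching the code.
```

The environment variable is convenient when a stray `breakpoint()` must not block a non-interactive run.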

There are two ways to interact with the Python debugger: the command line and IDEs. If you want to use the terminal you can go ahead, but I must warn you that it’s quite tedious (I’ll leave a link for you at the end). Thank god for IDEs. Since we have used PyCharm throughout the series, we will stay consistent and use it here as well.

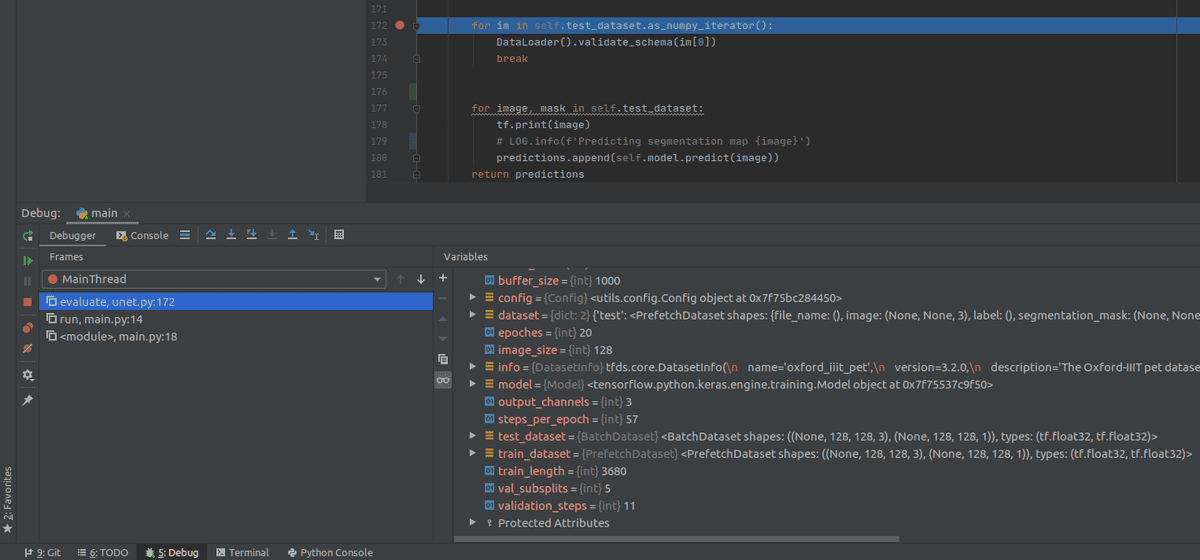

Let’s have a closer look at the above image. As you can see, we have set a breakpoint (the red dot on line 172) at the beginning of the for loop in the “predict” function and then we press the debug button on the top right corner.

The program is then executed normally until it hits the breakpoint, where the debugger pauses it. In the debug window below the code, we can see all the variables at that point. Let’s say for example that we wanted to check the training length: as you can see it’s 3680. Or the image size of the input data. Again it’s very easy to see that it’s 128.

Tip: Using the debugger, we can access any variable we want, anywhere. So we can avoid having print statements everywhere.

From the breakpoint, we can continue to another breakpoint, or we can finish the program’s execution. We also have a 3rd option. We can use the step operation of the debugger to go to the next line of code. And then to the line after that. That way, we can choose to run our code as slowly as we want until we figure out what the heck is wrong.

Let’s now see a short video from the official Tensorflow channel on how PyCharm, the Python debugger, and Tensorflow play along. It’s only 3 minutes.

Debug Data (schema validation)

Now that we have a decent way to find bugs in the code, let’s have a look at the second most common source of errors in Machine Learning: data. Data isn’t always in perfect form (in fact, it never is). It may contain corrupted data points, some values may be missing, and it may have a different format or a different range/distribution than expected.

To catch all these before training or prediction, one of the most common ways is Schema Validation. We can define a schema as a contract for the format of our data. Practically, the schema is a JSON file containing all the required features for a model, their form and their type. Note that it can be whichever format we want (many TensorFlow models use proto files). To monitor the incoming data and catch abnormalities, we can validate them against the schema.

Schema validation is especially useful when the model is deployed in a production environment and accepts user data. In the case of our project, once we have the UNet running in production, users will be able to send whatever image they want. So we need to have a way to validate them.

Since our input data are images, which are essentially 4-dimensional arrays of shape [batch, channels, height, width], our JSON schema will look as depicted below. (This is not what your typical schema will look like, but because in our case we have images as input, we should be precise and give the correct schema.)

SCHEMA = {
    "type": "object",
    "properties": {
        "image": {
            "type": "array",
            "items": {
                "type": "array",
                "items": {
                    "type": "array",
                    "items": {
                        "type": "array",
                        "items": {
                            "type": "number"
                        }
                    }
                }
            }
        }
    },
    "required": ["image"]
}

So what’s going on up there?

Our data type is an object, as you can see in the first line. This object contains a property called “image” which is of type array and has the shown items. Typically your schema will end at this point, but in our case we need to go deeper to declare all 4 dimensions of our image.

You can think of it as a kind of recursion where we define the same item inside the other. Deep into the recursion, we define the type of our values to be numeric. Finally, the last line of the schema indicates all the required properties of our object. In this case, it’s just the image.

A more typical example of a schema will look like this:

SCHEMA = {
    "type": "object",
    "properties": {
        "feature-1": {
            "type": "string"
        },
        "feature-2": {
            "type": "integer"
        },
        "feature-3": {
            "type": "string"
        }
    },
    "required": ["feature-1", "feature-3"]
}

I hope that clears things up. Once we have our schema, we can use it to validate our data. In Python, the jsonschema package (a third-party library, installable with pip) can help us do exactly that.

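import jsonschema

from configs.data_schema import SCHEMA


class DataLoader:
    """Data Loader class"""

    @staticmethod
    def validate_schema(data_point):
        # Wrap the array in the object layout SCHEMA expects and validate it;
        # jsonschema raises a ValidationError if the data does not conform.
        jsonschema.validate({'image': data_point.tolist()}, SCHEMA)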

So we can call the “validate_schema” function whenever we like to check our data against our schema. Pretty easy I dare to say.
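As a rough illustration of what a failed validation looks like, the sketch below checks two hypothetical records against a small schema in the style of the "typical" example above. The feature names, the records and the `is_valid` helper are made up for illustration; `jsonschema.validate` and `jsonschema.ValidationError` are the library's own entry points.

```python
import jsonschema

# Hypothetical schema and records, for illustration only.
SCHEMA = {
    "type": "object",
    "properties": {
        "feature-1": {"type": "string"},
        "feature-2": {"type": "integer"},
    },
    "required": ["feature-1"],
}


def is_valid(record):
    """Return True if the record satisfies SCHEMA, False otherwise."""
    try:
        jsonschema.validate(record, SCHEMA)
        return True
    except jsonschema.ValidationError as err:
        # err.message describes which rule was violated.
        print(f"Invalid record: {err.message}")
        return False


good = {"feature-1": "cat", "feature-2": 3}
bad = {"feature-2": "three"}  # wrong type and missing required field

print(is_valid(good))
print(is_valid(bad))
```

Catching the exception at the boundary of the system lets us reject or log a bad input instead of letting it reach the model.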

Caveat: Schema validation is an expensive and fairly slow operation in general, so we should think carefully about where and when to enforce it, as it will affect our program’s performance.

Advanced Tip: For those who use TensorFlow Extended (TFX) to serve their models, the data validation library can infer a schema automatically from the data. But we will discuss more on TFX in another article.

Logging

Logging goes toe to toe with Debugging. But why do we need to keep logs? Logs are an essential part of troubleshooting application and infrastructure performance. When our code is executed in a production environment on a remote machine, let’s say Google Cloud, we can’t really go there and start debugging stuff. Instead, in such remote environments, we use logs to have a clear picture of what’s going on. Logs are there not only to capture the state of our program but also to discover possible exceptions and errors.

But why not use simple print statements? Aren’t they enough? Actually no, they are not! Why? Let’s outline some advantages logs provide over the print statement:

- We can log different severity levels (DEBUG, INFO, WARNING, ERROR, CRITICAL) and choose to show only the level we care about. For example, we can stuff our code with debug logs but we may not want to show all of them in production to avoid having millions of rows, and instead show only warnings and errors.
- We can choose the output channel (print statements write to the console by default). Some of our options are writing them to a file, sending them over HTTP, printing them on the console, streaming them to a secondary location, or even sending them over email.
- Timestamps are recorded with every log entry and can be included in the output through the format string.
- The format of the message is easily configurable.

A general rule of thumb is to avoid print statements as much as possible and replace them with either debugging processes as shown above or logs.
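The first two advantages can be sketched with nothing but the standard library. In the example below, two in-memory streams stand in for the console and for a log file; the logger name `demo` and the messages are hypothetical.

```python
import io
import logging

logger = logging.getLogger("demo")  # hypothetical logger name
logger.setLevel(logging.DEBUG)      # the logger itself lets everything through
logger.propagate = False            # keep the demo isolated from the root logger

# Channel 1: stands in for the console; shows warnings and above only.
console_buffer = io.StringIO()
console = logging.StreamHandler(console_buffer)
console.setLevel(logging.WARNING)
console.setFormatter(logging.Formatter("%(asctime)s - %(levelname)s - %(message)s"))

# Channel 2: stands in for a log file; keeps everything, debug included.
file_buffer = io.StringIO()
file_like = logging.StreamHandler(file_buffer)
file_like.setLevel(logging.DEBUG)
file_like.setFormatter(logging.Formatter("%(levelname)s:%(message)s"))

logger.addHandler(console)
logger.addHandler(file_like)

logger.debug("batch shape is (32, 128, 128, 3)")
logger.warning("learning rate looks unusually high")

print(console_buffer.getvalue())  # only the warning, prefixed with a timestamp
print(file_buffer.getvalue())     # both messages
```

In a real project, `logging.FileHandler` would replace the second stream; the level filtering works the same way.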

And it’s incredibly easy to use. Don’t believe me? Let’s dive in and use it in our codebase…

Python Logging module

Python’s default module for logging is called… well, logging. In order to use it, all we have to do is:

import logging

logging.warning('Warning. Our pants are on fire...')

But since we are developing a production-ready pipeline with highly extensible code, let’s use it in a more elegant way. I will go into the utils folder and create a file called “logger.py” so we can import it anywhere we like.

import logging.config

import yaml

# Load the logging configuration once, when the module is first imported
with open('configs/logging_config.yaml', 'r') as f:
    config = yaml.safe_load(f.read())

logging.config.dictConfig(config)
logging.captureWarnings(True)


def get_logger(name: str):
    """Returns a logger with the given name

    Args:
        name(str): name of logger
    """
    logger = logging.getLogger(name)
    return logger

The “get_logger” function will be imported whenever we want to log some stuff and it will create a logger with a specific name. The name is essential so we can identify the origin of our log rows. To make the logger easily configurable, I will put all the specifications inside a config file. And since we have already seen JSON, let’s use a different format called YAML (in practice it’s better to stick to a single format, but here I will use a different one for educational purposes).

Our file will load the YAML file and will pass its parameters into the logging module (globally) to set its default behavior.

A simple configuration file looks something like this:

version: 1
formatters:
  simple:
    format: '%(asctime)s - %(name)s - %(levelname)s - %(message)s'
handlers:
  console:
    class: logging.StreamHandler
    formatter: simple
    stream: ext://sys.stdout
root:
  level: DEBUG
  handlers: [console]

As you can see, we set the default format in the formatters node, we define the console as the output channel and streaming as the transmission method, and we set the default level to DEBUG. That means all logging at or above that level will be printed. For future reference, the order of levels is: DEBUG < INFO < WARNING < ERROR < CRITICAL
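For readers who want to try the configuration without a YAML file, the same settings can be written as a plain Python dict and passed to `logging.config.dictConfig`. This is only a sketch: the logger name `unet-demo` is hypothetical, and the root level is raised to INFO here to show how the level filters messages.

```python
import logging
import logging.config

# Same structure as the YAML file, expressed as a dict (root level set to INFO).
CONFIG = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "simple": {"format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s"}
    },
    "handlers": {
        "console": {
            "class": "logging.StreamHandler",
            "formatter": "simple",
            "stream": "ext://sys.stdout",
        }
    },
    "root": {"level": "INFO", "handlers": ["console"]},
}

logging.config.dictConfig(CONFIG)
log = logging.getLogger("unet-demo")  # hypothetical logger name

log.debug("below the configured level, so it is dropped")
log.info("at the configured level, so it is printed")
log.error("above the configured level, so it is printed")
```

Switching the root level between DEBUG and INFO in the config is then enough to make the same code verbose during development and quieter in production.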

For more details on logging configuration, you can check out the link at the end of the article as always.

So whenever we need to log something, all we have to do is import the file and use the built-in functions such as .info(), .debug() and .error()

from utils.logger import get_logger

LOG = get_logger('unet')


def evaluate(self):
    """Predicts results for the test dataset"""
    predictions = []
    LOG.info('Predicting segmentation map for test dataset')

    for image, mask in self.test_dataset:
        LOG.debug(f'Predicting segmentation map {image}')
        predictions.append(self.model.predict(image))
    return predictions

What I like to do is to log info on critical turning points such as “Data loading”, “Data preprocessed”, “Training started” and use debug to print data points, variables and lower-level stuff.

Tip: In general, most engineers log info and above when the code is executed in a production environment and keep the debug level for when things break or to debug some functionality.

Last but not least, I want to close this article by mentioning a few extremely useful Tensorflow functions and packages we can use to log Tensorflow-related stuff.

Useful Tensorflow debugging and logging functions

I feel like I should warn you that in this paragraph we will take a rather deep dive into Tensorflow, so if you are not familiar with it or you prefer a different framework, feel free to skip. But since our codebase for this series is using Tensorflow, I couldn’t really avoid mentioning these. I warned you in the first article that we are going to dive into details here. So if you are still with me, let’s do it.

Let’s start with what a computational graph is. I couldn’t describe it better than Andrew Ng. This short video makes things crystal clear.

Tensorflow code is not your normal code and, as we said, it’s not trivial to debug and test it. One of the main reasons is that Tensorflow used to have a static computational graph, meaning that you had to define the model, compile it and then run it. This made debugging much, much harder, because you couldn’t access variables and states as you normally do in Python.

However, in Tensorflow 2.0 the default execution mode is the eager (dynamic) mode, meaning that the graph is dynamic, following the PyTorch pattern. Of course, there are still cases when the code can’t be executed eagerly. And even now, the computational graph still exists in the background. That’s why we need these functions, as they have been built with that in mind. They just provide additional flexibility that normal logging simply won’t.

Note: The Python debugger does not work if Tensorflow is not running in eager mode because the graph is not yet compiled.

- tf.print is Tensorflow’s built-in print function that can be used to print tensors but also lets us define the output stream and the current level. Its ease of use is based on the fact that it is actually a separate component inside the computational graph, so it communicates by default with all other components. Especially in the case that some function is not run eagerly, normal print statements won’t work and we have to use tf.print().
- tf.Variable.assign can be used to assign values to a variable during runtime, in case you want to test things or explore different alternatives. It will directly change the computational graph so that the new value can be picked up by the rest of the nodes.
- tf.summary provides an API to write summary data into files. Let’s say you want to save metrics to a file or track the values of some tensor. You can do just that with tf.summary. In essence it’s a logging system to save anything you like into a file. Plus it is integrated with Tensorboard so you can visualize your summaries with little to no effort.
- tf.debugging is a set of assert functions (tailored to tensors) that can be put inside your code to validate your data, your weights or your model.
- tf.debugging.enable_check_numerics is part of the same module but I had to mention it separately because it’s simply amazing. This little function will cause the code to error out as soon as an operation’s output tensor contains infinity or NaN. Do I need to say more?
- get_concrete_function(input).graph. This simple but amazing function can be used to convert any Python function into a tf.Graph so we can access all sorts of things from here (shapes, value types etc).
- tf.keras.callbacks are functions that are used during training to pass information to external sources. The most common use case is passing training data into Tensorboard but that is not all. They can also be used to save CSV data, stop the training early based on a metric or even change the learning rate. It’s an extremely useful tool, especially for those who don’t want to write Tensorflow code and prefer the simplicity of Keras:

model.fit(
    x_train,
    y_train,
    batch_size=train_size,
    verbose=0,
    epochs=100,
    validation_data=(x_test, y_test),
    callbacks=[tf.keras.callbacks.TensorBoard(log_dir=logdir)],
)
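A few of the functions listed above can be combined in one small sketch. The `normalize` function below is hypothetical and is not part of the series' codebase; it only illustrates `tf.print`, `tf.debugging.assert_all_finite` and `get_concrete_function(...).graph` inside a `tf.function`, assuming TensorFlow 2.x is installed.

```python
import tensorflow as tf


@tf.function  # forces graph execution, where a plain print() runs only while tracing
def normalize(images):
    """Hypothetical preprocessing step, used only to illustrate the helpers."""
    # Fail fast if any value is NaN or Inf.
    images = tf.debugging.assert_all_finite(images, "images contain NaN or Inf")
    # tf.print is part of the graph, so it runs on every call.
    tf.print("max pixel value:", tf.reduce_max(images))
    return images / 255.0


scaled = normalize(tf.fill([1, 4, 4, 3], 255.0))

# Inspect the traced graph for a given input signature.
concrete = normalize.get_concrete_function(
    tf.TensorSpec([None, 128, 128, 3], tf.float32)
)
print([op.type for op in concrete.graph.get_operations()])
print(concrete.outputs[0].shape)
```

Passing a tensor that contains NaN to `normalize` raises `tf.errors.InvalidArgumentError`, which is usually far easier to trace than a loss that silently turns into NaN many steps later.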

Conclusion

So here we are. We saw how we can debug our machine learning code using Python’s debugger and PyCharm, then we talked about data debugging using schema validation, and then we discovered why to use logs and how to utilize them in Python. Last but not least, we provided a list of TensorFlow functions that can alleviate pain points when it comes to debugging and logging of Deep Learning code. By the way, if you want something similar for PyTorch don’t hesitate to ping us on our social pages on X or LinkedIn.

What we’ve covered so far: best practices to write deep learning code, unit testing, logging and debugging. I think that we can say with certainty that our code should be robust and reliable by now. So what’s next? I think it’s time to get our hands dirty with data pipelines. Don’t you think?

What we will cover next: Data processing techniques such as vectorization, batching, prefetching and parallel execution

As side material, I strongly suggest the TensorFlow: Advanced Techniques Specialization course by deeplearning.ai hosted on Coursera, which will give you a foundational understanding of Tensorflow

So stay tuned…
