Neural Architecture Search (NAS) is the process of automating the design of a neural network’s topology in order to achieve the best performance on a specific task. The goal is to design the architecture using limited resources and minimal human intervention.

Following the work of Ren et al., let’s discuss a general framework for NAS. At its core, NAS is a search algorithm.

- It operates on the search space of possible network topologies, which consists of a list of predefined operations (e.g. convolutional, recurrent, pooling and fully connected layers) and their connections.
- A controller then chooses a list of candidate architectures from the search space.
- The candidate architectures are trained and ranked based on their performance on the validation set.
- The ranking is used to readjust the search and obtain new candidates.
- The process iterates until a stopping condition is reached and returns the optimal architecture.
- The optimal architecture is evaluated on the test set.

NAS general framework
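The steps of the general framework can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the operation names, the `evaluate` score (a stand-in for training a candidate and measuring validation accuracy) and the weight-based controller are all hypothetical and do not come from any specific NAS paper.

```python
import random

# Hypothetical toy search space: one operation choice per layer position.
SEARCH_SPACE = ["conv3x3", "conv5x5", "maxpool", "identity"]
NUM_LAYERS = 4

def evaluate(arch):
    """Stand-in for 'train the candidate and measure validation accuracy'.
    Assumption: this toy score simply rewards convolutions."""
    return sum(op.startswith("conv") for op in arch) / len(arch)

def nas_loop(num_iterations=20, candidates_per_iter=8, seed=0):
    rng = random.Random(seed)
    # Controller state: one sampling weight per (layer, operation).
    weights = [{op: 1.0 for op in SEARCH_SPACE} for _ in range(NUM_LAYERS)]
    best_arch, best_score = None, float("-inf")
    for _ in range(num_iterations):
        # 1) The controller proposes candidate architectures.
        candidates = [
            tuple(rng.choices(SEARCH_SPACE, weights=[w[op] for op in SEARCH_SPACE])[0]
                  for w in weights)
            for _ in range(candidates_per_iter)
        ]
        # 2) Candidates are evaluated and ranked.
        ranked = sorted(candidates, key=evaluate, reverse=True)
        # 3) The ranking readjusts the search: favour the ops of the top candidate.
        for layer, op in enumerate(ranked[0]):
            weights[layer][op] += 1.0
        if evaluate(ranked[0]) > best_score:
            best_arch, best_score = ranked[0], evaluate(ranked[0])
    # 4) The best architecture found would finally be evaluated on the test set.
    return best_arch, best_score

best_arch, best_score = nas_loop()
```

Swapping the controller update in step 3 for a different rule is what distinguishes the search strategies discussed in the rest of the article.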

In general, the landscape of NAS algorithms is quite confusing. The most popular categorization characterizes NAS based on three major components:

a) the search space

b) the search strategy, which involves the type of controller and the evaluation of the candidates, and

c) the performance evaluation technique.

You can refer to this excellent review by Elsken et al. for more details. Also feel free to consult the great article by Lilian Weng as an extra resource.

However, recent approaches combine the search strategy with the evaluation step, making it hard to tell the algorithms apart.

For that reason, we will explore NAS based only on the search strategy. As we progress, we will examine different search spaces and evaluation techniques. Also note that many implementations experiment with different types of search strategies, so the following categorization is not always strict.

Search strategy refers to the methodology used to search for the optimal architecture in the search space. We can classify NAS algorithms by their search strategy into 5 main areas:

- Random search
- Reinforcement learning
- Evolutionary algorithms
- Sequential model-based optimization
- Gradient optimization

Random search

The most naive approach is obviously random search, which is often used as a baseline. Here a valid architecture is chosen at random, with no learning involved whatsoever.
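As a rough sketch, a random search baseline needs nothing more than a sampler and a scoring function. The search space and the `toy_score` function below are made-up placeholders; in practice the score would come from training each sampled architecture and measuring its validation performance.

```python
import random

# Hypothetical search space: each hyperparameter has a list of valid options.
SPACE = {
    "num_layers": [2, 4, 6],
    "kernel_size": [3, 5, 7],
    "num_filters": [16, 32, 64],
}

def sample_architecture(rng):
    """Pick every option uniformly at random; nothing is learned."""
    return {name: rng.choice(options) for name, options in SPACE.items()}

def random_search(evaluate, num_trials, seed=0):
    rng = random.Random(seed)
    trials = [sample_architecture(rng) for _ in range(num_trials)]
    return max(trials, key=evaluate)

def toy_score(arch):
    """Toy stand-in for validation accuracy (an assumption, not a benchmark)."""
    return arch["num_layers"] * arch["num_filters"] / arch["kernel_size"]

best = random_search(toy_score, num_trials=50)
```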

Reinforcement learning

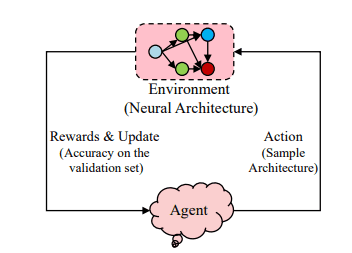

NAS can be very elegantly formulated as an RL problem. The agent’s action is the generation of a neural architecture, while the agent’s reward is the performance evaluation. The action space is of course the search space. As a result, different RL methods can be used to solve the problem.

NAS with Reinforcement Learning

Early works on NAS (NAS-RL, NASNet) used a recurrent neural network (RNN) as a policy network (controller). The RNN is responsible for generating candidate architectures. Each architecture is then trained and evaluated on the validation set. The parameters of the RNN controller are optimized in order to maximize the expected validation accuracy. How? Using policy gradient techniques such as REINFORCE and Proximal Policy Optimization (PPO).

An RNN controller samples a convolutional network to predict its hyperparameters
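To make the policy-gradient idea concrete, here is a minimal REINFORCE sketch. It deliberately simplifies the setup: instead of an RNN, the controller is a table of independent logits (one row per layer), and the reward is a toy function rather than a real validation accuracy. All names and numbers are assumptions for illustration.

```python
import math
import random

OPS = ["conv3x3", "conv5x5", "maxpool"]   # hypothetical operation set
NUM_LAYERS = 3

def softmax(logits):
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reward(arch):
    """Toy stand-in for validation accuracy: fraction of 'conv3x3' layers."""
    return sum(op == "conv3x3" for op in arch) / len(arch)

def train_controller(steps=500, lr=0.5, seed=0):
    rng = random.Random(seed)
    # Policy parameters: one logit per (layer, operation) instead of an RNN.
    logits = [[0.0] * len(OPS) for _ in range(NUM_LAYERS)]
    baseline = 0.0  # moving average of rewards, reduces gradient variance
    for _ in range(steps):
        probs = [softmax(row) for row in logits]
        # Action: sample one operation per layer from the current policy.
        actions = [rng.choices(range(len(OPS)), weights=p)[0] for p in probs]
        r = reward([OPS[a] for a in actions])
        advantage = r - baseline
        baseline = 0.9 * baseline + 0.1 * r
        # REINFORCE: the gradient of log pi(a) w.r.t. the logits is onehot(a) - probs.
        for layer, a in enumerate(actions):
            for k in range(len(OPS)):
                grad = (1.0 if k == a else 0.0) - probs[layer][k]
                logits[layer][k] += lr * advantage * grad
    return logits

logits = train_controller()
# Greedy read-out of the most likely architecture under the trained policy.
greedy_arch = [OPS[max(range(len(OPS)), key=row.__getitem__)] for row in logits]
```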

Similarly, ENAS uses an RNN controller trained with policy gradients. Notably, it is one of the first works that effectively shares parameters among architectures. The intuition is that the architectures can be viewed as parts of a large graph, an approach that has been used extensively, as we will see below. ENAS training is performed in two alternating steps: a) the RNN controller is trained with REINFORCE and b) the shared parameters are trained with standard gradient descent.

Another successful effort called MetaQNN uses Q-learning with an ε-greedy exploration mechanism and experience replay.

Before we proceed, let’s open a parenthesis and discuss the search space.

Global search space

NAS-RL searches over all possible combinations of operations, resulting in a huge and very expensive search space. It combines the operations in order to form chain-structured (a.k.a. sequential) networks. The search space is parameterized by a) the number of layers, b) the type of each operation and c) the hyperparameters of each operation (e.g. kernel size, number of filters).
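A short sketch shows how quickly such a chain-structured space grows. The option lists below are hypothetical and intentionally tiny (and, for simplicity, every layer type gets a kernel size and a filter count); real global search spaces are far larger.

```python
from itertools import product

# Hypothetical options for a chain-structured (sequential) search space.
OP_TYPES = ["conv", "pool"]
KERNEL_SIZES = [3, 5]
NUM_FILTERS = [16, 32]
MAX_LAYERS = 3

# One layer = (operation type, kernel size, number of filters).
LAYER_CHOICES = list(product(OP_TYPES, KERNEL_SIZES, NUM_FILTERS))

def count_architectures(max_layers):
    """Number of distinct chains with 1..max_layers layers."""
    return sum(len(LAYER_CHOICES) ** depth for depth in range(1, max_layers + 1))

def enumerate_architectures(depth):
    """All chains of exactly `depth` layers, each a tuple of layer specs."""
    return list(product(LAYER_CHOICES, repeat=depth))

total = count_architectures(MAX_LAYERS)  # 8 + 64 + 512 = 584
```

Even with two options per dimension and at most three layers there are already 584 candidates, and the count grows exponentially with depth.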

Later on, skip connections were also added to the mix, allowing multi-branch architectures such as ResNet-like or DenseNet-like topologies.

Modular search space

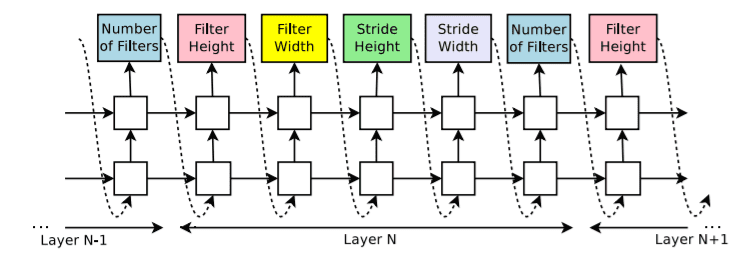

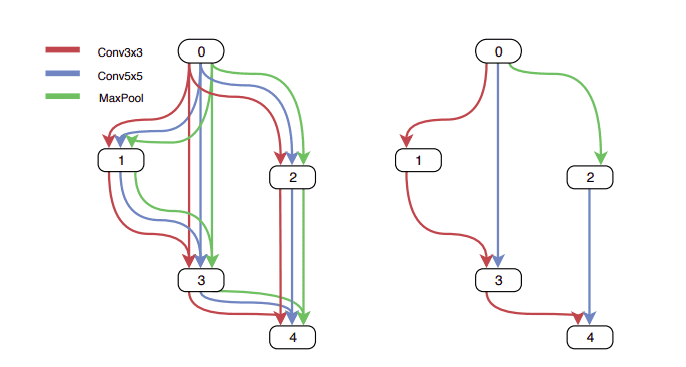

To solve the problem of the global space, cell-based approaches were proposed in order to “modularize” the search space, that is, to combine different blocks of layers called modules. NASNet is the most popular algorithm in that category. NASNet learns only two kinds of modules or “cells”: a normal cell that performs feature extraction and a reduction cell that downsamples the input. The final architecture is built by stacking these cells in a predefined way.

Left: An example of a search space with two cells. Right: An example of the best architecture for a normal cell as found by NAS

The cell-based approach is widely used in other works such as ENAS. But modularization via cells is not the only alternative. FPNAS emphasizes block diversity by alternately optimizing blocks while keeping the other blocks fixed. FBNet uses a layer-wise search space: each searchable layer in the network can choose a different block from the layer-wise search space.

Evolutionary algorithms

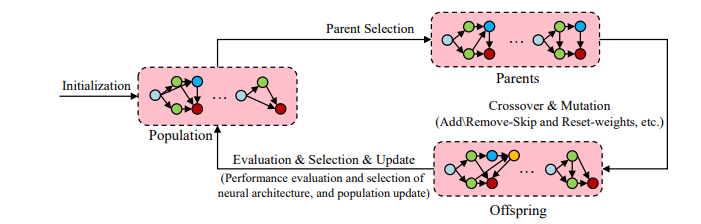

Genetic algorithms (GA) are an alternative way to optimize the network architecture. Evolutionary algorithms start with a population of models. In each step, some models are sampled and “reproduce” to generate offspring by applying mutations to them. Mutations can be local operations such as the addition of a layer, the modification of a hyperparameter, etc. After training, the offspring are evaluated and added back to the population. The process repeats until a certain condition is met.

NAS with evolutionary algorithms

GeNet proposes an encoding method that represents each architecture as a fixed-length binary string, which is then used by a standard genetic algorithm.
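One way to picture such an encoding is to let every bit switch a single connection between two nodes on or off. The sketch below follows that idea for one group of nodes; the bit ordering and helper names are assumptions made for illustration and are not taken from the GeNet implementation.

```python
from itertools import combinations

def _node_pairs(num_nodes):
    """All (i, j) pairs with i < j, ordered by target node j, then by source i."""
    return sorted(combinations(range(num_nodes), 2), key=lambda p: (p[1], p[0]))

def decode(bits, num_nodes):
    """Map a binary string to the list of active edges (i, j)."""
    pairs = _node_pairs(num_nodes)
    if len(bits) != len(pairs):
        raise ValueError("expected %d bits" % len(pairs))
    return [pair for pair, bit in zip(pairs, bits) if bit == "1"]

def encode(edges, num_nodes):
    """Inverse of decode: one bit per possible edge."""
    edge_set = set(edges)
    return "".join("1" if pair in edge_set else "0" for pair in _node_pairs(num_nodes))

def mutate(bits, position):
    """Flip one bit, i.e. add or remove a single connection."""
    flipped = "1" if bits[position] == "0" else "0"
    return bits[:position] + flipped + bits[position + 1:]

# 4 nodes need 4 * 3 / 2 = 6 bits.
edges = decode("101100", num_nodes=4)   # [(0, 1), (1, 2), (0, 3)]
```

Because the string has a fixed length, standard genetic operators such as bit-flip mutation and crossover can be applied without any knowledge of the network it describes.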

AmoebaNet uses the tournament selection evolutionary algorithm, or rather a modification of it called regularized evolution. The difference is that the “age” of each model (the number of GA steps it has survived) is also taken into account by favoring the younger ones. Note that the NASNet search space is used here as well.
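A minimal sketch of regularized (aging) evolution, assuming a toy fitness function in place of real training: the parent is chosen by tournament selection, and the oldest model is removed at every step instead of the worst one. The operation names and hyperparameters are hypothetical.

```python
import random
from collections import deque

OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]  # hypothetical operations

def fitness(arch):
    """Toy stand-in for validation accuracy after training."""
    return sum(op.startswith("conv") for op in arch) / len(arch)

def mutate(arch, rng):
    """Local mutation: replace the operation at one random position."""
    child = list(arch)
    child[rng.randrange(len(child))] = rng.choice(OPS)
    return tuple(child)

def regularized_evolution(cycles=200, population_size=20, sample_size=5, seed=0):
    rng = random.Random(seed)
    population = deque()  # ordered from oldest (left) to youngest (right)
    for _ in range(population_size):
        arch = tuple(rng.choice(OPS) for _ in range(4))
        population.append((arch, fitness(arch)))
    history = list(population)
    for _ in range(cycles):
        # Tournament selection: the best of a random sample becomes the parent.
        tournament = rng.sample(list(population), sample_size)
        parent = max(tournament, key=lambda item: item[1])
        child = mutate(parent[0], rng)
        population.append((child, fitness(child)))
        history.append(population[-1])
        # Aging: discard the oldest model, not the worst one.
        population.popleft()
    return max(history, key=lambda item: item[1])

best_arch, best_fitness = regularized_evolution()
```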

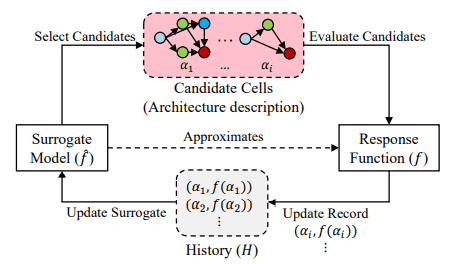

Sequential model-based optimization

In sequential model-based optimization, we can view NAS as a sequential process that iteratively builds more and more complex networks. A surrogate model evaluates all candidate modules (or cells) and selects some promising ones. It then evaluates the performance of the generated network on a validation set and updates itself based on that performance. Through iteration, the model gradually expands and reaches the desired performance.

NAS with sequential model-based optimization

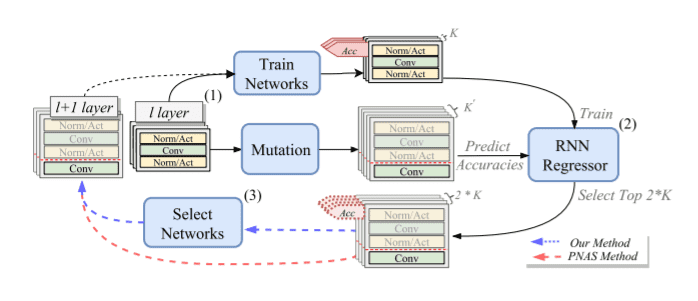

Progressive NAS (PNAS) starts with the NASNet search space and searches the space in a progressive order. Each cell can contain up to 5 predefined blocks. It starts by generating, training, and evaluating all possible cells with just 1 block. It then expands to cells with 2 blocks. This time though, instead of training all cells, a surrogate model (an RNN) predicts the performance of each architecture. Note that the surrogate model is trained on the validation performance of the 1-block cells. The process continues up to 5-block cells.
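The progressive idea can be sketched as a beam search guided by a predictor. The example below is a heavily simplified assumption: a tiny additive surrogate (average score per block type) stands in for the learned predictor, the block names and their values are invented, and `train_and_evaluate` is a toy replacement for real training.

```python
from itertools import product

BLOCKS = ["sep3x3", "sep5x5", "maxpool", "identity"]  # hypothetical block types
BLOCK_VALUE = {"sep3x3": 0.9, "sep5x5": 0.8, "maxpool": 0.5, "identity": 0.3}

def train_and_evaluate(cell):
    """Toy stand-in for training a cell and measuring validation accuracy."""
    return sum(BLOCK_VALUE[b] for b in cell) / len(cell)

class AdditiveSurrogate:
    """Very simple predictor: averages the scores seen for each block type.
    Assumption: this replaces the learned predictor described in the text."""
    def __init__(self):
        self.totals = {b: 0.0 for b in BLOCKS}
        self.counts = {b: 0 for b in BLOCKS}

    def update(self, cell, score):
        for b in cell:
            self.totals[b] += score
            self.counts[b] += 1

    def predict(self, cell):
        return sum(self.totals[b] / max(self.counts[b], 1) for b in cell) / len(cell)

def progressive_search(max_blocks=3, beam_width=2):
    surrogate = AdditiveSurrogate()
    # Level 1: train and evaluate every 1-block cell.
    scored = {(b,): train_and_evaluate((b,)) for b in BLOCKS}
    for cell, score in scored.items():
        surrogate.update(cell, score)
    beam = sorted(scored, key=scored.get, reverse=True)[:beam_width]
    for _ in range(2, max_blocks + 1):
        # Expand each surviving cell by one block and rank with the surrogate.
        expanded = [cell + (b,) for cell, b in product(beam, BLOCKS)]
        expanded.sort(key=surrogate.predict, reverse=True)
        beam = expanded[:beam_width]
        # Only the top predictions are actually trained; results refine the surrogate.
        for cell in beam:
            surrogate.update(cell, train_and_evaluate(cell))
    return max(beam, key=train_and_evaluate)

best_cell = progressive_search()
```

The saving comes from the expansion step: out of all expanded cells, only `beam_width` of them are ever trained at each level.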

DPP-Net follows a very similar approach to PNAS, but it also accounts for the device it is executed on. Given the device on which the search is performed, constraints are set based on its memory size and similar hardware characteristics. Models that do not meet the constraints are either removed from the candidate list or optimized using Pareto optimality.

Illustration of DPP-Net’s search strategy: (1) Train and Mutation, (2) Update and Inference, and (3) Model Selection.

Gradient optimization and one-shot approaches

Gradient optimization methods use, in most cases, a one-shot model. A one-shot model, also known as a supermodel or supernet, is usually a single large network that contains all possible operations in the search space and is used to generate the weights for other candidate networks. How?

After training the model, we can use it to sample “sub-architectures” and compare them on the validation set. In a way, we take advantage of parameter sharing to its maximum.

One-shot architecture search

The one-shot network is usually trained with gradient descent. The question is: how can we run gradient-based methods on discrete search spaces?

Continuous search space

But are we really constrained to discrete spaces? Both global and modular search spaces assume that we have a discrete set of possible solutions. An exciting idea is to transform the space into a continuous and differentiable form.

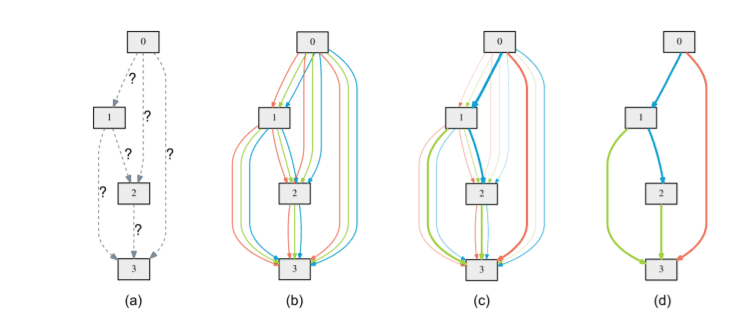

In this direction, DARTS relaxes the NASNet cell-based space in a continuous way by representing each cell as a Directed Acyclic Graph (DAG). Every DAG is formed as an ordered sequence of N nodes and has two input nodes and one output node. The relaxation is performed by formulating the discrete choice of operations as a softmax, i.e. a continuous choice of probabilities.

DARTS treats NAS as a bi-level optimization problem because it jointly trains the architecture parameters and the network weights with gradient descent.

Illustration of DARTS: (a) Operations on the edges are initially unknown. (b) Continuous relaxation of the search space. (c) Joint optimization of the mixing probabilities and the network weights. (d) Inducing the final architecture
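The softmax relaxation on a single edge can be illustrated with plain Python. In this sketch the candidate operations act on a scalar for brevity and their names are hypothetical; in DARTS itself the operations are neural network layers and the architecture parameters (`alphas` here) are learned by gradient descent rather than set by hand.

```python
import math

def softmax(alphas):
    m = max(alphas)
    exps = [math.exp(a - m) for a in alphas]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical candidate operations on one edge, acting on a scalar for brevity.
CANDIDATE_OPS = {
    "identity": lambda x: x,
    "double": lambda x: 2.0 * x,
    "zero": lambda x: 0.0,
}

def mixed_op(x, alphas):
    """Continuous relaxation: softmax-weighted sum of all candidate outputs."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, CANDIDATE_OPS.values()))

def discretize(alphas):
    """After the search, keep only the operation with the largest weight."""
    names = list(CANDIDATE_OPS)
    return names[max(range(len(alphas)), key=alphas.__getitem__)]

alphas = [0.0, 0.0, 0.0]               # architecture parameters of this edge
y = mixed_op(3.0, alphas)              # equal weights: (3 + 6 + 0) / 3
chosen = discretize([0.1, 1.5, -0.3])  # the second operation has the largest alpha
```

Since `mixed_op` is a smooth function of `alphas`, its gradient with respect to the architecture parameters is well defined, which is what makes the search space differentiable.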

In a very similar way, the search space of Stochastic NAS (SNAS) is a set of one-hot random variables drawn from a fully factorizable joint distribution. This search space is made differentiable by relaxing the architecture distribution with the concrete distribution, thus enabling the use of gradient optimization.
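A relaxed one-hot sample from the concrete (Gumbel-softmax) distribution can be drawn as follows. This is a generic sketch of the sampling trick, not SNAS code; the operation probabilities and temperatures are arbitrary example values.

```python
import math
import random

def gumbel_softmax(logits, temperature, rng):
    """Draw a relaxed one-hot sample from the concrete distribution."""
    noisy = []
    for logit in logits:
        u = rng.random()
        while u == 0.0:          # avoid log(0)
            u = rng.random()
        # Gumbel(0, 1) noise via inverse transform sampling.
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    m = max(noisy)
    exps = [math.exp(v - m) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]

rng = random.Random(0)
# Hypothetical probabilities of three candidate operations, as log-probabilities.
logits = [math.log(0.7), math.log(0.2), math.log(0.1)]
soft_sample = gumbel_softmax(logits, temperature=1.0, rng=rng)
sharp_sample = gumbel_softmax(logits, temperature=0.05, rng=rng)
```

Lower temperatures push the sample closer to a true one-hot vector, while higher temperatures give smoother samples with lower-variance gradients.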

NAO, on the other hand, maps the discrete search space to a continuous embedding. That way, the optimal embedding can be obtained using gradient optimization. A decoder is then used to discretize the optimal continuous representation into the final architecture.

One-shot approaches

Both DARTS and NAO fall into one-shot approaches because they use a supermodel to deduce the optimal architectures. Let’s explore some other ones.

SMASH trains an auxiliary model called HyperNet instead of training all possible candidates, reducing the search space even further. The HyperNet is used to generate the weights for other candidate networks. How?

At each training step, they choose a random architecture and feed a description of it (in the form of a one-hot tensor) to the HyperNet in order to generate its weights. After training the entire system, they compare a number of sampled architectures with their generated weights on the validation set. The “best” one is then trained normally.

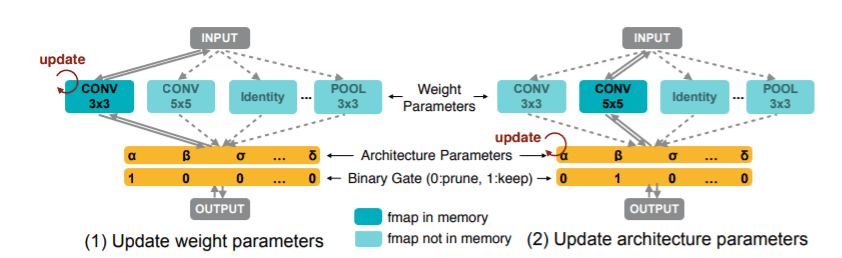

ProxylessNAS proposes a path-level pruning perspective. Its other major contribution is the idea of binarizing the architecture parameters in order to have only one active path at a time. The probabilities that a path will be pruned or not (the architecture parameters) are learned jointly with the weight parameters.

So, starting with an over-parameterized network with all candidate paths, the training alternates between the following two steps:

- The weight parameters are trained by sampling only one path at a time and training it using gradient descent. Note that the architecture parameters are frozen during this step.
- The architecture parameters are trained using BinaryConnect, while the weight parameters are frozen.

Once the training of the architecture parameters is finished, they derive the final architecture by pruning redundant paths.

Learning process of both weight parameters and architecture parameters in the ProxylessNAS approach

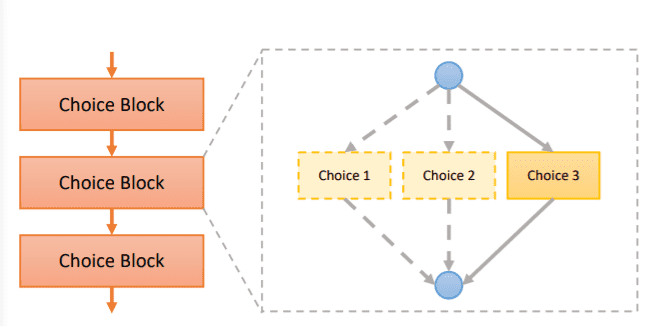

Single Path One-Shot (SPOS) constructs a simplified supermodel in which all architectures are single paths. It also eliminates the need for the joint optimization of architecture parameters and weights that we saw previously, by decoupling supernet training and architecture search into two distinct steps.

The supernet optimization is done by simply sampling paths uniformly, while the actual NAS is performed with an evolutionary algorithm.
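The uniform sampling step is simple enough to sketch directly. The supernet below is a hypothetical list of choice blocks with placeholder candidate names, and the actual weight update is omitted: the code only counts how often each candidate would have been trained.

```python
import random

# Hypothetical supernet: every layer is a choice block with candidate operations.
SUPERNET = [
    ["candidate_a", "candidate_b", "candidate_c", "candidate_d"],
    ["candidate_a", "candidate_b", "candidate_c", "candidate_d"],
    ["candidate_a", "candidate_b", "candidate_c", "candidate_d"],
]

def sample_single_path(supernet, rng):
    """Uniformly pick exactly one candidate index per choice block."""
    return [rng.randrange(len(block)) for block in supernet]

def supernet_training(supernet, num_steps, seed=0):
    """Stage 1: each step activates one uniformly sampled path.
    The weight update itself is omitted; we only count how often
    every candidate would have been trained."""
    rng = random.Random(seed)
    updates = [[0] * len(block) for block in supernet]
    for _ in range(num_steps):
        for layer, choice in enumerate(sample_single_path(supernet, rng)):
            updates[layer][choice] += 1
    return updates

updates = supernet_training(SUPERNET, num_steps=1000)
```

With uniform sampling every candidate receives roughly the same number of updates, so no architecture is favored before the separate search stage begins.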

Choice blocks for a single path supernet

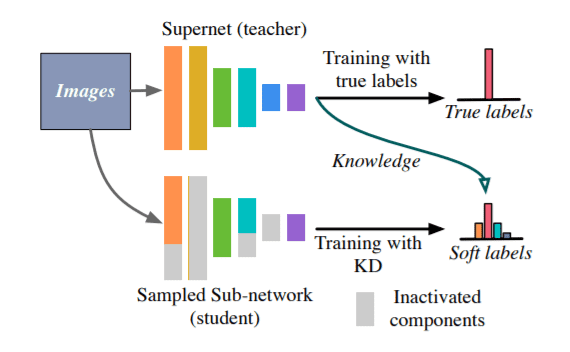

AlphaNet builds on top of earlier works (Liu et al.), which used in-place knowledge distillation (KD) to tackle NAS. By distilling the knowledge of the supernet (teacher), they can greatly improve the performance of the sub-networks (students). Standard KD is usually formulated with the KL divergence, which quantifies the difference between the teacher and the students. AlphaNet takes it a step further and applies the alpha-divergence to the problem, improving the training of the supernet. At the time of writing this article, AlphaNet ranks among the top models by top-1 accuracy on the ImageNet dataset, according to the benchmarks shown on paperswithcode.com.
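To see how the two divergences relate, the sketch below computes the KL divergence and one common parameterization of the alpha-divergence for a pair of made-up teacher and student distributions. It is not the exact loss used by AlphaNet, whose details are not reproduced here.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions with positive entries."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

def alpha_divergence(p, q, alpha):
    """One common parameterization of the alpha-divergence.
    Assumes alpha is neither 0 nor 1 and that p and q each sum to 1."""
    total = sum(qi * ((pi / qi) ** alpha - 1.0) for pi, qi in zip(p, q))
    return total / (alpha * (alpha - 1.0))

teacher = [0.7, 0.2, 0.1]   # hypothetical supernet (teacher) class probabilities
student = [0.5, 0.3, 0.2]   # hypothetical sub-network (student) class probabilities

kl = kl_divergence(teacher, student)
# As alpha approaches 1, this alpha-divergence approaches KL(teacher || student).
near_kl = alpha_divergence(teacher, student, alpha=1.0001)
```

Different values of alpha change how strongly the loss penalizes a student that over- or under-estimates the teacher’s uncertainty, which is the extra degree of freedom that the KL divergence alone does not offer.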

An illustration of training a supernet with knowledge distillation

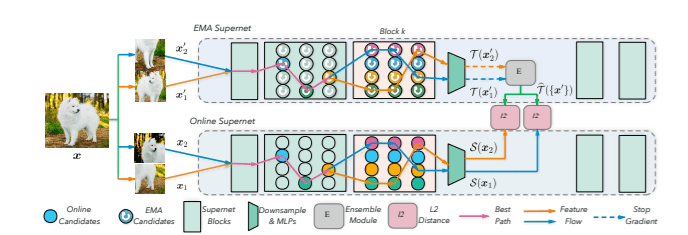

Another top-ranked model is BossNAS. BossNAS (Block-wisely Self-supervised Neural Architecture Search) adopts a novel self-supervised representation learning scheme called ensemble bootstrapping. The authors first factorize the search space into blocks. It is worth mentioning that the original work focuses only on vision models and uses a combination of CNN and transformer blocks.

The supernet is also trained with self-supervision, in a fashion similar to BYOL. Two Siamese supernetworks learn the representation using a pair of augmented views of the same image and minimizing the distance between their outputs. The subnetworks are trained to predict the probability ensemble of all the sampled ones in the supernet. The probability ensemble is used as the evaluation metric of the sampled models.

Illustration of two Siamese supernets training

Neural Architecture Transfer

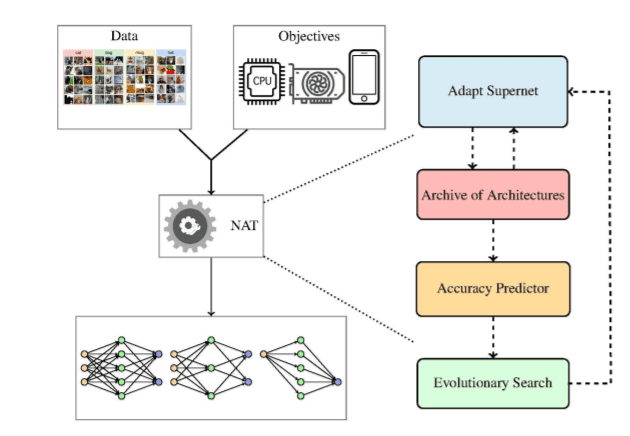

The authors of NAT (Neural Architecture Transfer) had the idea of using transfer learning in the context of NAS by transferring an existing supernet into a task-specific supernet. At the same time, they also search for architectures that better satisfy the multiple objectives of interest, thus combining transfer learning with the search process.

Multi-objective refers to the presence of multiple conflicting objectives. In multi-objective problems, the algorithms should account for all objectives by offering the best trade-offs between them. In the context of NAS, examples of objectives include inference time restrictions, memory capacity, size of the final model, etc. Multi-objective NAS is a research area that is evolving rapidly.
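The notion of “best trade-offs” is usually formalized as the Pareto front: the set of models that no other model beats on every objective. A minimal sketch with two objectives (maximize accuracy, minimize latency) follows; the candidate models and their numbers are made up for illustration.

```python
def dominates(a, b):
    """True if model `a` is at least as good as `b` on both objectives
    and strictly better on at least one of them."""
    no_worse = a["accuracy"] >= b["accuracy"] and a["latency_ms"] <= b["latency_ms"]
    better = a["accuracy"] > b["accuracy"] or a["latency_ms"] < b["latency_ms"]
    return no_worse and better

def pareto_front(models):
    """Keep only the models that are not dominated by any other model."""
    return [m for m in models if not any(dominates(other, m) for other in models)]

# Hypothetical candidates; the numbers are invented for this example.
candidates = [
    {"name": "A", "accuracy": 0.76, "latency_ms": 30},
    {"name": "B", "accuracy": 0.74, "latency_ms": 18},
    {"name": "C", "accuracy": 0.73, "latency_ms": 25},   # dominated by B
    {"name": "D", "accuracy": 0.70, "latency_ms": 10},
]
front = [m["name"] for m in pareto_front(candidates)]
```

Here model C is discarded because B is both more accurate and faster, while A, B and D each represent a different trade-off and all stay on the front.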

NAT is divided into three components: a) an accuracy predictor, b) an evolutionary search process and c) a supernet. The whole process goes as follows:

- Starting from a list of trained supernets, they uniformly sample a set of them.
- The performance of the sampled supernets is evaluated.
- The accuracy predictor is then constructed with the goal of driving the search, taking into account the multiple objectives.
- The evolutionary search proposes a set of promising architectures.
- The architectures are added back to the original list of supernets and the process iterates until a termination condition is satisfied.

Illustration of the Neural Architecture Transfer process

Implementation with neural network intelligence (nni)

For better understanding, we will also present an example implementation of NAS using the neural network intelligence (nni) package by Microsoft. There are other AutoML libraries out there that support NAS, such as AutoKeras, Auto-PyTorch and AutoGluon, but nni is by far the most complete and well maintained. nni supports a variety of methods. Examples include PPO-based methods, AmoebaNet, ENAS, DARTS, ProxylessNAS, FBNet, SPOS and more.

Since one-shot models consistently outperform other techniques and are the main research direction at the moment, we will showcase how to execute them with nni. The simplest approach is the DARTS algorithm.

The showcase code can be found in this colab notebook. Note that it is heavily inspired by the official examples and tutorials of the nni library.

DARTS uses a supergraph as its search space. Each cell is regarded as a DAG and the cells are combined into a multi-path supermodel. To declare a cell, nni provides the LayerChoice module, which accepts multiple standard PyTorch modules (convolutional, pooling, etc.). That way, it defines multiple paths inside the supermodel. After training, only one path will be chosen to form the optimal architecture.

self.conv1 = nn.LayerChoice([nn.Conv2d(3, 6, 3, padding=1), nn.Conv2d(3, 6, 5, padding=2)])

Another option is to use the InputChoice module, which is primarily used to explore different connections. In our example, we will use skip connections. The final architecture will either keep the skip connection or not.

self.skipconnect = nn.InputChoice(n_candidates=2)

x1 = self.skipconnect([x1, x1+x0])

The supergraph can be defined as a typical PyTorch model, but instead of using the original PyTorch package, we need to use the one provided by nni. An example can be found below:

import torch.nn.functional as F
import nni.retiarii.nn.pytorch as nn  # nni's drop-in replacement for torch.nn

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        # Searchable layer: 3x3 or 5x5 convolution
        self.conv1 = nn.LayerChoice([nn.Conv2d(3, 6, 3, padding=1), nn.Conv2d(3, 6, 5, padding=2)])
        self.pool = nn.MaxPool2d(2, 2)
        # Searchable layer: 3x3 or 5x5 convolution
        self.conv2 = nn.LayerChoice([nn.Conv2d(6, 16, 3, padding=1), nn.Conv2d(6, 16, 5, padding=2)])
        self.conv3 = nn.Conv2d(16, 16, 1)
        # Searchable connection: with or without the skip connection
        self.skipconnect = nn.InputChoice(n_candidates=2)
        self.bn = nn.BatchNorm2d(16)
        self.gap = nn.AdaptiveAvgPool2d(4)
        self.fc1 = nn.Linear(16 * 4 * 4, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        bs = x.size(0)
        x = self.pool(F.relu(self.conv1(x)))
        x0 = F.relu(self.conv2(x))
        x1 = F.relu(self.conv3(x0))
        # Choose between the plain output and the output plus the skip connection
        x1 = self.skipconnect([x1, x1+x0])
        x = self.pool(self.bn(x1))
        x = self.gap(x).view(bs, -1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

model = Net()

Once we declare the supergraph, we can define the NAS training using the DartsTrainer class. DARTS requires a metric to evaluate each candidate architecture as well as typical training arguments (optimizer, loss, number of epochs, etc.). In a way, it is similar to training a standard deep network.

import torch
from nni.retiarii.oneshot.pytorch import DartsTrainer

def accuracy(output, target):
    # Fraction of correct top-1 predictions in the batch
    batch_size = target.size(0)
    _, predicted = torch.max(output.data, 1)
    return {"acc1": (predicted == target).sum().item() / batch_size}

criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

# train_dataset is assumed to be defined beforehand (it is not shown in this article)
trainer = DartsTrainer(
    model=model,
    loss=criterion,
    metrics=lambda output, target: accuracy(output, target),
    optimizer=optimizer,
    num_epochs=1,
    dataset=train_dataset,
    batch_size=64,
    log_frequency=10
)

trainer.fit()

After training, we can obtain the optimal architecture. The nni API will present it in the form of “mutations”. The mutations show which cell topology DARTS decided to keep. For each cell, we will get a mutation showing the best topology.

In our case, the first conv layer will be formed by the first declared block (nn.Conv2d(3, 6, 3, padding=1)) while the second by the second (nn.Conv2d(6, 16, 5, padding=2)). Skip connections were also found to provide better results, so we are keeping them (mutation_3).

print('Final architecture:', trainer.export())

nni is much richer than we are able to show in a single article, so we highly recommend playing around with it.

Note that the first part of the Machine Learning Modeling Pipelines in Production course by Robert Crowe is entirely dedicated to NAS, so make sure to check it out.

Conclusion

NAS research is still in its infancy, in my personal opinion. Benchmarking is also not a trivial endeavor. At the moment, we have some standard benchmarks such as ImageNet, NAS-Bench-201 and CIFAR10, but more work is definitely needed in this direction. Finally, open source libraries, excluding nni, are barely touching NAS as a problem.

I hope that this overview serves as an introduction and will encourage more practitioners to pursue NAS as their research field. For any questions, possible mistakes or additions, feel free to ping us on our Discord server.

Cite as

@article{karagiannakos2022nas,
    title   = "Neural Architecture Search (NAS): basic principles and different approaches",
    author  = "Karagiannakos, Sergios",
    journal = "https://theaisummer.com/",
    year    = "2021",
    howpublished = {https://theaisummer.com/neural-architecture-search/},
}
