Nowadays, transfer learning from models pretrained on ImageNet is the ultimate standard in computer vision. Self-supervised learning dominates natural language processing, but this doesn’t mean that there are no significant use cases in computer vision where it should be considered. There are indeed a lot of cool self-supervised tasks that one can devise when dealing with images, such as jigsaw puzzles [6], image colorization, image inpainting, or even unsupervised image synthesis.

But what happens when the time dimension comes into play? How can you approach the video-based tasks that you would like to solve?

So, let’s start from the beginning, one concept at a time. What is self-supervised learning? And how is it different from transfer learning? What is a pretext task?

Self-supervised learning VS transfer learning

Transfer learning is a great point to start.

Transfer learning allows us to exploit the weights of a (convolutional) neural network trained on task A and apply them to another task (B), given that the input domains are somehow related.

The training process on task A is called pretraining in machine learning terminology. The core idea behind this is that the acquired ‘knowledge’ of task A may be exploited to enhance generalization on task B. And this is usually the case, because who wants to start from a random weight initialization?

Obviously, transfer learning is one way to maximize the performance of your model, by pretraining on a similar supervised (video) dataset. However, in domains such as videos, the problem of annotation (supervision) quickly arises. It is difficult to find annotated video data from which to transfer weights.

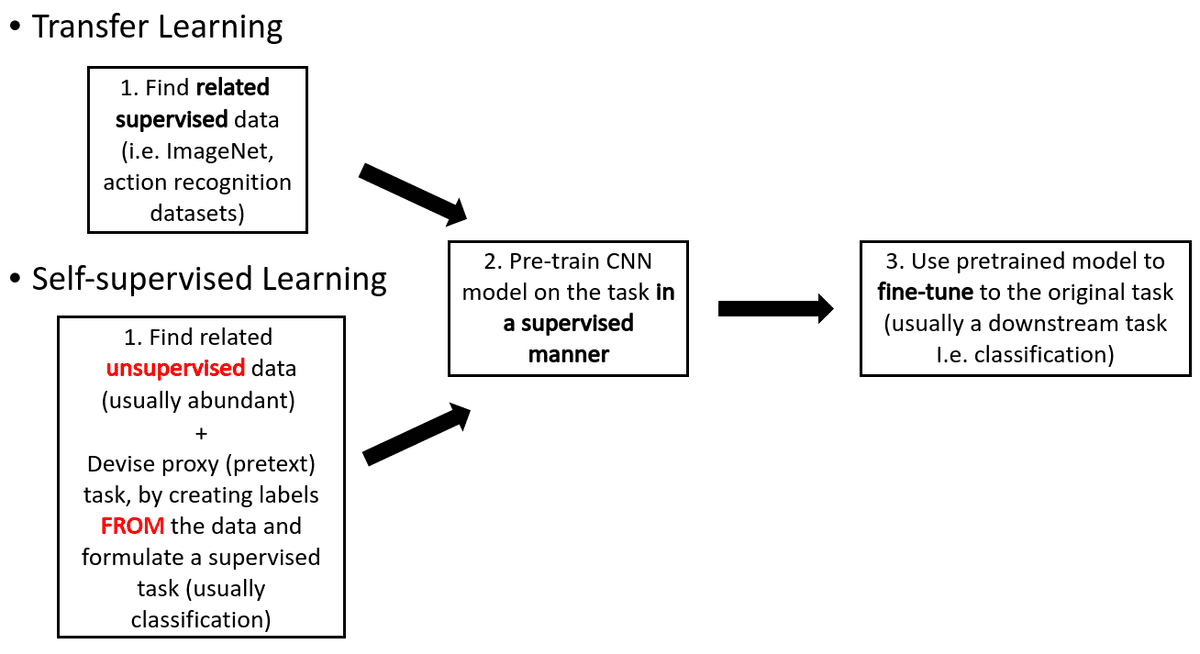

But even before that, let’s clarify the inherent differences between self-supervised learning and transfer learning. I created this simple diagram to make things crystal clear:

Self-supervised VS transfer learning

As a solution for supervision-deprived domains, self-supervised learning is one way to transfer weights, by pretraining your model on labels that are artificially produced from the data/videos.

This can be accomplished with a bunch of different (and sometimes tricky) transformations, as we will see. This is exactly what we call self-supervised representation learning.

Note that for self-supervised learning you can even use just your own data, but without the provided labels.

In essence, in self-supervised learning we try to devise a related task A so that transferring its weights to task B gives a better initialization point than random.

Notations, concepts and example tasks

The devised self-supervised task A is usually called the pretext or proxy task, while the desired task B that we want to solve is referred to in the literature as the downstream task. I usually refer to it as the original task as well.

Back in the world of videos, video-based learning falls into the category of sequential learning. These approaches can be broadly divided into two classes: sequence prediction and sequence verification. Moreover, (image) tuples refer to a group of frames of a video that is used as the input to the deep learning architecture. The terms Siamese models and multi-branch models are used interchangeably in the different papers: the same network, with shared weights, processes each input independently. You can simply think of it as several forward passes of the Siamese model being performed before a single backward pass, while the extracted features are fused in the subsequent layers. As a result, the loss that is backpropagated takes into account all the forward passes.
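To make the shared-weight idea concrete, here is a minimal sketch in plain Python. The toy one-layer `make_shared_encoder` is a hypothetical stand-in for a CNN branch; the point is only that every input frame goes through the same function with the same weights, and the per-frame features are then fused (here by concatenation) before any classifier. In a real deep learning framework, the loss computed on the fused vector would be backpropagated once, and the gradients from all branches would accumulate in the single set of shared weights.

```python
import random

def make_shared_encoder(in_dim, out_dim, seed=0):
    """Hypothetical toy encoder (one linear layer + ReLU) standing in for a CNN branch."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]

    def encode(x):
        # Every branch calls this same closure, so all branches share `weights`.
        return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]

    return encode

def siamese_forward(frames, encoder):
    """One forward pass per input frame, then fuse the features by concatenation."""
    per_frame = [encoder(frame) for frame in frames]           # independent branches
    fused = [value for feats in per_frame for value in feats]  # concatenation fusion
    return per_frame, fused

encoder = make_shared_encoder(in_dim=4, out_dim=3)
frames = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, 0.2], [0.9, 0.8, 0.7, 0.6]]
per_frame, fused = siamese_forward(frames, encoder)
print(len(per_frame), len(fused))  # 3 9
```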

Now we have a clear high-level concept of self-supervision. Let’s see why it is important, especially on video datasets!

Why Self-Supervised learning?

First of all, you cannot create a new video dataset (or any kind of dataset) for each particular task. Video annotations are also expensive and time-consuming. Secondly, in a domain such as medical imaging, it is hard to obtain any expert annotation at all. On the other hand, hundreds of thousands of hours of unlabeled videos are uploaded daily on YouTube! If you are still not convinced that self-supervised learning is an awesome direction, let’s consult the experts:

“Most of what we learn as humans and most of what animals learn is in a self-supervised mode, not a reinforcement mode. It’s basically observing the world and interacting with it a little bit, mostly by observation in a test-independent way.” ~ Yann LeCun, Director of Facebook AI Research (FAIR)

Now I believe that you are convinced. However, keep in mind that every exciting idea comes with its assumptions and caveats. But before that, let’s clarify our terminology first.

Moving on, what is the core assumption of self-supervised learning on videos?

Intuitively, we argue that successfully solving the “pretext” task A will allow our model to learn useful visual representations that recover the temporal coherence of a video, or, more generally, to learn from the statistical temporal structure of videos.

One example of temporal consistency might be observing how objects (cars, humans) move in the scene.

Some of the questions that may come to your mind, as thoroughly described by Misra et al. [1]:

-

“How does the model learn from the spatiotemporal structure present in the video without using supervised semantic labels?”

-

“Are the representations learned using the unsupervised/self-supervised spatiotemporal information present in videos meaningful?”

-

“And finally, are these representations complementary to those learned from strongly supervised image data?”

We will answer these questions by examining different approaches.

But even before that, how does one design a self-supervised task?

In short, a good self-supervised task is neither simple nor ambiguous [6].

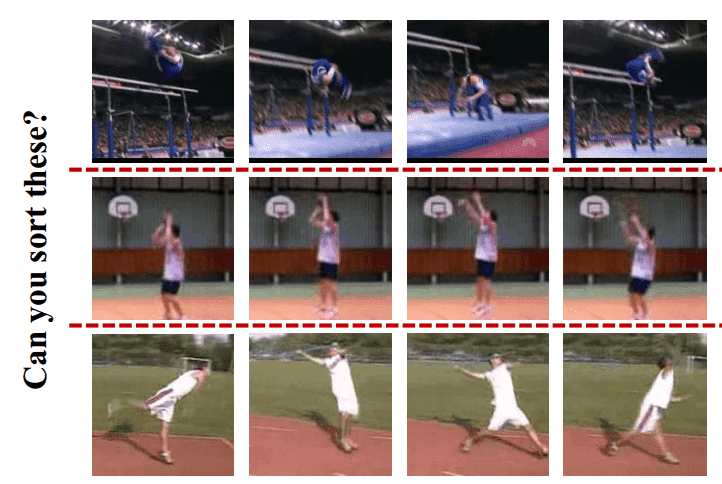

Another important factor to keep in mind is whether humans can solve the pretext task. For instance, try to understand the sequence of the frames below. With a little bit of focus on the relative poses and some speculation about “how a person moves”, we can predict the chronological order of these frames.

Taken from the original work [2]

Moreover, we need to choose something that, if solved, would require an understanding of our data. That’s why it falls into the category of representation learning anyway.

Insight: the key idea is to leverage the inherent structure of raw images and either formulate the problem as discriminative (classification) or introduce a reconstruction loss function to train the network.

That being said, I hope you are ready to briefly examine the most influential papers on self-supervised representation learning on videos.

Shuffle and Learn: Unsupervised Learning using Temporal Order Verification, ECCV 2016

This is one of the first works, introduced by Misra et al. [1]. They formulate their pretext task as a sequence verification problem.

In (sequential) verification, one predicts the ‘temporal validity’ of the sequence.

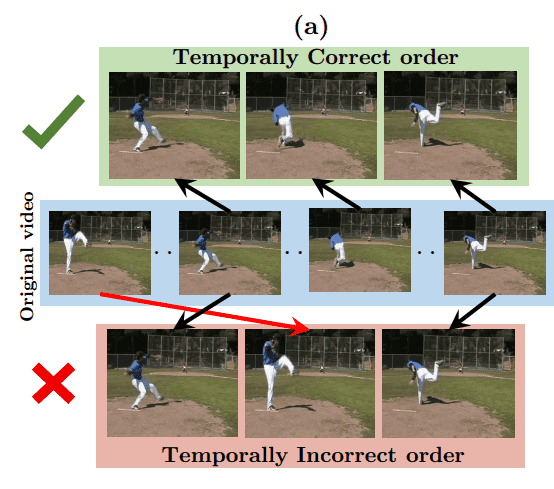

In this work, the authors explore the task of deciding whether a sequence of video frames is in the correct temporal order. To do so, they had to use a scheme for sampling frames from the videos. The reason this is crucial is, of course, the computational complexity.

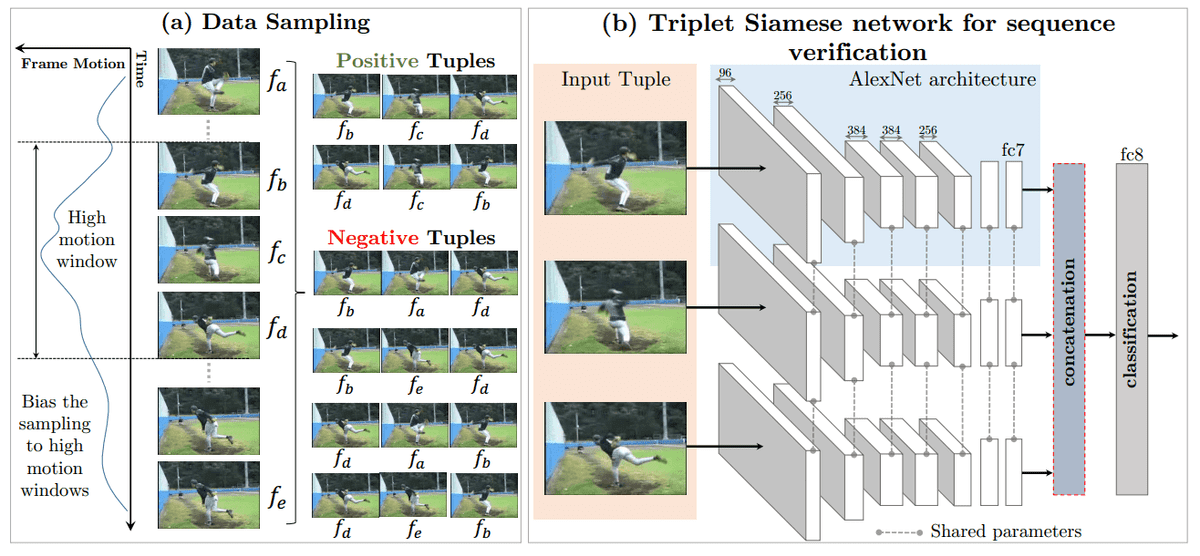

To this end, they sampled images with high motion, based on the mean optical flow magnitude per frame. To create positive and negative tuples, samples of 5 frames were used to build the inputs. Positive samples correspond to the correct order, while negative ones refer to frames in the wrong order. The following image further illustrates the difference.

An example of video shuffling, with positive and negative examples. Taken from the original work [1]
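The motion-based frame selection can be sketched as a simple top-k rule. This assumes the per-frame mean optical flow magnitudes are already computed by some optical flow method; the function name and the top-k criterion are illustrative simplifications, and the exact selection procedure in [1] may differ.

```python
def select_high_motion_frames(flow_magnitudes, k):
    """Return the indices of the k frames with the largest mean optical-flow
    magnitude, re-sorted into temporal order.

    flow_magnitudes[t] is assumed to be the precomputed mean flow magnitude of frame t.
    """
    if not 0 < k <= len(flow_magnitudes):
        raise ValueError("k must be between 1 and the number of frames")
    by_motion = sorted(range(len(flow_magnitudes)),
                       key=lambda t: flow_magnitudes[t], reverse=True)
    return sorted(by_motion[:k])  # keep the chosen frames in temporal order

magnitudes = [0.1, 2.5, 0.3, 3.1, 2.9, 0.2, 1.8, 0.05]
print(select_high_motion_frames(magnitudes, k=5))  # [1, 2, 3, 4, 6]
```

Re-sorting the selected indices matters here: the downstream task is about temporal order, so the selection step itself should not scramble the frames.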

Training trick: During training, the authors used the same beginning and ending frame, while changing only the middle frame, for both positive and negative examples. As a consequence, the network is encouraged to focus on this signal to learn the subtle difference between positives and negatives, rather than irrelevant features.
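A minimal sketch of this tuple construction, assuming (as we read [1]) five frames a < b < c < d < e sampled from a high-motion window: the positive tuple keeps the true middle frame c, while the negatives place an out-of-order frame (a or e) between the same start and end frames. Frame indices stand in for actual images, and the helper name is hypothetical.

```python
def make_verification_tuples(a, b, c, d, e):
    """Build 3-frame tuples from five frames sampled in temporal order (a < b < c < d < e).

    The first (b) and last (d) frames are fixed; only the middle frame changes,
    so the network must look at that frame to tell positives from negatives.
    """
    positive = (b, c, d)                 # correct temporal order -> label 1
    negatives = [(b, a, d), (b, e, d)]   # wrong middle frame -> label 0
    return [(positive, 1)] + [(neg, 0) for neg in negatives]

for frames, label in make_verification_tuples(10, 20, 30, 40, 50):
    print(frames, label)
# (20, 30, 40) 1
# (20, 10, 40) 0
# (20, 50, 40) 0
```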

An overview of the proposed network architecture of [1]. The image is taken from the original work.

Based on the architecture scheme, it is worth noting that each frame is processed independently by AlexNet, while the output image features are concatenated for the final classification.

Finally, they used about 900k image tuples, with a controlled ratio of positive and negative instances in each mini-batch. As it turned out, a larger proportion of negative examples (75%) is important for order verification.
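The mini-batch composition can be sketched as below. `compose_batch` is a hypothetical helper: it fixes the share of negative tuples at the 75% reported above and fills the rest of the batch from the positive pool.

```python
import random

def compose_batch(positives, negatives, batch_size, neg_fraction=0.75, seed=None):
    """Draw one mini-batch with a fixed share of negative tuples (75% by default).

    positives/negatives are pools of already-built tuples; labels are 1 and 0.
    """
    rng = random.Random(seed)
    n_neg = round(batch_size * neg_fraction)
    n_pos = batch_size - n_neg
    batch = [(t, 1) for t in rng.sample(positives, n_pos)]
    batch += [(t, 0) for t in rng.sample(negatives, n_neg)]
    rng.shuffle(batch)  # mix positives and negatives within the batch
    return batch

pos_pool = [("pos", i) for i in range(10)]
neg_pool = [("neg", i) for i in range(20)]
batch = compose_batch(pos_pool, neg_pool, batch_size=8, seed=0)
print(sum(label for _, label in batch), len(batch))  # 2 8
```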

Results and discussion

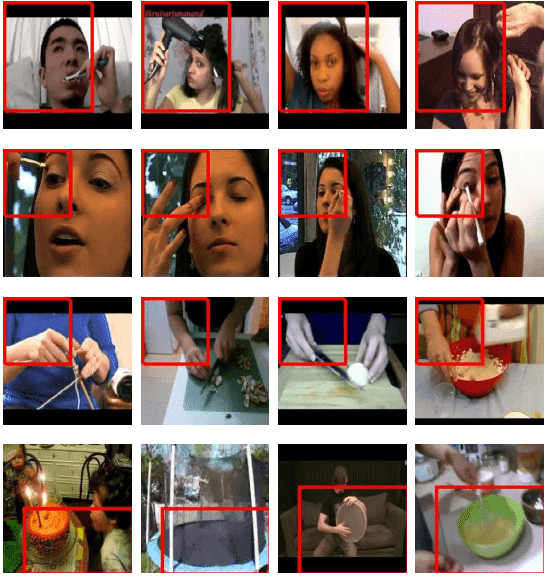

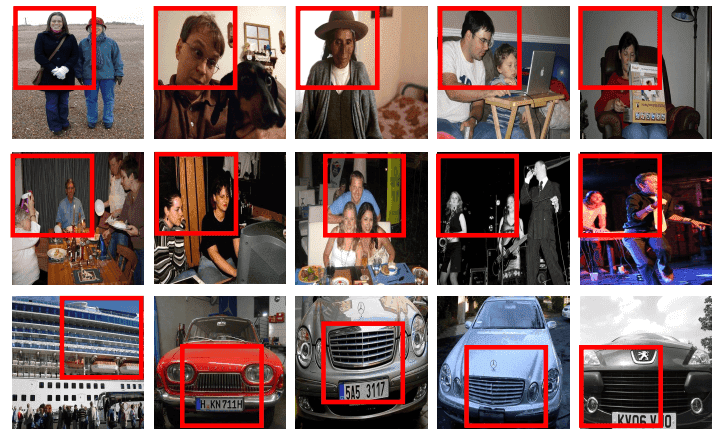

The question that was posed was: what does the model really learn from the temporal structure? In the following image, the authors claim to show the receptive fields (marked in red boxes) for these units. As the network is trained on human action recognition videos, many units show a preference for human body parts and pose, since these are related to high motion. Although it is referred to in the paper as a receptive field, in reality this is the feature activation of the layer.

Visualizing activations, taken from [1]

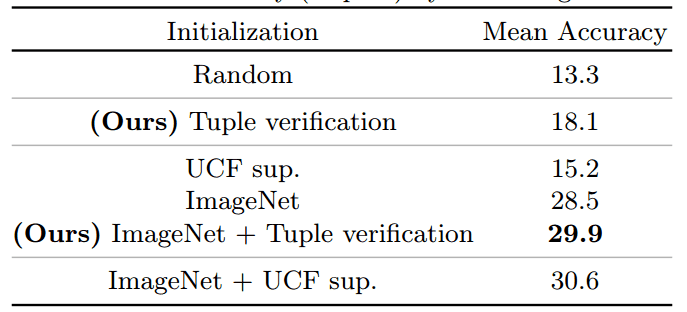

Numerically, by combining this scheme with ImageNet pretrained weights, we get almost the same mean accuracy as with supervision from videos, as illustrated below (UCF sup. is the supervised database with action videos):

Results on action recognition, borrowed from [1]

Finally, the presented results suggest that sequence verification requires an understanding of the video-based task at hand (action recognition).

Unsupervised Representation Learning by Sorting Sequences, ICCV 2017

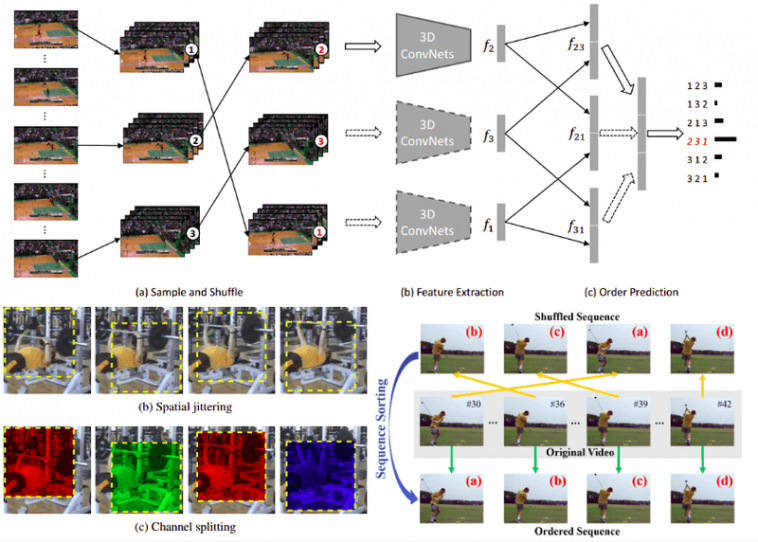

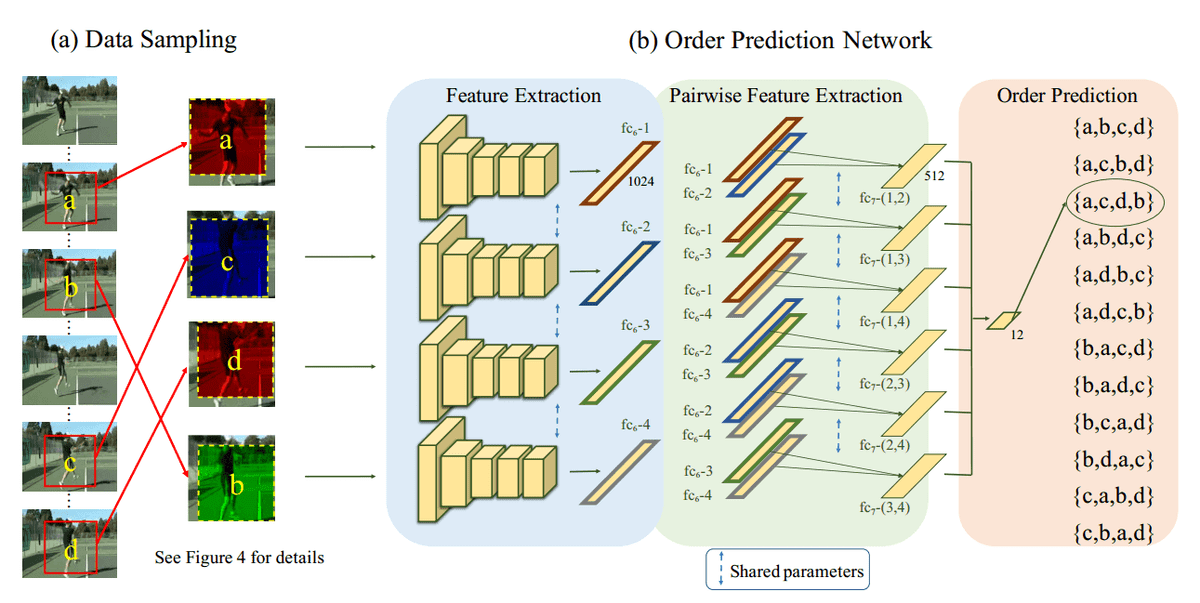

Core contribution: Lee et al. 2017 [2] propose an Order Prediction Network (OPN) architecture to solve the sequence sorting task by pairwise feature extraction.

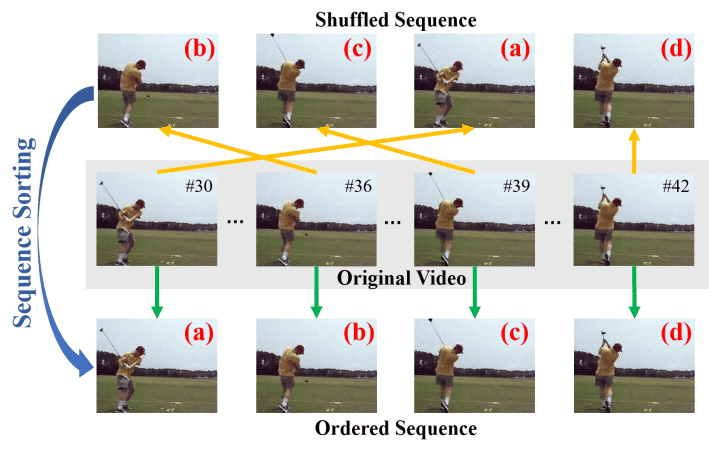

Extending the previous work, we try to sort the shuffled image sequence. If verification requires an understanding of the statistical temporal structure of images, one can probably guess that sorting images will provide richer and more generalizable visual representations. To this end, the authors train with such a proxy task, given a tuple of four sampled images, as illustrated below.

An example of sequence sampling and sorting, borrowed from [2]

For a tuple with n frames, there are n! (n factorial) possible orderings. In practice, the authors used 4 randomly shuffled frames. Similar to the jigsaw puzzle problem [6], they cast the problem as multi-class classification. For each tuple of 4 frames, there are 24 possible permutations. However, forward and backward permutations can be cast as one class, as both are plausible solutions for the action, resulting in 12 classes in total.
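The 12-class label space can be derived mechanically: enumerate all 4! = 24 permutations and give each permutation and its reverse the same class id. This is a sketch of the counting argument above, not the authors’ code; using `min(perm, reversed perm)` as the shared key is just one convenient choice.

```python
from itertools import permutations

def build_order_classes(n=4):
    """Map each of the n! frame permutations to a class id, merging every
    permutation with its reverse (forward and backward orders share a class)."""
    class_ids = {}
    mapping = {}
    for perm in permutations(range(n)):
        canonical = min(perm, perm[::-1])  # same key for a permutation and its reverse
        if canonical not in class_ids:
            class_ids[canonical] = len(class_ids)
        mapping[perm] = class_ids[canonical]
    return mapping

classes = build_order_classes(4)
print(len(classes), len(set(classes.values())))        # 24 12
print(classes[(0, 1, 2, 3)] == classes[(3, 2, 1, 0)])  # True
```

For 4 distinct frames no permutation equals its own reverse, so every class contains exactly two permutations, which is why 24 collapses to exactly 12.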

Training data sampling

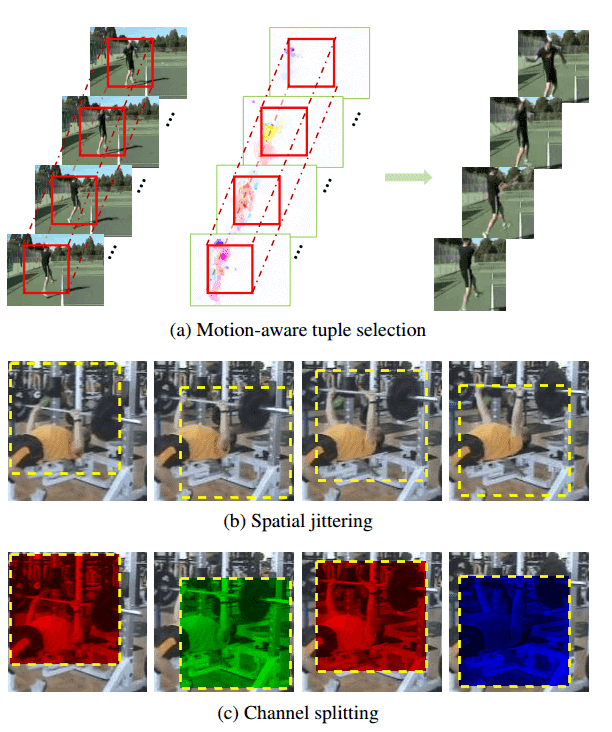

Similar to other works, they sampled frames based on motion magnitude. In addition to using the optical flow magnitude for frame selection, they further select spatial patches with large motion (a in the figure). Plus, they apply spatial jittering to the already extracted cropped images (shown as b in the figure). Moreover, they introduce a new tactic called channel splitting (shown as c). This is roughly similar to using grayscale images, but it performs better according to their experimental analysis. Specifically, they choose one channel and duplicate its values to the other two channels of the RGB image. As a result, the network is guided to focus on the semantics of the images rather than on low-level features.

Training sampling tricks, taken from [2]
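Channel splitting itself takes only a few lines. To stay dependency-free, the sketch below assumes an image stored as three 2-D lists (R, G, B) rather than a real image tensor; the function name is hypothetical.

```python
import random

def channel_split(image, channel=None, rng=random):
    """Copy one colour channel into all three channels of an RGB image.

    image is assumed to be a list of three 2-D lists (R, G, B).
    If no channel is given, one is picked at random.
    """
    if channel is None:
        channel = rng.randrange(3)
    source = image[channel]
    return [[list(row) for row in source] for _ in range(3)]  # fresh copies

rgb = [[[1, 2], [3, 4]], [[5, 6], [7, 8]], [[9, 10], [11, 12]]]
print(channel_split(rgb, channel=1))
# [[[5, 6], [7, 8]], [[5, 6], [7, 8]], [[5, 6], [7, 8]]]
```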

Model architecture, data, and some results

Similar to [1], they produce image features with a typical convolutional neural network. In the literature, this is called a Siamese architecture. Again, each frame is processed independently by the same model (usually called multi-branch, to be fancier), with the parameters shared across branches. Another crucial point is the pairwise feature extraction, as shown in the overview of the architecture. As illustrated below, the fc6 features are used to form pairs of frame features. These allow some kind of fusion, or in other words, they take into account the pairwise relationships between features.

Siamese model architecture with pairwise feature extraction
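The pair formation step can be sketched as follows. Each unordered pair of per-frame feature vectors (toy lists standing in for fc6 outputs) is concatenated. In the OPN these pair vectors are processed further before the final 12-way classification; this sketch leaves that part out.

```python
from itertools import combinations

def pairwise_features(frame_feats):
    """Concatenate the feature vectors of every unordered frame pair (i < j).

    For 4 frames this gives C(4, 2) = 6 pair vectors.
    """
    return [frame_feats[i] + frame_feats[j]
            for i, j in combinations(range(len(frame_feats)), 2)]

fc6 = [[0, 0], [1, 1], [2, 2], [3, 3]]  # toy per-frame features
pairs = pairwise_features(fc6)
print(len(pairs), pairs[0], pairs[-1])  # 6 [0, 0, 1, 1] [2, 2, 3, 3]
```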

Finally, they extracted 280k tuples from the ~30K UCF-101 action recognition dataset as training data, with a relatively large batch size of 128. The input patches were 80×80. It is worth noting that one possible reason for the poor performance of larger patches might be the insufficient amount of training data.

Some illustrations of the feature activations can be seen below. It can be observed that the feature activations correspond to human heads and object parts.

The activations, as taken from [2]

Self-Supervised Video Representation Learning With Odd-One-Out Networks, CVPR 2017

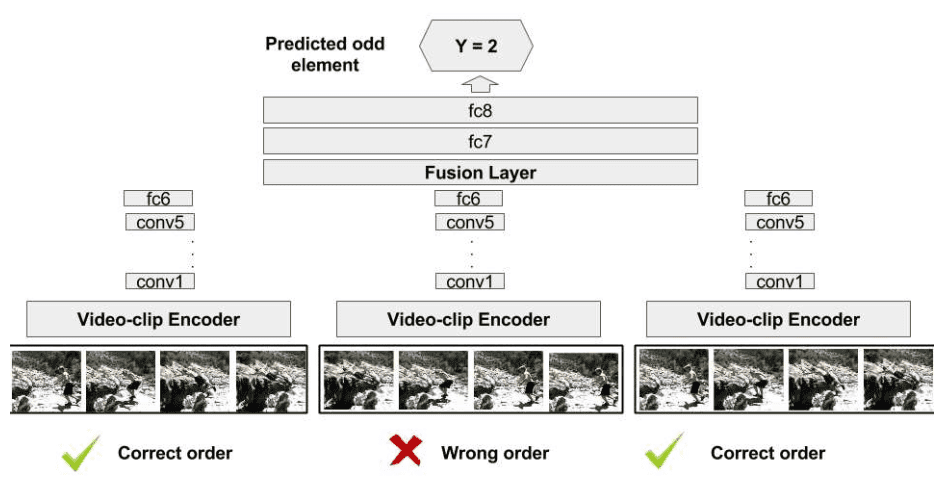

Let’s start with the task that was devised, called Odd-One-Out. Briefly, the goal of odd-one-out learning is to predict the odd (unrelated) element from a set of otherwise related elements.

Formally, a set of several related elements together with a single odd element forms a question, and the model must predict which element is the odd one.
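To make the question format concrete, here is a hypothetical generator. It assumes, following our reading of [3], that the related elements are frame subsequences kept in temporal order and that the odd element is a subsequence whose frames are shuffled; the parameter values (five related elements of six frames each) are arbitrary choices for illustration.

```python
import random

def make_odd_one_out_question(video_len, n_related=5, clip_len=6, rng=None):
    """Build one question of n_related + 1 frame-index subsequences.

    n_related subsequences keep their temporal order; the odd one has its
    frames shuffled. Returns (elements, odd_index); odd_index is the label.
    """
    if clip_len < 2 or video_len < clip_len:
        raise ValueError("need clip_len >= 2 and video_len >= clip_len")
    rng = rng or random.Random()
    elements = []
    for _ in range(n_related + 1):
        start = rng.randrange(video_len - clip_len + 1)
        elements.append(list(range(start, start + clip_len)))
    odd_index = rng.randrange(n_related + 1)
    odd = list(elements[odd_index])
    while odd == elements[odd_index]:  # reshuffle until the order actually changes
        rng.shuffle(odd)
    elements[odd_index] = odd
    return elements, odd_index

elements, label = make_odd_one_out_question(video_len=100, rng=random.Random(0))
print(len(elements), label)
```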

Model architecture

The prediction model is a multi-branch CNN, called an odd-one-out network (O3N). The O3N consists of N + 1 input branches, each containing 5 convolutional layers (weights are shared across the input branches). The odd-one-out task requires a comparison among the N + 1 elements; it cannot be solved by looking at the individual elements alone. As a result, they further introduce a fusion layer that merges the information from the different branches. Finally, they experimented with two types of fusion: concatenation and sum of differences.

O3N model overview [3]
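The two fusion options can be sketched on plain feature vectors. `sum_of_differences_fusion` is our reading of the sum-of-differences idea (an element-wise sum of f_j - f_i over all branch pairs i < j); the exact formulation in [3] may differ, for example in sign or in which pairs are included.

```python
from itertools import combinations

def concat_fusion(branch_feats):
    """Concatenate the N + 1 branch features; output size grows with N."""
    return [v for feats in branch_feats for v in feats]

def sum_of_differences_fusion(branch_feats):
    """Element-wise sum of f_j - f_i over all branch pairs i < j.

    Output size equals a single branch's feature size, whatever N is.
    """
    dim = len(branch_feats[0])
    fused = [0.0] * dim
    for i, j in combinations(range(len(branch_feats)), 2):
        for k in range(dim):
            fused[k] += branch_feats[j][k] - branch_feats[i][k]
    return fused

feats = [[1.0, 0.0], [2.0, 1.0], [4.0, 1.0]]
print(concat_fusion(feats))              # [1.0, 0.0, 2.0, 1.0, 4.0, 1.0]
print(sum_of_differences_fusion(feats))  # [6.0, 2.0]
```

The practical difference: concatenation grows with the number of branches, while the difference sum keeps the size of a single branch feature and directly encodes how the branches differ from each other.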

Self-supervised Spatiotemporal Learning via Video Clip Order Prediction, CVPR 2019

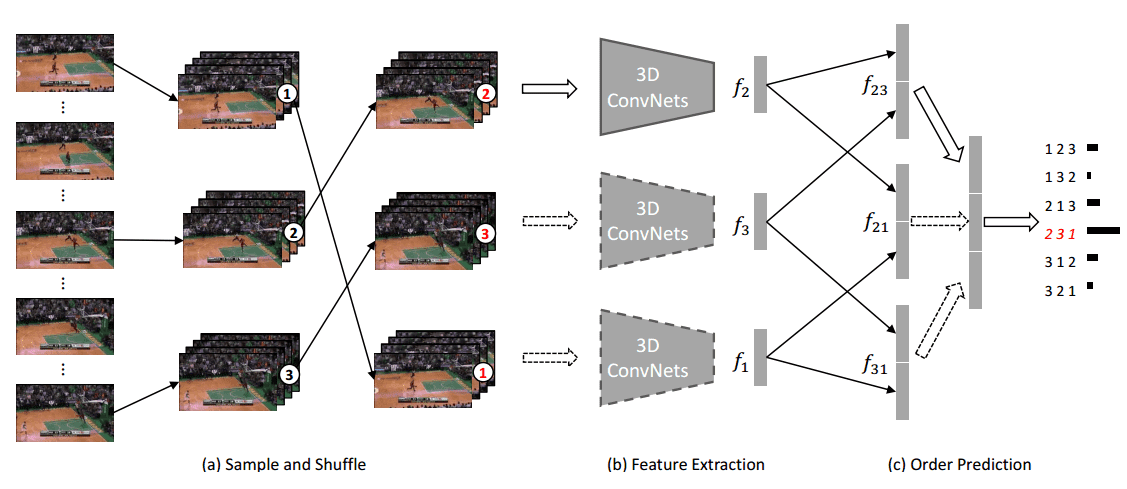

Again, Xu et al. 2019 [4] formulate the task of order prediction as a classification problem. Going beyond previous works, they integrate 3D CNNs by producing independent features of short video clips rather than single images. Thus, the task at hand is now called clip order prediction. Their approach can be summarized as follows:

-

First, several fixed-length (16-frame) clips are sampled from the video and shuffled randomly.

-

Then, 3D CNNs with shared weights (Siamese architecture) are used to extract independent features for these clips.

-

Finally, a simple neural network is employed to predict the actual order of the shuffled clips.

The learned 3D CNNs can be used either as a clip feature extractor or as a pretrained model to be fine-tuned for action recognition. To support their ideas, three powerful 3D CNN architectures are evaluated, namely C3D [9], R3D, and R(2+1)D [10] (denoted as 3D ConvNets in the image below). An overview of the proposed architecture is depicted below:

The proposed model architecture [4]

Note: Since clip order prediction is just a proxy task and the goal is to learn video representations, the task should be solvable. For the tuple length, 3 clips per tuple are chosen, with a batch size of 8 tuples. Each video clip consists of 16 frames for the 3D CNNs, while the interval between clips is set to 8 frames, to avoid trivial solutions.
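The sampling described in this note can be sketched as below, using the reported values (3 clips, 16 frames per clip, 8-frame interval) as defaults, with frame indices standing in for frames. The helper name is hypothetical, and treating each of the 3! = 6 permutations as its own class is an assumption made here for illustration.

```python
import random
from itertools import permutations

def sample_clip_tuple(num_frames, n_clips=3, clip_len=16, interval=8, rng=None):
    """Sample n_clips clips of clip_len frames, separated by `interval` frames,
    then shuffle them. Returns (shuffled_clips, label), where label indexes the
    applied permutation among all n_clips! orders."""
    rng = rng or random.Random()
    stride = clip_len + interval
    span = n_clips * clip_len + (n_clips - 1) * interval
    if num_frames < span:
        raise ValueError("video too short for this clip tuple")
    start = rng.randrange(num_frames - span + 1)
    clips = [list(range(start + i * stride, start + i * stride + clip_len))
             for i in range(n_clips)]
    orders = list(permutations(range(n_clips)))  # 3! = 6 classes for 3 clips
    label = rng.randrange(len(orders))
    shuffled = [clips[i] for i in orders[label]]
    return shuffled, label

clips, label = sample_clip_tuple(num_frames=120, rng=random.Random(1))
print([c[0] for c in clips], label)
```

With these defaults a tuple spans 3 × 16 + 2 × 8 = 64 frames, so shorter videos are rejected rather than padded.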

To validate the learned representations, they use nearest neighbor retrieval based on the features produced by the 3D CNNs. To evaluate their approach on action recognition, 10 clips are sampled from each video to obtain clip predictions, which are then averaged to obtain the final video prediction.
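Both evaluation steps reduce to simple operations on probability and feature vectors. The sketch below averages per-clip class probabilities into a video-level prediction and performs nearest neighbor retrieval; cosine similarity is an assumed choice of similarity measure, not necessarily the one used in [4], and the function names are hypothetical.

```python
import math

def video_prediction(clip_probs):
    """Average per-clip class probabilities; return (averaged probs, predicted class)."""
    n_clips, n_classes = len(clip_probs), len(clip_probs[0])
    avg = [sum(p[c] for p in clip_probs) / n_clips for c in range(n_classes)]
    return avg, max(range(n_classes), key=lambda c: avg[c])

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nearest_neighbor(query, gallery):
    """Index of the gallery feature with the highest cosine similarity to the query."""
    return max(range(len(gallery)), key=lambda i: cosine_similarity(query, gallery[i]))

avg, pred = video_prediction([[0.2, 0.8], [0.6, 0.4], [0.1, 0.9]])
print(pred)  # 1
print(nearest_neighbor([1.0, 0.0], [[0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]))  # 1
```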

As a final note, while this study shows promising results, fine-tuning from supervised pretraining on larger datasets such as Kinetics still acts as the best pretraining scheme.

Conclusion

I hope you get the idea by now. We are going to see more and more cool applications of self-supervised learning. To briefly summarize, we took a short dive into self-supervised learning, focusing on videos. We introduced the problems that arise with the additional time dimension and how one can tackle them. Based on the presented works, here are my closing conclusion points:

TL;DR

-

A well-designed self-supervised task is neither simple nor ambiguous. A human should be able to solve it, and optimizing the pretext task should require an understanding of our data that is relevant to our original/downstream task.

-

We argue that successfully solving the “pretext” task will allow our model to learn useful visual representations that recover the temporal coherence of a video.

-

In videos, sequence sampling is really important, as are training tricks (e.g. channel splitting, same start and end frame, etc.).

-

The core idea is to leverage the inherent structure of raw/unlabeled data and formulate a supervised problem, usually classification.

-

Independent feature extraction is applied to the sequence’s elements (referred to as Siamese or shared-weight networks, or multi-branch models).

-

These independent features are usually processed with fully connected layers, where pairwise matching and feature differences seem to work better than simple concatenation.

As additional material, an inspiring presentation that is really worth watching is, again, by Yann LeCun at ICLR 2020. The positive side of virtual conferences, I guess. I really enjoyed watching this one.

For those who want something more advanced, you can move on to Self-Supervised Generative Adversarial Networks, as you know how much we like generative learning at AI Summer. Finally, for a more comprehensive summary of a plethora of self-supervised representation learning methods (not focused on videos), check out this blog post [8] by Lilian Weng.

Cited as:

@article{adaloglou2020sslv,
  title = "Self-supervised representation learning on videos",
  author = "Adaloglou, Nikolas",
  journal = "https://theaisummer.com/",
  year = "2020",
  url = "https://theaisummer.com/self-supervised-learning-videos/"
}

References

[1] Misra, I., Zitnick, C. L., & Hebert, M. (2016, October). Shuffle and learn: unsupervised learning using temporal order verification. In European Conference on Computer Vision (pp. 527-544). Springer, Cham.

[2] Lee, H. Y., Huang, J. B., Singh, M., & Yang, M. H. (2017). Unsupervised representation learning by sorting sequences. In Proceedings of the IEEE International Conference on Computer Vision (pp. 667-676).

[3] Fernando, B., et al. (2017). Self-supervised video representation learning with odd-one-out networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition.

[4] Xu, D., Xiao, J., Zhao, Z., Shao, J., Xie, D., & Zhuang, Y. (2019). Self-supervised spatiotemporal learning via video clip order prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 10334-10343).

[5] Jiao, J., Droste, R., Drukker, L., Papageorghiou, A. T., & Noble, J. A. (2020, April). Self-supervised representation learning for ultrasound video. In 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI) (pp. 1847-1850). IEEE.

[6] Noroozi, M., & Favaro, P. (2016, October). Unsupervised learning of visual representations by solving jigsaw puzzles. In European Conference on Computer Vision (pp. 69-84). Springer, Cham.

[7] Gopnik, A., Meltzoff, A. N., & Kuhl, P. K. (2000). The scientist in the crib: What early learning tells us about the mind. William Morrow Paperbacks.

[8] Weng, L. (2019). [Self-supervised representation learning](https://lilianweng.github.io/lil-log/2019/11/10/self-supervised-learning.html). Lil’Log.

[9] Tran, D., Bourdev, L., Fergus, R., Torresani, L., & Paluri, M. (2015). Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision (pp. 4489-4497).

[10] Tran, D., Wang, H., Torresani, L., Ray, J., LeCun, Y., & Paluri, M. (2018). A closer look at spatiotemporal convolutions for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 6450-6459).
