Deep learning and medical imaging

The rise of deep networks in the field of computer vision provided state-of-the-art solutions to problems on which classical image processing techniques performed poorly. In the general task of image recognition, which includes problems such as object detection, image classification, segmentation, activity recognition, optical flow, and pose estimation, we can easily claim that deep neural networks (DNNs) have achieved superior performance.

Along with this rise in computer vision, there has been a lot of interest in applying deep networks to medical imaging. Even though medical imaging data are not easy to obtain, DNNs seem to be an ideal candidate for modelling such complex and high-dimensional data.

Recently, Imperial College London launched a course on COVID-19. Many researchers have already attempted to detect COVID-19 automatically from 3D CT scans using deep networks. Although application-specific data are still not widely available, it is clear that AI will hugely impact the evolution of medicine through medical imaging.

As we will see, a medical image is often three- or four-dimensional. Another reason this field attracts a lot of attention is its direct impact on human lives. Medical errors are the third-leading cause of death in the USA, after heart disease and cancer. Consequently, the three leading causes of death are all related to medical imaging. That is why it is estimated that AI and deep learning in medical imaging will create a brand new market of more than a billion dollars by 2023.

This work serves as an intersection of these two worlds: deep neural networks and medical imaging. In this post, we will tackle the problem of medical image segmentation, focused on magnetic resonance images. It is one of the most popular tasks because it has the most well-structured datasets that someone can get access to. Since collecting medical data online is not as straightforward as it may sound, a collection of links to start your journey is provided at the end of the article.

This article presents some preliminary results of an open-source library under development, called MedicalZoo, that can be found here.

The need for 3D medical image segmentation

3D volumetric image segmentation in medical images is mandatory for diagnosis, monitoring, and treatment planning. In this post, we will only use magnetic resonance images (MRI). Manual practices require anatomical knowledge, and they are expensive and time-consuming. Plus, they can be inaccurate due to the human factor. Automated volume segmentation, on the other hand, can save physicians time and provide an accurate, reproducible solution for further analysis.

We will start by describing the fundamentals of MR imaging, because it is crucial to understand your input data in order to train a deep architecture. Then, we provide the reader with an overview of 3D-UNet, which can be used efficiently for this task.

Medical images and MRI

Medical imaging seeks to reveal internal structures hidden by the skin and bones, as well as to diagnose and treat diseases. Medical magnetic resonance (MR) imaging uses the signal from the nuclei of hydrogen atoms for image generation. When hydrogen nuclei are exposed to an external magnetic field, denoted as B0, their magnetic moments, or spins, align with the direction of the field like compass needles.

All of the constant magnetization is rotated into another plane by an additional radio-frequency pulse that is strong enough and applied long enough to tip the magnetization. Immediately after excitation, the magnetization rotates in the other plane. The rotating magnetization gives rise to the MR signal in the receiver coil. However, the MR signal rapidly fades due to two independent processes that reduce the magnetization and thus cause a return to the stable state present before excitation. These two processes produce the so-called T1 and T2 magnetic resonance images. T1 relaxation is related to the nuclei releasing their excess energy to their surroundings, while T2 relaxation refers to the individual magnetization vectors beginning to cancel each other out. The two phenomena are completely independent. As a consequence, different intensities represent different tissues, as illustrated below:

Image taken from this book.

3D medical image representation

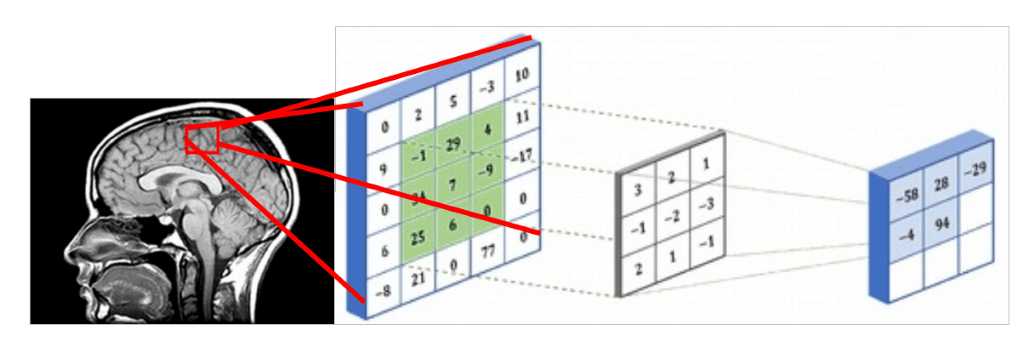

Since medical images represent 3D structure, one way to deal with them is to take slices of the 3D volume and perform regular 2D sliding convolutions, as illustrated in the figure below. Let's suppose that the purple rectangle is a 5×5 image patch that can be represented by a matrix containing the intensity values. The voxel intensities are convolved with a 3×3 convolution kernel, as shown in the figure below. In the same pattern, the kernel is slid across the whole 2D grid (medical image slice), and each time we perform cross-correlation. The result of convolving a 5×5 patch is stored in a 3×3 matrix (no padding, for illustration purposes) and is propagated to the next layer of the network.
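To make the 5×5 patch and 3×3 kernel example concrete, here is a minimal NumPy sketch of a sliding cross-correlation without padding. The patch values and the averaging kernel are made up for illustration; in a network the kernel weights would be learned.

```python
import numpy as np


def cross_correlate2d(patch, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation."""
    ph, pw = patch.shape
    kh, kw = kernel.shape
    out = np.zeros((ph - kh + 1, pw - kw + 1), dtype=float)
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Multiply the kernel with the window under it and sum the result.
            out[i, j] = np.sum(patch[i:i + kh, j:j + kw] * kernel)
    return out


# Hypothetical 5x5 patch of intensity values and a 3x3 averaging kernel.
patch = np.arange(25, dtype=float).reshape(5, 5)
kernel = np.ones((3, 3)) / 9.0

result = cross_correlate2d(patch, kernel)
print(result.shape)  # (3, 3)
```

Without padding, a 5×5 input and a 3×3 kernel leave 5 - 3 + 1 = 3 valid positions per axis, which is why the output is a 3×3 matrix.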

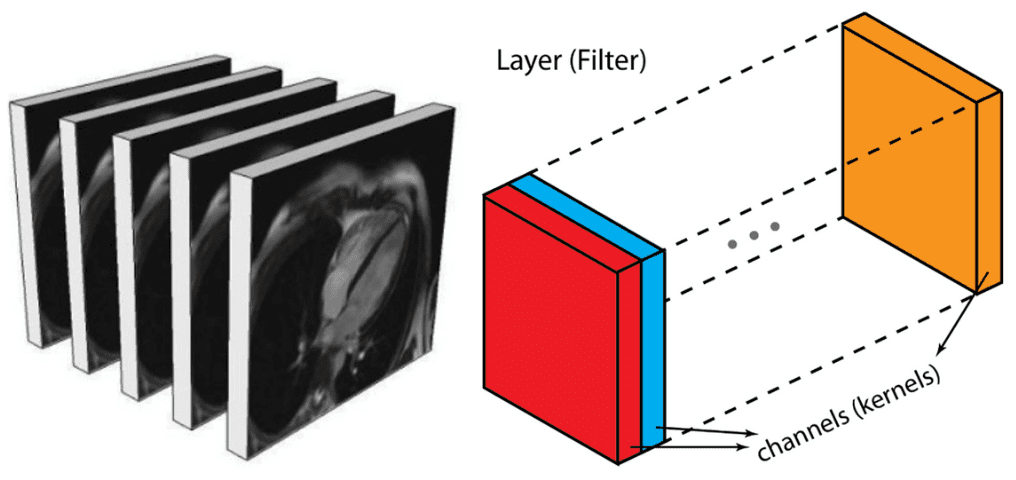

Alternatively, you can represent them similarly to the output of an intermediate layer. In deep architectures, we usually have multiple feature maps, which together form a 3D tensor. If there is reason to believe that there are patterns along the additional dimension, it is better to perform 3D sliding convolutions. This is the case in medical images. Similar to 2D convolutions, which encode spatial relationships of objects in a 2D domain, 3D convolutions can describe the spatial relationships of objects in 3D space. Since a 2D representation is sub-optimal for medical images, we will opt to use 3D convolutional networks in this post.

Medical image slices can be seen as multiple feature maps of an intermediate layer, with the difference that they have a strong spatial relationship.
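As a rough illustration of why the third dimension matters, the sketch below slides a 3×3×3 kernel over a small volume. The volume and the kernel are hypothetical: the intensity grows by one from slice to slice, and the kernel measures the difference between the slice above and the slice below, a pattern that a purely per-slice 2D kernel could not see.

```python
import numpy as np


def cross_correlate3d(volume, kernel):
    """Valid (no padding, stride 1) 3D cross-correlation."""
    vd, vh, vw = volume.shape
    kd, kh, kw = kernel.shape
    out = np.zeros((vd - kd + 1, vh - kh + 1, vw - kw + 1), dtype=float)
    for z in range(out.shape[0]):
        for y in range(out.shape[1]):
            for x in range(out.shape[2]):
                window = volume[z:z + kd, y:y + kh, x:x + kw]
                out[z, y, x] = np.sum(window * kernel)
    return out


# Hypothetical volume of 4 slices (5x5 each); every voxel in slice z has value z.
volume = np.arange(4, dtype=float)[:, None, None] * np.ones((4, 5, 5))

# Hypothetical kernel: next slice minus previous slice at the centre position.
kernel = np.zeros((3, 3, 3))
kernel[0, 1, 1] = -1.0
kernel[2, 1, 1] = 1.0

response = cross_correlate3d(volume, kernel)
print(response.shape)  # (2, 3, 3)
```

Every entry of `response` equals 2 here, because the intensity increases by one per slice and the kernel spans two slices.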

Model: 3D-Unet

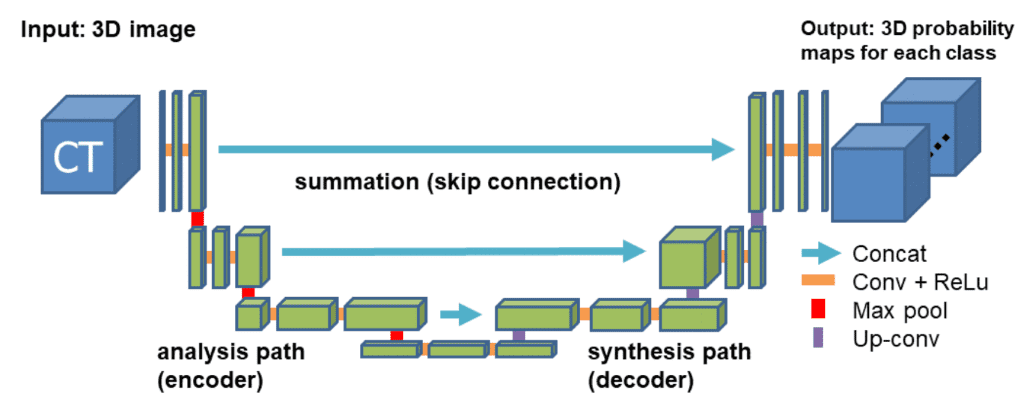

For our example, we will use the well-accepted 3D U-shaped network. The latter (code) extends the idea of the symmetrical U-shaped 2D Unet network, which yields impressive results in RGB-related tasks such as semantic segmentation. The model has an encoder (contracting) path and a decoder (synthesis) path, each with four resolution steps. In the encoder path, each layer contains two 3×3×3 convolutions, each followed by a rectified linear unit (ReLU), and then a 2×2×2 max pooling with strides of two in each dimension. In the decoder path, each layer consists of a 2×2×2 transpose convolution with strides of two in each dimension, followed by two 3×3×3 convolutions, each followed by a ReLU. Shortcut skip connections from layers of equal resolution in the analysis path provide the essential high-resolution features to the synthesis path. In the last layer, a 1×1×1 convolution reduces the number of output channels to the number of labels. Bottlenecks are avoided by doubling the number of channels already before max pooling. 3D batch normalization is introduced before each ReLU. Each batch is normalized during training with its mean and standard deviation, and global statistics are updated using these values. This is followed by a layer that learns scale and bias explicitly. The figure below illustrates the network architecture.
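To get a feeling for the encoder path described above, the following plain-Python sketch traces the number of channels and the spatial size at each of the four resolution steps. It rests on assumptions that the text does not state: the 3×3×3 convolutions are padded so they keep the spatial size, and the network starts from a hypothetical base width of 32 channels. The function name is ours and is not part of MedicalZoo.

```python
def trace_encoder_shapes(input_shape, base_channels=32, steps=4):
    """Return (channels, spatial_shape) after each encoder resolution step.

    Assumptions (not stated in the text): padded, size-preserving 3x3x3
    convolutions and a hypothetical base width of 32 channels.
    """
    spatial = tuple(input_shape)
    channels = base_channels
    trace = []
    for step in range(steps):
        if step > 0:
            # 2x2x2 max pooling with stride 2 halves every spatial dimension.
            if any(size % 2 for size in spatial):
                raise ValueError(f"shape {spatial} is not divisible by 2")
            spatial = tuple(size // 2 for size in spatial)
        # Channels are doubled within the step, i.e. already before pooling.
        channels *= 2
        trace.append((channels, spatial))
    return trace


for channels, spatial in trace_encoder_shapes((128, 128, 64)):
    print(channels, spatial)
```

Under these assumptions, four resolution steps mean three pooling operations, so every input dimension should be divisible by 8. A 128×128×64 sub-volume satisfies this and ends up as 16×16×8 at the lowest resolution.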

Network architecture taken from the original 3D Unet paper

Loss function: Dice Loss

Due to the inherent class imbalance, cross-entropy cannot always provide good solutions for this task. Specifically, cross-entropy loss examines each pixel individually, comparing the class predictions (depth-wise pixel vector) to our one-hot encoded target vector. Because the cross-entropy loss evaluates the class predictions for each pixel vector individually and then averages over all pixels, we are essentially asserting equal learning for each pixel in the image. This can be a problem if your classes have unbalanced representation in the image, as the most prevalent class can dominate training.

The four classes that we will try to distinguish in brain MRI have different frequencies in an image (e.g. air has many more instances than the other tissues). That is why the Dice loss is adopted. It is based on the Dice coefficient, which is essentially a measure of overlap between two samples. This measure ranges from 0 to 1, where a Dice coefficient of 1 denotes perfect and complete overlap. Dice loss was originally developed for binary classification, but it can be generalized to work with multiple classes. Feel free to use our multi-class implementation of Dice loss.
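As an illustration of the idea (and not the MedicalZoo implementation), here is a minimal NumPy sketch of a multi-class Dice loss. It assumes the predictions come as per-class probabilities of shape (C, D, H, W) and the labels as an integer class map of shape (D, H, W). The function names and the smoothing term `eps` are our own choices.

```python
import numpy as np


def dice_coefficient(pred, target, eps=1e-6):
    """Dice overlap between two same-shaped arrays with values in [0, 1]."""
    intersection = np.sum(pred * target)
    return (2.0 * intersection + eps) / (np.sum(pred) + np.sum(target) + eps)


def multiclass_dice_loss(probs, labels, eps=1e-6):
    """Return 1 minus the mean per-class Dice coefficient."""
    num_classes = probs.shape[0]
    # One binary mask per class, so every class contributes equally.
    one_hot = np.stack([(labels == c).astype(float) for c in range(num_classes)])
    scores = [dice_coefficient(probs[c], one_hot[c], eps) for c in range(num_classes)]
    return 1.0 - float(np.mean(scores))


# Hypothetical 4-class label map on a tiny 4x4x4 volume.
labels = np.arange(64).reshape(4, 4, 4) % 4
perfect = np.stack([(labels == c).astype(float) for c in range(4)])
wrong = np.stack([((labels + 1) % 4 == c).astype(float) for c in range(4)])

print(multiclass_dice_loss(perfect, labels))  # close to 0
print(multiclass_dice_loss(wrong, labels))    # close to 1
```

Because the score is averaged per class rather than per voxel, a dominant class such as air cannot outweigh the smaller tissue classes the way it can with an averaged cross-entropy.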

Medical imaging data

Deep architectures require a large number of training samples before they can produce a useful, generalized representation, and labeled training data are typically both expensive and difficult to produce. That is why we see new techniques every day that use generative learning to produce more and more medical imaging data. Furthermore, the training data must be representative of the data the network will meet in the future. If the training samples are drawn from a data distribution that is different from the one the network would meet in the real world, then its generalization performance will be lower than expected.

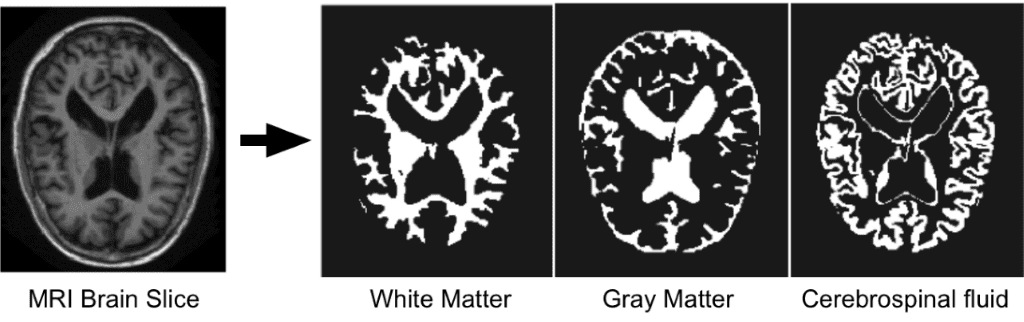

Since we are focusing on automatic segmentation of brain MRI, it is important to briefly describe the basic structures of the brain that DNNs try to distinguish: a) white matter (WM), b) grey matter (GM), and c) cerebrospinal fluid (CSF). The following figure illustrates the segmented tissues in a brain MRI slice.

Borrowed from the iSeg-2017 medical data MICCAI challenge

Accurate segmentation of infant brain MRI images into white matter (WM), grey matter (GM), and cerebrospinal fluid (CSF) in this critical period is of fundamental importance in studying both normal and abnormal early brain development. The first year of life is the most dynamic phase of postnatal human brain development, with rapid tissue growth and the development of a wide range of cognitive and motor functions. This early period is critical in many neurodevelopmental and neuropsychiatric disorders, such as schizophrenia and autism. More and more attention has been paid to this critical period.

This dataset aims to promote automatic segmentation algorithms on 6-month infant brain MRI. The challenge was carried out in conjunction with MICCAI 2017, with a total of 21 international teams. The dataset contains 10 images densely annotated by experts and 13 images for testing. Test labels are not provided, and you can only see your score after uploading the results to the official website. For each subject, there is a T1-weighted and a T2-weighted image.

The first subject will be used for testing. The original MR volumes are of size 256x192x144. For 3D-Unet, the sampled sub-volumes that were used are of size 128x128x64. The generated training dataset consisted of 500 sub-volumes. For the validation set, 10 random samples from one subject were used.
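The sub-volume sampling described above can be sketched roughly as follows. This is not the MedicalZoo data loader: the helper name is hypothetical and the arrays are zero-filled placeholders standing in for one subject's T1 image, T2 image, and label map.

```python
import numpy as np


def random_crop_slices(volume_shape, crop_shape, rng):
    """Pick a random crop location and return it as a tuple of slices."""
    starts = [int(rng.integers(0, full - crop + 1))
              for full, crop in zip(volume_shape, crop_shape)]
    return tuple(slice(start, start + crop)
                 for start, crop in zip(starts, crop_shape))


rng = np.random.default_rng(0)

# Placeholder arrays standing in for one subject (T1, T2 and the label map).
t1 = np.zeros((256, 192, 144), dtype=np.float32)
t2 = np.zeros((256, 192, 144), dtype=np.float32)
labels = np.zeros((256, 192, 144), dtype=np.uint8)

crop = random_crop_slices(t1.shape, (128, 128, 64), rng)
# The same slices are applied to both modalities and to the labels,
# so the sub-volumes stay spatially aligned.
inputs = np.stack([t1[crop], t2[crop]])  # shape: (2, 128, 128, 64)
target = labels[crop]                    # shape: (128, 128, 64)
print(inputs.shape, target.shape)
```

Stacking T1 and T2 gives a two-channel input, and calling the helper repeatedly yields as many random sub-volumes per subject as needed.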

WHY:

Our goal is to implement an open-source medical image segmentation library of state-of-the-art 3D deep neural networks in PyTorch, along with data loaders for the most common medical datasets. The first stable release of our repository is expected to be published soon.

We strongly believe in open and reproducible deep learning research. So that our results can be reproduced, the code and materials of this work are available in this repository. This project started as an MSc thesis and is currently under further development.

Putting it all together

Implementation details

We used the PyTorch framework, which is considered the most widely accepted deep learning research tool. Stochastic gradient descent with a single batch size, a learning rate of 1e-3, and a weight decay of 1e-8 was used for all experiments. We provide tests in our repository so that you can easily reproduce our results and use the code, models, and data loaders.

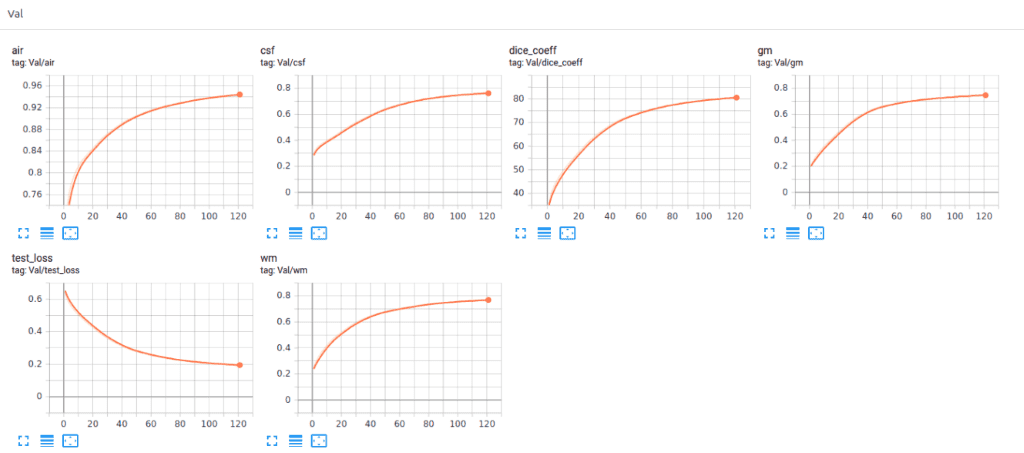

Recently we added Tensorboard visualization with PyTorch. This amazing feature keeps your sanity in place and lets you track the training process of your model. Below you can see an example of tracking the training stats, the Dice coefficient, and the loss, as well as the per-class score, to understand the model behavior.

Code

Let's put all the described modules together to set up an experiment in a short script (for illustration purposes) with MedicalZoo.

```python
import argparse
import os

import lib.medloaders as medical_loaders
import lib.medzoo as medzoo
import lib.train as train
import lib.utils as utils
from lib.losses3D import DiceLoss


def main():
    args = get_arguments()
    utils.make_dirs(args.save)

    # Build the training and validation generators for the selected dataset.
    training_generator, val_generator, full_volume, affine = medical_loaders.generate_datasets(
        args, path='.././datasets')
    model, optimizer = medzoo.create_model(args)
    criterion = DiceLoss(classes=args.classes)

    if args.cuda:
        model = model.cuda()
        print("Model transferred in GPU.....")

    trainer = train.Trainer(args, model, criterion, optimizer,
                            train_data_loader=training_generator,
                            valid_data_loader=val_generator, lr_scheduler=None)
    print("START TRAINING...")
    trainer.training()


def get_arguments():
    parser = argparse.ArgumentParser()
    parser.add_argument('--batchSz', type=int, default=4)
    parser.add_argument('--dataset_name', type=str, default="iseg2017")
    parser.add_argument('--dim', nargs="+", type=int, default=(64, 64, 64))
    parser.add_argument('--nEpochs', type=int, default=200)
    parser.add_argument('--classes', type=int, default=4)
    parser.add_argument('--samples_train', type=int, default=1024)
    parser.add_argument('--samples_val', type=int, default=128)
    parser.add_argument('--inChannels', type=int, default=2)
    parser.add_argument('--inModalities', type=int, default=2)
    parser.add_argument('--threshold', default=0.1, type=float)
    parser.add_argument('--terminal_show_freq', default=50)
    parser.add_argument('--augmentation', action='store_true', default=False)
    parser.add_argument('--normalization', default='full_volume_mean', type=str,
                        help='Tensor normalization option',
                        choices=('max_min', 'full_volume_mean', 'brats', 'max', 'mean'))
    parser.add_argument('--split', default=0.8, type=float,
                        help='Select percent of training data (default: 0.8)')
    parser.add_argument('--lr', default=1e-2, type=float,
                        help='learning rate (default: 1e-2)')
    # Note: with default=True this flag is always on, so a GPU is expected.
    parser.add_argument('--cuda', action='store_true', default=True)
    parser.add_argument('--loadData', default=True)
    parser.add_argument('--resume', default='', type=str, metavar='PATH',
                        help='path to latest checkpoint (default: none)')
    parser.add_argument('--model', type=str, default='VNET',
                        choices=('VNET', 'VNET2', 'UNET3D', 'DENSENET1', 'DENSENET2',
                                 'DENSENET3', 'HYPERDENSENET'))
    parser.add_argument('--opt', type=str, default='sgd',
                        choices=('sgd', 'adam', 'rmsprop'))
    parser.add_argument('--log_dir', type=str, default='../runs/')

    args = parser.parse_args()

    # Checkpoints are saved under a folder named after the model, date and dataset.
    args.save = '../saved_models/' + args.model + '_checkpoints/' + args.model + '_{}_{}_'.format(
        utils.datestr(), args.dataset_name)
    return args


if __name__ == '__main__':
    main()
```

Experimental results

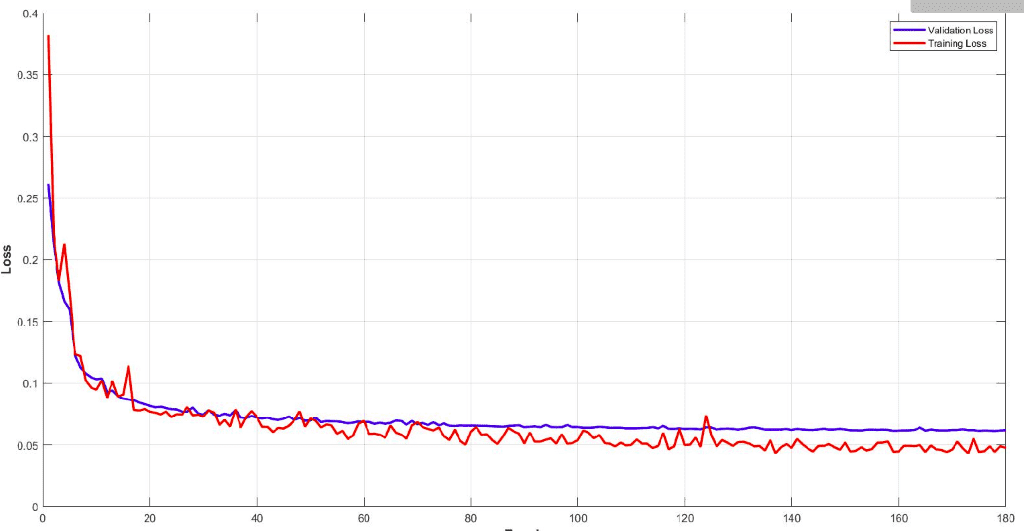

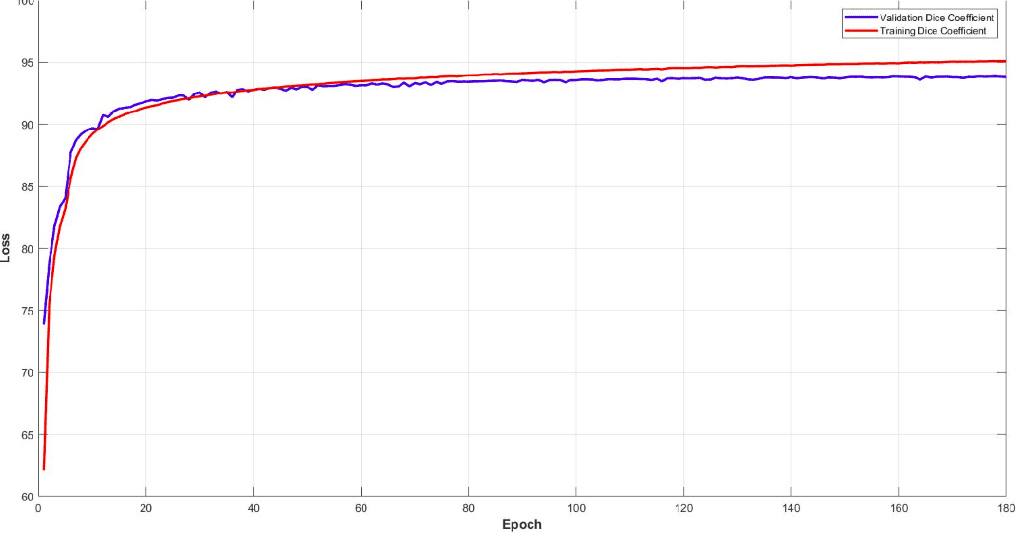

Below you can see the training and validation Dice loss curves of the model. It is important to monitor your model performance and tune the parameters to get such a smooth training curve. The curves make the efficiency of this model easy to see.

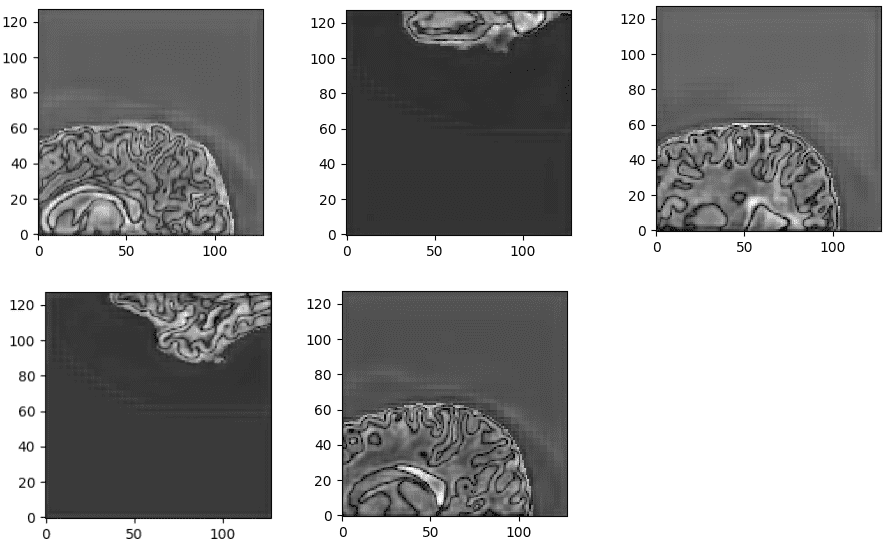

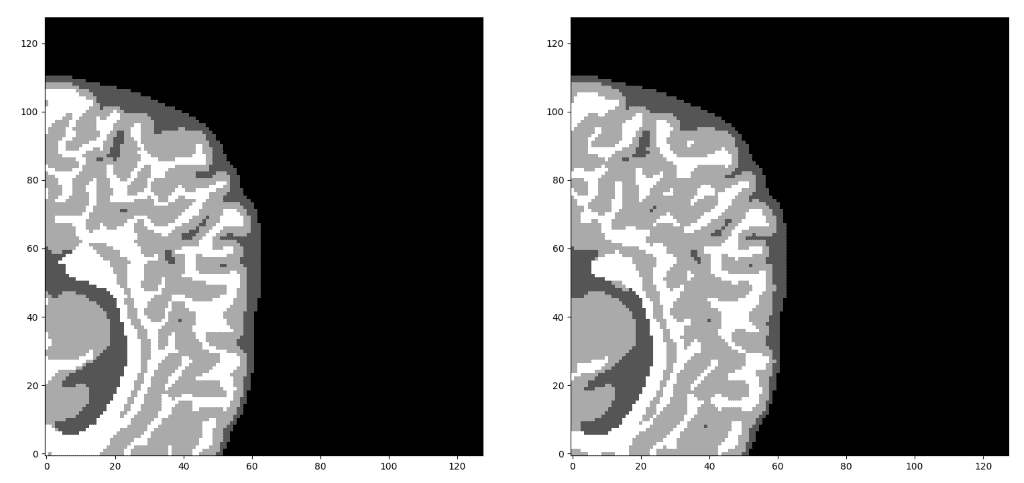

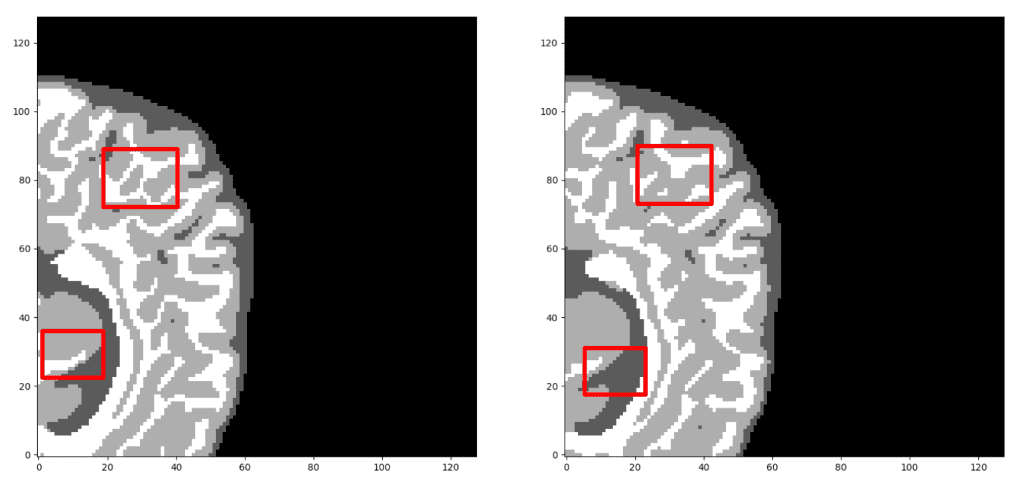

Surprisingly, the model reaches a Dice coefficient score of roughly 93% on the validation set of sub-volumes. Last but not least, let's see some visualized predictions from 3D-Unet on the validation set. We present only a representative slice here, although the prediction is a 3D volume. By taking multiple sub-volumes of the MRI, one can combine them to form a full 3D MRI segmentation. Note that sampling sub-volumes also serves as data augmentation.

Unnormalized last-layer pre-activations from the trained 3D-Unet. The network learns highly semantic, task-relevant content that corresponds to brain structures similar to the input.

Our prediction vs. ground truth. Which one do you think is the ground truth? Look closely before you decide! As a note, we only present the median axial slice here, but the prediction is a 3D volume. One can observe that the network predicts air voxels perfectly, while it has difficulty in distinguishing the tissue boundaries. But let's check again to find out the real one!

Now, I am sure you can distinguish the ground truth. If you are not sure, check the end of the article 🙂

The Tensorboard curves of the training stats, the Dice coefficient, and the loss, together with the per-class scores, help to understand the model behaviour.

It is clear that the different tissues have different accuracies, even from the start of training. For example, look at the air voxels in the validation set, which start from a high value because air is the most dominant class of an imbalanced dataset. On the other hand, grey matter starts from the lowest value, because it is the most difficult to distinguish and has the fewest training instances.

Conclusion

This post serves partly as an illustration of some of the features of the MedicalZoo PyTorch library that is being developed by our team. Deep learning models will provide society with immense medical image solutions.

In this article, we reviewed the basic concepts of medical imaging and MRI, as well as how they can be represented and used in a deep learning architecture. Then, we described an efficient, widely accepted 3D architecture (Unet) and the Dice loss function, which handles class imbalance. Finally, we combined all the features described above and used the library scripts to provide the preliminary results of our experimental analysis on brain MRI. The results demonstrate the efficiency of 3D architectures and the potential of deep learning in medical image analysis.

Finally, there are unlimited opportunities to improve current medical image solutions for a plethora of problems, so stay updated for more biomedical imaging posts with Python and our beloved PyTorch. To dive deeper into how AI is used in medicine, you can't go wrong with this online course by Coursera: AI for Medicine.

Stay tuned for more medical imaging AI Summer tutorials.

Appendix – Where to find medical imaging data

If you reached this point and understood the main points of this article, I am really happy. That is why I will reveal that the ground truth image is the left one 😊. Unfortunately, medical image data cannot be shared or used for commercial purposes. Please feel free to navigate to the following links in order to download the data. Feel free to share your own exciting machine learning solutions with us.

* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.